Sorry if I wasn't clear above (I have a knack for obfuscating my own point).

I am also an avid believer in measurements. I think they are chiefly responsible for taking my system to the level it's at now.* I just haven't done any at the listening position for my main speakers. I did, however, measure them quite a lot a while back, using the sine-sweep-derived windowed impulse method in Room EQ Wizard, in a much larger space than my living room (i.e. longer windows). I did a bit of driver correction based on these measurements.

I did take measurements at & around the listening position at home, but only for the subs, to do room correction.

I realize this overall approach doesn't really sync up with the Schroeder frequency since my room correction ends at the 75 Hz crossover frequency, but man, it all sounds pretty damn good.
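For context, here is a rough sketch of the commonly quoted Schroeder frequency estimate, f_s ≈ 2000·sqrt(RT60 / V). The room dimensions and RT60 below are made-up placeholder values, not measurements of the room described in this post.

```python
import math

def schroeder_frequency(rt60_s: float, volume_m3: float) -> float:
    """Approximate Schroeder frequency in Hz: f_s ~ 2000 * sqrt(RT60 / V)."""
    return 2000.0 * math.sqrt(rt60_s / volume_m3)

# Hypothetical living room: 5 m x 4 m x 2.5 m with an RT60 of 0.4 s (assumed values).
volume = 5.0 * 4.0 * 2.5
f_s = schroeder_frequency(0.4, volume)
print(f"Schroeder frequency: {f_s:.0f} Hz")  # about 179 Hz for these assumed values
```

With numbers in that range the transition region sits well above a 75 Hz crossover, which is the mismatch being acknowledged here.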

As to tilt, I agree it is subjective and variable. In my last good car system for example, I was running around -2 dB/oct or maybe more (can't remember). Of course there are other factors involved in the car.

Jim

* Measurements yes, but also those damn 48 dB mid/tweet crossover slopes! Instead of sounding as they look on paper, with all their mathematical severity, they have taken the sound in the fully opposite direction - much more transparent, natural and organic. Quite a pleasant surprise.

I chose arrays for a reason: to be able to play with the concept of phase at the listening position. The arrays work with the room; you won't find clear floor and/or ceiling reflections, and I absorbed all other reflections.

This was done to be able to clearly hear the venue (if present, real or synthesized) within the recording. The bass line even empowers the midrange and it is a clear difference to me.

In my humble opinion you can't just change the phase at the listening position, you've got to make sure the speaker works with the room. With more conventional speakers this would take a lot of damping/absorbing and/or diffusion.

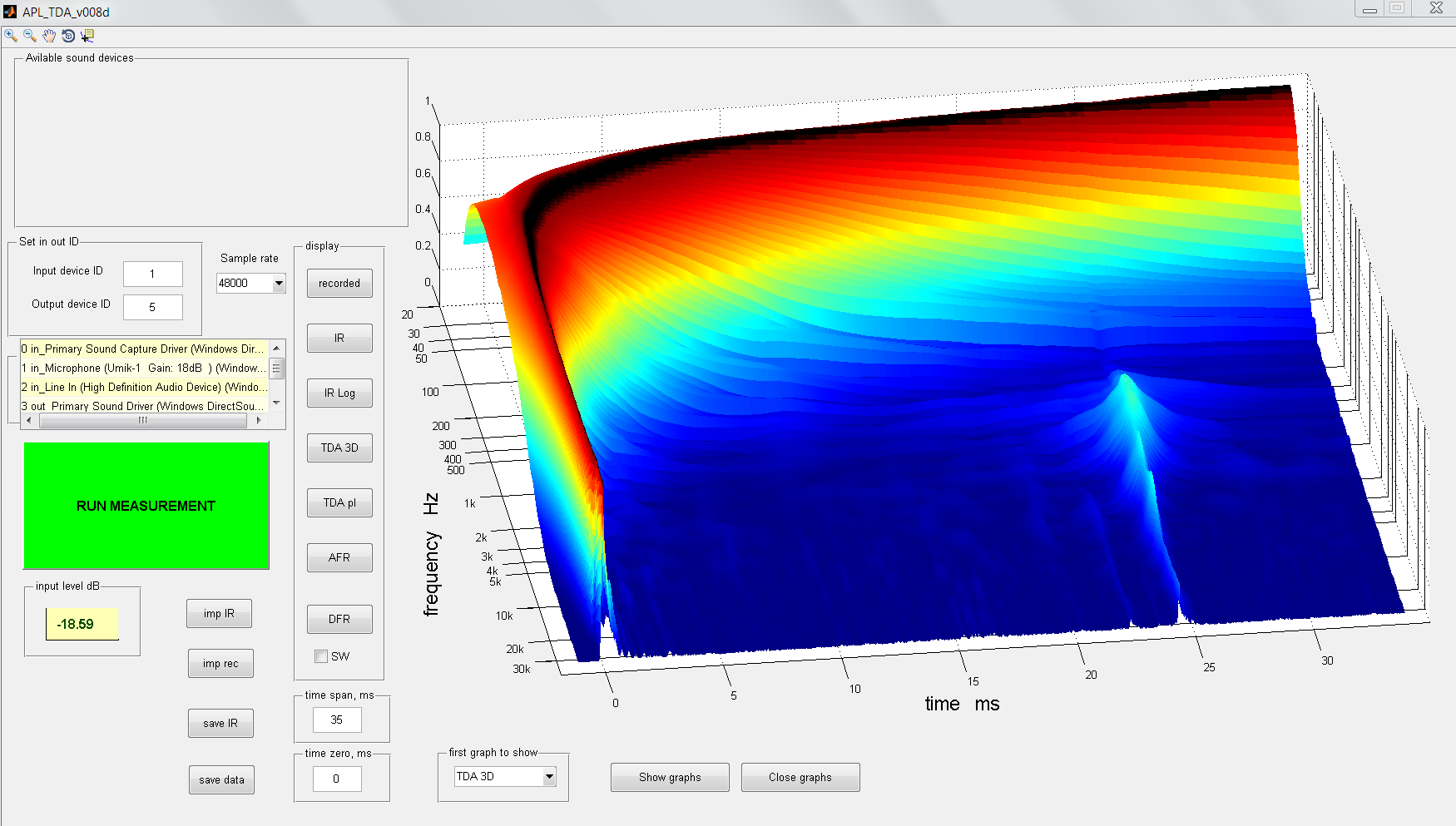

The end result as seen in APL_TDA is this:

Measured with a stereo pair, not at 1 m, but at the listening position.

I tried more than one phase correction; this one is a band-pass correction that I ended up enjoying the most. Flat phase down to DC sounded weird on a couple of songs. The sense of timing was best if I followed the band-pass behavior of the frequency response.

Compare it to a loopback of a clean impulse run through my DAC:

I did not get here in a day. It wasn't as easy as straightening out the phase and being done with it. It took me the best part of a year to find something that worked for me.

I have heard everything in between, meaning I did get to sample a non-corrected phase too. I prefer the corrected one. If it didn't sound any better I wouldn't keep it. Meaning I measure everything, but I do listen to determine what I prefer. I expected a large improvement in imaging. That didn't happen. Imaging can be fine and dandy without going to such lengths.

But it did change perception. More so below 1 kHz than above. Percussion never sounded better i.m.h.o.

Participating in Pano's Fixing the Stereo Phantom Center thread has taught me a lot about the missing pieces I still had in my mind about phase. The effects that are discussed in that thread are very real within a room with strongly reduced early reflections.

> You can't fix resonances; the only cure is to not trigger them.

Minimum-phase resonances coming from the loudspeaker itself (i.e. stable within the listening window), among others, can certainly be addressed using EQ.

Choosing the correct measurements and visualizations helps to decide what can/should be addressed and what should not.

> And the visual evidence was pretty unassailable - there was definitely something "wrong" before, and it sure looked "fixed" after.

Isn't that satisfying enough for you? 😉 😀

> Did I miss something here? Is there anything else I might try in order to hear this better?

I guess your two-way crossover is around 1.5 to 2 kHz, a range in which we are not too sensitive to phase shifts.

On the general subject of phase shift audibility, I am not convinced AB(X) tests are the best way to spot these kinds of differences.

We seem to be very tolerant of phase shifts, and our brain adapts quickly to them (as well as to other defects we are used to encountering) so that they become inaudible.

In my experience AB(X) tests with rapid and repetitive switches tend to make certain differences less and less audible.

Still, these kinds of differences can sometimes be heard with "fresh" ears as sounding more "real".

This reminds me of a talk by PSI's chief engineer Alain Roux where he compared phase shifts to the use of salt in a dish: the more you get used to it, the more everything tends to taste the same, masking subtle differences.

Here is the talk for those who can understand French: Importance de la maîtrise et de l'amortissement... par Alain Roux (PSI Audio) - Audio Days 2013

If you still have a taste of salt in the mouth I bet you cannot get the subtle differences of the next dish 😉

Must we be in an anechoic room or outside to measure the loudspeaker's minimum-phase resonances?

> Fwiw, I meant perfect responses in an anechoic-type environment...
>
> I tune my speakers outdoors as the closest substitute.
>
> I've found that magnitude, phase, impulse, and yes, I've even looked at square wave response... I've found they all move together: fix one, and the others get fixed. That's my real point here.
>
> But more importantly, sound improves. Hardest test of all, imo, is off-axis square wave response.

Do you have to do it again when you bring them indoors?

> We must be in an anechoic room or outside to measure the loudspeaker minimum-phase resonances?

You can generally get a precise enough representation of a loudspeaker's anechoic response in a home environment by using different measurement techniques, typically close-mic measurement for the lows and gating for the highs.
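As a rough illustration of why gating only covers the highs: the gate has to close before the first reflection arrives, and a gate of length T cannot resolve anything below roughly 1/T. The sketch below estimates the floor-bounce delay with a simple image-source calculation. The geometry is a made-up example and the speed of sound is an assumed round figure.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def floor_bounce_delay(src_height, mic_height, distance):
    """Delay in seconds between the direct sound and the floor reflection (image-source method)."""
    direct = math.hypot(distance, src_height - mic_height)
    bounced = math.hypot(distance, src_height + mic_height)
    return (bounced - direct) / SPEED_OF_SOUND

def lowest_valid_frequency(gate_s):
    """A gate of length T gives no usable data below roughly 1/T."""
    return 1.0 / gate_s

# Hypothetical setup: driver and mic both 1.0 m above the floor, 1.0 m apart.
gate = floor_bounce_delay(1.0, 1.0, 1.0)
f_low = lowest_valid_frequency(gate)
print(f"gate {gate * 1000:.2f} ms, usable down to roughly {f_low:.0f} Hz")
```

Below that limit the gated data is not trustworthy, which is where the close-mic measurement takes over.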

Well, if we can't reliably detect phase distortion with audible AB testing, then what's the point of all this?

I'm not sure we should need "fresh" (whatever that means) ears to sense this distortion. 🙂

In my own experience, I believe I can reliably hear the effects of phase distortion using a simple procedure as outlined above. However, it's not always clear which way (minimum-phase or linear-phase) is preferable. 🙂

The salt-masking analogy is amusing, but not really helpful.

There's way too much hand-waving on this topic.

Cheers,

Dave.

> Do you have to do it again when you bring them indoors?

Yes. But doing it "again" is for a different purpose.

Outside is to fix the speaker independent of room....so the speaker can work its best no matter what room it's in.

Inside is to mate the speaker to the room.

IMO, we really need two banks of EQs/filters.

The "outside" bank is part of the speaker itself and should never be changed.

The "inside" bank changes constantly: different rooms, different speaker placements, different listening positions, different furniture or acoustical treatments, etc.

But come to think of it, once the room has been dialed in, it too should become fixed. So make that three banks...

Because we then need a third level of EQ: EQ to taste, for adjusting recordings. House curves etc.

I guess the real point in all the above rambling is that I've found if I separate the components, speaker vs room vs taste, I get much better results, quicker, that work across a broader array of source material.

I find it impossible to juggle all three together.

> The salt-masking analogy is amusing, but not really helpful.

Sorry, I will try to come up with a car analogy next time 😉

> Well, if we can't reliably detect phase distortion with audible AB testing, then what's the point of all this?

The point for me is to say "wow, that sounds good"....on as much and varied source material as happens.

I'm not overly fond of AB testing anymore. Too much short term bias.

I like to live with a setup for a while, moving around in the listening room, listening from afar in other rooms, setting up outdoors, playing all kinds of material at all kinds of levels.....

.....and see whether I keep smiling at the sound, saying to myself "damn, that sounds good", or on the other hand feeling the sound still needs something.

> I'm not overly fond of AB testing anymore. Too much short term bias.
>
> I like to live with a setup for a while, moving around in the listening room, listening from afar in other rooms, setting up outdoors, playing all kinds of material at all kinds of levels.....

Agreed, this is typically what sound engineers do to avoid making corrections with a subjective ear after hours of work.

Still A/B tests are relevant for a ton of situations.

Regarding the "hand-waving" thing...

Phase linearization effects can be measured easily, and that is the most objective thing we can come up with.

Of course the audibility of said linearization is a different question, and tends to be much more subjective, taking into account the way our ear and brain interpret what we hear.

AB tests are a good way of testing things, and unsighted tests also take into account our expectation bias (although we tend to know what we are listening to on our systems, so should that bias be taken out of the equation?... 😉 ).

Still, many other biases and psychoacoustic effects exist that are not necessarily well addressed with an AB test, like the ability to adapt (especially true for room response), or short-term memory that tends to blend/blur things.

And even the simple fact that we are asked to concentrate and find differences between two signals, which is a form of expectation, also places the brain in a different situation compared to casual listening (for me at least 😀).

I was thinking that large sound waves can't be diffracted or refracted in a room, and that the right subwoofer placement was simply the place where the cone has the most "grip on the air".

So, I don't know why, but I've tested my subs in various locations with narrow-band sounds in order to place them only where the CSD exhibits no ringing and where the FR amplitude corresponds exactly to the excited band (20 dB above resonances).

It seems to me to be a starting point before anything else.

Too often we interpret different as better. It's interesting to make a change and find one prefers it, then after a prolonged period reverse the change and come to the same conclusion. I find a good test is whether the sound becomes tiring; it's as though, if the brain has to make too many psychoacoustic adjustments, it needs to rest.

I've been reading along as this thread progresses, and this is and has been a very interesting discussion. One side subject that hasn't been mentioned is those very pretty CSD graphs, as Wesayso has just demonstrated. I actually prefer a different type of graphing for that than this newer style. What is not discussed in respect to any CSD is the type and length of the window used to analyze the signal and what smoothing has been applied to the FR. As has been shown with manufacturers' technical data sheets, it is fairly easy to manipulate that data to give nice-looking results and mask real resonance issues; hiding a bad decay plot isn't all that hard. When actually developing a driver I use extremely close mic positions with the smallest-diameter calibration mic and use no smoothing while looking for any real narrow-band resonance issues.

So my real point is that if you don't know the test conditions and the specifics about windowing and any smoothing used for a CSD, the graph can become fairly useless if not downright misleading.

In my personal opinion, correcting for basic phase rotation is good, but whether we can hear those phase corrections in a specific speaker and room arrangement still seems somewhat questionable most of the time. Where you probably would notice these differences, if you had a way to make a real comparison (which is highly unlikely), is in comparing different speaker configurations, such as a line array vs a multi-way speaker enclosure, with both phase corrected and in the same room. I would think that the phase and room interactions of that wavefront would be something where the differences would be magnified. My money would be on Wesayso's approach having the most phase-correct sound field, even if I don't build line arrays. I take what he says about the audibility of these changes very seriously; I would tend to agree with his assessment that these phase changes and corrections are very subtle effects if not taken to extremes.

APL_TDA was developed as a timing tool. It shows the peak energy curve at the microphone and its timing. I would not call it a CSD graph specifically, though one can see the arrival of the frequencies at the microphone and the later-arriving reflections within the room.

As is, it sort of presents a map of the room seen from the perspective of sound arrival at the microphone. The APL_TDA graph I posted was measured with the demo version (screen grab) at a time when that software was introduced on DIYA by its creator.

The room correction or speaker correction? What can we do with dsp power now availabl

To arrive at the correction I use, I used REW and DRC-FIR, not the tool set belonging to that APL program. I ran that just for fun and giggles to see what my setup would do. I made a little dance of joy when I saw the graphs come up on my screen, to be honest. I had read the paper written by Mr. Raimond Skuruls and found some similarities to what I was trying to accomplish. That made me curious enough to try the demo software.

To get what I wanted to achieve I observed all early reflections I found in my measurements (and their influence on phase) and tried to source them back to places/walls in my room that were responsible for them. That's where absorbing damping panels were placed to bring the reflections down in level within the first 20 ms. All it took were 3 large absorbing panels. The nature of the array took care of the rest (like floor and ceiling reflections).
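Sourcing a reflection back to a wall mostly comes down to turning its arrival delay into extra path length. A minimal sketch of that conversion follows; the delay values are hypothetical examples, not taken from the measurements in this post, and the speed of sound is an assumed round figure.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def extra_path_m(delay_ms):
    """Extra distance travelled by a reflection arriving delay_ms after the direct sound."""
    return SPEED_OF_SOUND * delay_ms / 1000.0

# Hypothetical reflection arrival times read off an impulse response (ms after the direct sound).
for delay_ms in (2.0, 5.5, 20.0):
    print(f"{delay_ms:5.1f} ms later -> {extra_path_m(delay_ms):.2f} m longer path")
```

Any surface whose bounce path is that much longer than the direct path is a candidate; the 20 ms window mentioned above corresponds to roughly 6.9 m of extra travel.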

I asked a fellow forum participant, jim1961, to try the software in his studio-like treated room that was carefully created over years. The speakers he uses are a design by Troels Gravesen. That result can be found here...

It's painfully obvious his room is much better behaved than mine (which is still a functional living room), yet we can see his bass response is stretched out in time due to the crossovers that were used in his speakers (LR2 if I remember correctly). The single return seen at 25 ms is intentional. It's a Haas Kicker (a subject for a different discussion).

Without looking at the room/speaker collaboration I doubt we will get any definitive answers to the questions asked here. All I know is that I'm pleased with my solution. I'd advise anyone willing to try it to take a good look at what's happening right there in that listening spot. To do it right takes way more effort than adjusting phase from a measurement, in my opinion. But rePhase can be a very helpful tool to get you there.

You've got to admire that correct behavior of the speaker from Troels. At least I know I do. No DSP was used for that APL_TDA result in this post! This is what a good pair of speakers in a "Studio like" treated room looks like.

Regarding yesterday's phase distortion talk and the hand-waving 🙂

Try listening to this YouTube track with flat phase versus with the rotating phase caused by XO points. As pos says, it's not night and day in AB tests, but for me the flat phase sounds more real, like when I play guitar myself.

Link youtube: YouTube

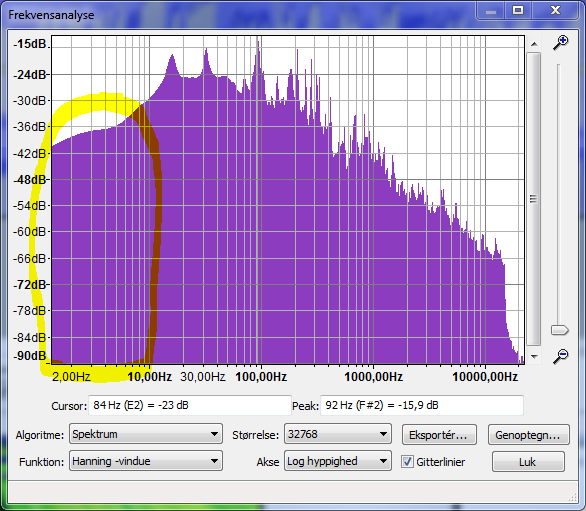

Before listening to that YouTube track, I suggest starting with a low volume setting, because the lows down to DC when the player hits the guitar deck are rough and relatively unfiltered, which is visible in this Audacity spectrum:

Regarding why we are not so sensitive to phase rotations above, say, 1500 Hz, as pos mentions: one comment could be that, since our hearing naturally fades out somewhere in the 12-30 kHz range, we would never be able to hear what a real, say, 10 kHz transient sounds like, because it takes a lot more bandwidth to replicate a perfect 10 kHz square wave shape. Besides that, we are used to audio gear that fades out in the 20 kHz area, be it the vinyl medium, digital anti-alias filters, or the natural roll-off of tweeters and recording microphones.

Another bit of fun for these discussions is to run a real track through rePhase-created filters, which shows that we increase dynamic range by adding phase rotation. Below is a 5 dB dynamic range Van Halen track, clean versus exposed to stop-bands, and exposed to stop-bands plus phase rotations.

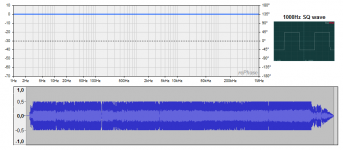

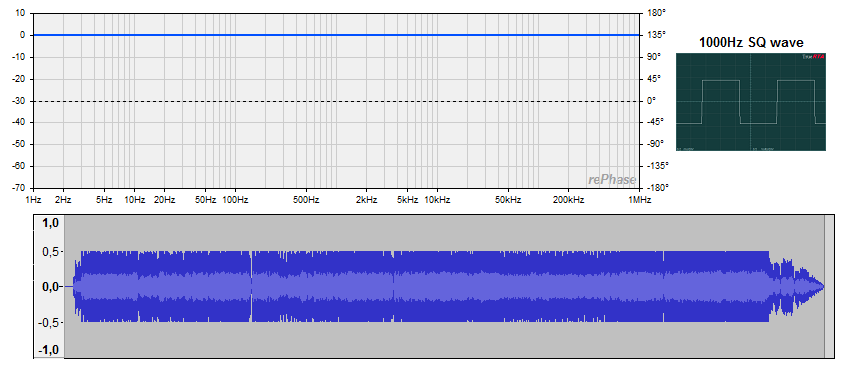

Original 5 dB dynamic range track run through a neutral rePhase convolution filter, just to verify that the rePhase filter is clean and the dynamic range stays exactly the same at 5 dB:

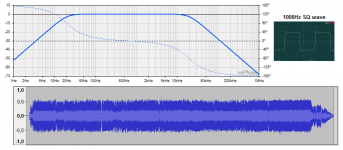

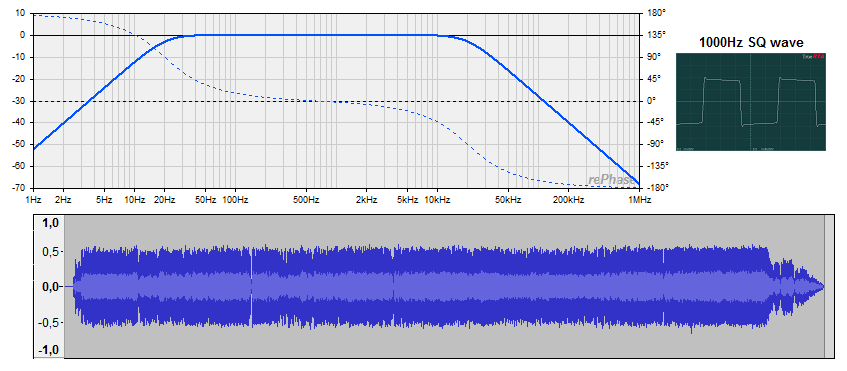

Original 5 dB dynamic range track run through a rePhase convolution filter that exposes the track to stop-bands at 20 Hz / 20 kHz, which increases the track's dynamic range from 5 to 7 dB:

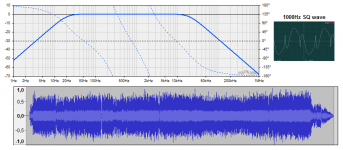

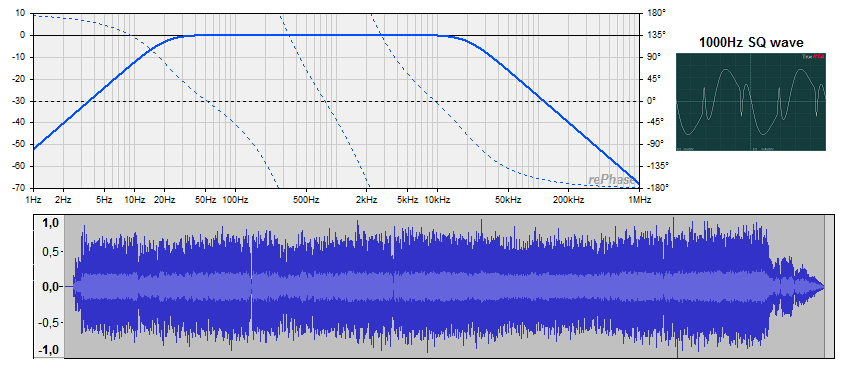

Original 5 dB dynamic range track run through a rePhase convolution filter that exposes the track to stop-bands at 20 Hz / 20 kHz plus 4th-order LR XO points at 300 Hz and 2 kHz, which increases the track's dynamic range from 5 to 11 dB:

The above observations were made using JRiver's "Disk Writer" tool to convolve the tracks and its "Analyze Audio" tool to get the DR numbers. Before processing I had to lower the track level in Audacity by 6 dB, otherwise the processing would show red overloads in the Audacity window. That in a way scares me and makes me think I want to start the first stage in my DSP engine by lowering the level by 6 dB. pos is welcome to comment on that point and on what is happening : ).

Can we conclude from the above that if the final mix engineer or the user monitors the track via a multi-way speaker we have 11 dB DR, and if we monitor via headphones or a nice system such as wesayso's line arrays we listen to 7 dB DR?
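For reference, the "phase rotation" of a 4th-order LR crossover can be checked on paper: the low-pass and high-pass outputs sum to flat magnitude while the phase turns through a full 360 degrees across the crossover region. The sketch below evaluates the textbook analog prototype (two cascaded 2nd-order Butterworth sections per branch). It is only an illustration under that assumption, not how rePhase builds its FIR filters, and `lr4_sum` is a hypothetical helper name.

```python
import cmath
import math

def lr4_sum(f_hz, fc_hz):
    """Complex response of an LR4 low-pass plus high-pass pair, summed, at frequency f_hz."""
    s = 1j * f_hz / fc_hz                 # normalized Laplace variable on the jw axis
    b2 = s * s + math.sqrt(2) * s + 1     # 2nd-order Butterworth denominator
    low = (1 / b2) ** 2                   # LR4 = two cascaded Butterworth sections
    high = (s * s / b2) ** 2
    return low + high

for f in (30, 150, 300, 600, 3000):
    h = lr4_sum(f, 300.0)
    mag_db = 20 * math.log10(abs(h))
    phase_deg = math.degrees(cmath.phase(h))  # wrapped to +/-180 degrees
    print(f"{f:>5} Hz  magnitude {mag_db:+.2f} dB  phase {phase_deg:+7.1f} deg")
```

The magnitude column stays at 0 dB at every frequency, so any change in the DR numbers with the XO points added comes from the phase alone.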

This change in peak values is a known effect, and would happen just the same with normal (non-inverted) all-pass filters, and even with IIR low/high-pass filters to an extent.

By changing phase you affect the timing and summation of peaks at different frequencies, and this can lead to summation exceeding the original signal (albeit on average it will be less than the original).
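A small sketch of that effect, using a made-up test signal rather than real music: ten odd harmonics with 1/k amplitudes, once with square-wave phases and once with every harmonic rotated by 90 degrees. A constant 90 degree rotation is a simplification chosen for clarity (a real crossover's rotation varies with frequency), but it shows the same thing: RMS is untouched while the peak moves, so a peak-to-RMS figure changes.

```python
import math

N = 4096                     # samples in one period of the test signal
HARMONICS = range(1, 20, 2)  # odd harmonics 1, 3, ..., 19 (square-wave-like)

def synth(phase_shift):
    """Sum odd harmonics with amplitude 1/k, each shifted by phase_shift radians."""
    return [
        sum(math.sin(2 * math.pi * k * n / N + phase_shift) / k for k in HARMONICS)
        for n in range(N)
    ]

def peak(x):
    return max(abs(v) for v in x)

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def crest_db(x):
    """Peak-to-RMS ratio in dB."""
    return 20 * math.log10(peak(x) / rms(x))

original = synth(0.0)         # phases as in a band-limited square wave
rotated = synth(math.pi / 2)  # same magnitude spectrum, all phases rotated by 90 degrees

print(f"RMS   original {rms(original):.4f}  rotated {rms(rotated):.4f}")
print(f"peak  original {peak(original):.4f}  rotated {peak(rotated):.4f}")
print(f"crest original {crest_db(original):.1f} dB  rotated {crest_db(rotated):.1f} dB")
```

In this example the rotated version peaks several dB higher at identical RMS, which is also why a processed track can clip when the original did not.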

Having a 6 dB margin in the DSP is good practice, and digital volume control at the source or inside the DSP (like with an openDRC) tends to solve that point anyway.

And DACs generally also present better distortion specs that way.

This practice also protects you from inter-sample clipping, which is a real thing (with or without DSP processing) that only a few DACs address (like the ESS Sabre with a 3 dB margin).
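Inter-sample clipping is easy to show with the classic worst-case example: a sine at a quarter of the sample rate whose samples all land 45 degrees away from the crests. The sketch below uses a finer time grid as a stand-in for the DAC's reconstruction. It is a constructed test case, not a model of any particular DAC.

```python
import math

def waveform(t):
    """Sine at fs/4 with a 45-degree offset; t is time in sample periods."""
    return math.sin(2 * math.pi * t / 4 + math.pi / 4)

# Peak as seen by a sample-value meter (integer sample instants only).
sample_peak = max(abs(waveform(n)) for n in range(64))

# Peak of the underlying continuous waveform, checked on a 16x finer time grid.
true_peak = max(abs(waveform(n / 16)) for n in range(64 * 16))

overshoot_db = 20 * math.log10(true_peak / sample_peak)
print(f"inter-sample overshoot: {overshoot_db:.2f} dB")  # about 3.01 dB
```

If those samples were normalized to digital full scale, the reconstructed waveform would sit about 3 dB above it, so a few dB of headroom covers this case.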

> Regarding why we are not so sensitive to phase rotations above, say, 1500 Hz, as pos mentions: one comment could be that, since our hearing naturally fades out somewhere in the 12-30 kHz range, we would never be able to hear what a real, say, 10 kHz transient sounds like, because it takes a lot more bandwidth to replicate a perfect 10 kHz square wave shape. Besides that, we are used to audio gear that fades out in the 20 kHz area, be it the vinyl medium, digital anti-alias filters, or the natural roll-off of tweeters and recording microphones.

Don't forget what happens when listening to a stereo setup with both our ears 😉.
