> @HenrikEnquist : Please would you consider extending the msg "Capture device supports rate adjust" with a conditioned "but rate adjust is not enabled"? Thanks!

Yeah, this message could be improved. And there should probably be a warning if no form of rate adjust is enabled.

Hey guys, on the latest v2, I set up camilladsp on win7. I needed 2 normal wasapi inputs / 10 output channels to ASIO. So I used loopbeaudio, the only thing I could find that would give me 10 virtual channels on one device in windows. Thing is, it works flawlessly until I try to pipe the other end of the virtual cable to a matrix router to send it to asio. Then it constantly gets buffer underruns, with time difference values of 0.02 (expected 0.01). Sample rate is 48k. This is a difference of 2x (I've seen Henrik updated the stream reset from 1.5 to 1.75). But this seems like a proper missed event, and the audio coming through is extremely choppy; there are at least 5 of these every second.

Tried every setting in camilladsp, buffer sizes, rate controls. Nothing helps.

Any idea what might be the case?

I'll have to do the convolution through reaper, and I'd rather use camilladsp cause the software is just so very lovely.
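To put the numbers above in context, here is a minimal sketch of the comparison being described. It assumes the 1.5 and 1.75 figures are multipliers applied to the expected time between buffers; that reading, and the function name `is_missed_event`, are illustrative assumptions and not taken from the CamillaDSP source.

```python
def is_missed_event(measured_s, expected_s, reset_factor=1.75):
    """Return True if the gap between two buffers is long enough to count as a missed event.

    reset_factor is a hypothetical multiplier on the expected gap (assumption).
    """
    return measured_s > reset_factor * expected_s


expected = 0.01  # seconds; this is 480 frames at 48 kHz
measured = 0.02  # seconds, as seen in the log

# A 2x gap exceeds both the older (1.5) and the newer (1.75) multiplier.
print(is_missed_event(measured, expected, reset_factor=1.5))
print(is_missed_event(measured, expected, reset_factor=1.75))
```

Under that assumption a gap of 0.02 s is over the limit either way, which is consistent with it looking like a genuinely missed event and not a borderline case.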

Routed camilladsp output through SAR (synchronous audio router) using reaper and it works just fine. So it's an issue in loopbeaudio or in vb matrix.

Good question. IMHO the code for rate adjust as is will not work optimally with just two periods per buffer as typically the reported alsa delay (i.e. buffer fill) is updated at period boundary by the driver and there would be two incoming chunks per one period. Henrik is considering adding an inner thread to the alsa thread (used e.g. already in CDSP wasapi adapter which uses only two periods per buffer as well). IIUC such a change would uncouple the chunk size from the buffer/period sizes (apart of other positive effects).
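A toy model of the effect described above, assuming the driver publishes its playback position only at period boundaries and that exactly two chunks arrive per period. The function name and the frame counts are made up for illustration; this is not CamillaDSP or ALSA code.

```python
def reported_fill_error(period_frames, chunk_frames, n_chunks):
    """For each chunk arrival, return by how many frames the reported buffer
    fill overstates the true fill when the position is only updated once
    per period (simplified model, assumption)."""
    errors = []
    for k in range(n_chunks):
        # Frames actually played when chunk k arrives.
        true_played = k * chunk_frames
        # Position last published by the driver, rounded down to a period boundary.
        reported_played = (true_played // period_frames) * period_frames
        # The stale part of the position shows up as extra reported delay.
        errors.append(true_played - reported_played)
    return errors


print(reported_fill_error(period_frames=1024, chunk_frames=512, n_chunks=6))
# prints [0, 512, 0, 512, 0, 512]
```

In this model every second reading is off by a whole chunk, so a rate-adjust loop fed with these readings would see a sawtooth, not the real buffer level.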

That would be sweet! I’ll write up an issue in the repo to track it if there isn’t one already.

Hi,

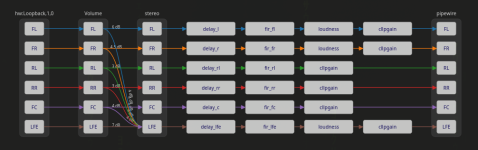

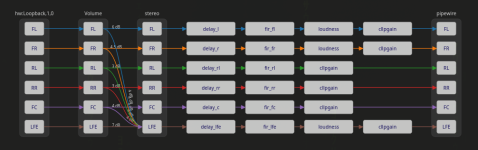

Posting my setup for 2.0 to 5.1, no upmixing. Used both as a home theater (MLP):

and for playing games or computer work (2nd listening position, at the keyboard, 90 degrees left of MLP). Center speaker not used in this situation and its signal, whenever present, is being centered between the new front speakers. In case you wonder why so different gains for the new front speakers, I'm sitting 3-4x closer to the left one:

Hope that makes sense. In case you wonder why positive gains, I lose 9 dB with REW FIR filters. HPF for satellites and LPF for subwoofer are handled by the FIR filters. Still work in progress, but you get the idea.

The labels are very useful. It can get pretty confusing with numbers.

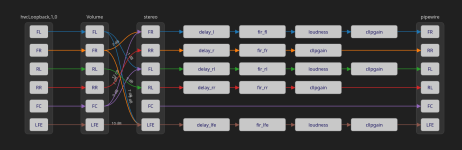

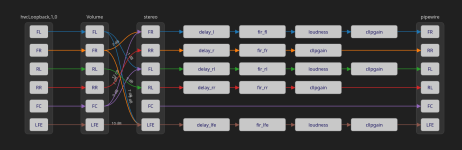

> I noticed a recent Linux patch for user space driven ALSA timers here.

IIUC it allows an application to drive the clock of the loopback. That could be interesting as an alternative way of implementing rate adjust in CamillaDSP, much more flexible than the way discussed in https://www.diyaudio.com/community/...ck-timer-source-to-playback-hw-device.408077/

@siraaris : Thanks for the interesting link! Such a facility can be used for devices without fixed clocks, such as the aloop mentioned in the patches. HDA Audio is clocked by the HDA controller, just like other HW soundcards.

On the other hand, very often there is a HW soundcard at the end of the chain supplied by master-clocked input stream, resulting in two clocks in the chain and some alignment must be performed anyway. Slaving the aloop to the incoming stream is useful, but still the output of aloop would have to be aligned to the HW soundcard.

But e.g. USB audio adaptive method was designed exactly for the purpose of aligning the USB stream to some incoming stream. Currently AFAIK all implementations of USB adaptive audio have fixed clock by the USB controller, making it basically identical to USB synchronous audio. But since the adaptive device on the other side has PLL tunable to the incoming rate, the USB audio host driver could be timed by the incoming stream.

> that could be interesting as an alternative way of implementing rate adjust in CamillaDSP, much more flexible than the way discussed in https://www.diyaudio.com/community/...ck-timer-source-to-playback-hw-device.408077/

IMHO the methods are quite similar - either the application calls the trigger method of the user-space timer, or the linked playback driver calls the aloop timer trigger method inside the kernel. So IIUC either the playback driver in its period_elapsed handler calls the timer trigger of aloop automatically, or CDSP in its playback period_elapsed handler (basically when woken up by the driver) must call the user-space timer trigger. Yes, the advantage of the user-space timer is that CDSP could kick-start the timer separately (and then e.g. leave it for the playback callback), while for the slaved aloop timer the playback master soundcard must start running. That's certainly more flexible but still the kickstarting would have to be handled somehow, in both cases. Also the period times would have to be equal if the user-space timer were to be triggered from the playback callback, IIUC.

For aloop things are simple, no timing jitter needs to be considered. But e.g. in USB adaptive the stream needs to be as fluent as possible, to make life of the device PLL easier, minimizing its output clock jitter. Also for USB gadget the USB audio specs say the async stream may vary only by one audio frame against the nominal frame count, e.g. https://github.com/torvalds/linux/b...ivers/usb/gadget/function/u_audio.c#L224-L237 . Making that limitation play well with a user-space timer which could be triggered with any jitter may be quite difficult.

But even for aloop the timer jitter may be important if used in a chain like CDSP which expects the data to flow in from the capture device in some smooth regular way.

Help me understand something real quick, please 🙂

What is the total expected system latency using alsa and camilladsp?

As alsa is a HAL, like asio, I'm guessing its capture buffer is actually the camilladsp chunksize. So basically the latency would be 3x the chunksize, give or take?

Not taking into account any convolution/eq

I know that the internal buffer is supposed to be 2x the chunksize in v2, and I'm a bit confused by the buffer level too.

I'm particularly interested in multi-speaker, multi-channel DIY projects, where the number of channels required can quickly exceed what is generally available in single audio interfaces, requiring use of multiple pro-audio devices, so I'm excited to see ALSA being able to use, for example, PTP.

Something along the lines :

DSP Host: Audio source (eg Roon) -> Loopback/P | Loopback/C <- CDSP -> AES67 ALSA Driver (16 Ch), which is configured to fan out to 2 x 8CH AES67 targets:

2 x Speaker Hosts: Configured 1 each for Left and Right Speakers, as AES67 8CH endpoints, Playback to Hifiberry DAC 8x.

A PTP clock, which AES67 needs, would tie in Loopback, so that everything is on the same clock (the AES67 open source server and driver already support PTP).

I don't know whether the Hifiberry DAC8x is suitable.

> So basically the latency would be 3x the chunksize, give or take?

Yes that's a pretty good guess.

> I know that the internal buffer is supposed to be 2x the chunksize in v2, and I'm a bit confused by the buffer level too.

The Alsa buffer size doesn't matter for latency. Buffer level is measured as how many frames that are left in the playback device buffer when the next chunk arrives. Increase it to make underruns less likely, or reduce it to reduce latency.
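As a rough worked example of the "3x the chunksize" rule of thumb above, here is a small sketch. The chunksize of 1024 frames at 48 kHz is an assumed example value, and the helper names are hypothetical; filter delay (convolution/EQ) is deliberately left out, as in the question.

```python
def chunk_ms(chunksize, samplerate):
    """Duration of one chunk in milliseconds."""
    return 1000.0 * chunksize / samplerate


def estimated_latency_ms(chunksize, samplerate, chunks_in_flight=3):
    """Rule-of-thumb latency: about three chunks, ignoring any filter delay."""
    return chunks_in_flight * chunk_ms(chunksize, samplerate)


# Assumed example values: chunksize 1024 frames, 48 kHz sample rate.
print(round(chunk_ms(1024, 48000), 2))              # prints 21.33
print(round(estimated_latency_ms(1024, 48000), 1))  # prints 64.0
```

Halving the chunksize halves this estimate, at the cost of less margin against underruns.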

> A PTP clock, which AES67 needs, would tie in Loopback, so that everything is on the same clock (the AES67 open source server and driver already support PTP).

OK, you could slave the loopback on the transmitter machine to the AES67 playback/transmitter alsa device (clocked by PTP internally), using some method (either the user-space timer or the existing loopback external timer feature). Or you can use the existing CDSP rate-adjust feature and fine-tune the loopback clock to the AES67 playback alsa device.

On the receiver device - incoming stream clocked at PTP pace, outgoing stream clocked by RPi hardware clock timing the 8ch I2S interface. Two master clocks, some form of async resampling required.

Or you can use a single 16ch I2S device directly, e.g. Radxa Pi S with USB gadget. Hooked to 8 pieces of 2USD PCM5102A DAC miniboards from aliexpress, the same chip as used in DAC8X.

Or any other 16ch USB DAC.

Many options. But all the AES67 ones need async resampling on the receiver if a fix-clocked audio interface to the DAC is used.
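To illustrate why two free-running master clocks need some form of alignment, here is a back-of-the-envelope sketch. The 50 ppm offset and the 1024-frame headroom are assumed example values, not measurements from any of the devices mentioned, and the function names are hypothetical.

```python
def drift_frames_per_second(samplerate, ppm_offset):
    """Frames gained or lost per second between two clocks differing by ppm_offset."""
    return samplerate * ppm_offset / 1_000_000


def seconds_until_xrun(headroom_frames, samplerate, ppm_offset):
    """Time until a buffer with the given headroom over- or underruns
    when nothing compensates the drift."""
    return headroom_frames / drift_frames_per_second(samplerate, ppm_offset)


# Assumed example: 48 kHz, clocks 50 ppm apart, 1024 frames of headroom.
print(drift_frames_per_second(48000, 50))             # about 2.4 frames per second
print(round(seconds_until_xrun(1024, 48000, 50), 1))  # prints 426.7
```

With these numbers an uncompensated buffer would run dry or overflow after roughly seven minutes, which is why either a tunable output clock or async resampling is needed on the receiver.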

I’ve set this up before with ASYNC resampling to USB devices and it worked but could never get a stable image - shifting of image occurred etc. Admittedly I was using alsaloop between the AVTP or AES67 ALSA endpoints and the USB DACs.

> I've set this up before with ASYNC resampling to USB devices and it worked but could never get a stable image - shifting of image occurred etc.

What receiver SW with PTP sync did you use? Alsaloop cannot receive RTP with PTP-synced timestamps.

I tried Gstreamer/AVTP as well as the AES67 approach synced by PTP in both cases. From memory there were some PTP sequencing issues that I didn’t resolve before attempting to bridge to USB audio.

In any case, the ideal outcome :

A central host running CDSP, with 2 (or more for my project) active speakers connected via AoIP.

On the CDSP and transport side, one can use something like a MADI interface connected to a hybrid MADI/AoIP DAC, a native Dante card (these are becoming available), GStreamer, ALSA AVTP with a multi aggregate, or an AES67 / Ravenna driver. There are also other options I guess.

But as you say, the final bridge between transport and devices is what’s needed to make this happen.

A solid working approach as described would enable more complex DIY DSP projects I think.

My own project involves 4 x 8ch-based speakers. At the moment I can handle these with a central 32ch DAC.

But ideally I would like to have each speaker's endpoint, amp and DAC in the active speakers connected to a central CDSP host based on open source and open standards.

IIUC gstreamer is a solid RTP+PTP renderer. It only lacks a proper async low-latency resampler for the fixed-clock output audio device, such as the one in CDSP. The async resampler in gstreamer just drops/interpolates samples. But very likely inaudible for listening purposes.

Yes but that introduces major complications. Some link between GST and CDSP is required. Alsa loopback is a clock master - GST would still need to resample. GST cannot control aloop rate like CDSP does. CDSP cannot receive RTP+PTP.

The shortest chain would require fine-tuning clock of the output audio device. Maybe commercial Dante renderers have some tunable FPGA-based PLL as master clock. Si5340A/B/C could do that too, via I2C. Linux USB-audio driver could be modified to offer a pitch alsa ctl similar to USB gadget playback pitch (basically identical functionality), hooking adaptive USB audio device with built-in PLL. Many options, but none readily available.

A software-only chain (i.e. fixed HW clock, proper async resampling) would require e.g.

- adding a good async resampler to GST, or

- adding a GST interface to CDSP, or

- making a CDSP element for GST, or

- using CDSP as a PA sink in a pipewire graph, which presumably supports RTP + PTP too - quoting PW 1.2:

> The RTP modules can now use direct clock timestamps to send and receive packets. This makes it possible to synchronize sender and receiver with a PTP clock, for example.

or

- using solely the latest PW, as it supports all the features required (RTP+PTP, proper async resampling to alsa sink) and countless other useful options such as clean switching between input sources.
