Isn't that a standard two times interpolating filter, that is, a combination of inserting zeros and brick-wall low-pass filtering? Instead of a windowed sinc, you can also use the Parks-McClellan program (based on the Remez exchange algorithm) to find a finite impulse response that approximates the ideal sinc.

No zero stuffing. Instead of sinc interpolation over a very large number of samples, the sinc is Hamming windowed.

All I can find on Parks-McClellan is FIR filter design. Is there any reference for the sinc?

Sorry Hayk, it is 100% zero stuffing with one zero.

No question about that, believe me.

Exactly what I did with 3 zeros.

Hans

P.S. With such a short filter you will get miserable results.

I think you are both right, actually.

The filter from post #301 could be one of the two polyphase decompositions of a two times interpolating 32-tap FIR filter (two times oversampling filter); the other polyphase decomposition is then a group of zeros, a one and another group of zeros.

Real interpolating filters are never made of an oversampler that inserts zeros and then a low-pass filter. In practice these two steps are intertwined because it is more computationally efficient.

If you first inserted zeros and then passed the result through a normal filter, half the filter coefficients would be multiplied by zero for each output sample. You can save half the multiplications by leaving out those coefficients. You then end up with a filter of half the length that uses two different coefficient sets for the odd and even output samples, so in fact it becomes a time-variant filter. For some reason those coefficient (sub)sets are known as polyphase decompositions.

In this specific case, one of the two polyphase decompositions has a single one and a bunch of zeros as its coefficients, so you can actually time multiplex between a delayed version of the input signal and the output signal of the filter of post #301. That way you need only a quarter of the multiplications.
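
To make the polyphase argument concrete, here is a minimal Python sketch (not taken from any of the posts). The function names and the 7-tap half-band-like coefficient set are invented for illustration only; it is not the filter of post #301. It checks that zero stuffing followed by the full filter gives the same output as switching between the two coefficient subsets.

```python
def upsample2_direct(x, h):
    """Insert one zero after every input sample, then run the full FIR filter."""
    stuffed = []
    for s in x:
        stuffed.extend([s, 0.0])
    y = []
    for n in range(len(stuffed)):
        acc = 0.0
        for k, c in enumerate(h):
            if n - k >= 0:
                acc += c * stuffed[n - k]  # half of these products are c * 0
        y.append(acc)
    return y


def upsample2_polyphase(x, h):
    """Same output, but each output sample uses only half of the coefficients."""
    phases = (h[0::2], h[1::2])  # even-indexed and odd-indexed coefficients
    y = []
    for n in range(2 * len(x)):
        sub = phases[n % 2]  # the coefficient set alternates per output sample
        m = n // 2           # newest input sample that contributes
        acc = 0.0
        for j, c in enumerate(sub):
            if m - j >= 0:
                acc += c * x[m - j]
        y.append(acc)
    return y


# Hypothetical half-band-like filter: every second tap is zero except the centre.
H_EXAMPLE = [-0.1, 0.0, 0.6, 1.0, 0.6, 0.0, -0.1]
X_EXAMPLE = [1.0, 2.0, -1.0, 0.5]

direct = upsample2_direct(X_EXAMPLE, H_EXAMPLE)
poly = upsample2_polyphase(X_EXAMPLE, H_EXAMPLE)
same = all(abs(a - b) < 1e-12 for a, b in zip(direct, poly))
```

In this example the odd-indexed subset `H_EXAMPLE[1::2]` is `[0.0, 1.0, 0.0]`, so that phase is just a delayed copy of the input and only the other phase needs real multiplications.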

Regarding Parks-McClellan, all design methods for linear-phase FIR low-pass filters lead to some finite-length approximation of a sinc. The Parks-McClellan approximation leads to an equiripple frequency response in the pass and the stop band.

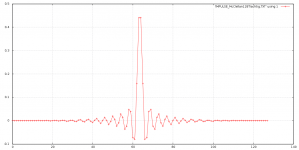

As an example, attached is the impulse response of a 128-tap Parks-McClellan filter with a pass band up to 0.22675 fs, a stop band from 0.27325 fs onwards, and an error in the pass band that is 12778 times as large as in the stop band. The picture with the discrete points is the correct plot; the other one has nonexistent line segments added for clarity.

Maybe they used a standard two times oversampling digital filter for the circuit of post #258 and sent the odd words to one DAC and the even words to the other DAC, with 1/88200 s delay between them. Or they just made a half cycle delay filter like the one from post #301 to feed one of the DACs, still with 1/88200 s shift between the DACs. That way they can have a good suppression of the first two images and still claim it is a non-oversampling DAC without steep analogue filter.

There are all kinds of tricks to reduce the number of multiplications when calculation resources are restricted, but in the end, if more samples come out than came in, it mostly boils down to a smart derivative of zero stuffing and straight convolution, tricks that may or may not result in a loss of sound quality.

So when enough processing power is available, why bother?

Now that processing power has been abundant for years, even music programs like JRiver can do the convolution with your own FIR filter coefficients.

With a 1000-point FIR and 4 times upsampling, 44e6 multiplications have to be done per second, which even a fast Intel processor can achieve.

An NVidia graphics card can do at least 100 times more than that.

There is a clear trend of DACs offering all sorts of different filters, and DSPs or FPGAs can do the job with ease.

So I think the challenge nowadays is not to have the smartest algorithm but to have the best-sounding filter that can beat the supposed clarity of NOS.

Hans

P.S. I have never read a test in Stereophile of a NOS DAC that beats all the oversamplers, so what to think of that?

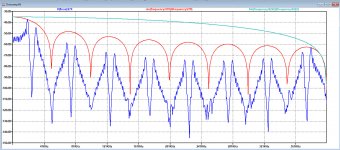

I simulated the Accuphase solution and added 8 time-shifted samples of a NOS DAC.

All the supersonic images are still there, but as can be seen, compared to the sinc of a single-sample NOS DAC in red, these images are suppressed quite a bit depending on their frequency, so it is effective to some extent.

But an analogue filter will still be needed to correct the loss of level at the high end of the audio range, caused by the sinc.

Hans

There are all kinds of tricks to reduce the number of multiplications when calculation resources are restricted, but in the end, if more samples come out than came in, it mostly boils down to a smart derivative of zero stuffing and straight convolution, tricks that may or may not result in a loss of sound quality.

It seems unlikely to me that adding a bunch of zeros is going to improve the sound quality.

With a 1000-point FIR and 4 times upsampling, 44e6 multiplications have to be done per second, which even a fast Intel processor can achieve.

Actually 176.4 million multiplications per second are needed when you don't use polyphase decompositions: 1000 times 4 times 44.1 kHz. Of those 176.4 million multiplications, 132.3 million will be multiplications with the stuffed zeros.
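
The arithmetic above can be written out as a small Python sketch. The function name is made up for illustration, and it assumes the tap count is a multiple of the oversampling factor so that exactly one in every `factor` taps meets a real sample.

```python
def multiplications_per_second(taps, factor, fs_in):
    """Count multiplications for zero stuffing followed by a full-length FIR."""
    fs_out = factor * fs_in
    direct = taps * fs_out     # every tap, for every output sample
    useful = direct // factor  # taps that meet a real, non-zero sample
    wasted = direct - useful   # taps that meet a stuffed zero
    return direct, useful, wasted


direct, useful, wasted = multiplications_per_second(1000, 4, 44100)
```

For 1000 taps, 4 times upsampling and 44.1 kHz this gives 176.4 million in total, of which 132.3 million hit stuffed zeros and 44.1 million remain, which is the count a polyphase implementation actually has to perform.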

Marcel,

Right, adding zeros doesn’t improve things, but it helps to understand the process, because that’s basically what it’s all about.

How to execute it is up to the designer, and as long as no harmful shortcuts are taken, that’s o.k.

The solution with two DACs, where only the odd samples in between are processed, is absolutely better than adding two time-shifted samples, but it doesn’t seem the best way to go, because the whole chain from beginning to end differs from that of the even channel, adding extra noise.

We agree on the calculations: 176M - 132M is exactly the 44 Mflops that I mentioned. 😀

Hans

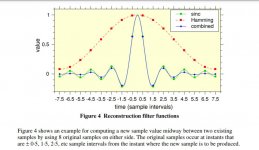

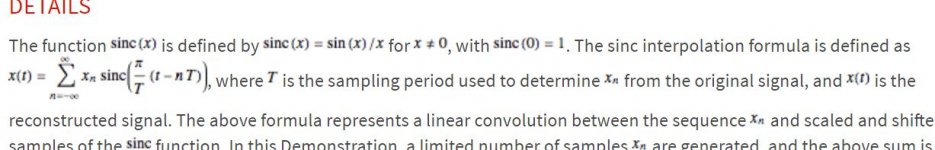

Proceeding with the sinc-Hamming interpolation. The formula for sinc is sinc(x) = sin(pi*x)/(pi*x).

As I am interpolating at the midpoint, x = n-0.5 and sin(pi*(n-0.5)) is equal to +1 for odd and -1 for even n, so the sinc reduces to ±1/(pi*(n-0.5)).

This gives +1/(pi*0.5) for the nearest samples, -1/(pi*1.5) for the next ones and +1/(pi*14.5) for the last ones. These factors need to be multiplied by the Hanning window following the formula.

This window, multiplied by the sinc, gives the following coefficients, from the nearest samples to the last ones.

+0.6302 -0.2038 +0.1170 -0.0771 +0.0545 -0.0395 +0.0288 -0.0209 +0.0149 -0.00104 +0.007 -0.0046 +0.0036 -0.0021 +0.0018
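
A minimal Python sketch of this calculation follows. The sinc part is exactly as described above. The window is an assumption: the window formula is not reproduced in the text, so a Hamming window of the form 0.54 + 0.46*cos(pi*x/half_width) with a half width of 15 samples is used here purely for illustration, and the resulting numbers need not match the list above digit for digit. All function names are hypothetical.

```python
import math


def midpoint_sinc(n):
    """Ideal sinc weight for the n-th nearest sample (n = 1, 2, ...) around a midpoint."""
    x = n - 0.5
    return math.sin(math.pi * x) / (math.pi * x)


def hamming(x, half_width):
    """Hamming window centred on the midpoint (ASSUMED definition and width)."""
    return 0.54 + 0.46 * math.cos(math.pi * x / half_width)


def midpoint_coefficients(taps_per_side=15, half_width=15.0):
    """Windowed-sinc weights from the nearest to the farthest sample on one side."""
    return [
        midpoint_sinc(n) * hamming(n - 0.5, half_width)
        for n in range(1, taps_per_side + 1)
    ]


coeffs = midpoint_coefficients()
```

The same 15 weights apply to the samples on both sides of the midpoint, so the interpolated value is the sum over 30 input samples. The unwindowed weights start at 2/pi (about 0.6366) and alternate in sign.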

Marcel,

I was just thinking.

When the in-between samples are produced by a convolution and then merged with the time-shifted original samples, the interpolated points will end up with pre- and post-ringing, which the original ones don't have.

That will create some funny artefacts.

Harmless?

Hans

For the time-shifted original samples, the ringing just passes through zero at all instants but one; the argument of the sine in sin(x)/x is an integer multiple of pi.
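
A quick numerical check of that statement, as a Python sketch with an invented helper name: evaluated at integer sample offsets, sin(pi*k)/(pi*k) is zero everywhere except at k = 0.

```python
import math


def sinc(x):
    """Normalised sinc, sin(pi*x)/(pi*x), with the limit value 1 at x = 0."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


# Weights seen by an original sample position: offsets -4 ... +4 in whole samples.
weights = [sinc(k) for k in range(-4, 5)]
```

Apart from floating-point rounding, all weights are zero except the centre one, so the original samples pass through unchanged and only the midpoint samples carry the ringing.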

One other thing that I came across.

The British DAC manufacturer Chord has two models called Hugo and Dave.

Hugo has a 26,368-point reconstruction FIR filter and Dave even 164,000 points.

To calculate this, they use 8 DSP cores in parallel!

But sound-wise, short minimum-phase filters seem to be increasingly preferred by Stereophile, like the ones used in the DACs from Ayre and dCS.

Ayre used to have a white paper on their site explaining their minimum-phase filter, but I can't find it anymore.

Hans

OT Warning:

Some people say Chord DAVE sounds more like real music than the $100,000 complete dCS Vivaldi setup. One guy who said that owns both of them. In addition, Chord has a 'prescaler' for use with DAVE and Hugo TT 2 that adds a million-tap preprocessing filter before the DAC. People say both DACs sound better with the prescaler. I don't know about Stereophile in every case, but I have it on good authority that sometimes they can't say what they know or they will lose the advertising revenue they need to survive. Only the German hi-fi magazines are willing to tell it like it is.

Anyway, longer filters do seem to be preferred by many.

For the time-shifted original samples, the ringing just passes through zero at all instants but one; the argument of the sine in sin(x)/x is an integer multiple of pi.

O.k. Thanks, that sounds smart, so I’m looking forward to seeing Hayk’s results from this setup.

Hans

Hayk,

Well done, but if you don't mind, could you make your .asc file available?

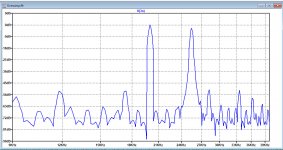

I'm a bit puzzled by your images.

To start with, when I simulate the spectrum of a 5 ms, 20 kHz signal sampled at 22 µs intervals, I get the image below, which is quite different from your image over the same frequency band.

Hans

I did not check whether the multiplexer B4 is correct. Note that with the round number of 22 µs, the sampling frequency is 45.45 kHz. The FFT measures dB rms, so 1 V peak is -3 dB. The 20 kHz component measures -4.2 dB, so it is attenuated by only 1.2 dB instead of 3.2 dB.
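
The two conversions used here can be checked with a short Python sketch. The helper names are made up, and the -4.2 dB reading is simply the value quoted above, not something the code derives.

```python
import math


def sample_rate_hz(period_s):
    """Sampling frequency for a given sampling interval."""
    return 1.0 / period_s


def peak_to_db_rms(v_peak):
    """Level of a sine wave with the given peak voltage, in dB relative to 1 V rms."""
    return 20.0 * math.log10(v_peak / math.sqrt(2.0))


fs = sample_rate_hz(22e-6)             # about 45.45 kHz
reference_db = peak_to_db_rms(1.0)     # about -3.01 dB for 1 V peak
attenuation_db = -4.2 - reference_db   # quoted FFT reading relative to the reference
```

With these numbers the 20 kHz component sits about 1.2 dB below the 1 V peak reference.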

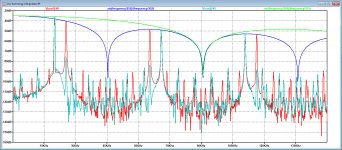

Here is what I get from it.

The first image shows the input signal and the DA output in the time domain.

The second image shows the spectrum of the signal without frequency doubling in red and with frequency doubling by midpoint insertion in magenta.

Fs is 45.45 kHz, so the first mirror is at 25.45 kHz and is effectively suppressed.

Also, the original level at 20 kHz, which went down by 2.9 dB at Fs = 45.45 kHz, now goes down by only 0.7 dB with frequency doubling; both figures follow from the two different sinc envelopes.
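
These droop figures can be reproduced with a small Python sketch. It assumes an ideal zero-order hold, whose magnitude response is |sin(pi*f/fs)/(pi*f/fs)|, and the function name is invented for illustration.

```python
import math


def zoh_droop_db(f, fs):
    """Magnitude of an ideal zero-order hold at frequency f, in dB, for update rate fs."""
    x = f / fs
    return 20.0 * math.log10(abs(math.sin(math.pi * x) / (math.pi * x)))


fs = 1.0 / 22e-6                               # about 45.45 kHz
first_image_hz = fs - 20e3                     # about 25.45 kHz
droop_single = zoh_droop_db(20e3, fs)          # about -3.0 dB at the original rate
droop_doubled = zoh_droop_db(20e3, 2.0 * fs)   # about -0.7 dB at the doubled rate
```

The difference of a bit more than 2 dB comes purely from holding each value for half as long, which stretches the sinc envelope to twice the frequency.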

Hans
