Thanks, I find the Desmos geometry tool lots of fun! 🙂 There is a new tone function available, but I didn't have time to put it into play here.

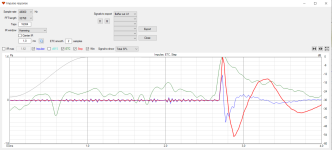

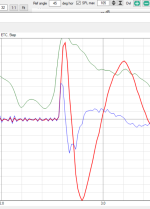

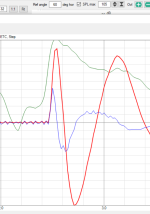

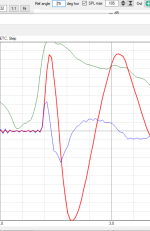

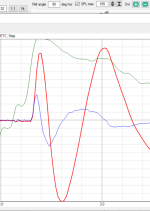

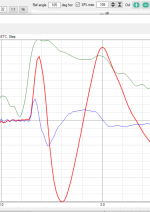

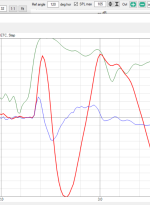

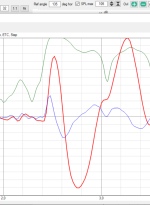

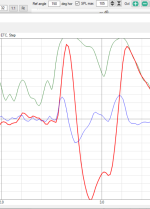

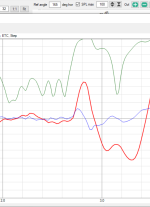

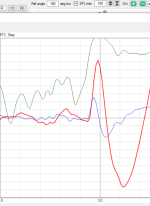

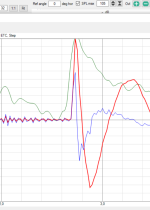

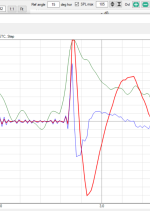

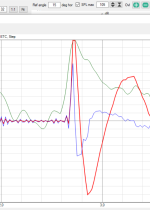

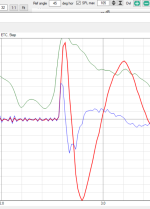

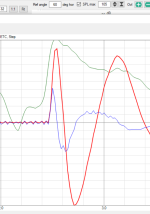

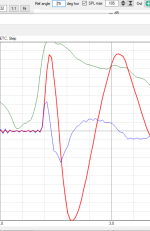

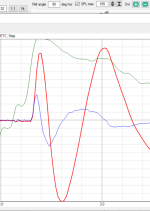

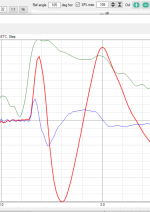

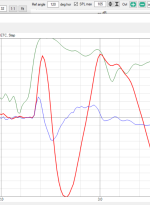

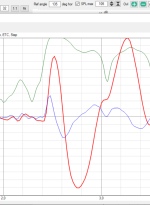

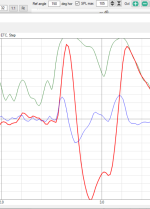

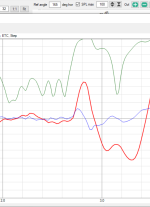

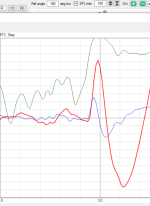

ABEC simulations indicate the acoustic center is at the throat, though, not at the mouth. This is evident if you look at the impulse responses of a measurement set where the throat was the rotation axis, as it is by default with Ath sims. If you move the rotation axis to the mouth, redo the sim, and inspect the impulse responses at various angles, the impulse arrives earlier as you look at angles >0, behaving similarly to the Desmos sim. This indicates the acoustic center is at the throat and not at the mouth, as we've been thinking it is.

Edit:

I just checked: the same thing happens with a real measurement I did earlier and posted about, with the rotation axis at roughly the mouth. The impulse advances as I start to increase the angle. This means the acoustic center comes closer to the mic with rotation, i.e. the acoustic center is behind the rotation axis, closer to the throat than the mouth. Click one of the impulses and cycle through to see the magic movie 🙂
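The geometry behind this observation can be sketched in a few lines of Python. This is only an illustration under assumed numbers: the acoustic center is treated as a single point, and the mic distance (2 m), the offset of the center behind the rotation axis (0.15 m), and the speed of sound (343 m/s) are all hypothetical values, not taken from the sims or measurements above.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value


def mic_distance(angle_deg, mic_dist, center_offset):
    """Distance from the mic to a point-like acoustic center.

    The rotation axis is at the origin and the mic sits on the +x axis
    at mic_dist. The acoustic center is center_offset metres behind the
    rotation axis and swings around it as the speaker is rotated.
    """
    theta = math.radians(angle_deg)
    x = -center_offset * math.cos(theta)
    y = -center_offset * math.sin(theta)
    return math.hypot(mic_dist - x, y)


def impulse_advance_ms(angle_deg, mic_dist=2.0, center_offset=0.15):
    """How much earlier (in ms) the impulse arrives compared to 0 degrees."""
    on_axis = mic_distance(0.0, mic_dist, center_offset)
    off_axis = mic_distance(angle_deg, mic_dist, center_offset)
    return (on_axis - off_axis) / SPEED_OF_SOUND * 1000.0


for angle in (0, 30, 60, 90):
    print(f"{angle:3d} deg: impulse arrives {impulse_advance_ms(angle):.3f} ms earlier")
```

With `center_offset=0.0` (acoustic center on the rotation axis) the advance is zero at every angle, which is the behaviour expected when the rotation axis coincides with the acoustic center.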

My experience: For the best set of measured polar responses, each driver's acoustic center should ideally be stacked on the axis of rotation.

Usually not doable, unless each driver has its own separate enclosure, or the whole speaker is crafted with said physical alignment.

The problem with aligning mouths, and then using constant time delays to counter the offsets in distance to their acoustic centers, is that the constant delays are only accurate at the angle used to set them.

Aligning "acoustic centers" can't be the best way. Imagine a very long horn with its throat aligned vertically on top of a woofer. For the forward sound, this can be fine but as you move off axis, the path to the woofer will get much shorter, so at 90° the path difference will be more than the length of the horn.

The better way is to align the mouth on top of the woofer and add a delay to the woofer, so both wavefronts emanate from this place at the same time for all off axis angles. (They are also the closest together this way.) Even if the correct delay is not exactly the same for all directions, it's still much better than the previous case.
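A rough Python sketch of the two layouts described above. It takes the post's view at face value: the horn is modelled as a point source sitting at its mouth that fires one horn travel time late, the woofer is a point source, and the listener is in the far field rotating in the horizontal plane (so the vertical spacing between the drivers drops out). The 0.3 m horn length and the 343 m/s speed of sound are hypothetical values, and the function names are made up for this illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value


def arrival_offset_ms(angle_deg, depth_m, delay_ms=0.0):
    """Far-field arrival time (ms) relative to a source on the rotation axis.

    depth_m is how far the emitting point sits in front of (+) the
    reference plane; delay_ms is any extra delay applied to that source.
    """
    theta = math.radians(angle_deg)
    return delay_ms - depth_m * math.cos(theta) / SPEED_OF_SOUND * 1000.0


def mismatch_ms(angle_deg, horn_length_m, mouth_aligned):
    """Horn arrival time minus woofer arrival time at a given angle."""
    travel_ms = horn_length_m / SPEED_OF_SOUND * 1000.0
    if mouth_aligned:
        # Mouth in the woofer plane, woofer delayed by the horn travel time.
        horn = arrival_offset_ms(angle_deg, 0.0, travel_ms)
        woofer = arrival_offset_ms(angle_deg, 0.0, travel_ms)
    else:
        # Throat in the woofer plane, so the mouth sits one horn length forward.
        horn = arrival_offset_ms(angle_deg, horn_length_m, travel_ms)
        woofer = arrival_offset_ms(angle_deg, 0.0, 0.0)
    return horn - woofer


for angle in (0, 30, 60, 90):
    a = mismatch_ms(angle, 0.3, mouth_aligned=False)
    b = mismatch_ms(angle, 0.3, mouth_aligned=True)
    print(f"{angle:3d} deg: throat-aligned {a:.3f} ms, mouth-aligned + delay {b:.3f} ms")
```

Under these assumptions the throat-aligned layout is matched only on axis and drifts by the full horn travel time at 90°, while the mouth-aligned layout with a delayed woofer stays matched. Whether a real horn behaves like a point source at its mouth is exactly what is being debated in this thread, so the sketch only shows what that model predicts.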

- It must be a data interpretation thing then.

Yes, since there is no way around this when an acoustic center is recessed into a physical structure, all we can do is optimize what is most important, the listening window: make sure the device size and position are such that, at least within the listening window, the distance between acoustic centers is close enough considering the wavelength at crossover.

"This indicates the acoustic center is at the throat and not at mouth as we've been thinking it is."

Of course it's not at the mouth, but it takes sound some time to get there. Until then, this sound inside the horn is not seen anywhere else, so it's irrelevant for the polars.

Well, it is irrelevant for single-device polars, but not when crossed over to another source whose acoustic center is not on the same axis: if the path length difference is long enough to cause a phase difference, the superposition falls short of the maximum 6 dB and makes a dip, it seems.

See post #15,986 showing the summed response of a simulated A460G2 waveguide and a simulated woofer with an LR4 crossover at 500 Hz and at 1000 Hz. One image is with the acoustic centers aligned, showing a nice smooth response off-axis; the other, with the frequency response dipping toward off-axis, is with the waveguide's acoustic center behind the woofer's and the mouth at the woofer's plane.

With the low 500 Hz crossover the effect is minor within the listening window, but with the 1000 Hz crossover it already shows up at the 15° line.
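Why the same path length difference hurts more at a higher crossover frequency can be sketched with a little arithmetic. The snippet below assumes two equal-level sources that would sum fully in phase at the crossover frequency (as an ideal LR4 pair does), with one of them travelling an extra path length; the relative sum is then |cos(φ/2)|, where φ is the resulting phase difference. The path differences used and the 343 m/s speed of sound are hypothetical, not values read from the simulations in post #15,986.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value


def summed_level_db(path_diff_m, freq_hz):
    """Level of two equal sources relative to their perfect in-phase sum.

    One source travels path_diff_m further than the other, which turns
    into a phase difference at freq_hz. 0 dB means full summation.
    """
    phase = 2.0 * math.pi * freq_hz * path_diff_m / SPEED_OF_SOUND
    magnitude = abs(math.cos(phase / 2.0))
    if magnitude == 0.0:
        return float("-inf")  # complete cancellation
    return 20.0 * math.log10(magnitude)


for path_diff in (0.02, 0.05, 0.10):
    low = summed_level_db(path_diff, 500.0)
    high = summed_level_db(path_diff, 1000.0)
    print(f"{path_diff * 100:4.0f} cm: {low:6.2f} dB at 500 Hz, {high:6.2f} dB at 1000 Hz")
```

For any given path difference below half a wavelength, the loss at 1000 Hz is larger than at 500 Hz, which is consistent with the effect showing up closer to the axis with the higher crossover.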

It's irrelevant for all the polars, alone or at the whole-system level. I'm not able to comment on those data, as I don't know all the details. Did you compensate for the added delay?

- Certainly all of this makes a very strong case for having the crossover frequency as low as possible.

Nope, I didn't compensate. A delay affects all directions, so it doesn't fix this: it's an acoustic issue of how sound sums with a phase difference (well, an actual path-length time delay) that changes with direction for one source while the other stays put. At least I currently believe the data is right and logical, but that doesn't mean it is, so it would be cool if you had time to play with this stuff.

You can take the data out of VACS: simulate any waveguide from Ath and you get data with the rotation axis at the throat. Then do the same device but with the Offset set to move the rotation axis to the mouth. You might be able to compare the data directly in VACS as well, looking at the arrival of the impulse at various angles. If you want to compare to a woofer, you could do a simple woofer box sim with the same VACS settings. You can export the data and use it in VituixCAD as well if you want to, but I think the same data would reveal the same things no matter which software.

"delay affects all directions,"

No, it doesn't, that's the point. Once you compensate for the travel time inside the horn, you can forget about it. What's left then are the path differences between the woofer and the mouth, as if it wasn't a horn at all, but a "diaphragm".

It seems it does. The problem is that the travel time is not constant relative to the other source across output directions, as illustrated in various ways: the observer is closer to one source or the other when they are at an angle. You can compensate the waveguide, but it still differs per direction in a system context if the acoustic centers of the sources don't align, showing the same issue.

"You can compensate the waveguide"

Please, do 🙂

I think the impulses from the waveguide still arrive at various times at various angles when its mouth is aligned with the baffle, so I can add a constant delay to the woofer, but the waveguide still behaves the same, so I'm not sure why I'd do that? I can do it once more, but my extra time costs one more well-optimized waveguide in your shop that everyone can buy, for 1.4", as per #15,980? 😀

PS. Your waveguides are really good; I just want a bit different directivity and I cannot seem to get it optimized as well as yours, so I'm hoping one becomes available at some point.

I'm not sure; the simulated and measured data seem to agree with each other, and within that context the results are as posted. I'm not sure what the wavefronts are, and what their contribution is. Also my data sample is small, so I'm hoping others have some data to compare with. mark100 already seems to agree, so there is one.

"The question of how a speaker should be designed and placed in a room in order to reproduce a perfect illusion of reality is as much a question about the speaker as it is a question on how to record."

Problem 1: Stereo is not able to reproduce an illusion of reality! The format with 2 speakers simply can't do that!

Listen to (good) Atmos music and then switch back to Stereo, and you immediately know what I'm speaking about. But even Atmos can't reproduce it; you need to go to Ambisonics to START talking about reproduction of a sound field.

We are just used to Stereo and sound engineers try to make it sound good and believable.

Digitally generated sounds and instruments connected directly to mixers do not apply here, as no one could know what they "really" sound like. These kinds of sounds can only be judged subjectively as sounding more or less nice, not by how "real" they sound...

Music is not always about documenting an acoustical event! To be honest - it's not about it most of the time!

In a classical recording you want to have better sound than at the best seat in the real room. Few non-musicians know the real sound of a drum set and nobody wants to listen to it; it's far from what we like on a recording. The amount of music on the market trying to honestly reproduce something (and not making it "bigger than life") is VERY small.

A musician has an idea. You want to express something, move your listeners to a spot. Transport a feeling. The art is not about reproducing instruments ... this gets boring quickly.

"[...] so not sure why I'd do that?"

It will allow you to position the waveguide and the woofer optimally with respect to each other for all the off-axis angles, not just the on-axis.

(I don't claim it will improve your existing crossover right away, only that it will allow such solution.)

"I don't know the answer, that's the reason I still make these different versions. It may be a preference thing to a degree, the problem is that there's no standard, and there have been very few such experiments done, if any. Reports of people building both would be certainly valuable. I haven't done such test myself yet, but I'd like to."

The speaker interacts with the room. You need different behaviour in a modern glass/concrete room than in a well-done linear listening room or a badly treated listening room.

- It seems that we, as listeners, prefer flat direct sound but not really flat total in-room power (which would lead to a slightly rising DI). But why? Is this independent of loudspeakers used in making the recordings, or is it given by the common radiation pattern used? Would it change if true constant-directivity speakers were used universally from the beginning? Questions that probably nobody knows the answers to. For PA this is different of course.

We are USED to low frequencies being stronger than high frequencies, because that is what the average room does together with the average speaker. So music is produced and mixed that way. A sound engineer's job is to "translate" the music for the customer, for their homes and their listening devices. That is why you check your mix on headphones, in your car, or on a kitchen radio.

With a true constant-directivity speaker you still have the damping behaviour of a common room, which is not linear at all and will give a non-constant response in the room.

So after all ... it's a big mess and musicians/producers/engineers try to deal with it the best they can 🤓
