Really thought I might have heard a difference on some of the percussive hits.

You might have. The main difference between the original sounds and the recordings of them is that the low midrange is kind of muddy in the copies. On one of bohrok2610's recordings there is a little less mud/blur in the low midrange sounds than in the other recording, which is probably due to a difference in the FPGA code. If your dac is very precise and clear in the low midrange the effect should be easy to hear, but many dacs/amps/speakers are kind of muddy/blurry down in that area, so the differences may be easily masked. The differences I am speaking of are evident in the first several seconds of each recording. The low midrange drum hits and bass guitar are sort of blurred/muddy. It's not a gross amount, but it's there.

If you want to look for technical differences, you might try checking phase differences between the files at low midrange frequencies; however, they may not be constant due to dither effects. Noise and/or correlated noise may be different too.
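A minimal sketch of that kind of phase check, assuming the two files have already been loaded as equal-length, time-aligned sample lists. The helper names `bin_at` and `phase_difference_deg` are made up for illustration, and the two sine waves only stand in for real recordings. With real material the result will wander from block to block because of noise and dither, so it is only a starting point.

```python
import cmath
import math


def bin_at(samples, freq_hz, rate_hz):
    """Single-frequency DFT coefficient of `samples` at freq_hz (complex)."""
    w = -2j * math.pi * freq_hz / rate_hz
    return sum(x * cmath.exp(w * n) for n, x in enumerate(samples))


def phase_difference_deg(ref, comp, freq_hz, rate_hz):
    """Phase of `comp` relative to `ref` at freq_hz, in degrees."""
    cross = bin_at(comp, freq_hz, rate_hz) * bin_at(ref, freq_hz, rate_hz).conjugate()
    return math.degrees(cmath.phase(cross))


# Synthetic stand-ins: a 250 Hz tone and a copy lagging by 10 degrees.
rate = 44100
count = 4410  # 0.1 s, i.e. exactly 25 cycles of 250 Hz
ref = [math.sin(2 * math.pi * 250 * i / rate) for i in range(count)]
comp = [math.sin(2 * math.pi * 250 * i / rate - math.radians(10)) for i in range(count)]

print(round(phase_difference_deg(ref, comp, 250, rate), 3))  # -10.0
```

Running the same function over successive short blocks and a handful of low midrange frequencies would show whether the phase offset is stable or drifts.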

Can't see a reason to compare two blurred and damaged recordings just because that's the best ADC I have. What am I trying to accomplish by that? It's obviously not what I want to compare, nor what I want to talk about as a difference of the dac sound, etc. It's a difference of the ADC sound, which is something else again.

Why not compare one of the recordings to the original? I think showing test results indicating you can reliably tell a difference would go a long way in this discussion.

Have you tried comparing recordings against the original?

Here is a quick attempt of the original vs dw2. Sighted, I really thought the original sounded much sweeter than the recording, particularly on piano notes and the harmonica. Unfortunately, my test results don't prove this out.

Code:

Trial 1, user: B actual: A 0/1

Trial 2, user: A actual: B 0/2

Trial 3, user: B actual: A 0/3

Trial 4, user: B actual: B 1/4

Trial 5, user: A actual: A 2/5

Trial 6, user: B actual: B 3/6

Trial 7, user: A actual: B 3/7

Trial 8, user: A actual: A 4/8

Trial 9, user: B actual: A 4/9

Trial 10, user: B actual: A 4/10

Trial 11, user: B actual: A 4/11

Trial 12, user: A actual: B 4/12

Trial 13, user: A actual: A 5/13

Trial 14, user: A actual: A 6/14

Trial 15, user: B actual: A 6/15

Trial 16, user: A actual: A 7/16

Probability of guessing: 77.3%

A=Reference,B=Comparison

Test type: ABX

-----------------

DeltaWave v2.0.13, 2024-05-15T12:20:38.2806928-04:00

Reference: 09 St. Louis Blues.wav[?] 2590578 samples 44100Hz 16bits, ch=0, MD5=00

Comparison: St. Louis Blues - 60s-dw2_+2dB_32_nodelay.wav[?] 2590578 samples 44100Hz 32bits, ch=0, MD5=00

LastResult: Ref=C:\Users\mdsim\Downloads\09 St. Louis Blues.wav:[?], Comp=C:\Users\mdsim\Downloads\Transfer\St. Louis Blues - 60s-dw2_+2dB_32_nodelay.wav:[?]

LastResult: FilterType=0, Bandwidth=0

LastResult: FilterType=0, Bandwidth=0

LastResult: Drift=0, Offset=-7181.273718707 Phase Inverted=False

LastResult: Freq=44100

Settings:

Gain:True, Remove DC:True

Non-linear Gain EQ:False Non-linear Phase EQ: False

EQ FFT Size:65536, EQ Frequency Cut: 0Hz - 0Hz, EQ Threshold: -500dB

Correct Non-linearity: False

Correct Drift:False, Precision:30, Subsample Align:True

Non-Linear drift Correction:False

Upsample:False, Window:Kaiser

Spectrum Window:Kaiser, Spectrum Size:32768

Spectrogram Window:Hann, Spectrogram Size:4096, Spectrogram Steps:2048

Filter Type:FIR, window:Kaiser, taps:262144, minimum phase=False

Dither:False bits=0

Trim Silence:True

Enable Simple Waveform Measurement: False

Signature: 769b6747a848df16ea00d6b45b6cc146

Would love to see some test results from others.

Michael
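For anyone wanting to check the "Probability of guessing" line in the log above, here is a small sketch. It assumes the figure is a one-sided binomial tail, i.e. the chance of getting at least that many right by coin-flip guessing. That assumption reproduces the 77.3% shown for 7/16. The function name is just for illustration.

```python
from math import comb


def guess_probability(correct, trials):
    """Chance of scoring at least `correct` out of `trials` by pure guessing."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials


print(f"{guess_probability(7, 16):.1%}")  # 77.3%
```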

Did you read my previously linked article from the Technical University Dresden, on how our expectations shape our hearing?

WE HEAR WHAT WE EXPECT TO HEAR

I just did. It shows some evidence that humans (and other mammals) use a form of differential encoding and therefore their brains show more of a response when they hear something unexpected than when they hear what they expected to hear. Basically, humans subconsciously compare the sound to what they expect to hear and mainly respond to the differences.

Why on Earth someone came up with the title "We hear what we expect to hear" for a PR summary of it is unclear to me. It appears to contradict the contents of the actual article.

I really thought the original sounded much sweeter than the recording, particularly on piano notes and the harmonica...

"Sweeter" is a bad thing to try to listen for. What kind of speakers do you have, can they make a big image? How about the stereo imaging between the speakers? Can you place virtual objects in the soundstage with your eyes closed?

Other than that, you need to find VERY specific things to listen for. Sweeter is not specific enough. For vocal harmonies and pianos, you need to listen to sound between the played notes. The depth and texture of the beat notes in harmonies for vocals and piano. For piano you also listen to the resonance of the sound board and the other unplayed strings. In both the vocal case and in the piano case you are listening for textures. Like looking at rocks in a riverbed. Are they pebbles, sand, jagged rocks? For aural textures it is rather analogous.

However if your system is not good enough most subtle differences will be lost/masked.

Moreover, you have to practice. Didn't you read what I quoted from Howie Hoyt? Start with big differences and learn to hear them blind. It takes training and practice. Then reduce the level of the differences and practice again.

For me when I got best at DBT I had to learn to memorize very weird little sound differences, not like any natural sound you ever heard in your life. DACs can produce alien-like noises and artifacts. You have to memorize them to hear them blind.

Anyway, let's hear about your system first. How much detail can you really hear from it? Maybe you need a Marcel dac? Maybe one with cleaned up clocking?

I gave it a try. Really thought I might have heard a difference on some of the percussive hits.

You probably did.

The results are initially hidden so you don't know how you are doing until you hit reveal results. Turns out I did great in the beginning and then it all fell apart.

Can you try again and this time do 5 trials, take a break, relax and come back later, do another 5 etc?

For example, at 7/10 you have a "SCORE" that we can consider to offer reasonable confidence, given the small sample size, that there was an audible difference.

Code:

Trial 1, user: A actual: A 1/1

Trial 2, user: B actual: B 2/2

Trial 3, user: A actual: A 3/3

Trial 4, user: B actual: B 4/4

Trial 5, user: B actual: B 5/5

Trial 6, user: B actual: A 5/6

Trial 7, user: B actual: A 5/7

Trial 8, user: A actual: A 6/8

Trial 9, user: A actual: B 6/9

Trial 10, user: A actual: A 7/10

Trial 11, user: A actual: B 7/11

Trial 12, user: B actual: A 7/12

Trial 13, user: B actual: B 8/13

Trial 14, user: A actual: B 8/14

Trial 15, user: B actual: A 8/15

Did your attention fade with the number of trials? I have observed a similar tendency in myself and others in ABX.

Limit the number of presentations per setting. Use multiple rounds of listening.

Let us take a statistical analysis of the chance of a "lucky coin" giving 5/5. Anyone?

It's 1/32, or ~3%, so we may state with .97 confidence that this sequence is not likely the result of chance.

So analysing the result in more depth suggests that you in fact reliably heard a difference in the first five presentations with 97% confidence (only 3 in 100 identical tests will return this result by chance) and that external factors (test fatigue, pretty girl walking by, etc.) defocused attention in later trials.

So I would suggest your test does in fact support that differences may be present, and that the test itself acts as a randomising factor with increasing numbers of trials.

So, I suggest you try 5-trial tests on 10 different days. If you get a better than 3/5 average you are above chance, so when your "score" falls below the point where multiple 5/5 results can get a 3/5 average in the sample set, you can stop.

This 5 x 10 test gives a good sample set and allows us, for example, to state with .94 confidence that if you got 31 right there was a real difference.

And we can reject the chance that there was a difference but it was obscured by a mix of poor statistics, small sample size and test stress if you get fewer than 31, with better than .9 confidence, meaning we have about balanced the risks of "false positives/negatives" and that such a chance is minimal.

In other words, maximise your "chance" of getting it right by using few trials.

For bonus points, after having taken the first 5 trials, which we count, keep going to 15 and see if the pattern of high correct identification in the first few trials followed by a fall-off continues, and also if you can learn to avoid the attention fade that causes you to go from 5/5 in the first few trials to 2/5 in the last trials.

I knew one individual who had literally taught himself to "beat ABX", a famous and prolific recordist of classical music. He was in a number of ABX tests where I scored completely random and he got a "perfect score" each time. That was in the 90's.

Maybe you can do the same and teach yourself to penetrate the fog of ABX. I tried but it's hard work.

Thor
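The figures quoted in the post above can be checked with a few lines. This is only a sketch assuming plain coin-flip guessing (p = 0.5 per trial) and a one-sided binomial tail. The function name is illustrative.

```python
from math import comb


def chance_of_at_least(correct, trials):
    """Probability of at least `correct` hits in `trials` fair coin flips."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials


# A "lucky coin" giving 5/5:
print(chance_of_at_least(5, 5))  # 0.03125, i.e. 1/32

# 31 or more right out of 5 x 10 = 50 presentations:
p_31 = chance_of_at_least(31, 50)
print(round(p_31, 4), round(1 - p_31, 2))

# The complete 15-trial run as posted (8/15):
print(chance_of_at_least(8, 15))  # 0.5
```

The 31/50 case comes out near 0.06, which corresponds to the roughly .94 confidence mentioned.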

For example, at 7/10 you have a "SCORE" that we can consider to offer reasonable confidence, given the small sample size, that there was an audible difference.

The commonly used p-value < 0.05 requires 8/10.

That reminds me of another problem with Foobar ABX, it incorrectly calculates probability of guessing. IIRC, if you get the questions ALL wrong then its says the probability of guessing is 100%. But guessing should not lead to getting all the questions wrong!
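To make the last two points concrete, here is a hedged sketch of the arithmetic. It assumes the displayed "probability of guessing" is a one-sided tail (chance of at least k right), which is my assumption about the tool rather than something confirmed here. The two-sided variant is a simple doubled-tail version that also flags unusually poor scores. All function names are illustrative.

```python
from math import comb


def p_at_least(k, n):
    """One-sided tail: chance of k or more right out of n by guessing."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n


def p_at_most(k, n):
    """Chance of k or fewer right out of n by guessing."""
    return sum(comb(n, i) for i in range(0, k + 1)) / 2 ** n


def p_two_sided(k, n):
    """Doubled smaller tail, capped at 1; small for very good OR very bad scores."""
    return min(1.0, 2 * min(p_at_least(k, n), p_at_most(k, n)))


def min_correct(n, alpha=0.05):
    """Smallest score whose one-sided tail is strictly below alpha."""
    return next(k for k in range(n + 1) if p_at_least(k, n) < alpha)


print(p_at_least(0, 10))   # 1.0 -> "100%" for an all-wrong run
print(p_two_sided(0, 10))  # 0.001953125
for k in (7, 8, 9):
    print(k, round(p_at_least(k, 10), 4))  # 0.1719, 0.0547, 0.0107
print(min_correct(10))     # 9
```

Under this particular exact one-sided test, 8/10 lands at about 0.055, just above 0.05, so whether 8 or 9 is "required" depends on how strictly the threshold is read.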

I just did. It shows some evidence that humans (and other mammals) use a form of differential encoding and therefore their brains show more of a response when they hear something unexpected than when they hear what they expected to hear. Basically, humans subconsciously compare the sound to what they expect to hear and mainly respond to the differences.

Is that what you read?

"For their study, the team used functional magnetic resonance imaging (fMRI) to measure brain responses of 19 participants while they were listening to sequences of sounds. The participants were instructed to find which of the sounds in the sequence deviated from the others.

Then, the participants’ expectations were manipulated so that they would expect the deviant sound in certain positions of the sequences.

The neuroscientists examined the responses elicited by the deviant sounds in the two principal nuclei of the subcortical pathway responsible for auditory processing: the inferior colliculus and the medial geniculate body.

Although participants recognised the deviant faster when it was placed on positions where they expected it, the subcortical nuclei encoded the sounds only when they were placed in unexpected positions."

In a more accessible way, when listeners expected to hear the "different sound" they PERCEIVED the different sound, but the neural pathways did not process the difference. In other words they heard what was expected. Their reaction was faster. Probably their discrimination was also improved.

Something that is missing is the counter test, where a "non-different" sound is played in a position where a "learned expectation" expects a "different" one, and we need to see if suddenly the difference is heard despite not being present.

Why on Earth someone came up with the title "We hear what we expect to hear" for a PR summary of it is unclear to me. It appears to contradict the contents of the actual article.

Not really. The authors' conclusion from the complete study:

Dr Alejandro Tabas, first author of the publication, states on the findings: "Our subjective beliefs on the physical world have a decisive role on how we perceive reality. Decades of research in neuroscience had already shown that the cerebral cortex, the part of the brain that is most developed in humans and apes, scans the sensory world by testing these beliefs against the actual sensory information. We have now shown that this process also dominates the most primitive and evolutionary conserved parts of the brain. All that we perceive might be deeply contaminated by our subjective beliefs on the physical world."

That is where the headline comes from.

Thor

The commonly used p-value < 0.05 requires 8/10.

However, let's not fool ourselves. One set of 10 trials by one person is not statistically significant. You could reasonably get that result by chance.

For the "law of large numbers" to be meaningful you probably need more like 100 trials. Are you seriously going to struggle through 100 trials to barely start to convince skeptics?

Also, there are some skeptical people who like to see Foobar ABX scores, but they want to see ALL of them. All the times you were practicing and failed they will hold against you as evidence you just got lucky one time then stopped.

The commonly used p-value < 0.05 requires 8/10.

The use of 0.05 requires at least 50 trials for a balanced risk of type 1 and type 2 statistical errors.

Applying it to smaller datasets causes a strong weighting towards "false negatives", that is a failure to reject the null hypothesis when it should be rejected.
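A rough sketch of why small runs lean towards false negatives. The listener's true hit rate `P_TRUE = 0.7` is purely a hypothetical number picked for illustration, not something measured in this thread, and the function names are made up as well.

```python
from math import comb


def tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


def critical_score(n, alpha=0.05):
    """Smallest score that guessing reaches with probability below alpha."""
    return next(k for k in range(n + 1) if tail(k, n, 0.5) < alpha)


def miss_rate(n, p_true, alpha=0.05):
    """Type 2 error: a listener with hit rate p_true fails to reach the critical score."""
    return 1 - tail(critical_score(n, alpha), n, p_true)


P_TRUE = 0.7  # hypothetical true per-trial hit rate (assumption)
for n in (10, 16, 50):
    k = critical_score(n)
    print(f"n={n:2d} need {k:2d}  type 1 = {tail(k, n, 0.5):.3f}  "
          f"type 2 = {miss_rate(n, P_TRUE):.2f}")
```

With these assumptions a 10-trial run misses a real 70% listener about 85% of the time while holding the false positive risk near 1%, whereas at 50 trials the miss rate drops well under 20%.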

See the following for more information on relevant details on the statistics:

How Conventional Statistical Analyses Can Prevent Finding Audible Differences in Listening Tests

Author: Leventhal, Les

Affiliation: University of Manitoba, Winnipeg, Manitoba, Canada

AES Convention: 79 (October 1985) Paper Number:2275

Publication Date: October 1, 1985

Type 1 and Type 2 Errors in the Statistical Analysis of Listening Tests

AES Volume 34 Issue 6 pp. 437-453; June 1986

(followed by two corrections published the same year in JAES, and comments by David Clark, Tom Nousaine, and Daniel Shanefield)

Statistically Significant Poor Performance in Listening Tests

JAES Volume 42 Issue 7/8 pp. 585-587;

Publication Date: July 1, 1994

Analyzing Listening Tests with the Directional Two-Tailed Test

Authors: Leventhal, Les; Huynh, Cam-Loi

JAES Volume 44 Issue 10 pp. 850-863; October 1996

Publication Date:October 1, 1996

Thor

However, let's not fool ourselves. One set of 10 trials by one person is not statistically significant. You could reasonably get that result by chance.

Thor suggested 10 trials.

Also, there are some skeptical people who like to see Foobar ABX scores, but they want to see ALL of them. All the times you were practicing and failed they will hold against you as evidence you just got lucky one time then stopped.

Yes, since it is quite easy to just pick the one time you got lucky, especially if only 10 trials are included.

However, let's not fool ourselves. One set of 10 trials by one person is not statistically significant. You could reasonably get that result by chance.

Correction. It is SIGNIFICANT but lacks statistical power.

As said, we can easily calculate the chance of this occurring at random, and it is pretty small.

For the "law of large numbers" to be meaningful you probably need more like 100 trials. Are you seriously going to struggle through 100 trials to barely start to convince skeptics?

Me? No. I did a lot of tests where subjects were paid (or were employees) and where we got sufficiently large n to have confidence, significance and power in a statistical sense.

Among the results were that OPA1652 is reliably preferred over OPA1642, and that generic (RVT) vs. Panasonic Os-Con vs. Elna Silmic shows reliable preferences for Os-Con and Silmic, with the more preferred part depending on precise position.

Also, there are some skeptical people who like to see Foobar ABX scores, but they want to see ALL of them. All the times you were practicing and failed they will hold against you as evidence you just got lucky one time then stopped.

Yes, there are some who do not seek knowledge but seek to assert the orthodoxy they adhere to.

Thor

Hmmm, I used other "audio file nulling" software tools before and found them rather useless.

I had the same experiences before DW came to light. It was actually created by its author out of frustration with DiffMaker etc., starting all over from scratch. It is really pro level software, strictly IME and IMHO.

Maybe DW is different from earlier attempts, but I find I do not trust the process, especially not ADC's that are non-deterministic and sample aligned.

There is just too much manipulation in the recording process

But I fully agree that sample-synced recording is required to obtain more realistic results in any diff test. One way to do this could be to add a "dummy" SPDIF output from a scaled down clock of the DAC and then use this to sync, for example, an ADI-2 Pro. I'm using this sync option often and it gives excellent results.

Thor suggested 10 trials.

NO. I suggested 5 presentations on 10 days, giving a total n of 50!

Thor

But I fully agree that sample-synced recording is required to obtain more realistic results in any diff test. One way to do this could be to add a "dummy" SPDIF output from a scaled down clock of the DAC and then use this to sync, for example, an ADI-2 Pro. I'm using this sync option often and it gives excellent results.

I only have the QuantAsylum analyser as ADC; I do not think it permits external sync.

I would suggest that if we record PCM, we use one of the latest generation of 1MSPS 20-bit multibit ADCs (768kHz SR), as any system which uses digital filters and conversion from DS to PCM will act as a pretty strong "leveler" at low levels.

There is a reason that a current generation AP-5XX uses an ADC with 20MHz Multibit DS Output Rate and 114dBc SDFR at 1MHz....

Thor

Yes, since it is quite easy to just pick the one time you got lucky, especially if only 10 trials are included.

And that is a reason I reject forced choice. If a mistake is going to be held against someone, then they should have the right to decide to take a chance or not. If there is a button that says, "no opinion" or something like that, then it might be okay. The statisticians might have to deal with it but it would be their problem, not the test taker's problem.

There seem to be seven people who have downloaded the files but only 2 results: one inconclusive and another which failed. I don't see the need to continue this exercise, so time to move on. Thanks to all participants!

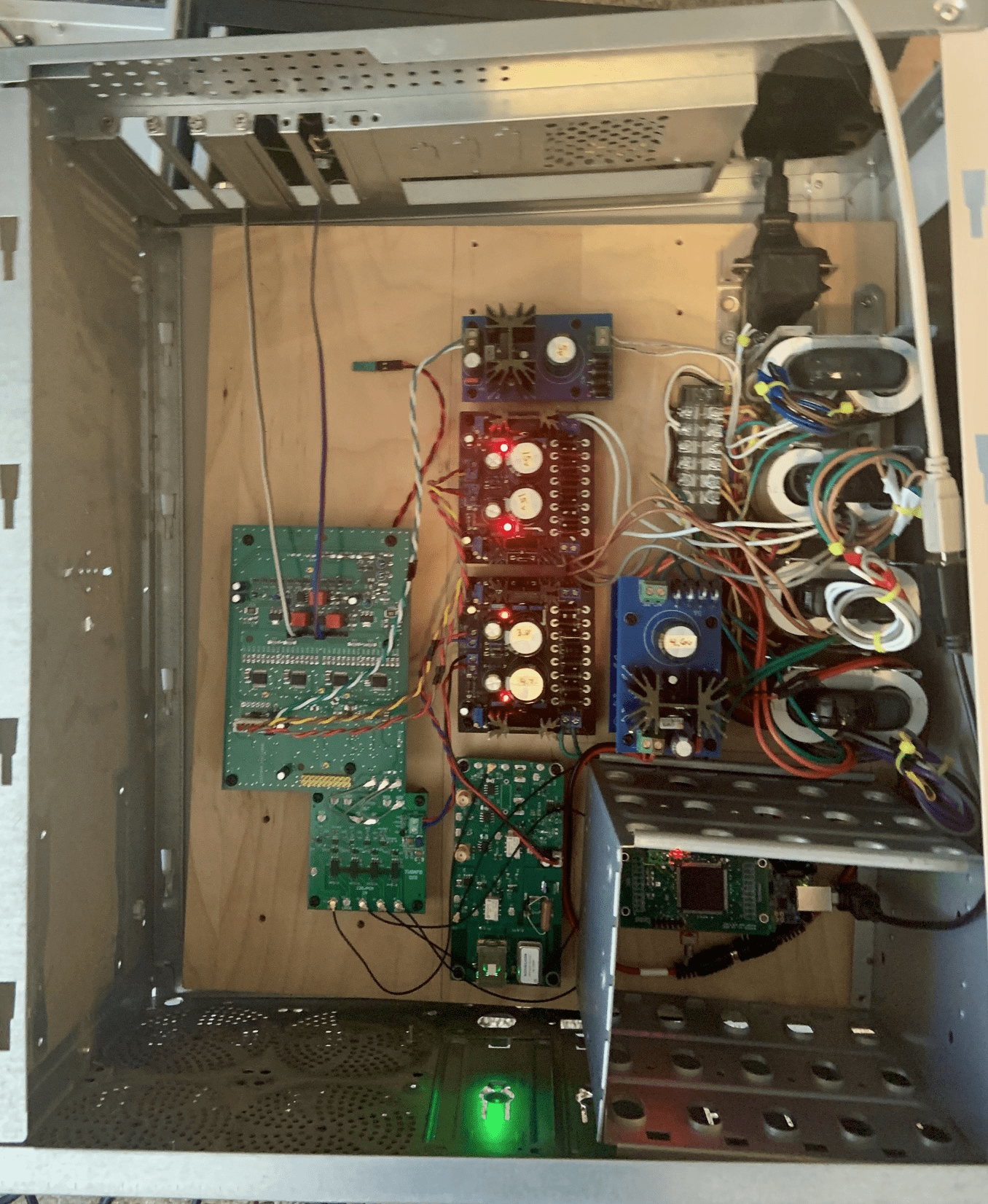

Getting back to Marcel's dac, here is the current state:

Basically it's the same as last time, but with some quickie shielding added (which helps the sound, at least it does in the EMI/RFI environment here). Also, SE analog outputs are routed through an Andrea Mori SE DSD dac discrete output stage. The output stage is powered by another Andrea board, a quad shunt regulator (powered by two split-bobbin transformers not shown in the pic).

It's sounding quite good actually. Probably I will change plans at this juncture and see if I can clock the dac from some reference 22/24MHz clocks. Will see if I can put together a frequency multiplier to drive the I2SoverUSB board.
