I assume you used the Trim Front & End settings. That removes 10s from the beginning and 20s from the end. So you were comparing spectra of 4min30s and 30s recordings.

They were both supposed to be 10s long, starting 10s from the beginning and ending at 20s. Is that not how it works, given there is no manual?

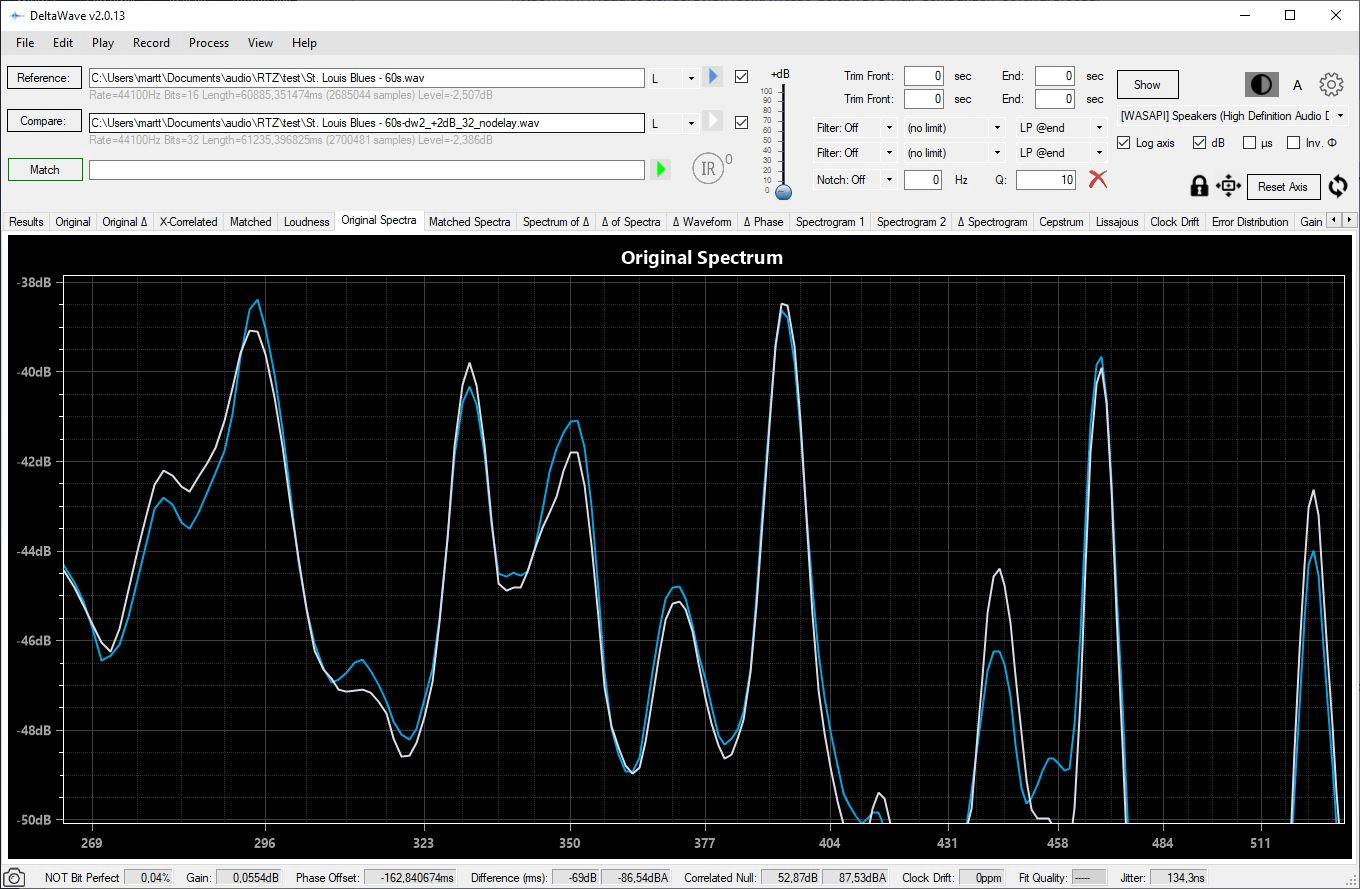

Okay, it appears obvious that some bins need to move up and some need to move down in amplitude, and by different amounts. Only EQ can do that.

This is how the original spectra look when both are 60s recordings (the reference is the first 60s of the original).

Marcel, what do you think?

No. Those spectra are calculated from unmatched waveforms, so there is still a time shift, which can easily cause the spectra to look different.

However the pic is of the average FR over the full 60s. It does not show short-time spectral energy variations, right?

Also, by adjusting bin values it should be possible to achieve arbitrary average FR matching in a numerical sense. IOW, we can carry out the math with arbitrary precision.

If time shift and gain adjustment are done first by autocorrelation, that should solve for optimal matching in the time domain. After that, frequencies should not be shifted unless playback speed or some similar issue has caused a pitch shift in the music.

To repeat: waveforms are matched and spectra are calculated from waveforms. When you make a recording of 60s, the delay (or latency) in the setup will be added. So the resulting recording is 60s+delay (normally the delay is zeros in front). If spectra are calculated from the original waveforms they will be different due to the delay.
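For readers who want to see what "matching in the time domain" can look like, here is a minimal sketch. It is not DeltaWave's actual algorithm, which is not described in this thread. It assumes the delay is a whole number of samples, searches for it with a brute-force cross-correlation between the two waveforms (the usual tool for comparing two different recordings), and then fits a single least-squares gain. The function names and the test signal are made up for illustration.

```python
import math

def estimate_delay(reference, recording, max_lag):
    """Return the lag (in samples) that maximises the cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlate the reference with the recording advanced by `lag` samples.
        score = sum(r * recording[i + lag]
                    for i, r in enumerate(reference)
                    if i + lag < len(recording))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def estimate_gain(reference, aligned):
    """Least-squares gain g such that aligned is approximately g * reference."""
    num = sum(r * a for r, a in zip(reference, aligned))
    den = sum(r * r for r in reference)
    return num / den

# Hypothetical test data: a two-tone signal "recorded" with 7 samples of
# leading silence (latency) and a gain of 0.5.
reference = [math.sin(0.3 * n) + 0.5 * math.sin(1.1 * n) for n in range(200)]
recording = [0.0] * 7 + [0.5 * s for s in reference]

delay = estimate_delay(reference, recording, max_lag=50)
aligned = recording[delay:delay + len(reference)]
gain = estimate_gain(reference, aligned)
print(delay, round(gain, 3))
```

Only after this step do the two waveforms cover the same stretch of music, so spectra computed before it are comparing slightly different material.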

Time segments should be matched first in the time domain.

Was that done for the case in this image?

No. That shows spectra calculated from the original waveforms. The help text for that tab is "Original, unaligned spectra of both waveforms".

I have taken the same stance on any claims based on sighted subjective listening tests. No matter who makes the claim.

We agree that unstructured sighted listening tests lack statistical significance and power.

But ABX is just rank pseudoscience and is just as useless.

Worse, not only can it give no confidence that a failure to reject the null hypothesis results from an absence of audible differences; even a positive test does not provide any actionable intelligence.

The failure to eliminate bias in the subject (commonly subjects know what is being tested and have an opinion; in fact, "Take an ABX Test" is commonly issued as a challenge to individuals who are highly likely to be biased) adds to an already challenging statistical setup.

Hence, if we insist on tests that eliminate bias, have reasonable statistical significance and power and provide actionable intelligence for development, clearly different test protocols are required.

Thus recommending ABX is counterproductive. Recommending casual sighted listening tests without sufficient level matching is counterproductive.

Only tests that involve significant numbers of subjects, that are truly blind (not just to the identity of the devices/algorithms etc. being tested but also to what is actually being tested, which may indeed be no difference at all) AND that give actionable intelligence (e.g. how the items are perceived in relation to specific aspects of sound quality / audible fidelity impairments) are worth bothering with.

Otherwise what we do is in the noise floor and just wastes time.

Thor

I don't disagree with that but until better test protocols are commonly available it is better to use the ones we have. Same with measurements: until Gedlee metric (or any other alternative) is commonly available in SW tools I don't see any point in using it.

I don't consider what diyaudio is about as science. Asking for ABX is simply to have some controls in place.

Most recordings (if not all) go through ADCs. Most of the ADCs that have been used in recordings are quite likely no better than what was used in this test.

Degradation is cumulative and compounding. Losses and misalignments compound. As a result we tend to see a push towards maximum signal fidelity or a minimisation of signal fidelity impairment.

Of course "signal fidelity impairment" is not really reliably related to "audible fidelity" or indeed "good sound". Neither minimum "audible fidelity impairments" nor minimum "signal fidelity impairments" provides subjectively "good sound".

Instead "good sound" seems related to a mix of factors not currently specifically evaluated in audio testing on the one hand and, on the other, masking effects of observable signal fidelity impairments caused by optimising the system for alternative trade-offs in the definition of the goals for signal fidelity.

However, this is not relevant to evaluating a given source file and how it is altered by being passed through a series of conversions, both in the digital and analogue domains.

Such evaluation, unless we adjust our criteria, is limited to signal fidelity.

Otherwise, if we perform listening tests, adding an additional set of conversions is counterproductive.

That is, listening to the re-recorded analogue output of a process that involves PCM-2-DSD conversion in the digital domain, conversion to analogue and amplification in analogue circuits, and then further analogue circuits before conversion to noise-shaped multibit Delta Sigma and from there to PCM, is not a valid test of the DAC by listening.

Thor

We are still guessing at what DeltaWave is doing. It's getting us nowhere. IMHO it's useless without proper documentation.

That said, the same music played twice in a row on the same system should essentially have the same FFT spectrum each time. It doesn't matter when the Play button was pressed. The same goes for two files that are virtually the same as each other. It doesn't matter when play is pressed. The FFTs of the whole recordings should be virtually identical.

Moreover, moving bins up or down in value is EQ, unless the gain factor (multiplier) is the same constant for every bin, in which case it's volume control.

Moving data in bins to the left or to the right is pitch shifting. It's how to make a tenor sound like Darth Vader. Move the tenor's bins to the left to pitch them down.
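The distinction between volume control and EQ can be checked numerically. The sketch below is illustrative only: it uses a plain O(n²) DFT on a made-up two-tone signal and shows that one multiplier applied to every bin is the same as scaling the waveform, while a multiplier applied to one tone's bins changes the balance between the tones. The bin-shifting (pitch) case is left out.

```python
import cmath
import math

def dft(x):
    """Plain discrete Fourier transform (fine for a short demo signal)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(bins):
    """Inverse DFT, returning the real part of each output sample."""
    n = len(bins)
    return [sum(bins[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

n = 32
# Hypothetical signal: two tones sitting exactly on bins 2 and 5.
x = [math.sin(2 * math.pi * 2 * t / n) + math.sin(2 * math.pi * 5 * t / n)
     for t in range(n)]
bins = dft(x)

# Same multiplier on every bin: identical to turning the volume down by half.
volume = idft([0.5 * b for b in bins])

# Different multipliers per bin: only the bin-5 tone (and its mirror bin n-5)
# is halved, so the relative level of the two tones changes. That is EQ.
weights = [0.5 if k in (5, n - 5) else 1.0 for k in range(n)]
eq = idft([w * b for w, b in zip(weights, bins)])

expected_eq = [math.sin(2 * math.pi * 2 * t / n) + 0.5 * math.sin(2 * math.pi * 5 * t / n)
               for t in range(n)]
volume_error = max(abs(v - 0.5 * s) for v, s in zip(volume, x))
eq_error = max(abs(e - s) for e, s in zip(eq, expected_eq))
print(volume_error, eq_error)
```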

That's all I have to say about this subject for now.

When you make a recording of DAC output with an ADC there is no pilot tone (or whatever) to synchronize playback and recording, so the ADC does not know where the actual data starts. If they are started at the same time, the latency of the setup will be included at the beginning. For shorter recordings the FFTs of the waveforms will be different.
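Both views in this exchange can be illustrated with a small, hedged experiment. A pure rotation of the same samples within the analysis window changes only the phase of each bin, so the magnitude spectrum is unchanged. A fixed-length capture that starts with latency is not a pure rotation: silence is added in front and the tail of the material falls outside the window, so the magnitudes change. The signal, window length and delay below are arbitrary choices for the demonstration.

```python
import cmath
import math

def magnitudes(x):
    """Magnitude of each DFT bin of x (plain O(n^2) DFT, fine for a demo)."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n)]

def max_difference(a, b):
    """Largest per-bin difference between two magnitude spectra."""
    return max(abs(p - q) for p, q in zip(a, b))

n, delay = 64, 8
# Hypothetical "music": a tone whose level rises over the capture window.
played = [(1 + t / n) * math.sin(0.9 * t) for t in range(n)]

# Idealised case: the same samples, merely rotated within the window.
rotated = played[-delay:] + played[:-delay]

# Capture with latency: silence in front, and the tail is cut off by the window.
captured = [0.0] * delay + played[:n - delay]

reference = magnitudes(played)
diff_rotated = max_difference(reference, magnitudes(rotated))
diff_captured = max_difference(reference, magnitudes(captured))
print(diff_rotated, diff_captured)
```

The shorter the window is relative to the delay, the larger the share of the captured material that differs from the reference.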

We are still guessing at what DeltaWave is doing. It's getting us nowhere. IMHO it's useless without proper documentation.

Here is the documentation -> https://deltaw.org/mydoc_quickstart.html.

Moreover, moving bins up or down in value is EQ, unless the gain factor (multiplier) is the same constant for every bin, in which case it's volume control.

Moving data in bins to the left or to the right is pitch shifting. It's how to make a tenor sound like Darth Vader. Move the tenor's bins to the left to pitch them down.

Look at the Results tab. It will tell you the gain difference between the files as well as the timing offset. Both values are constant.

Michael

@mdsimon2

How are the matched spectra calculated?

EDIT: Didn't see your query about phase, I think you can only see the delta of phase, not absolute.

Michael

I don't disagree with that but until better test protocols are commonly available

You will not get something as simple as Audio ABX ("just listen ten times for a result") that is also valid. Audio ABX gives an answer quickly and easily; the only problem is that the answer is wrong/useless.

it is better to use the ones we have.

We are back at the streetlight effect.

NO, IT IS NOT BETTER to perform pointless tests that give at best a false confidence in the reality of the outcome.

That is like justifying a search for your lost car keys under the streetlight when you actually dropped them near your car, parked in Cimmerian darkness, mud and weeds.

You can look forever, you will not find your keys.

Same with measurements: until Gedlee metric (or any other alternative) is commonly available in SW tools I don't see any point in using it.

This logic, taken to its extreme, suggests that in the absence of widely available antibiotics, sulfa, disinfectants etc. we should be in favour of weapon salve because that's what's available, even though we can reason very logically that there is no way weapon salve can actually work.

I don't consider what diyaudio is about as science. Asking for ABX is simply to have some controls in place.

Again, Audio ABX lacks balanced confidence levels for avoiding the two kinds of statistical error. Or, put more accessibly, Audio ABX is so strongly biased towards minimising the risk of "false positives" that as a result we have minimal confidence that we are not seeing a "false negative".

Any such test lacks significance AND power in a statistical sense. If you go from "pass/fail" to reasonable statistics (e.g. a confidence interval) you find that with Audio ABX you are in statistical no man's land, where you need to either increase your dataset size dramatically or admit that you cannot conclude anything.

Until and unless ABX can be adjusted to offer results that have both significance and power in a statistical sense, it is the same as the Pepsi test, including the deliberately built-in bias towards a specific outcome.
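The imbalance between the two error types can be put into numbers with a simple binomial model. The sketch below assumes a ten-trial run judged at the conventional 5% significance level, and a hypothetical listener who genuinely hears the difference and picks correctly 70% of the time. Both figures are illustrative assumptions, not values from any particular test.

```python
from math import comb

def tail_probability(n, k_min, p):
    """P(X >= k_min) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(k_min, n + 1))

def pass_threshold(n, alpha=0.05):
    """Smallest number of correct answers whose chance probability is <= alpha."""
    for k in range(n + 1):
        if tail_probability(n, k, 0.5) <= alpha:
            return k
    return None

trials = 10
threshold = pass_threshold(trials)                 # correct answers needed to "pass"
false_positive = tail_probability(trials, threshold, 0.5)

# Hypothetical listener who really is right 70% of the time (an assumption).
power = tail_probability(trials, threshold, 0.7)
false_negative = 1 - power

print(threshold, round(false_positive, 4), round(power, 4), round(false_negative, 4))
```

Under these assumptions the listener needs 9 of 10 correct, the false-positive risk is about 1%, and the chance that a genuinely audible difference is reported as "no difference" is about 85%.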

Thor

We are still guessing at what DeltaWave is doing.

May I suggest a vastly superior approach?

1) Validate the digital domain for matching gains. Non-matching digital gains can be compensated later, if known.

2) Set up chains for the two systems that are reliably aligned at the sample start, and compensate latency at the source (hopefully latency is not variable).

3) Perform nulling in the analogue domain. Using modern methods nulls of better than 60dB should be possible.

4) Amplify, Record and Analyse the Analogue domain subtraction result. Recording the two signals in parallel and adding a latency compensated version of the original would aid additional analysis. Always generate as much data as feasible.

Now we have a valid test of the actual DACs, algorithms etc.
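To give a feel for what a 60dB null demands, here is a small numerical sketch. It does not model the analogue nulling itself. It only computes the depth of a subtraction residual from sampled data, using a made-up sine wave and an assumed gain mismatch of 0.1% between the two paths, with no timing error at all.

```python
import math

def rms(x):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def null_depth_db(a, b):
    """Level of the residual (a - b) relative to a, in dB."""
    residual = [p - q for p, q in zip(a, b)]
    return 20 * math.log10(rms(residual) / rms(a))

# Hypothetical signal and a second path with a 0.1% gain error (assumption).
signal = [math.sin(0.05 * n) for n in range(2000)]
mismatched = [1.001 * s for s in signal]

depth = null_depth_db(signal, mismatched)
print(round(depth, 2))
```

A 0.1% gain error alone limits the null to about -60dB, which is why step 1 (matching gains) and step 2 (sample-accurate alignment) come before the subtraction.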

Thor

PS: as a test signal I would propose adding noise loading, that is, a multiband-filtered white noise spectrum, which is the noise equivalent of multitone testing as opposed to single-frequency sine wave signals.

Please describe which of the above effects would be determined to be audible or inaudible by use of DeltaWave.

I don't know what is audible to you.

I think @bohrok2610 suggested this in the beginning, but if you listen to the two files (DeltaWave also offers a comparator that you can use to listen after the files have been time / gain aligned -> https://deltaw.org/comparator.html) and point out areas where you can reliably tell a difference, we could then look at the DeltaWave results to help understand the differences at those moments in time.

Michael
