I hope this question is not too far off topic...

I've often read that two FIR filters can be combined into one in order to achieve the same result as cascading both filters. I just never grasped how to take two FIR filters and join them into a new one. How's this actually done in practice?

You just convolve the two impulse responses with each other. That gives you a new filter whose length is the sum of the lengths of the two original filters minus one (N + M - 1 taps). The new filter likely has very small amplitude at the ends, so you can probably re-window it and cut the length down a bit without losing anything of value.
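A minimal sketch of the idea, in plain Python so it runs without extra packages (in practice `numpy.convolve` in its default "full" mode does the same job). The filter taps and the test signal are arbitrary example values, not real crossover filters. It checks that running a signal through `h1` and then `h2` gives the same output as running it once through `convolve(h1, h2)`.

```python
def convolve(a, b):
    """Full linear convolution; the result has len(a) + len(b) - 1 taps."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def fir_filter(taps, signal):
    """Apply an FIR filter to a finite signal (zero initial state, full-length output)."""
    return convolve(taps, signal)

# Arbitrary example filters and test signal (hypothetical values)
h1 = [0.25, 0.5, 0.25]          # 3-tap smoothing filter
h2 = [1.0, -0.5]                # 2-tap filter
x = [1.0, 0.0, 2.0, -1.0, 0.5]  # test signal

cascaded = fir_filter(h2, fir_filter(h1, x))  # two filters, one after the other
combined = convolve(h1, h2)                   # 3 + 2 - 1 = 4 taps
single_pass = fir_filter(combined, x)         # one combined filter

assert len(combined) == len(h1) + len(h2) - 1
assert len(cascaded) == len(single_pass)
assert all(abs(p - q) < 1e-12 for p, q in zip(cascaded, single_pass))
print(combined)  # prints [0.25, 0.375, 0.0, -0.125]
```

With long room-correction filters, the combined filter is where you would then apply the re-windowing and truncation mentioned above.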

Is the mute functionality in the GUI different from the mute functionality when using get_mute() and set_mute()?

I just checked, yes it's different. The GUI doesn't use the mute functionality; it just sets the volume to -99 dB to mute. I don't remember why it's like this (haven't touched that part for quite a while), but it's definitely not good. I'll change it to use the set/get_mute commands.

Thanks for the explanation. If I understand this correctly, convolving impulse a with impulse b would give the same result as convolving b with a, right? Sorry for these "explain to me like I'm five" type of questions. I suck at math; my greatest talent is putting square pegs into round holes.

Yes convolving a with b gives the exact same result as convolving b with a.

It's easier to see in the frequency domain. There, convolution becomes simple multiplication, and multiplication is commutative: A·B = B·A, where A and B are the spectra of a and b.
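A small numerical check of both statements, using a naive DFT from the standard library (a real implementation would use an FFT). The example taps are arbitrary. Both spectra are zero-padded to N + M - 1 points, which is what makes the DFT product correspond to linear rather than circular convolution.

```python
import cmath

def convolve(a, b):
    """Full linear convolution of two tap lists."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def dft(x, n):
    """Naive n-point DFT of x, zero-padded to length n."""
    x = list(x) + [0.0] * (n - len(x))
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
            for f in range(n)]

a = [0.25, 0.5, 0.25]
b = [1.0, -0.5, 0.1]
n = len(a) + len(b) - 1  # enough padding for linear convolution

ab, ba = convolve(a, b), convolve(b, a)
# Time domain: a convolved with b equals b convolved with a
assert all(abs(p - q) < 1e-12 for p, q in zip(ab, ba))

# Frequency domain: the spectrum of the convolution is the product of the spectra
A, B, AB = dft(a, n), dft(b, n), dft(ab, n)
assert all(abs(A[f] * B[f] - AB[f]) < 1e-9 for f in range(n))
```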


Thanks for the feedback. Not a huge deal for me, as I don't use the GUI for volume control, but I figured it might be something worth changing.

Michael

Wondering if I can write a Java app on Mac to detect a sample rate change and reload the config, or does the websocket need a Python app only?

Websocket is a very common standard. You can use any language as long as there is a websocket client library available for it (which probably means nearly all languages). You also need libraries for reading and writing JSON and YAML (also available for nearly all languages).

Sounds good... I use Java daily, so I prefer that. JSON and YAML should be fine.
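To illustrate that the language does not matter, here is a sketch of only the JSON side of the exchange, in Python with the standard library; a Java client would build and parse the same strings with any websocket and JSON library. Assumption (verify against the websocket documentation for your CamillaDSP version): commands without an argument are sent as a bare JSON string such as `"GetCaptureRate"`, commands with an argument as a one-key object, and replies look like `{"GetCaptureRate": {"result": "Ok", "value": 48000}}`. The helper names are hypothetical, and the actual websocket connection is left out.

```python
import json

# ASSUMPTION: message/reply shapes as described above; check your version's docs.

def make_command(name, arg=None):
    """Serialize a command as the JSON text to send over the websocket."""
    return json.dumps(name if arg is None else {name: arg})

def parse_reply(text, name):
    """Return the 'value' of a reply to command `name`, or raise if it failed."""
    reply = json.loads(text)[name]
    if reply.get("result") != "Ok":
        raise RuntimeError(f"{name} failed: {reply}")
    return reply.get("value")

def rate_changed(last_rate, reply_text):
    """Hypothetical polling helper: compare a GetCaptureRate reply with the last rate seen."""
    rate = parse_reply(reply_text, "GetCaptureRate")
    return rate, (last_rate is not None and rate != last_rate)

# Example with a made-up reply, as if the source switched from 44.1 to 48 kHz
rate, changed = rate_changed(44100, '{"GetCaptureRate": {"result": "Ok", "value": 48000}}')
```

When `changed` is true, the app would then load or generate the config for the new rate and send it back over the same connection.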

I finally got an RME UFX+ to use with CamillaDSP.

It presents itself as a 94-channel device. How do I figure out the correct channels to use in CamillaDSP?


By trial and error? IIUC it has 12 analog channels; they would likely be in one block, maybe the first channels?
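One way to do the trial and error is to route a single capture channel to both outputs and step through the channels until the wanted input shows up. The sketch below builds such a mixer as a Python dict. Assumptions: the field names (`channels`, `in`, `out`, `mapping`, `dest`, `sources`, `channel`, `gain`, `inverted`) follow the mixer format as I recall it from the CamillaDSP docs and may differ between versions; `probe_mixer` is a hypothetical helper. The output is printed as JSON, which YAML parsers generally accept, or you can write the equivalent YAML by hand.

```python
import json

def probe_mixer(capture_channels, probe_channel, out_channels=2):
    """Hypothetical helper: route one capture channel to every output, all others silent."""
    if not 0 <= probe_channel < capture_channels:
        raise ValueError("probe_channel out of range")
    return {
        "channels": {"in": capture_channels, "out": out_channels},
        "mapping": [
            {"dest": dest,
             "sources": [{"channel": probe_channel, "gain": 0, "inverted": False}]}
            for dest in range(out_channels)
        ],
    }

# Start with capture channel 0 of the 94 the device exposes, then try 1, 2, ...
mixer = probe_mixer(94, 0)
print(json.dumps({"mixers": {"probe": mixer}}, indent=2))
```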

The graphic display of filters... I'm on a somewhat old build, so this might have changed, but I don't get the display. The vertical lines: what are these? There are 8 of them between 10 Hz and 1 kHz...?? When I point at the curve to find 100 Hz, I don't hit a line. Below is a low shelf, 150 Hz, Q 0.5. There must be something fishy going on here ;-D

//


Another one.... Mono...

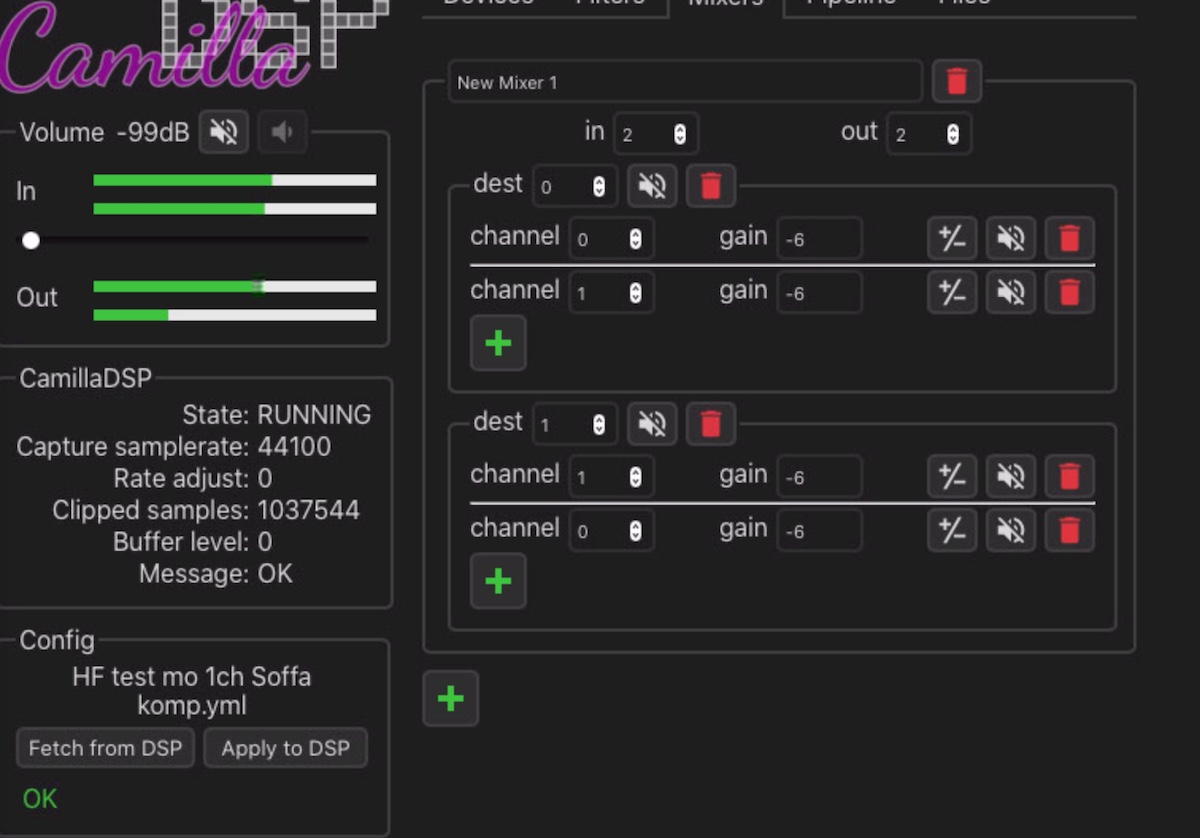

Isn't my mixer the way to turn 2-channel stereo into 2 channels that are both mono, derived from the stereo input channels?

I see the output level meters jump differently, indicating that they are not the same?

//


The previous version had some issues with the scales and tick marks in the plots. This will be much improved in the next one!

//

That looks correct, should work. Did you also add it to the pipeline?
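For reference, a stereo-to-mono mixer of the kind discussed here can be sketched as follows: each of the two outputs gets input 0 plus input 1, each at -6 dB (a factor of about 0.5). Assumptions: the dict mirrors the CamillaDSP mixer fields as I recall them (check the docs for your version), and `mono_mixer`/`apply_mixer` are hypothetical helpers. Applying the mapping to a few sample frames confirms that, right after such a mixer, the two channels are identical.

```python
def db_to_lin(db):
    """Convert a gain in dB to a linear factor."""
    return 10 ** (db / 20)

def mono_mixer(gain_db=-6.0):
    """Hypothetical helper: 2 in -> 2 out, each output = (L + R) scaled by gain_db."""
    return {
        "channels": {"in": 2, "out": 2},
        "mapping": [
            {"dest": d, "sources": [
                {"channel": 0, "gain": gain_db, "inverted": False},
                {"channel": 1, "gain": gain_db, "inverted": False}]}
            for d in range(2)
        ],
    }

def apply_mixer(mixer, frame):
    """Apply the mapping to one input frame (one sample per input channel)."""
    out = [0.0] * mixer["channels"]["out"]
    for m in mixer["mapping"]:
        for s in m["sources"]:
            sign = -1.0 if s["inverted"] else 1.0
            out[m["dest"]] += sign * db_to_lin(s["gain"]) * frame[s["channel"]]
    return out

frames = [[0.5, -0.2], [0.1, 0.3], [-0.4, -0.4]]
mixed = [apply_mixer(mono_mixer(), f) for f in frames]
assert all(left == right for left, right in mixed)  # identical right after the mixer
```

Any filter that differs between the two channels after this point will, of course, make them differ again.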

Yes, and muting destinations works from the Mixer page.

Is it really mono? Where is the info for the output level meters taken from?

//


The level meters take the values from the playback thread, just before the samples are sent to the output device. They should definitely be identical; there may be a bug here.


//

Could you show the Pipeline tab of the GUI?

Does it behave differently if you flip the order of the sources for channel 1? (It's first 1, then 0 now; try putting them the other way around.)

I twiddled with the source channels (the fields labeled "channel", which should be called "source" to match the "dest" used in that window) but found no difference. Sent you the config files.

//

//

Got the files and looked through the config. The confusion is that the mixer is placed at the beginning of the pipeline. That makes the two channels identical to begin with, but then the pipeline contains quite different filters for the two channels. For example the -6dB Gain filter is only applied to channel 1.

Just move the mixer to the end!
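Why the position matters can be shown with two tiny stand-in steps: a downmix that makes both channels equal, and a -6 dB Gain filter applied to channel 1 only, like the one in the config. The functions are hypothetical simplifications, not CamillaDSP code; the point is only that the pipeline steps run in order, so a per-channel filter placed after the mixer breaks the equality the mixer created.

```python
def db_to_lin(db):
    """Convert a gain in dB to a linear factor."""
    return 10 ** (db / 20)

def mix_to_mono(frame):
    """Stand-in mixer: both outputs = average of L and R."""
    m = 0.5 * (frame[0] + frame[1])
    return [m, m]

def gain_ch1(frame, db=-6.0):
    """Stand-in Gain filter on channel 1 only."""
    return [frame[0], frame[1] * db_to_lin(db)]

frame = [0.8, 0.2]
mixer_first = gain_ch1(mix_to_mono(frame))  # mixer at the start of the pipeline
mixer_last = mix_to_mono(gain_ch1(frame))   # mixer at the end of the pipeline
print(mixer_first[0] == mixer_first[1])  # False: channel 1 was attenuated after the mix
print(mixer_last[0] == mixer_last[1])    # True: the mix makes both outputs identical
```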


It's not that the channels are not leveled; it's that the difference between them varies, like between stereo channels... As mono, they should have a constant level relation, but they don't here. That's what's so strange. I still wonder where in the pipeline the output level meters measure?

This is for development of a speaker of which I only have one unit for now. So ch1 is the tweeter and ch0 is the bass of a 2-way speaker. I don't want L in the tweeter and R in the bass... hence the differences in filters. But I had hoped for mono into the pipeline by cross-mixing the incoming channels...

Mixer placement in the pipeline... how should I think about that? Any rules?

//


The level meters measure input level just after the capture device, before the data gets sent to the pipeline, and output level after the pipeline just before the data gets sent to the output device.

If you start with mono as two identical channels, and then apply very different filters to the two channels, then I would expect the levels to behave exactly as you describe. Depending on the input, they will have stronger and weaker passages at different times.

Try one simple test: remove all filters and keep just the mixer in the pipeline. Now the output levels for the two channels should be identical.

Where in the pipeline to place the mixer depends on what you want to accomplish.
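The level behavior described above (one mono signal feeding two channels with very different filters) can be reproduced with a crude sketch. Assumptions: a one-pole low-pass stands in for the bass filters and a first difference for the tweeter high-pass; these are not the actual filters from the config. A bass-heavy passage followed by a treble-heavy one shows the level difference between the channels swinging from strongly positive to strongly negative, which is what the meters were showing.

```python
import math

def one_pole_lowpass(x, a=0.9):
    """y[n] = (1 - a) * x[n] + a * y[n-1]: crude stand-in for the bass filters."""
    y, prev = [], 0.0
    for s in x:
        prev = (1 - a) * s + a * prev
        y.append(prev)
    return y

def first_difference(x):
    """y[n] = x[n] - x[n-1]: crude high-pass stand-in for the tweeter filters."""
    return [s - p for s, p in zip(x, [0.0] + x[:-1])]

def rms_db(block):
    """RMS level of a block in dB relative to full scale (1.0)."""
    return 20 * math.log10(math.sqrt(sum(s * s for s in block) / len(block)))

fs = 48000
n = 4800  # 0.1 s per passage
low = [math.sin(2 * math.pi * 100 * i / fs) for i in range(n)]    # bass-heavy passage
high = [math.sin(2 * math.pi * 8000 * i / fs) for i in range(n)]  # treble-heavy passage
mono = low + high  # the same mono signal feeds both channels (mixer first)

ch0 = one_pole_lowpass(mono)  # "bass" channel
ch1 = first_difference(mono)  # "tweeter" channel

diffs = {}
for name, part in (("100 Hz passage", slice(0, n)), ("8 kHz passage", slice(n, 2 * n))):
    diffs[name] = rms_db(ch0[part]) - rms_db(ch1[part])
    print(f"{name}: ch0 - ch1 = {diffs[name]:+.1f} dB")
```

Channel 0 comes out far louder than channel 1 during the 100 Hz passage and clearly quieter during the 8 kHz passage, even though both started from identical samples.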
