This looks like a great test load, PMA. For the nonlinear L2 device, do you have winding/core details? People will have to be able to build this.

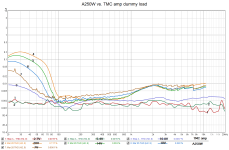

This load shows deep impedance dips and large phase angles in regions of the audio band where you would normally get a lot of music energy, so it's an ideal test bed IMV.

(Maybe a BOM also?)

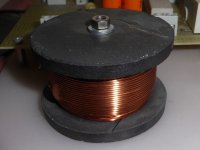

> For the nonlinear L2 device, do you have winding/core details because people will have to be able to build this.

Unfortunately not exactly. Please see the photo below: diameter = 65 mm, height = 40 mm, Cu wire diameter = 1 mm.

---------------------

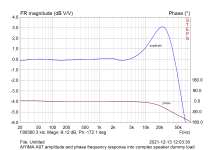

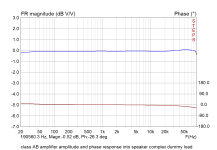

All, I prefer hard data to debates or anecdotal stories on amplifier testing, so here are some more plots.

I have been giving this whole AFOM idea some more thought. I do not think it should be used as a weapon, as we see on some websites that are obsessed with THD as the sole measure of performance or quality. At the end of the day, people listen to what they like, and no one (objectivist or subjectivist) has any right to tell them what is right or wrong. The objective should be to indicate that an amplifier has been well-engineered within the constraints of the designer's chosen approach. For example, if someone decides to go for a zero-feedback amplifier, the assessment framework should still be able to recognize a well-designed amplifier, despite higher THD than one using global feedback, because the amplifier is exemplary in overload recovery, hum and noise, etc.

The assessment should also act as a trigger to designers if there are areas of major failure. If, for example, the hum/noise only measures -40 dBV, that is clearly a problem that has to be revisited in the design. So the tool serves to highlight problems during the design/engineering phase, and then for final assessment.

IMV, we should not make the measures so tight and constrained that only one kind of amplifier gets a top rating, e.g. 1 ppm distortion, 130 dB PSRR, 20 milliohm Zout, etc.
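To put two of the figures in this post into more familiar terms, here is a small conversion sketch: a hum/noise level in dBV expressed in volts, and an output impedance expressed as a damping factor. The helper names are hypothetical, and the 8 Ω nominal load is an assumption, not something stated in the post.

```python
def dbv_to_volts(level_dbv: float) -> float:
    """dBV is dB relative to 1 V RMS, so volts RMS = 10 ** (dBV / 20)."""
    return 10 ** (level_dbv / 20)


def damping_factor(z_out_ohms: float, load_ohms: float = 8.0) -> float:
    """Damping factor = nominal load impedance / amplifier output impedance.

    The 8-ohm default is an assumed nominal load, not part of the original post.
    """
    return load_ohms / z_out_ohms


print(f"-40 dBV hum/noise = {dbv_to_volts(-40) * 1000:.1f} mV RMS")
print(f"20 mOhm Zout into 8 Ohm = damping factor {damping_factor(0.020):.0f}")
```

So -40 dBV is 10 mV RMS of hum/noise, and a 20 mΩ output impedance corresponds to a damping factor of 400 into 8 Ω.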

Separately, what we should be guarding against is dogma that says a certain approach is better, or unfounded subjective (both from the subjectivists and objectivists) claims about the performance of an amplifier.

I will try to start a first draft next week for review.

It's been some hours... I waited.

I'll just offer some thoughts... I have a passion for (and perhaps some relevant experience in) standards... so...

> I do not think it should be used as a weapon

Agreed. No bias.

> performance or quality

No... just performance. Quality in and of itself is a tenuous term that brings subjectivity into the mix. Who defines high quality?

> The objective should be to indicate that an amplifier has been well-engineered within the constraints of the designer's chosen approach

Not in my opinion. That requires an engineering analysis and a level of understanding of the designer's intent. If the designer intended to create a 10% THD amplifier for their own reasons... they've met their objective. Who's to say that's not a quality amplifier? A standardized set of measurements is a herculean task on its own. Deciding whether something was done "well" or not (in someone's eyes) is, again, completely subjective.

I know there are some that may say that it's "easy" to determine if something is well-engineered or not. Wonderful, then let those that review the results make that determination.

I advocate for reporting the facts without "judgment" or pre-determined merit. Let the reviewer decide if the results are "good or bad".

> only one kind of amplifier gets a top rating

Again... if you don't worry about a "rating", that's irrelevant. All types of amplifiers can be reported on.

From my experience with standards committees, it's hard enough to determine a set of measurements that a group of reasonable people can agree indicate (let alone encompass) the performance of a product. It's damn-near impossible to get people to agree upon what makes something "good" or not and assign a rating.

As you've said....

> no one (objectivist or subjectivist) has any right to tell them what is right or wrong

I agree... so why bother with a "rating"...?

> Separately, what we should be guarding against is dogma that says a certain approach is better, or unfounded subjective (both from the subjectivists and objectivists) claims about the performance of an amplifier.

YES!

So... just report the results. No weighting. No "rating". It can be an unprecedented set of tests that (while likely not all-inclusive) can be consistently performed by a relatively large number of people for the purposes of comparing the objective characteristics of various audio amplifiers.... nothing more... nothing less.

IMO, it cannot and should not be implied that some "high score" means that one amplifier is "better" than another. The controversy around "SINAD" alone is enough to make me queasy.

People can make their own determinations based on their own acceptance criteria... unless the dream is that this become more marketing than engineering. 😉. I'm KIDDING!

My ... 1 cent.

I'll be excited whichever way this turns... it's long overdue.

A problem is that we have no objective tests for stereo imaging, and no common test for asymmetrical crosstalk. All we have for imaging are listening tests. And nobody in the forum, nor even reputable companies like Purifi, can afford to do them to professional publication-quality standards. IIRC @lrisbo of Purifi said they have done some informal testing in collaboration with a university, but they can't afford to do listening tests of publication quality.

Also, it seems to me the people who speak out most emphatically about the uselessness of non-controlled listening tests may be some of the least skilled listeners out there. So of course it's natural for them to believe what they say. However, consider this: the professionals who record, mix, and master the music you listen to all do it sighted. It takes them years to gain the listening skills they need, and not everyone who starts out on that journey has what it takes to succeed. Those people are skilled experts, and yes, they can be fooled if you set out to fool them, but the way they normally work, using equipment they are familiar with, reference CDs, etc., is all sighted. So there are obviously some people who are better at sighted listening than others, just like anything else, I might add. Some people can get really good at playing professional basketball; not everybody can. And it takes a long time and a lot of practice to get good at it.

Returning to the subject of stereo imaging, I have two headphone amps with fabulous, incredible objective measurements. Neither one has a crossfeed. I tried both of them as line amps in my stereo system. One destroyed the imaging. The other was lopsided. Neither one was usable. How could that happen? My guess would be that it's because AP analyzers don't have an objective test for it.

IMHO, the failure of the designer to perform proper subjective listening tests resulted in the sale of two faulty products.

^ FWIW... I agree to an extent. We just perhaps look at it slightly differently.

To me... It's not a problem that "we" don't have those tests. However, it would be nice if we did and/or if they were cheaper, less time consuming, and more reliable.

We have lots and lots of meaningful tests that can be conducted. As long as people quit with the seemingly intentionally contentious arguments around "one set of tests to rule them all", whether those tests are objective, subjective, or a combination of both, I think we're just fine.

I've never run into another situation personally where so many people like to argue over whether more information is better or not. Sure, information can be misinterpreted. Bad analysis of even the most controlled data can lead to incorrect conclusions.

However, and this is a very careful however... what's more dangerous is garbage / uncontrolled data. I have no problem whatsoever with people claiming they can hear something that others claim they couldn't possibly. What harm does it cause? Too many 'objectivists' seem to want to save 'subjectivists' from their own peril. Who gives a rat's patootey? People can have some fun. It's a hobby for most people. Similarly, too many 'subjectivists' continually, incessantly, and forgive me for saying, annoyingly, point out each and every time that listening is the only way to truly evaluate things properly. I'm not in any way implying that you're one of those people... but it happens in every thread. It's inevitable.

Use the data... or don't. As long as no one tries to imply "quality" or "better sound" ... groooooooooooooovy in my book. People can use the data to draw whatever conclusions they may. One piece of a complex puzzle.

I think you mentioned a medical background. If not, my apologies. If so, you know that ANY kind of properly controlled human study is $$$$$ compared to hooking up a bit of kit to some other kit and jotting down some results. Don't throw the baby out with the bathwater ... the objective tests have value. Just because we can't do a full study of each and every facet of each and every amplifier with objective and subjective tests.... doesn't mean that we can't use what we have. Let's face it, people are going to make whatever decisions they're going to make anyway re: the overall objective vs. subjective argument. That's not a hill I'm going to die on.

All I advocate for is more controlled measurements and standardized collection of the objective data. That doesn't mean I discount (proper) listening tests in any way... or that I discount people's opinions re: what they like. Heck, I have my own theory that I'd NEVER pay to test. I think a vast majority of my friends from a particular country prefer a different tonal profile to what I prefer. Almost to a person... Yes, this is anecdotal and FILLED with bias. So, anything re: "preference", I leave out anyway. I like what I like... even if it's wrong and meaningless. I'm not publishing in a peer-reviewed journal. I'm having fun.

Now... as for trained listeners. Wonderful. I personally like the methods that look for "defects" or differences vs. preferences. I'm sure there are better listeners out there than I. No question. Do I trust paid critics/reviewers? No, not much. I won't discount that's perhaps because my tastes don't align with theirs and/or my audio memory sucks wind or that I'm just highly susceptible to confirmation bias... or their rooms are better than mine or...or...or...or... One thing is true though; unless I was experiencing the same sensations they were at the same time, and under the same circumstances ... I don't care what they think. Others may hang on their every word. We can all live happily.

That's just fun conversation....

As for "AFOM" - I support whatever comes out. However, I'll only participate if everyone that uses / shares the data uses the same methodology for the line-item tests to allow meaningful and controlled comparison of the data sets. One person's

Combining a set of objective measurements by means of a subjective weighting will give you a subjective judgement. Not an objective one.

> I've never run into another situation personally where so many people like to argue over whether more information is better or not.

There is research showing that too much information can be worse than a moderate amount. When there is too much information to easily make sense of, people may just throw up their hands and ignore it all.

^ Very true. Doesn't go against my point nor is the likely reason people are arguing, but nonetheless that's accurate.

So... let's leave out the subjective side of things, and focus on the topic of the thread which is objective measurements. 😉

You know I'm kidding. I've enjoyed the chat. Thanks!

Design quality, i.e. 'Did I as a designer attend to all the important things to make sure my design is foolproof and meets certain minimum standards on a range of parameters?', is exactly what AFOM is trying to address. It's also trying to make sure effort and complexity aren't wasted on parameters that, beyond a certain point, mean nothing. No one will ever hear the difference between 0.01% distortion and 1 ppm distortion, and designers who chase that metric at the expense of other more important metrics should not get rewarded for it.
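For scale, the two distortion figures above are 40 dB apart. A quick conversion, treating THD as a simple amplitude ratio (the helper name `ratio_to_db` is just for illustration):

```python
import math


def ratio_to_db(ratio: float) -> float:
    """Convert an amplitude ratio (e.g. THD as a fraction) to dB."""
    return 20 * math.log10(ratio)


thd_a = 0.01 / 100  # 0.01 % expressed as a fraction
thd_b = 1e-6        # 1 ppm expressed as a fraction

print(f"0.01 % = {ratio_to_db(thd_a):.0f} dB")          # -80 dB
print(f"1 ppm  = {ratio_to_db(thd_b):.0f} dB")          # -120 dB
print(f"gap    = {ratio_to_db(thd_a / thd_b):.0f} dB")  # 40 dB
```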

> and designers who chase that metric at the expense of other more important metrics should not get rewarded for it.

Emphasis mine. That's what I have trouble with. It's now subjective. You seem to be heading toward weighting certain things more than others.

I truly don't have an issue at all, and I'll do what very little I can to support the effort, but your statements alone tell me that there's subjectivity around it.

I have no horse in the race, and I'm excited either way.

A monstrous kudos to you for taking on just getting a list of tests together. Now, if there are actual methods for those tests (to be followed strictly) along with sampling and reporting guidelines, then we're talking good stuff.
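As a rough illustration of what strict methods plus reporting guidelines might mean in practice, the sketch below stores each result together with the conditions it was measured under and refuses to compare results taken under different conditions. The record layout (`Conditions`, `Measurement`) and the example numbers are hypothetical, not an agreed AFOM format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conditions:
    """Conditions that must match before two results may be compared.

    The fields are illustrative assumptions, not an agreed test method.
    """
    test: str            # e.g. "THD+N"
    load: str            # e.g. "8 ohm resistive" or a named reactive test load
    freq_hz: float       # stimulus frequency
    output_w: float      # output power during the test
    bandwidth_hz: float  # analyzer measurement bandwidth


@dataclass(frozen=True)
class Measurement:
    amp: str
    conditions: Conditions
    value: float
    unit: str


def comparable(a: Measurement, b: Measurement) -> bool:
    """Two results are comparable only if conditions and units are identical."""
    return a.conditions == b.conditions and a.unit == b.unit


def compare(a: Measurement, b: Measurement) -> str:
    """Side-by-side report that refuses mismatched conditions instead of guessing."""
    if not comparable(a, b):
        raise ValueError(f"{a.amp} and {b.amp} were not measured under the same conditions")
    return f"{a.amp}: {a.value} {a.unit} vs {b.amp}: {b.value} {b.unit}"


# Made-up numbers, for illustration only.
at_8_ohm = Conditions("THD+N", "8 ohm resistive", 1000.0, 5.0, 22000.0)
at_4_ohm = Conditions("THD+N", "4 ohm resistive", 1000.0, 5.0, 22000.0)
a = Measurement("Amp A", at_8_ohm, -95.0, "dB")
b = Measurement("Amp B", at_8_ohm, -88.0, "dB")
c = Measurement("Amp C", at_4_ohm, -101.0, "dB")

print(compare(a, b))
print(comparable(a, c))
```

Frozen dataclasses get value-based equality for free, so "same conditions" is simply `==` on the `Conditions` record.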

Regardless of the answer that you're clearly looking for... My point is and will remain... You said you wanted an objective assessment. You're making it subjective.

I like the objective metrics (potentially scaled accordingly) all by themselves. As soon as there is a judgement re: audibility or importance, it's subjective. It may be subjectively "correct", but it is by definition subjective.

Showing (as an example) a "2" rating vs. the actual data (to me) isn't the way to go.

With that said, I'll always offer my thoughts, and I look forward to continued discussions. I may be dead wrong. I like to learn and discuss. I am new to audio, but not to standards creation. I've already had my mind changed about a few things just by reading others' comments and yours too.

It's not a hill for me to die on or "argue" about. It's simply an opinion, and I think a well-reasoned opinion. I appreciate that you even read my thoughts, consider them, and respond to them.

^  👍

👍

Yes, and I suppose where I'm coming from is a passion for agreeing on a set of specifications and how they'll be measured consistently across a broad group of people.

Less important (to me) is an overall metric. However, the really, really nice thing about having a lot of raw specification data gathered in a controlled manner is that it can be used to compare amplifiers in a meaningful way with any number of "algorithms".

There could be the Bonsai algorithm (which will surely be the most popular and the best) along with the ItsAllInMyHead algorithm, which people will ignore b/c it puts a disproportionate (to some) amount of weight on cost per watt.

I'm not saying the world will end if there is some sort of "rating" with "weightings". It's simply that they are by definition subjective. I'd like to at the very least ensure that if the trouble is gone through to collect a lot of data / measurements across a variety of amplifiers that it's not only distilled down to a "56".
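A toy sketch of the point that one controlled data set can feed several ranking "algorithms", and that the weighting is where the subjectivity enters. The amplifiers, their numbers, and both scoring functions are hypothetical; they loosely stand in for the tongue-in-cheek algorithms mentioned above and are not anyone's actual proposal.

```python
# Raw, unweighted data (made-up numbers for illustration only).
amps = {
    "Amp A": {"thd_db": -110.0, "noise_uv": 20.0, "power_w": 100.0, "cost": 1000.0},
    "Amp B": {"thd_db": -90.0, "noise_uv": 60.0, "power_w": 200.0, "cost": 400.0},
}


def low_distortion_first(spec: dict) -> float:
    """Hypothetical scoring that rewards low distortion and low noise."""
    return -spec["thd_db"] - spec["noise_uv"] / 10


def cost_per_watt_first(spec: dict) -> float:
    """Hypothetical scoring that cares mostly about watts per unit cost."""
    return 100 * spec["power_w"] / spec["cost"]


def rank(data: dict, score) -> list:
    """Order amplifier names from highest to lowest score."""
    return sorted(data, key=lambda name: score(data[name]), reverse=True)


print(rank(amps, low_distortion_first))  # ['Amp A', 'Amp B']
print(rank(amps, cost_per_watt_first))   # ['Amp B', 'Amp A']
```

The raw data never changes; only the scoring function does, and that alone flips the ranking.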

tl;dr - show me the spec sheet!

🙂

Thanks to all. I'll bow out now. I don't want to suck the oxygen out of the room. I think my point's clear, and saying it over and over doesn't add to a thoughtful discussion.

Too much information is certainly overwhelming if there is no goal. Without a goal, there is no way to put the information in order as pertains to something. If there is too much information you can't just keep it all in your head and allow a goal to emerge from it. Many people only work this way and they will find themselves unable to cope once complexity reaches a certain level. To get past this you learn to break things down into smaller goals, which often seem oversimplified to the point of absurdity, and yet are the only practical way to proceed.

This is just what a "figure of merit" is. What we are trying to do for the user is to present differences in amplifier specs in a way that is relevant to an end user's goals.

For instance, someone might want to find the quietest amp possible; sorting amps by noise alone, they might end up with a unity-gain buffer, which won't get loud enough with their source.
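A sketch of the difference between "sort by noise" and "sort by noise among amps that meet the goal". It assumes a hypothetical goal expressed as a target output power into a load from a given source voltage, so the required voltage gain is sqrt(P·R) / V_source. All names and numbers are illustrative, and it ignores whether an amp can actually deliver that power without clipping.

```python
import math

# Made-up example data: voltage gain (V/V) and output noise (uV RMS).
amps = [
    {"name": "Unity-gain buffer", "gain": 1.0, "noise_uv": 2.0},
    {"name": "Amp X", "gain": 20.0, "noise_uv": 15.0},
    {"name": "Amp Y", "gain": 28.0, "noise_uv": 40.0},
]


def required_gain(target_w: float, load_ohms: float, source_vrms: float) -> float:
    """Voltage gain needed to reach target_w into load_ohms from source_vrms."""
    return math.sqrt(target_w * load_ohms) / source_vrms


def quietest_for_goal(amps: list, target_w: float, load_ohms: float, source_vrms: float) -> list:
    """Drop amps that cannot reach the goal, then sort the rest by noise."""
    need = required_gain(target_w, load_ohms, source_vrms)
    usable = [a for a in amps if a["gain"] >= need]
    return sorted(usable, key=lambda a: a["noise_uv"])


naive = sorted(amps, key=lambda a: a["noise_uv"])
print(naive[0]["name"])  # Unity-gain buffer

best = quietest_for_goal(amps, target_w=50.0, load_ohms=8.0, source_vrms=2.0)
print([a["name"] for a in best])  # ['Amp X', 'Amp Y']
```

With a 2 V source and a 50 W / 8 Ω goal, the required gain is 10, so the buffer drops out before the noise sort rather than winning it.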

A good thing to do would be to list all the ways in which current amplifier comparisons are not relevant to the end user's goals. The clearer the problem we are trying to solve, the more likely we are to find a solution.

I would say that from a very basic and objective perspective, all parameters matter equally. This might feel "wrong" from some perspectives, but if we want an objective technical figure of merit, I think it needs to be unweighted in terms of the included measurements. There will be some weighting anyway, because we can't just multiply the values coming out of, e.g., 12 measurements. But I think we should skip the discussion of whether clipping is half as important as 3-tone IMD. Define the measurements that we know matter, and figure out how to give each measurement type a figure of merit. Sum the figures for an amp merit... and here comes the weighting, whether we like it or not... or?
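The "give each measurement a figure of merit, then sum" idea can be sketched as below. Each measurement is mapped onto 0..1 between a "poor" bound and a "good enough" bound on a log scale, clamped so that improvements past "good enough" earn nothing extra, and the per-measurement scores are summed with equal weights. The measurement names and bounds are hypothetical, not proposed AFOM values, and choosing them is itself an implicit weighting.

```python
import math

# Hypothetical bounds: (poor, good_enough). Lower values are better for all three.
BOUNDS = {
    "thd_ratio": (1e-2, 1e-4),   # 1 % (poor) .. 0.01 % (good enough)
    "noise_vrms": (1e-3, 1e-5),  # 1 mV .. 10 uV
    "zout_ohms": (1.0, 0.05),    # 1 ohm .. 50 mohm
}


def sub_score(value: float, poor: float, good: float) -> float:
    """Map a 'lower is better' value onto 0..1 by log interpolation, clamped."""
    t = (math.log10(poor) - math.log10(value)) / (math.log10(poor) - math.log10(good))
    return min(1.0, max(0.0, t))


def figure_of_merit(measurements: dict) -> float:
    """Equal-weight sum of the per-measurement scores."""
    return sum(sub_score(measurements[k], *BOUNDS[k]) for k in BOUNDS)


amp = {"thd_ratio": 1e-6, "noise_vrms": 1e-4, "zout_ohms": 1.0}
print(round(figure_of_merit(amp), 3))  # 1.5 (1.0 + 0.5 + 0.0)
```

Note that the 1 ppm THD here scores no better than 0.01 % would, because of the clamp.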

//

All parameters and measurements are important, but there are always limits beyond which further improvement is pointless and useless. Past that point it becomes expensive, with no audible effect. To me, parameters are important, but stability and reliability come first. All else seems to be an individual ego competition.

Well, do we want to limit the scope to just reliability then? That isn't too hard, but it does have the problem that we can't necessarily determine reliability without destructive testing or taking it apart. I had written a post about test loads but didn't bother to send it.

If we choose not to include sound quality however we will end up with poor amps ranked high just because those amps don't explode.

If we really want a useful metric, we must somehow contend with the fact that 0.5% tube distortion can sound okay while 0.05% crossover distortion can sound bad. I'm not sure this is as hard as it's made out to be; we just need to set a reasonable threshold for crossover distortion.

There are papers that give equations for audibility metrics for different kinds of distortion. We know of the GedLee metric, and I thought another was posted in this thread. No single metric is perfect, so we could use two or three of the most successful ones. No one seems to want to, or know how to, convert those equations into a form we can actually use, though.
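As a possible starting point for turning one of those equations into something usable, here is a numerical sketch of the GedLee metric in the form it is commonly quoted: Gm = sqrt( ∫ from -1 to 1 of cos²(πx/2) · T''(x)² dx ), where T is the static transfer curve with input normalised to full scale = ±1. The cos² weight makes curvature near the zero crossing count most, which is why crossover-type errors score badly. The two curves are toy models (gentle second-order curvature as a stand-in for "tube-like" distortion, a narrow gain dip around zero as a stand-in for crossover distortion) with arbitrary parameters, not fitted to the 0.5 % / 0.05 % figures above. A memoryless transfer curve also says nothing about frequency-dependent distortion. As a check on the numerics, for T(x) = x + a·x² we have T'' = 2a and the cos² weight integrates to 1 over [-1, 1], so Gm = 2|a|.

```python
import numpy as np


def gedlee_metric(transfer, n: int = 20001) -> float:
    """Numerically approximate the GedLee metric of a static transfer curve.

    Assumes the commonly quoted form
        Gm = sqrt( integral over [-1, 1] of cos^2(pi*x/2) * T''(x)^2 dx )
    with the input x normalised so that full scale is +/-1.
    """
    x = np.linspace(-1.0, 1.0, n)
    dx = x[1] - x[0]
    y = transfer(x)
    # Second derivative by applying finite differences twice.
    d2y = np.gradient(np.gradient(y, dx), dx)
    # cos^2 weighting: curvature near the zero crossing counts most.
    f = np.cos(np.pi * x / 2.0) ** 2 * d2y ** 2
    # Trapezoidal rule written out, to avoid depending on np.trapz vs np.trapezoid.
    integral = float(np.sum((f[1:] + f[:-1]) * 0.5 * dx))
    return integral ** 0.5


def soft_curve(x, a=0.1):
    """Toy 'tube-like' curve: gentle second-order curvature."""
    return x + a * x ** 2


def crossover_curve(x, depth=0.1, width=0.05):
    """Toy crossover model: a narrow gain dip around the zero crossing."""
    return x * (1.0 - depth * np.exp(-(x / width) ** 2))


for label, curve in [("linear", lambda x: x), ("soft", soft_curve), ("crossover", crossover_curve)]:
    print(f"{label:10s} Gm = {gedlee_metric(curve):.3f}")
```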

No, but you need neither 2 kV/µs slew rate nor 0.000001% THD. They bring nothing audible, but most probably worse reliability. Of course, you would get a high score in the ego charts 😉.

- AFOM: An attempt at an objective assessment of overall amplifier quality