Another update:

I was getting intermittent results in the lower frequencies (sub and bass) and identified the root cause as the RT (Ringing Truncation) stage of DRC-FIR. Once it was disabled (I will revisit it later in more detail), the low-frequency amplitude corrections consistently snapped into place.

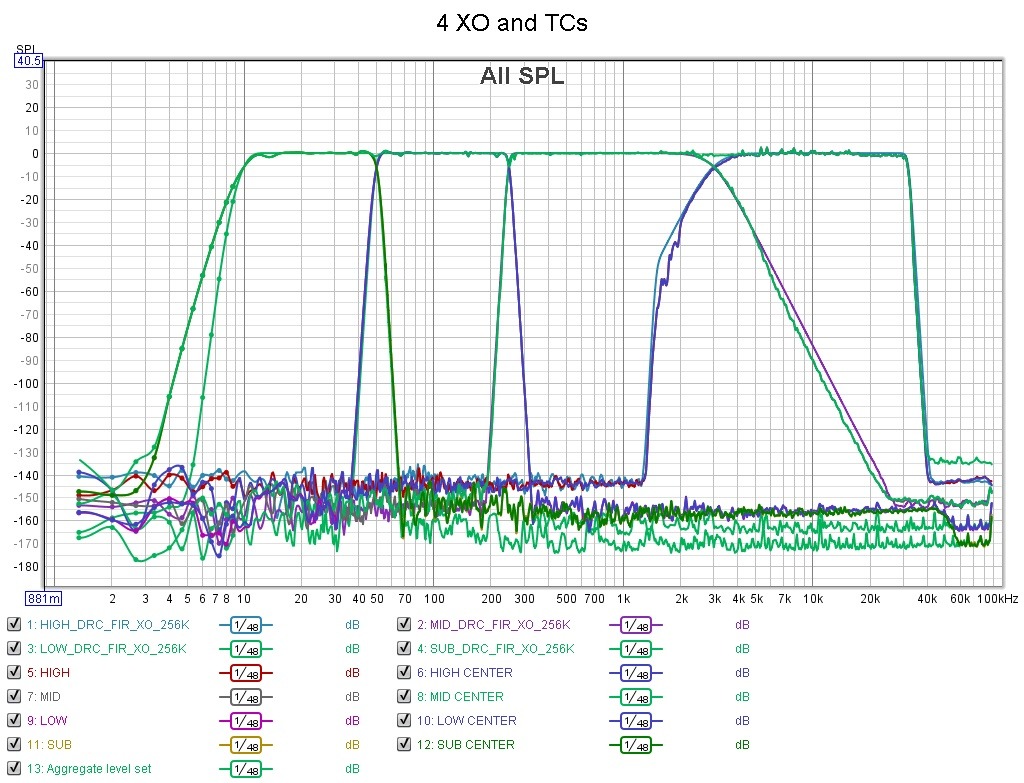

The following are 4 actual sweeps (sub, bass, midrange and tweeter), each corrected and test-convolved individually, then merged into an aggregate test convolution of all 4 drivers.

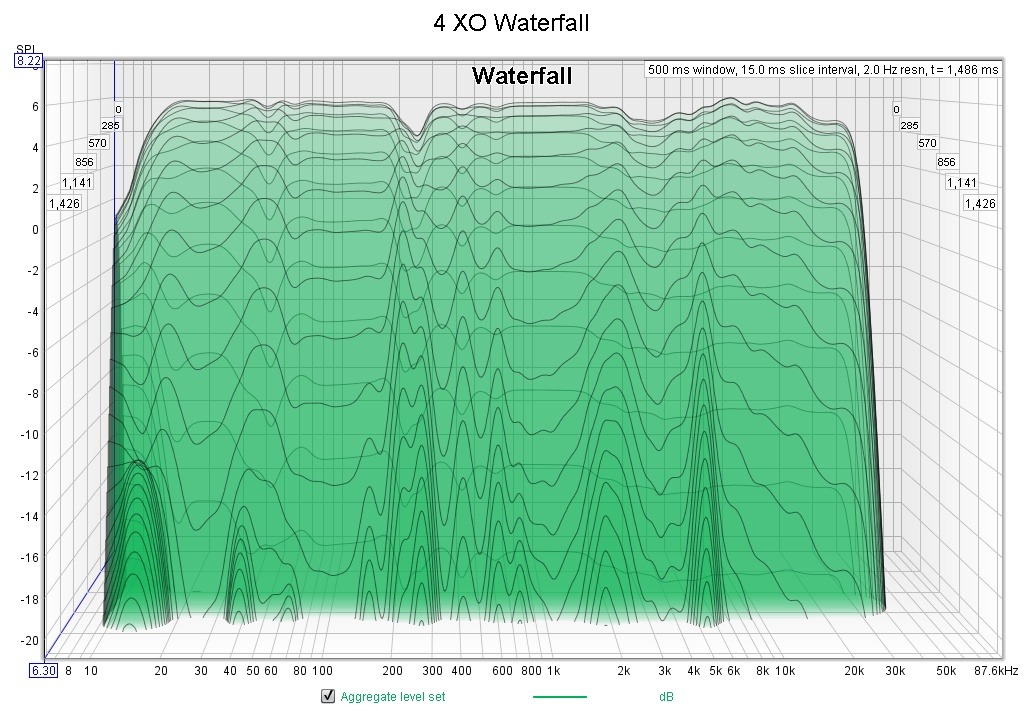

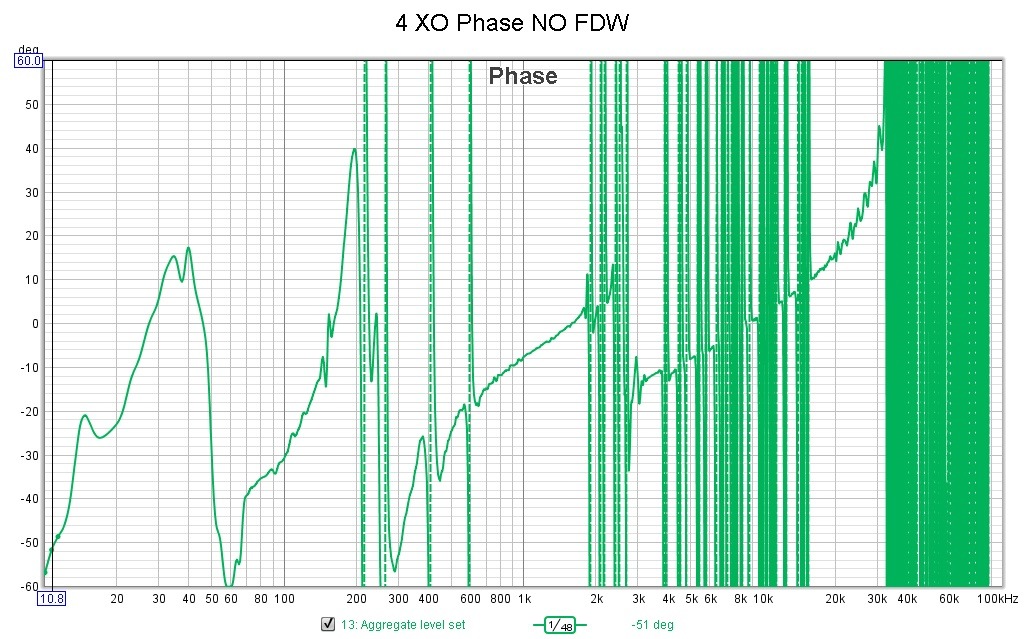

Next, I am working on better automating the phase integration between the 4 drivers; the current state is shown in the last plot (with no FDWing enabled). The phase discontinuity between the bass and midrange panels shows up as non-flat summing across the XO region (visible in the waterfall plot at 250Hz), which I hope to have automated shortly.

Plots are displayed with Blackman-Harris-4 windowing, no FDWing and 1/48-octave smoothing for readability.

176.4kHz sampling rate, 256K taps.

The waterfall uses 1/3-octave smoothing.

Crossover points at 50Hz, 250Hz and 3kHz.

Be aware that the final SPL level is determined per channel rather than set at a fixed level.

So some manual tuning is required to get the levels right, both between the channels on one side and between the left and right outputs.

It might be something you want to address, though it is done this way to avoid any clipping.

I have created a tool to convert back and forth between dB and relative RMS values, so the RMS levels between drivers can be adjusted without guesswork. Enter the desired relative delta in dB and it generates the relative RMS/NormFactor setting. I am now trying to incorporate it into the controller to automatically balance the RMS levels between all driver XOs/corrections in the left and right channels.
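The dB/RMS conversion such a tool performs is the standard amplitude relation, ratio = 10^(dB/20). A minimal sketch (the function names are hypothetical, not the author's utility), assuming the NormFactor scales the FIR's amplitude linearly. That assumption holds for max, sum and Euclidean norms alike, since each scales in direct proportion to gain:

```python
import math


def db_to_ratio(delta_db: float) -> float:
    """Amplitude (RMS) ratio for a level change in dB: 10 ** (dB / 20)."""
    return 10.0 ** (delta_db / 20.0)


def ratio_to_db(ratio: float) -> float:
    """Level change in dB for an amplitude (RMS) ratio: 20 * log10(ratio)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20.0 * math.log10(ratio)


def new_norm_factor(current: float, delta_db: float) -> float:
    """Scale an existing RMS/NormFactor by a relative delta in dB."""
    return current * db_to_ratio(delta_db)


if __name__ == "__main__":
    # Lower a driver by 6 dB relative to its current NormFactor of 1.0.
    print(new_norm_factor(1.0, -6.0))
```

For example, -6 dB corresponds to a factor of about 0.501 and +3 dB to about 1.413.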

I figured out how to sum FIRs in source code (instead of doing it in REW with the trace arithmetic functions), so I can make composite FIRs in addition to merging FIRs. I made some general library tools that can be directed to either sum or multiply in the frequency domain to support both functions. I wish I had known how to play with FFTs a long time ago.
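Summing spectra is equivalent to summing taps in the time domain, while multiplying spectra is equivalent to linear convolution, provided the FFT is long enough to avoid circular wrap-around. A NumPy sketch of both modes (`merge_firs` is a hypothetical helper, not the modified DRC-FIR code):

```python
import numpy as np


def merge_firs(firs, mode="sum"):
    """Merge FIRs in the frequency domain.

    mode="sum":  spectra are added (same as adding the time-domain taps).
    mode="mult": spectra are multiplied (same as linear convolution).
    """
    firs = [np.asarray(h, dtype=float) for h in firs]
    if mode == "sum":
        n = max(len(h) for h in firs)
    elif mode == "mult":
        # Length of the full linear convolution of all inputs; the FFT must be
        # at least this long or the tail wraps around onto the start.
        n = sum(len(h) for h in firs) - len(firs) + 1
    else:
        raise ValueError("mode must be 'sum' or 'mult'")
    nfft = 1 << (n - 1).bit_length()  # next power of two >= n
    spectra = [np.fft.rfft(h, nfft) for h in firs]  # rfft zero-pads to nfft
    total = np.sum(spectra, axis=0) if mode == "sum" else np.prod(spectra, axis=0)
    return np.fft.irfft(total, nfft)[:n]
```

The "mult" result is longer than any input, which is why it needs retapping afterwards, and a "sum" is only meaningful when the FIRs share the same time reference (for example the same peak position).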

The tool can now composite (sum) all XOs per channel into a single FIR for analysis (see cyan plot below) without having to do it manually in REW. It can also create test convolutions of each XO/correction with its corresponding signal and then composite all of the test convolutions per channel into a single FIR for analysis (see gold plot below).

Once the composite XO and composite test convolutions are generated, they can be quickly compared in REW.

My rig is stereo 4-way, so it can composite (4 drivers) x (2 channels) x (N plot sets) in seconds. This greatly speeds up the analysis work in REW, and the new composite functionality should feed into the following work.

There are 3 different normalization options in DRC-FIR, and I am now working on automatically generating the relative normalization settings in DRC-FIR, so that reliance on relative gain settings in JRMC or CamillaDSP is minimized and hopefully eliminated, along with manual intervention.
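A sketch of what "normalizing to a NormFactor" means, assuming the three DRC-FIR options are the maximum-absolute (M), sum-of-absolute-values (S) and Euclidean (E) norms; that mapping is an assumption here, and the function names are hypothetical:

```python
import math


def fir_norm(taps, norm_type="E"):
    """'M' = max |h|, 'S' = sum |h|, 'E' = Euclidean sqrt(sum h^2)."""
    if norm_type == "M":
        return max(abs(x) for x in taps)
    if norm_type == "S":
        return sum(abs(x) for x in taps)
    if norm_type == "E":
        return math.sqrt(sum(x * x for x in taps))
    raise ValueError("norm_type must be 'M', 'S' or 'E'")


def normalize(taps, norm_factor=1.0, norm_type="E"):
    """Scale taps so that their chosen norm equals norm_factor."""
    current = fir_norm(taps, norm_type)
    if current == 0:
        raise ValueError("cannot normalize an all-zero FIR")
    scale = norm_factor / current
    return [x * scale for x in taps]
```

One caveat for relative balancing: equal norm values mean equal energy (E), peak (M) or tap sum (S), not equal in-band SPL, so the relative NormFactors between drivers still have to come from measurements.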

The following plot shows the crossovers, the uncorrected signal and the generated corrections. Note: The purple sub XO has a deliberate 6dB/octave bass boost in it, so it shouldn't be flat.

I discovered that by doing corrections at the driver level, one may be able to achieve a finer degree of control of the artifacts and THD.

In this plot, it became apparent that the bass driver had the most severe corrections (due to room modes), which resulted in elevated THD, while the same settings were fine for the other drivers. As such, each driver could get more or less correction based on its unique situation and characteristics. In my situation, the bass needs a WE of about 0.7 to get rid of the artifacts, whereas the other drivers are happy with higher WE settings.

Aggregating the individual PS FIRs would be another use for the composite tool and subsequent analysis.

Here is another plot of the bass driver's correction settings (WE of 1.0 vs 0.8), which cause artifacts in the bass driver only, not in the other 3.

Note the extended purple "tines" that are attenuated in the blue plot with the lower WE setting of 0.8.

The code is getting close to becoming my daily driver. Progress is being made in spite of the requisite learning curve. There are a few more refinements in the works once I find appropriate automated solutions for them. One is improving each driver's phase as a subset of the whole system, and possibly incorporating the requisite delays into each FIR. Like the automated gain leveling, this could minimize or eliminate reliance on the convolver's delay settings.

Any and all ideas, critiques and constructive criticism are welcome.

TTYL

I do have a question: is the merge function like a toolbox component? Say I have 2 FIR files I want to merge; can I do it with your version or script?

And what length and which placement of the IR peak would that get me? For instance, if I merge two IRs of different lengths? Or if one IR has a different peak placement than the other...

I have merged FIR files in a lot of different ways, but I've always had the need to cut them to length to make it work.

Tedious work that I'd like to get rid of. 🙂

What tools have you used to merge files and cut them to length? Maybe I can glean some tips from them.

Right now I am "retapping" everything to the same length before and after the merge. I modified the code to output in "like" formats to get everything to "play nice" together. I am planning on adding configurable #taps and normalizations. If there is a better way to do this, please chime in.

I still need to come up to speed on "cut them to length" if it is done differently than applying windows to them around their center points. DRC-FIR uses mostly Blackman windows so I have stayed the course with the new code.
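"Retapping" as described here (cut to a fixed length around the impulse, then apply a Blackman window) can be sketched as follows. It assumes the absolute peak is the alignment reference, which also answers where the peak ends up: at index `n_taps // 2`. `retap` is a hypothetical helper, not DRC-FIR's implementation:

```python
import numpy as np


def retap(h, n_taps):
    """Cut a FIR to n_taps samples centred on its absolute peak, then apply a
    Blackman window so the truncated ends fade smoothly to zero."""
    h = np.asarray(h, dtype=float)
    peak = int(np.argmax(np.abs(h)))
    start = peak - n_taps // 2  # peak lands at index n_taps // 2
    out = np.zeros(n_taps)
    # Copy the overlapping part; positions outside the original FIR stay zero.
    src_lo = max(start, 0)
    src_hi = min(start + n_taps, len(h))
    if src_hi > src_lo:
        out[src_lo - start:src_hi - start] = h[src_lo:src_hi]
    return out * np.blackman(n_taps)
```

A full-length Blackman window tapers everything away from the centre, so a FIR with significant energy far from its peak will be altered; a window with a flat middle section would be gentler.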

I am open to suggestions for appropriate use cases and additional functionality, but want to first work towards completion of the automated driver level solution.

I will add a CLI to DRC-FIR for the merges:

(e.g. `drc --merge --[sum|mult] --MergeIn=A.pcm --MergeIn=B.pcm [--MergeIn=C.pcm ...] --MergeOut=out.pcm`).

I see a need to sum more than 2 FIRs (e.g. N-Way speaker).

Is there a need to multiply more than 2 FIRs??? Might as well add the functionality should the use case ever arise.

I added convenience shell calls out to SoX after the merged PCM has been written to convert the PCM to a WAV file so it can be fed straight into REW, JRMC and/or CamillaDSP. Actually, it calls out to my wrapper to bypass SoX's 32-bit thunking on F64 files.
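The wrapper itself isn't shown in the thread. Purely as an illustration, here is a stdlib-only sketch that wraps headerless 64-bit float PCM in a WAV header (IEEE float, format tag 3, with the `fact` chunk non-PCM formats carry), so no 32-bit intermediate is involved. It assumes the `.pcm` data is little-endian float64; whether each downstream tool accepts 64-bit float WAV is worth checking:

```python
import struct
import sys
from array import array


def f64_wav_bytes(samples, sample_rate, channels=1):
    """Build a 64-bit IEEE-float WAV file (format tag 3) from interleaved samples."""
    data = array("d", samples)
    if sys.byteorder == "big":
        data.byteswap()  # WAV sample data is little-endian
    payload = data.tobytes()
    block_align = channels * 8
    frames = len(data) // channels
    # fmt: tag, channels, rate, byte rate, block align, bits per sample, cbSize
    fmt = struct.pack("<HHIIHHH", 3, channels, sample_rate,
                      sample_rate * block_align, block_align, 64, 0)
    body = (b"WAVE"
            + b"fmt " + struct.pack("<I", len(fmt)) + fmt
            + b"fact" + struct.pack("<II", 4, frames)
            + b"data" + struct.pack("<I", len(payload)) + payload)
    return b"RIFF" + struct.pack("<I", len(body)) + body


def raw_f64_to_wav(raw_pcm: bytes, sample_rate: int, channels: int = 1) -> bytes:
    """Wrap headerless little-endian F64 PCM (e.g. a merged .pcm output)."""
    data = array("d")
    data.frombytes(raw_pcm)
    if sys.byteorder == "big":
        data.byteswap()  # convert from little-endian file order to native
    return f64_wav_bytes(data, sample_rate, channels)
```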

Currently, I am calling the functions from test-harness subroutines within the code, using 256K taps, 176.4kHz and F64 samples. I plan to make it more generic, but that will require additional CLI parameters (and/or a config file) for each supplied input/output file.

Instead of wearing out my shiny new NVMe drive, the test code writes out to a ramdisk. =)

The code was rubber-stamping (cut-n-paste) the output-file code for each output file, so I have tried to rewrite those sections, replacing the redundancies with calls to reusable functions to ease maintenance and allow new functionality to be added with minimal code growth. The Octave plotting code also made heavy use of cut-n-paste where reusable functions would have made it much smaller and more manageable.

Other functionality that has been added: the ability to retap FIRs and to dump the various window models from the CLI.

Any specific options you would like to see with the retapping function (nTaps, desired window, etc.) ???

Basically I've used anything I could find; I even convolved files with JRiver (record to disk) and REW.

Using JRiver required a longer WAV file as input, and the result was cut to length using an old version of CoolAudio Pro.

Using REW to combine FIRs also took some after-work, but I have to admit I've never tried using the windowing in REW to do the cutting.

It might just work like that. I've often used RePhase to generate some corrective EQ FIR files after using DRC, mainly to remove some over-correction, to balance the left and right channels, or simply to add a crossover slope. EQ work is sometimes done in REW too and saved as a FIR file there.

I don't rely on DRC-FIR to get it all right in one go. I've always tweaked the results further, due to the quirks of line arrays and their behavior, but also to cater to my room: with 4 channels available in the bass region, one can use the strong points of each "way" to get a better end result.

For that to happen I do not always use a 'text-book' crossover. The sub might help out in an area where the array faces a (room) null, or vice versa.

Basically I measure the speaker(s) (using some pre-EQ) and run that through DRC-FIR (I usually run about 3 sweeps per channel).

Next I run another sweep (or 3) with the FIR correction in place to get the baseline of the correction.

After that step, some EQ is applied to balance left and right and to target over-correction (both in the bass, where the room is dominant, and in the top end, where combing might influence the applied correction).

These steps are done within REW, sometimes with some additional RePhase work. For that to go well I need to convolve several FIR files together: the basic correction together with some corrective EQ work and/or RePhase-generated FIR files.

I wouldn't dream of automating all of that, but I would be open to better tools to join FIR files.

Even though the above seems like a lot of work, I think it is worth it for me, to adjust it to my specific room/situation.

I've spent a lot of time trying to "read my room" to know what is what: finding where potential nulls are, where reflections come from, etc.

I measure at several spots in my listening "area" to find what those measurements have in common. I've found that a single-spot correction works best, rather than a vector average of multiple measurements, but it is still good to know what is position-dependent and what is common within that space.

Line arrays are quite forgiving in that area, except for parallel planes. Single drivers show more position-dependent quirks.

I can't quite get the summation of FIR files for an N-way speaker. The way I use them (6 channels), I use one FIR file (or one left/right channel of a stereo FIR) per channel and use the configuration script language to define which FIR file goes where. Anyway, I do usually convolve more than 2 files together 😉.

But what do you mean by that? Is it about the DRC correction together with the crossover slopes on both ends? Or is there more going on...

I run at a 44100 Hz sample rate and use 65536 taps (impulse centered). I let JRiver scale the correction for other sample rates. Most of my music is CD-based, so I don't see the need to upsample everything. I used to do that, but after rigorous testing I had to conclude I really did not hear a difference.
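For comparison with the 176.4kHz / 256K-tap setup used earlier in the thread (assuming "256K" means 262,144 taps), both setups give the same filter duration and the same frequency-bin spacing, because the tap count and the sample rate both differ by a factor of 4:

$$
T = \frac{N}{f_s}:\qquad \frac{65\,536}{44\,100\ \text{Hz}} \approx 1.486\ \text{s}, \qquad \frac{262\,144}{176\,400\ \text{Hz}} \approx 1.486\ \text{s}
$$

$$
\Delta f = \frac{f_s}{N}:\qquad \frac{44\,100\ \text{Hz}}{65\,536} \approx 0.673\ \text{Hz}, \qquad \frac{176\,400\ \text{Hz}}{262\,144} \approx 0.673\ \text{Hz}
$$

So the higher rate adds bandwidth above 22.05 kHz rather than low-frequency resolution.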

Yes, I've heard differences in some higher-sample-rate recordings compared to the ordinary CD versions, but after checking their spectrum with a simple spectrum analyzer, those differences came down to mastering, showing a different frequency balance. So I do have a collection of high-res audio, based on a preference for their specific mastering. Just my opinion/experience with high-res audio; I expected to find more differences than balance alone.

I bet the bit-rate can make more difference than the sample rate in the end. My current DAC is 24 bit.

I meant bit depth, obviously, not bit-rate 😉. Reminder to myself: don't reply when in a hurry 🙂.

Higher bit depth plus a higher sample rate isn't a bad thing to strive for, though, if your hardware can process it adequately.

I believe you can merge (i.e. get the H(f) of several cascaded filters) in Vituix, of all places. Draw the schematic with a G(f) block for each filter, then export the impulse response of the filter chain's buffer. Vituix has an IR to G(f) function at the bottom of its tools menu.

I use summation to simulate/predict/analyze/test/debug how the aggregated N XOs and the various stages of processing would perform in unison against a baseline full-range measurement and solution.

This is in addition to analyzing the various steps at a per driver level (without summation) to find out where the solution algorithms are NOT performing as expected (e.g. as you suggested, the phase of each driver mating into the phase of the next driver causing discontinuities in the sums for one use case). More on this later.

I sum:

NOTE: XO=Crossover, MC=Mic Corrected Measurement, PS=Solution, IS=Inverse, PSXO=Combined XO and PS, PSXOTC=Test Convolution of PSXO against the original sweep measurements.

- Raw Crossovers: (SubXO + LowXO + MidXO + HighXO)

- Mic Corrected Measurements: (SubMC + LowMC + MidMC + HighMC)

- Raw Solutions: (SubPS + LowPS + MidPS + HighPS)

- Raw Inverses: (SubIS + LowIS + MidIS + HighIS)

- Test Convolutions: (SubPSXOTC + LowPSXOTC + MidPSXOTC + HighPSXOTC)

- Etc.

My scripts run solutions on each driver individually and all drivers as a full range sweep (so I actually have 5 sweep measurements per channel: Sub, Low, Mid, High, Full).

I can then compare the summed aggregate plots against the full-range plots as a baseline to verify how the driver-level code changes perform against the baseline DRC-FIR full-range code. That is how I identified which parts of DRC-FIR do not like/work at the driver level. It can also help identify where corrections might be better addressed externally with the physical hardware setup.

Now for the "More on this later" mentioned above:

After asking if the code should support multiplying more than 2 FIRs, I found a use-case. I discovered I can make pure phase FIRs in RePhase (e.g. +/- 10, 15, 45, 90, 135 degrees with no other changes). I can then merge/multiply those against the individual driver solutions to modify the overall phase of each driver. I haven't figured out how to do this in code by modifying the imaginary portions of the FFT yet (reading up on that as we speak), but happily stumbled (blind squirrel theory) on this merge/multiply method.
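Applying a frequency-independent phase rotation directly to the FFT, rather than merging a RePhase FIR, means multiplying every positive-frequency bin by the same unit complex number, which changes the real and imaginary parts together. A NumPy sketch; `rotate_phase` is hypothetical, the sign convention (positive degrees = phase lag) is an assumption that may be opposite to RePhase's, and whether it reproduces the RePhase-generated FIRs exactly is unverified:

```python
import numpy as np


def rotate_phase(h, degrees, n_fft=None):
    """Shift the phase of a real FIR by a constant angle at all frequencies.

    Positive-frequency bins are multiplied by exp(-j*theta); irfft supplies the
    matching negative frequencies, so the output stays real. The DC bin (and the
    Nyquist bin for even lengths) must stay real, so it only gets cos(theta).
    Zero-padding via n_fft reduces circular wrap of the rotated response's tails.
    """
    h = np.asarray(h, dtype=float)
    nfft = len(h) if n_fft is None else int(n_fft)
    theta = np.deg2rad(degrees)
    H = np.fft.rfft(h, nfft)
    rot = np.full(H.shape, np.exp(-1j * theta), dtype=complex)
    rot[0] = np.cos(theta)
    if nfft % 2 == 0:
        rot[-1] = np.cos(theta)
    return np.fft.irfft(H * rot, nfft)
```

As a sanity check, `rotate_phase(h, 180)` simply returns `-h`, the same polarity flip a 180-degree merge FIR provides.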

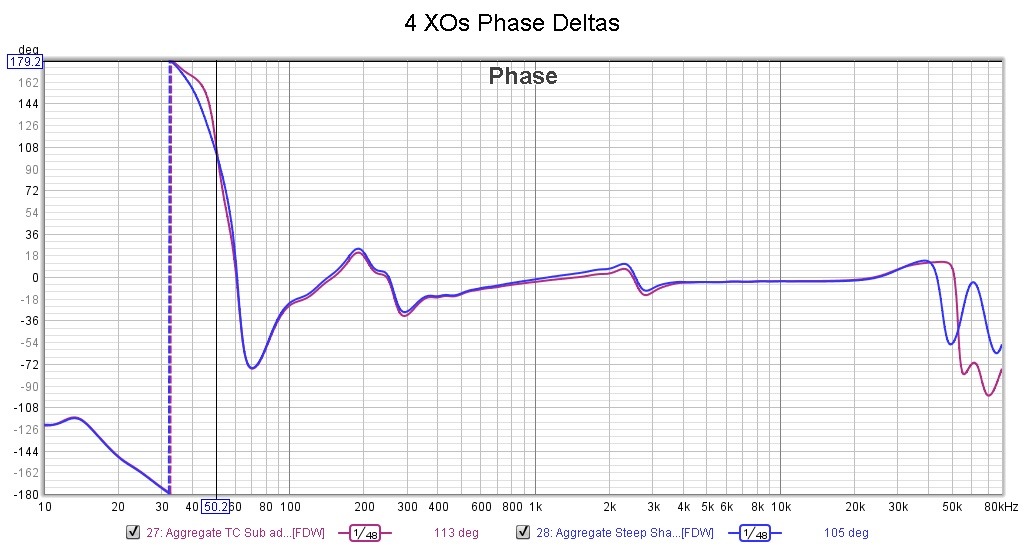

DRC-FIR had the HIGH and MID phases fairly flat and horizontal ( ---- and ---- ) though could still use some minor improvements.

My SUB and BASS panels needed the most phase correction and this method nicely tilts their phase. DRC-FIR "flattened" out the phase, but didn't correct the tilt.

The SUB and BASS tilts were off in different directions ( / and \ ). By multiplying the SUB and BASS with their respective corrective flat linear-phase FIRs, the resulting tilts are corrected accordingly ( / and \ become --- and --- ), improving the XO regions.

The subsequent summed test convolutions IR and STEP plots reflected this improvement accordingly by more closely matching the baseline summed XO plots.

It allows the edges of the XOs to sum more accurately and is an improvement over the vanilla DRC-FIR code. This was one of the primary goals of the driver-level correction efforts: correcting each driver's phase individually before summation, INSTEAD of trying to correct phase as an average of 2 drivers (which is impossible with full-range corrections).

This improves phase scenarios that DRC-FIR can't handle ( /|/, \|\, /\ and \/ become ---- instead of an averaged compromise). Now to automate it (eventually doing it directly in code vs. the merge/multiply).

In summary, the PSXO 2*FIR merge/multiply use case becomes a 3*FIR PSXOPHASE merge/multiply use case.

Continuation of the "More on this later" mentioned above:

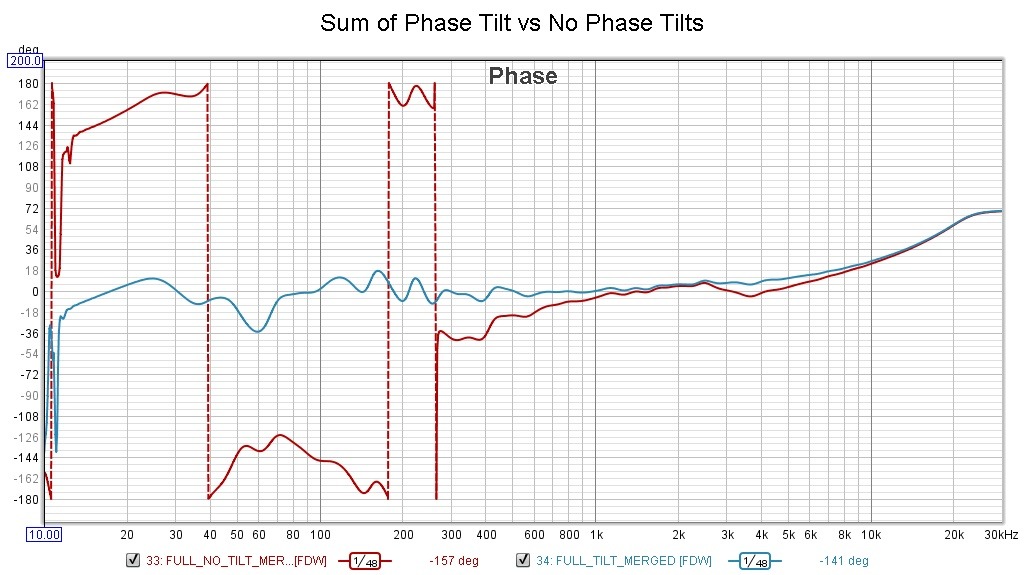

This plot shows how DRC-FIR was flattening out the phase of each driver, but wasn't adjusting the "tilts" (see the 50Hz, 250Hz and 3kHz crossover regions). Merging/multiplying RePhase-generated +/-N degree phase FIRs improves these phase discontinuity steps by leveling their "tilts" so they line up before being summed.

Here is a picture of some OB/Dipole line arrays and OB/Dipole Servo Subs designed by Danny Richie of GR Research. Note they are physically housed in separate baffles (2 baffles/side) with different distances from the listening position. The above functionality could be applied to such configurations. I am running line arrays in 3 different baffles (sub, bass and (mid/high)), so these automated corrections are what I am trying to achieve. My subs are DIY made from Danny's drivers and the rest is a combination of Magnepan drivers and NEO8 midranges.

Other systems such as sub/satellite, Wisdom Audio multi-baffle models, Magnepan multi-baffle models, etc. could also use this functionality.

I just read a thread by Uli, the creator of Acourate (who is light-years beyond me), which appears to confirm my phase approach above.

My first approximation was using a RePhase phase-only FIR to do the correction. Uli takes it one step further by making a matching phase FIR and then inverting it before applying it to flatten out the phase. Much more accurate than my "straight line" first approximation, but basically the same workflow.

We both applied the FIR and then rewindowed it back down to the original size.

Original German Thread

English "Translation" Thread

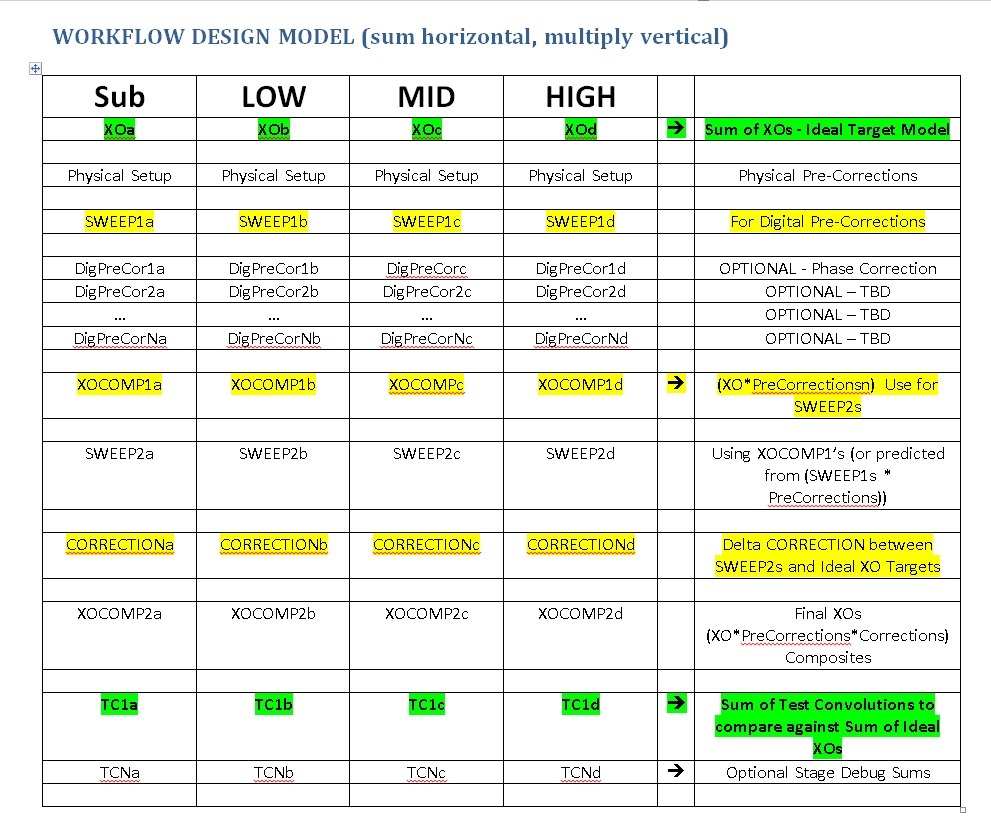

The structure is starting to take shape.

It looks like the physical and digital workflows can all be viewed as table driven with 1 or more drivers.

If a step needs refinement, make adjustments, back up a couple of steps/rows and then advance again in an iterative progression, converging on the final solution.

The beginning and ending green row results (individual and summed) should closely mirror each other on successful implementation.

Based on Uli's post and other threads/videos I have read/viewed (e.g. from Mitcho), there definitely seems to be a need for digital pre-correction stage(s) (e.g. driver phase, sub correction, OB/Dipole considerations, etc.) in addition to the physical pre-correction stage(s) (e.g. speaker placement, toe-in/tilt, initial gains, polarity flips, room treatments, etc.) before the final correction is calculated and composited with the XO and pre-correction(s).

Making the digital pre-correction stage flexible for 0 or more correction types appears to be the thing to do. Since the vertical columns will be merged and retapped down to single FIRs of the same size, there should be few (hopefully no) resulting alignment issues when placed in the convolution engine.

Merge(sums) are represented horizontally.

Merge(multiplies) are represented vertically.
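The table's two merge directions can be sketched as: each column (one driver's chain of XO, pre-corrections and correction) is cascaded by convolution, and the cascaded columns are then summed. The names below are hypothetical, and `np.convolve` is used for brevity; for 256K-tap FIRs an FFT-based convolution is far faster:

```python
import numpy as np


def cascade(stages):
    """Vertical merge: convolve every stage in one driver's column."""
    out = np.asarray(stages[0], dtype=float)
    for stage in stages[1:]:
        out = np.convolve(out, np.asarray(stage, dtype=float))
    return out


def composite(columns):
    """Horizontal merge: sum the cascaded FIR of every driver column."""
    cascaded = [cascade(stages) for stages in columns.values()]
    total = np.zeros(max(len(c) for c in cascaded))
    for c in cascaded:
        total[: len(c)] += c  # shorter columns are zero-padded at the end
    return total
```

A call might look like `composite({"Sub": [sub_xo, sub_phase, sub_ps], "Bass": [...], ...})`, with each cascaded column retapped to the common length before it goes to the convolver.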

Be aware of how or more specifically when you create that phase correction. It might take a bit of time to find the right frequency dependent window.

I've used shorter windows than for my frequency correction (about 3 cycles, active in bass only), but larger (EP) windows than DRC-FIR recommends in my prior corrections.

Lately I've just made sure to get an optimum minimum-phase correction in DRC and used RePhase for phase manipulation (if needed), mainly because doing it like that resulted in a cleaner correction with less pre-ringing.

Inverting too long a (phase) window would likely be too much of a point correction, only valid for that specific (measurement) point. It would let in too much of the room.

I believe both Audiolense and Acourate also use different (window) settings/lengths for phase correction and frequency correction. I recall looking closely at Audiolense for inspiration on what to use for DRC at that time. Basically, the EP stage is the inverting phase stage.
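To get a feel for what "about 3 cycles" means in time: a window spanning a fixed number of cycles shrinks as frequency rises, so it admits less of the room at higher frequencies. A minimal sketch, assuming the window length is simply cycles times the period (DRC-FIR's frequency-dependent windowing is governed by its own parameters, not this formula):

```python
def window_samples(cycles: float, freq_hz: float, sample_rate: float) -> int:
    """Length, in samples, of a window spanning `cycles` periods at `freq_hz`."""
    if freq_hz <= 0 or sample_rate <= 0:
        raise ValueError("frequency and sample rate must be positive")
    return round(cycles * sample_rate / freq_hz)


if __name__ == "__main__":
    for f in (20, 50, 100):
        n = window_samples(3, f, 44100)
        print(f"{f:>4} Hz: {n} samples ({1000 * n / 44100:.0f} ms)")
```

At 44.1 kHz, 3 cycles come to 6615 samples (150 ms) at 20 Hz and 2646 samples (60 ms) at 50 Hz.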

I will keep this in mind.

If you look at Uli's post, it is done at the driver level, not full range. He is also correcting/inverting the phase's "trend curve", which implies a window of sorts. His plots show that all of the room's "hash" still remains after the correction, so not every "wiggle" is corrected.

My "slope/tilt" correction is similar in nature. It corrects the overall tilt of each driver's phase trend, not an exact inverse of each wiggle. The above workflow should be flexible enough to accommodate either approach, or neither.

The detailed corrections should still be handled by DRC-FIR's controls after the pre-corrections, so the user can coarse/fine-tune as desired. Or that is the current plan.
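A rough sketch of correcting only the phase trend: take the unwrapped phase of a driver's response, smooth it, and build an all-pass FIR with the opposite phase. The moving average over linear-frequency bins is a stand-in for a "trend curve" chosen for simplicity; it is not Uli's or Acourate's method, and a fractional-octave smoother would behave quite differently at low frequencies. `trend_phase_inverse` is hypothetical:

```python
import numpy as np


def trend_phase_inverse(h, smooth_bins=31):
    """All-pass FIR whose phase is the negative of the smoothed ("trend")
    phase of h; phase wiggles narrower than smooth_bins are left alone."""
    if smooth_bins < 1 or smooth_bins % 2 == 0:
        raise ValueError("smooth_bins must be a positive odd number")
    h = np.asarray(h, dtype=float)
    n = len(h)
    phase = np.unwrap(np.angle(np.fft.rfft(h)))
    # Moving average with edge padding so the ends are not pulled towards zero.
    half = smooth_bins // 2
    padded = np.pad(phase, half, mode="edge")
    trend = np.convolve(padded, np.ones(smooth_bins) / smooth_bins, mode="valid")
    correction = np.exp(-1j * trend)  # unit magnitude, opposite phase
    correction[0] = 1.0               # DC bin must be real
    if n % 2 == 0:
        correction[-1] = 1.0          # so must the Nyquist bin for even n
    c = np.fft.irfft(correction, n)
    return np.roll(c, n // 2)         # centre the non-causal correction
```

The correction is non-causal, so it is rotated to the centre (a bulk delay of n/2 samples) and would then be windowed/retapped like any other merged FIR.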

Another update:

Added Command Line Interfaces (CLI) to merge and resize/retap (change # of taps) FIRs. The retapping CLI allows you to specify the number of taps, input file format (e.g. F32, F16, INT32, INT16) as well as the final window algorithm to be applied (e.g. Hanning, Rectangle, Blackman, Blackman-Harris, Bartlett, Triangle, etc.).
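For reference, the window names listed above correspond to standard formulas. A stdlib sketch of symmetric versions; the exact variants used by the modified DRC-FIR (especially "Triangle" vs "Bartlett") are an assumption here, and Blackman-Harris uses the common 4-term coefficients:

```python
import math


def _cosine_sum(coeffs, n, size):
    """Generalized cosine window: a0 - a1*cos(x) + a2*cos(2x) - a3*cos(3x) ..."""
    x = 2.0 * math.pi * n / (size - 1)
    return sum(((-1) ** k) * a * math.cos(k * x) for k, a in enumerate(coeffs))


WINDOWS = {
    "rectangle": lambda n, N: 1.0,
    "hanning": lambda n, N: _cosine_sum((0.5, 0.5), n, N),
    "blackman": lambda n, N: _cosine_sum((0.42, 0.5, 0.08), n, N),
    "blackman-harris": lambda n, N: _cosine_sum(
        (0.35875, 0.48829, 0.14128, 0.01168), n, N),
    # Bartlett reaches zero at both ends; this triangle variant does not.
    "bartlett": lambda n, N: 1.0 - abs(2.0 * n / (N - 1) - 1.0),
    "triangle": lambda n, N: 1.0 - abs((2.0 * n - (N - 1)) / (N + 1)),
}


def make_window(name, size):
    """Return `size` window coefficients for the named window."""
    if size < 2:
        raise ValueError("window size must be at least 2")
    f = WINDOWS[name.lower()]
    return [f(n, size) for n in range(size)]


def apply_window(taps, name):
    """Multiply FIR taps by the named window of the same length."""
    return [t * w for t, w in zip(taps, make_window(name, len(taps)))]
```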

The following code snippets show examples of merging (sum and mult) FIRs, using NormFactors to adjust the amplitude of the merged output FIR. Global settings (such as NormTypes, SampleRates, etc.) are made in the X.drc file so they don't have to be added on the CLI, but they can be if desired. The CLI can merge up to 10 FIRs at once (hopefully that is enough, but it can easily be increased).

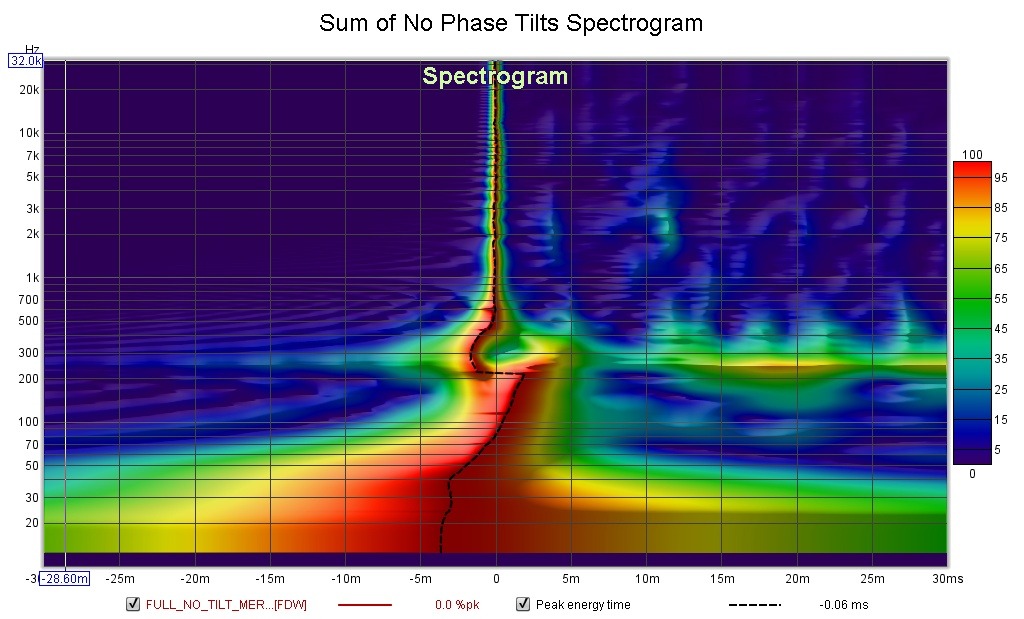

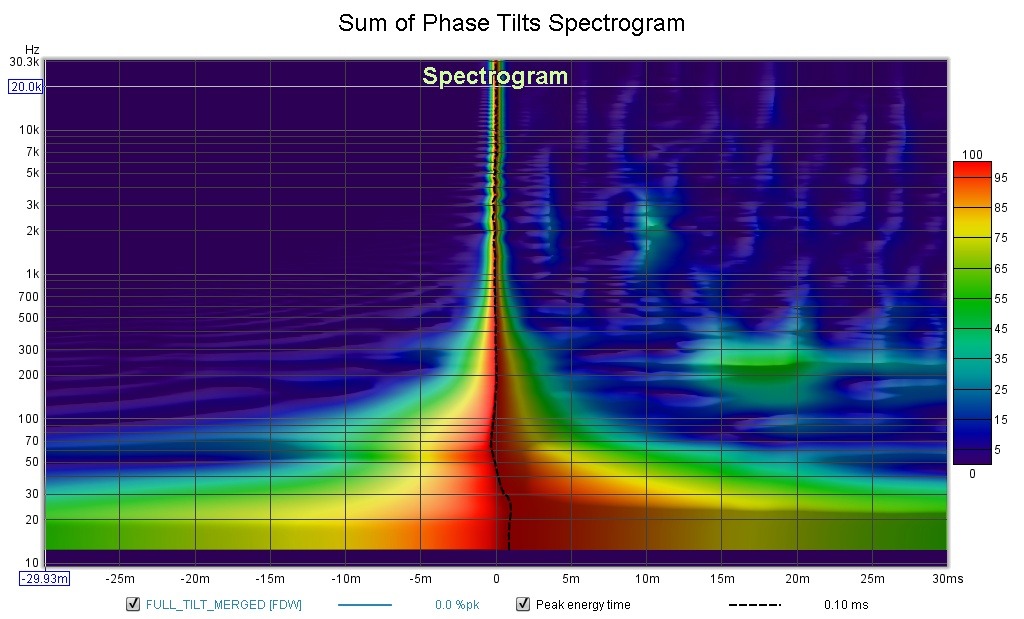

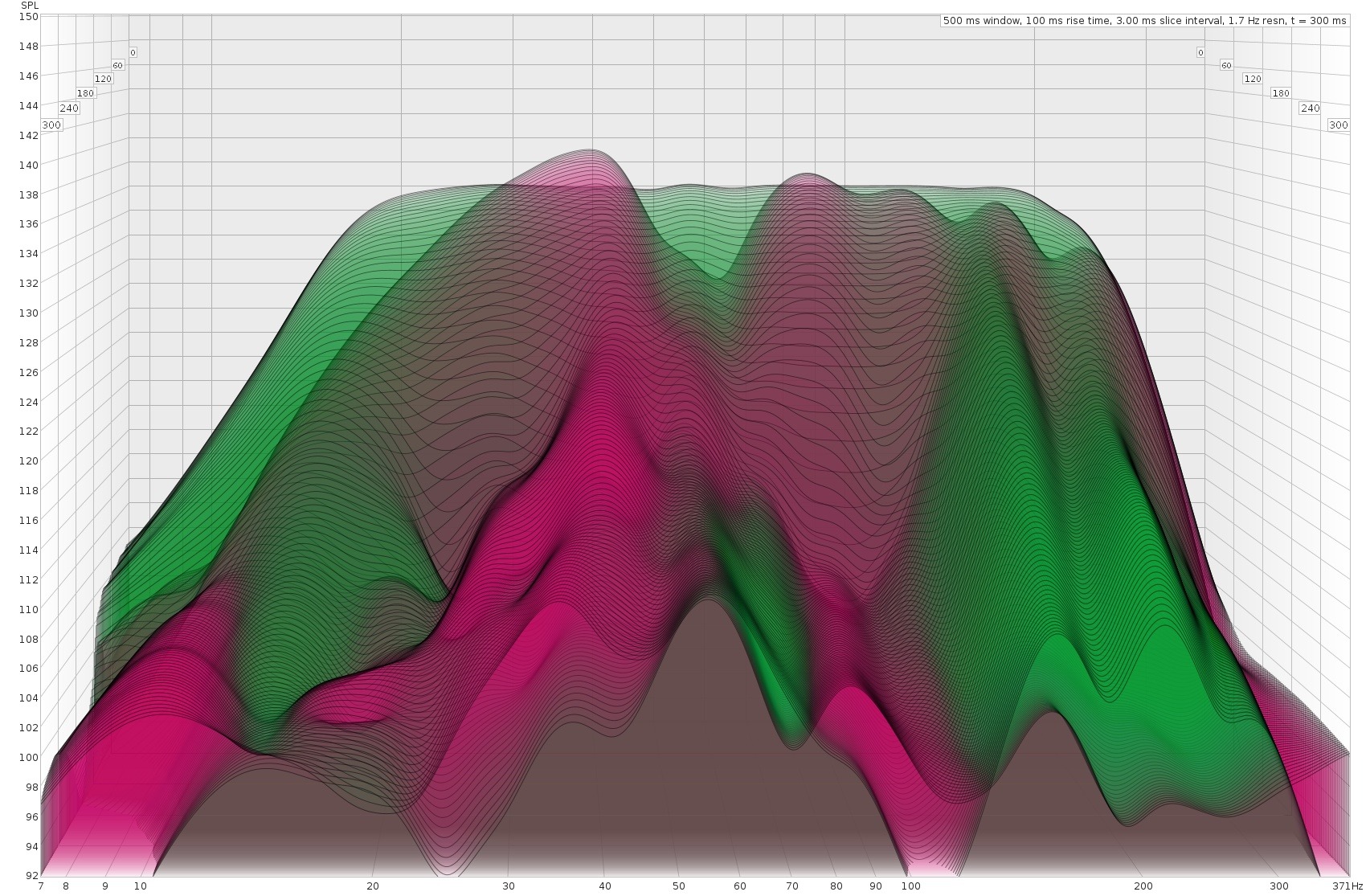

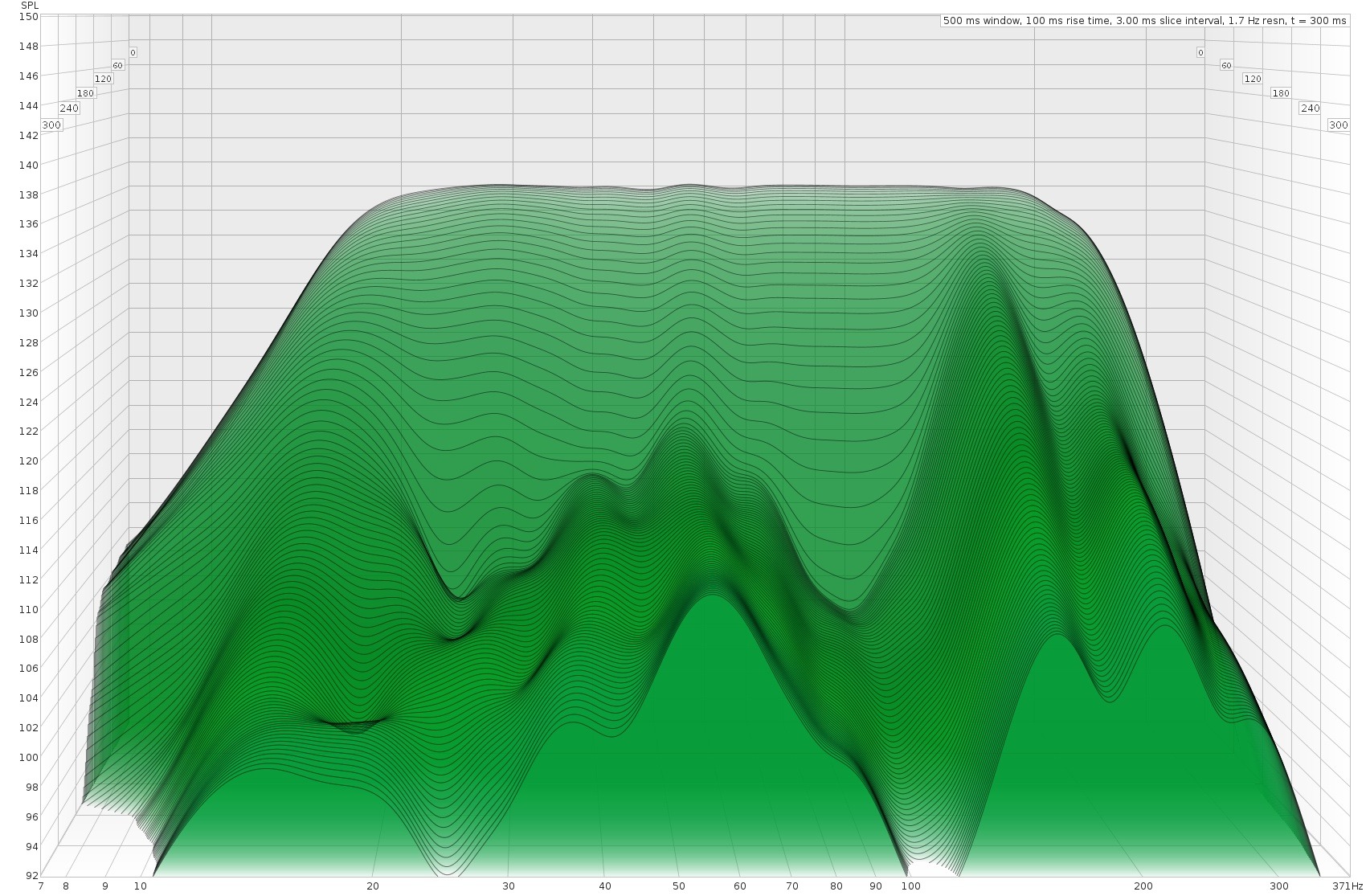

The following plots show the summations of test convolutions of the 4 drivers, both with and without the recently added Phase Tilt correction functionality discussed above. I tried to drop in Uli's phase curves for each of the 4 drivers, centered at 20Hz, 50Hz, 250Hz and 3kHz. If you look closely, you can see each of those corrections. This is one of the major design goal milestones (especially for multi-baffle systems).

The Sub and Bass had their polarities intentionally inverted 180 degrees in the original measurements. If you look at the code snippets below, you will see 180 degree FIRs being merged into the Sub and Bass results in addition to their respective tilt FIRs (3-way merges). The Mid and High only have their tilt FIRs merged (2-way merges). This is a quick first pass, and it looks like the 60Hz dip can be improved upon.
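For reference, here is why a "180 degree" FIR can be merged like any other filter. Assume it is an ideal inverted impulse, optionally delayed by a hypothetical d samples so it lines up with the other 256K-tap FIRs. Its response is then a constant 180° shift on top of a pure delay, so merging it flips polarity without altering the driver's magnitude response:

$$h_{180}[n] = -\delta[n-d] \quad\Longrightarrow\quad H_{180}(e^{j\omega}) = -e^{-j\omega d} = e^{j(\pi - \omega d)}, \qquad \left|H_{180}(e^{j\omega})\right| = 1$$

$$(h * h_{180})[n] = -h[n-d]$$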

The Spectrograms depict the phase alignments of the 3 separate physical speaker baffles a bit more dramatically.

I used this little utility, which takes the FIR's current RMS/NormFactor and the desired delta in dB and returns the new RMS/NormFactor needed to scale the FIR to its desired amplitude. I used it to derive the NormFactors used in the bash script below to balance the amplitudes of all of the drivers. It works quite well.


Code:

```
USAGE: log20 Old relative[RMS|NormFactor] desiredDeltaDB ==> New [RMS|NormFactor]

log20 0.007310 1

Old 0.007310 [RMS|NormFactor] + 1.000000 dB ==> New 0.008202 [RMS|NormFactor]
```

C:

```c
#include <stdio.h>
#include <stdlib.h>   /* atof */
#include <math.h>     /* pow, log10 */

/* Convert a level in dB to a linear RMS/NormFactor value. */
double DbToNormFactorRms(double dB) {
    return pow(10.0, dB / 20.0);
}

/* Convert a linear RMS/NormFactor value to dB (double precision, not log10f). */
double NormFactorRmsToDb(double rms) {
    return 20.0 * log10(rms);
}

/* Compile with [gcc|g++] filename.c -lm */
int main(int argc, char *argv[]) {
    printf("\nUSAGE: %s Old relative[RMS|NormFactor] desiredDeltaDB ==> New [RMS|NormFactor]\n", argv[0]);
    if (argc < 3)
        return 1;   /* both the old factor and the dB delta are required */

    double originalRMSNormFactor = atof(argv[1]);
    double deltaDb = atof(argv[2]);

    /* Convert to dB, add the requested delta, and convert back. */
    double dB = NormFactorRmsToDb(originalRMSNormFactor) + deltaDb;
    double newRMSNormFactor = DbToNormFactorRms(dB);

    printf("\nOld %f [RMS|NormFactor] + %f dB ==> New %f [RMS|NormFactor]\n\n",
           originalRMSNormFactor, deltaDb, newRMSNormFactor);
    return 0;
}
```

Bash script to merge test FIRs via the new CLI of the modified DRC-FIR code.

Code:

```bash
#!/bin/bash

# Adjust phase tilt of HIGH
drc \
  --MGOutFile="/tmp/ramdisk/scratch/HIGH_TILT.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/TC_176400_L_HIGH_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile1="/home/test/RewFilters/12_3K_DEGREE_PHASE_256K.pcm" \
  --MGType="M" --MGOutNormFactor=1.0 ../sample/strong176400.drc > merge1

# Adjust phase tilt of MID
drc \
  --MGOutFile="/tmp/ramdisk/scratch/MID_TILT.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/TC_176400_L_MID_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile2="/home/test/RewFilters/45_PLUS_250_DEGREE_PHASE_256K.pcm" \
  --MGType="M" --MGOutNormFactor=0.391422 ../sample/strong176400.drc > merge2

# Adjust phase tilt of LOW and invert
drc \
  --MGOutFile="/tmp/ramdisk/scratch/LOW_TILT.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/TC_176400_L_LOW_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile1="/home/test/RewFilters/180_PLUS_DEGREE_PHASE_256K.pcm" \
  --MGInFile2="/home/test/RewFilters/90_MINUS_50_DEGREE_PHASE_256K.pcm" \
  --MGType="M" --MGOutNormFactor=0.057157 ../sample/strong176400.drc > merge3

# Adjust phase tilt of SUB and invert
drc \
  --MGOutFile="/tmp/ramdisk/scratch/SUB_TILT.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/TC_176400_L_SUB_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile1="/home/test/RewFilters/180_MINUS_DEGREE_PHASE_256K.pcm" \
  --MGInFile3="/home/test/RewFilters/45_PLUS_20_DEGREE_PHASE_256K.pcm" \
  --MGType="M" --MGOutNormFactor=0.008202 ../sample/strong176400.drc > merge4

# Sum corrected drivers with NO PHASE TILT corrections
drc \
  --MGOutFile="/tmp/ramdisk/scratch/FULL_NO_TILT_MERGED.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/TC_176400_L_HIGH_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile1="/tmp/ramdisk/scratch/TC_176400_L_MID_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile2="/tmp/ramdisk/scratch/TC_176400_L_LOW_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGInFile3="/tmp/ramdisk/scratch/TC_176400_L_SUB_D64_NoPsyc_0.7_1.0_256K_TAPS.pcm" \
  --MGType="S" --MGOutNormFactor=1 ../sample/strong176400.drc > merge5

# Sum corrected drivers with PHASE TILT corrections
drc \
  --MGOutFile="/tmp/ramdisk/scratch/FULL_TILT_MERGED.pcm" \
  --MGInFile0="/tmp/ramdisk/scratch/HIGH_TILT.pcm" \
  --MGInFile1="/tmp/ramdisk/scratch/MID_TILT.pcm" \
  --MGInFile2="/tmp/ramdisk/scratch/LOW_TILT.pcm" \
  --MGInFile3="/tmp/ramdisk/scratch/SUB_TILT.pcm" \
  --MGType="S" ../sample/strong176400.drc > merge6
```


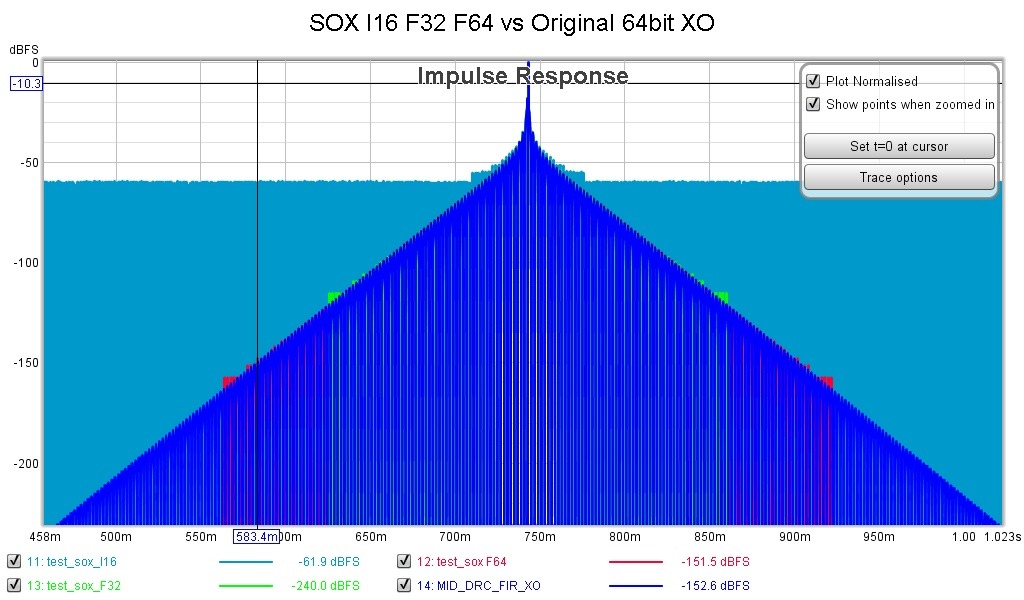

Was doing some unit testing and wanted to share this plot. I thought I had some precision issues and it turned out to be a SoX conversion that slipped into the mix.

16-bit int doesn't look so good in comparison.

- Light Blue - SoX F64 to I16 and back to F64.

- Green - SoX F64 to F32 and back to F64

- Red - SoX F64 to F64

- Dark Blue - Original F64 and Alternate Code.


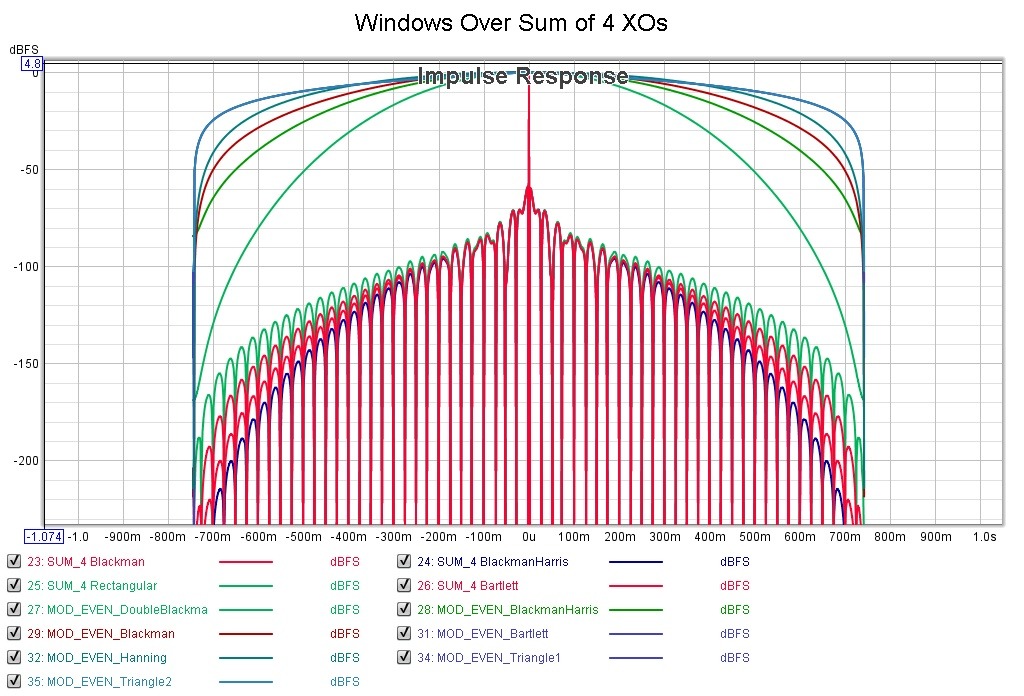

Added the ability to specify which windowing algorithm to use in the modded DRC-FIR code for seasoning to taste.

The upper traces are the windowing algorithms applied to a rectangular/all ones signal.

The lower traces are the same windowing algorithms applied to the summation of all 4 XOs.

This should make the toolkit functions more flexible should someone want something other than the standard Blackman window.


Hi Guys,

I dropped the code mods for a while due to other interruptions and have recently got back to work on it after finishing my new line array baffles. Things appear to be moving along in a positive direction, including getting past a few hurdles.


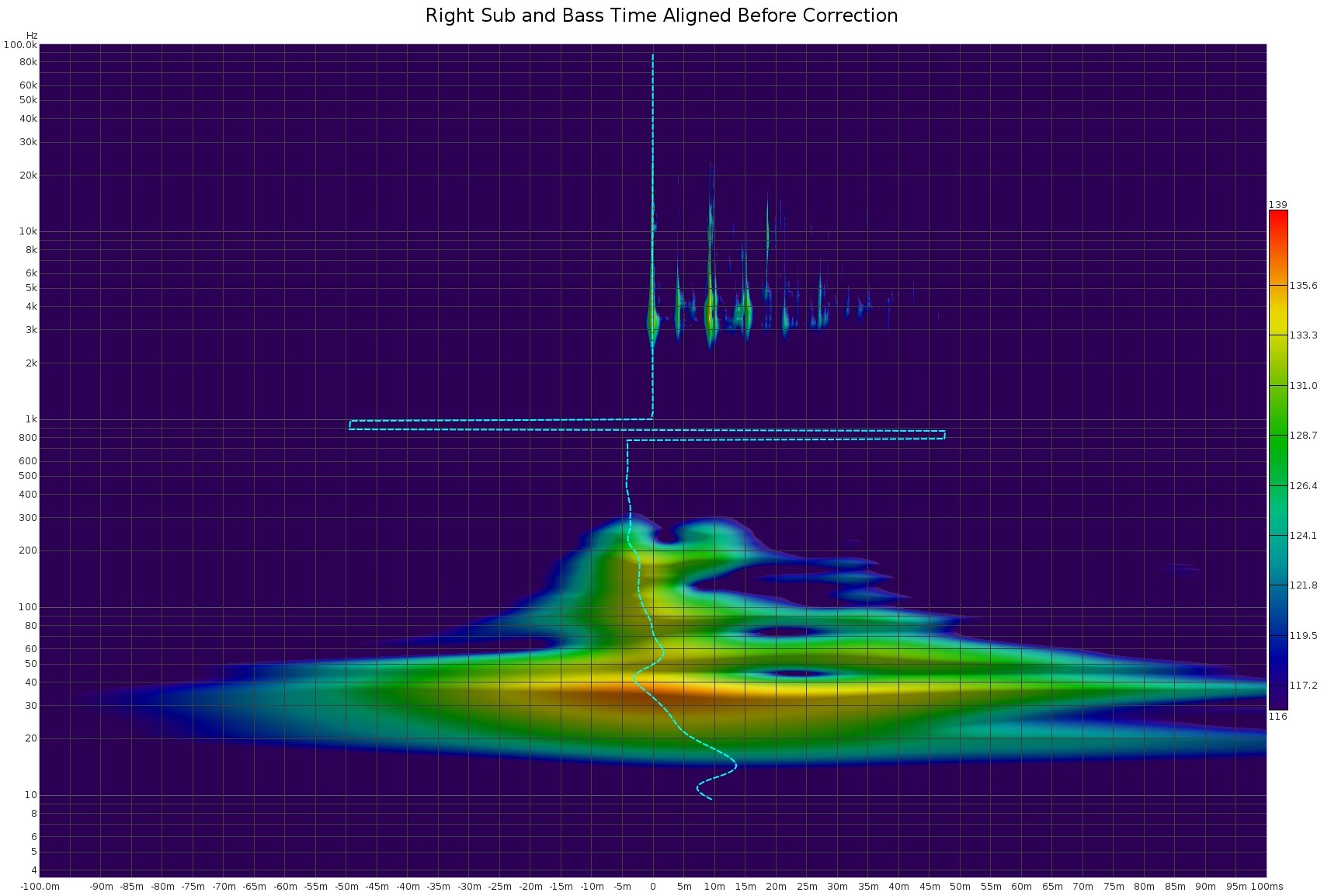

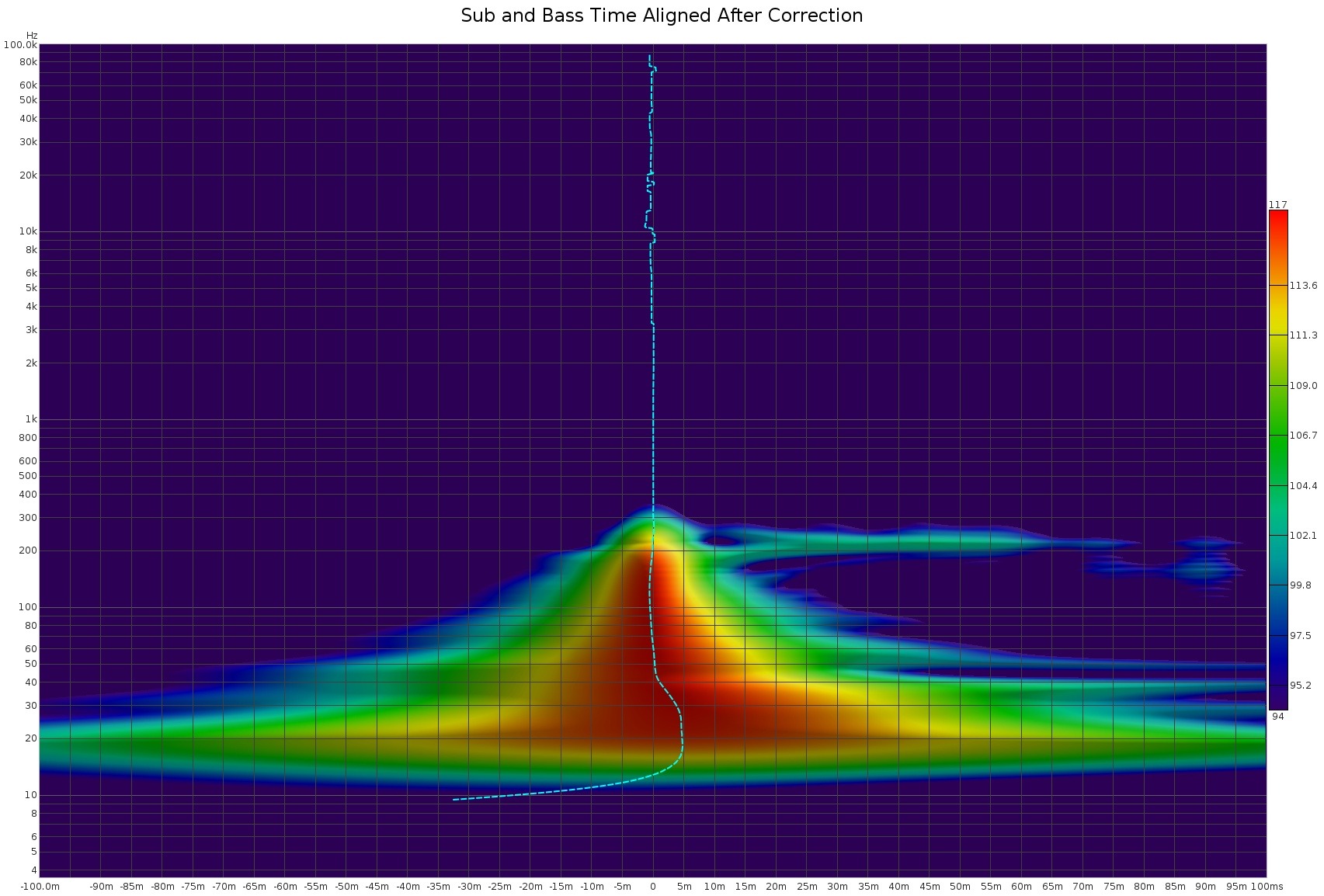

Here is a preview of my sub and bass panels using the aforementioned driver level correction. It's much faster than correcting manually, and I don't have to add shelf filters by hand to extend my DIY sub's bass response.

This workflow allows you to correct drivers below the Schroeder frequency and use the uncorrected baseline XOs above it, or any combination you choose.
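For reference, the Schroeder frequency is commonly estimated with the textbook approximation below, with the reverberation time $T_{60}$ in seconds and the room volume $V$ in cubic meters. The example room is purely illustrative, not the author's room:

$$f_S \approx 2000\sqrt{\frac{T_{60}}{V}}, \qquad V = 50\ \text{m}^3,\ T_{60} = 0.4\ \text{s} \;\Rightarrow\; f_S \approx 2000\sqrt{0.008} \approx 179\ \text{Hz}$$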

Corrected Sub and Bass drivers by themselves.

Time corrected Sub and Bass drivers before and after driver level correction.


To recap:

- Added ability to specify correction targets as band pass baseline XO FIRs created in RePhase or other tools.

- Added driver level correction for more accurate solutions over XO regions for multi-amp applications.

- Multiple-baffle systems will have better integration using driver level integration/corrections.

- Sub, Low, Mid, High (for driver level corrections) and Full for speaker level band pass curves.

- Updated work flows to time align drivers against their respective tweeters before corrections to enhance correction quality.

- Adding changes to make DRC-FIR easier to use to minimize pilot errors.

- DRC-FIR's input windows are specified in samples, so they are sample-rate specific and a given config file won't work across different sample rates.

- Added ability to specify windows in fractional # of cycles or fractional # of milliseconds which is sample rate agnostic.

- Example: In section 4.2, Figure 6 of DRC-FIR's documentation shows Normal correction starting at 20Hz/500ms and ending at 20kHz/0.5ms, which works out to about 9.97 cycles at both the start and stop values. You can now specify milliseconds and/or cycles: 500ms and 0.5ms should behave the same across all sample rates for the Normal correction level, without having to convert them manually for each sample rate.

- Allows each driver's correction to use its own tailored correction intensity profile settings vs just 1 for the whole speaker.

- Example: Sub=Strong, Bass=Normal, Mid=Soft, Tweeter=None, Full=Soft

- Added the ability to output generated FIRs with a user-specified # of taps (larger, smaller, same) so they play nicely as a set in the convolution engine. This also allows you to control FIR latencies.

- Driver corrections are normalized/level matched against their respective target XO FIR's RMS levels.

Inputs:

- RePhase baseline band-pass FIR XOs for each driver and/or speaker

- Driver Sweeps for each driver and/or speaker

- Alignment delays to be placed into your convolution engine.

Outputs:

- Solution sets that can be dragged and dropped into REW for analysis and into your convolution engine.

- Corrected driver FIR XOs

- Corrected whole speaker FIR "XO or band pass filter"

- Intermediate raw files are binned separately for analysis if desired.

- Generated shell scripts and captured logs are binned separately for post inspection as desired.

- Test convolutions for each driver and whole speaker are provided for post analysis before running on hardware.

Convolution Engine:

- Add driver delays

- Add FIR XOs.

- Replace FIR XO with aggregated correction FIR XOs

- Convolution engine's filter map should retain the same topology, just swap Baseline XOs for Fixed XOs.

This allows you to switch back and forth between time aligned baseline XOs and time aligned corrected XOs for A/B comparisons.

One of the complaints about DRC-FIR is that it is manually intensive: it requires manual SoX format conversions and requires the user to keep track of format information that is stored in .wav file headers but not in raw files. This makes it more complex and ripe for pilot error.

After working with SoX, I discovered it uses an internal 32-bit int format, which reduces the numerical accuracy of RePhase's IEEE 64-bit float XO filters whether you use SoX's CLI or its API.

I am investigating using FFMPEG's API for both reading and writing files to eliminate the manual conversion stages, thus simplifying use and minimizing pilot errors. The SoX code base doesn't appear to have been touched in years, whereas FFMPEG's code base appears to be actively maintained. If it works, virtually any lossless format can be used with DRC-FIR, bypassing the need to convert files externally and track the header information manually.

Another mod being mulled over is converting DRC-FIR to use the same actively maintained FFT library that CamillaDSP uses. FFTW is supposedly more accurate and 3-4X faster.


Be careful what you wish for, you just might get it...

What I mean by that is that all this audio manipulation should not be about getting the cleanest pictures, but about getting the most convincing sound.

It's easy to manipulate the audio to make it look great in graphs, but it's much harder to make it sound convincing.

My suggestion (what I've done myself): Experiment with window sizes for all parts of the frequency spectrum and look for the edges of audibility.

I ended up only using phase manipulation at frequencies below about 200 Hz (but I didn't have crossovers above it), and looked for the shortest windows of manipulation that offered the sound improvements I was after. To reach that conclusion, I tried just about everything you could imagine, just to find out what it did.

The most important lesson was to learn how the room contributed to what I heard and how to be able to manipulate (and use) that.


@wesayso,

Understood, but matching the target is an indication of whether the software is functioning as expected.

My goal is for it to work with minimum or linear phase targets or a mix thereof. How closely it matches the target is a function of the settings one chooses to drive the DRC-FIR engine and which frequency ranges or drivers one chooses to correct rather than just using the baseline XOs. The approach should give one wide latitude to do as much or as little as desired, including layering on the full range house curve.

Since my speakers are in 3 separate baffles (like the old Magnepan Tympani), I wanted something that could handle individual drivers at different physical locations and still match phase over the XO regions. Passive XOs would not do that.

What I am currently running is corrected sub and bass drivers over their range up to 250Hz, with the mids and tweeters running their uncorrected baseline XOs and all 4 drivers time aligned in the convolution engine.

