I missed that one. Will go find it.

Be interesting to know what you make of it.

Rule 1: Start by defining the specific question an experiment is supposed to ask. The question Arny wants to ask is "Do any of these files sound different than the reference?" That's not the question Mooly is asking (which is "Do any of these files sound different from any of the others?"), so Mooly's experiment is, not surprisingly, different than one which would answer Arny's question.

It really does help to be explicit.

The distinction above is questionable, because the answers to both questions can answer the same basic question.

If the files don't sound different from the reference, then simple logic tells us that they also don't sound different from each other, and vice versa.

While the questions are different, the answers to them can give the same insights.

However, the above is incorrect because Mooly's question appears to be more like:

"Which of these op amps do you prefer the sound of?".

Again common sense and simple logic might prevail, but it didn't.

For there to be preference based on sound quality, there must be an audible difference, and the existence of an audible difference of any kind was never asked to be investigated.

The existence of an audible difference was presumed, which is a major error, since whether or not there are audible differences is fundamental to any of the questions being asked. I call this "Blind Faith in the universal existence of audible differences", which is common among audiophiles. The cause is their lack of respect for, or understanding of, simple logic, which would lead (and among true audio experts has led) to the use of improved listening test procedures. Please see the relevant writings of Sean Olive and Floyd Toole.

Along the way proper tools for investigating the question as to whether the files sound different were brought to the table, but unfortunately the files were not properly made, and they sounded different for reasons that had nothing to do with the op-amps that were used.

The nature of the improper creation of the files was that they were not timed correctly. I presented this as a requirement right up front, but as usual it was ignored. After all, I'm dealing with people who in their own eyes know far more about audio in general and subjective testing in particular than I. I am in fact the village idiot in their eyes - that's clear. This is common with audiophiles.

Here are the details of the timing error:

I examined each file using an audio editor and identified some characteristic waveforms that related to the start of the recording of the music. I marked each start with a cue mark, and then listed out the timing of the cue mark:

File reference: 0.259 seconds

File A: 0.103 seconds

File B: 0.103 seconds

File C: 0.103 seconds

File D: 0.355 seconds

File E: 0.258 seconds

File F: 0.256 seconds
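To put the cue times above on a common footing, here is a minimal sketch that expresses each file's start as an offset from the reference file, in milliseconds. The cue times are copied from the list above; the dictionary layout and the function name `offsets_ms` are only illustrative choices.

```python
# Cue-mark times in seconds, copied from the list above.
cue_times = {
    "reference": 0.259,
    "A": 0.103,
    "B": 0.103,
    "C": 0.103,
    "D": 0.355,
    "E": 0.258,
    "F": 0.256,
}


def offsets_ms(cues, ref_key="reference"):
    """Return each file's start offset from the reference, in whole milliseconds."""
    ref = cues[ref_key]
    return {
        name: round((t - ref) * 1000)
        for name, t in cues.items()
        if name != ref_key
    }


offsets = offsets_ms(cue_times)
for name, ms in offsets.items():
    print(f"File {name}: {ms:+d} ms relative to the reference")
```

On these numbers, A, B and C start 156 ms earlier than the reference, D starts 96 ms later, and E and F are within 1 to 3 ms of it.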

Timing errors of as little as a few milliseconds (0.001-0.003 seconds) can be reliably heard by a well-trained listener (Me). Even if not cued to the existence of a timing difference of a few milliseconds, a skilled listener may pick it up, and it may be relevant or even crucial to the question being asked for the test.

Having found a fatal error, I didn't go further and check the timing of the ends of the files. They may also be in error.

Of course, the personal attacks and name calling have already begun. I know how Gulliver felt in Lilliput. ;-)

Mooly, one of the files has messed-up timing, circa 100 ms

I just posted the results of a simple timing analysis, and the timing of most of the files was messed up.

Actually, the fact that three files had the same timing is kinda hard for me to explain, since in general it appears that no general effort was made to properly time the files. Why then did 3 of them come out exactly the same?

To facilitate Mooly's look back: I clustered B,C,D, and E on the one hand as indistinguishable from each other, and A and F as different. I found them to be a bit wooly.

Arny, the test was good enough for what it was. You must have spent a lot of time finding fault in it, which you could have also used to present us with something better.

I wonder about this too, particularly when the likes of D Self (who has designed consoles) suggests that everything we hear has already passed through dozens if not a hundred of such devices.

Anybody who has ever even looked casually at the process of producing recordings should have had the above salient fact slammed in their face in a totally undeniable way.

Who needs the esteemed Mr. Self to tell us that air is a gas at STP or something like it?

Simple logic, guys! ;-)

Arny, the test was good enough for what it was.

That would appear to be a baseless assertion that suits a personal agenda.

You must have spent a lot of time finding fault in it, which you could have also used to present us with something better.

BTW, I spent almost no time on this little diversion. Finding faults in naively designed experiments is like finding cows in dairy farms. Follow your nose!

I actually did present something better, along with the tools for doing it and the criteria for applying them, but it was all obviously ignored by many.

See what I say about people thinking I am the village idiot?

Thanks for ignoring it all again!

Ah, now I get it, you are just p*ssing vinegar, simple logic, no D Self needed to understand that. Warning: doing so may lead to penile erosion, making you look like a little you-know-what.

Not at all. I respect D. Self rather highly, but there is this slight matter of my involvement with audio production starting over 40 years ago.

It's really hard to see the logic of obsessing over op amps when you know a few relevant facts, like how many of them (and some pretty crappy ones in many cases) are, for example, in the pro audio gear that is used to make the recordings.

What happened to the simple logic of the weakest link in the chain?

For example, we've seen a lot of very careful detailed looks at the line inputs of devices that are commonly used to make recordings or representative of them, but who has checked out the mic inputs?

What? You've lost me there. You mean there is a difference in the delay before the recording starts? That the files aren't trimmed the same? If so, how does that alter the overall sound?

Or do you mean something else?

What? You've lost me there. You mean there is a difference in the delay before the recording starts? That the files aren't trimmed the same?

Of course.

If so, how does that alter the overall sound?

You've moved the goal posts.

The discussion was about audible differences among the files that were provided, and I have shown that they exist and are easy to hear.

One would hope that the files that were provided were representative of the overall sound, but of course we now know that they aren't.

We also have a little mystery - even though most of the files have random timing, three of them seem to have identical timing. Obviously different processes were used to make them, and that would appear to have some impact on the situation, as well.

To facilitate Mooly's look back: I clustered B,C,D, and E on the one hand as indistinguishable from each other, and A and F as different. I found them to be a bit wooly.

I hinted you had a good result earlier on. The LM833 was originally National's answer to the Signetics 5532, and the late JLH many years ago reckoned that the 833 could possibly be the best available (at the time). So to see the 5532, the 833 and of course the 4562 in any grouping shouldn't come as a surprise.

The TLE2072 is an oddball. I've used that and really wanted to like it but over many weeks didn't. Was it a real problem, though, or was it imagined?

Arny, your timing comments come as a real surprise here. As Pano suggests, timing differences shouldn't matter anyway because it's the music and how it comes across that is the real test.

Arny, you might be correct. So if there is a technical "difference" in file preparation, isn't it surprising if many cannot hear the difference?

I'm not surprised at all that people can't hear actual differences because most audiophile listening evaluations are so flawed that they overwhelm the listeners with false differences and people don't actually get much practice hearing real differences.

Also, there appears to be as yet no evidence that there are audible differences among the files. So it is possible that "no differences" is the right answer.

In fact even the measurable differences among the signal paths appear to be unknown.

Furthermore there appears to be a lot of confusion about the process. I'm confused for sure, because the only schematic of the actual test process appears to be the one I drew based on the narrative of the test, which may be flawed. Nobody has confirmed or denied it.

Arny, your timing comments come as a real surprise here.

I'm sure. They are real facts, and they are relevant. If you thought they were important you would have never made them as they are easy enough to eliminate.

That speaks to prejudice, bias and maybe a little defensiveness.

As Pano suggests, timing differences shouldn't matter anyway because it's the music and how it comes across that is the real test.

Since Pano seems to provide little but personal attacks, I don't know why his comments should be taken as totally and unimpeachably authoritative.

The fact of the matter is that the files were provided for a purpose and due to the timing errors that purpose is frustrated for many who have tried to listen to them.

People have mentioned difference testing and ABX testing and the timing errors can frustrate both.

Well yes that's true. It can cause a glitch in the switch. I think it did for me.

But not everyone was using ABX, so I doubt they would notice.

I'm not surprised at all that people can't hear actual differences because most audiophile listening evaluations are so flawed that they overwhelm the listeners with false differences and people don't actually get much practice hearing real differences.

Arny, I think you came here recklessly. No one has been ignoring you. I think you came with a different perspective from many of us here...

We have been involved with many listening tests before. We know what is required in a listening test. PMA and SY himself have mentioned more than you did here. And SY himself will never want to conduct such a test because he knows the effort required to conduct a controlled test. So I believe many of us have a similar perspective in seeing this test and its purpose. There are many things involved, such as how to attract participants.

You may think that if you cannot hear a difference then you have no right to pick a preference. Then maybe only you and I would do it, and that is not Mooly's intention, I believe.

And you cannot force the idea that the time difference was something "important" (again, it is about perspective). I'm the only one who posted an ABX result, but it is NOT required. The time reference can only help in identifying a difference (a.k.a. ABX), but it cannot help in identifying a preference.

Identifying preference is important. Imagine you are to purchase a speaker, but you can only listen and cannot see the product. Hearing differences alone will not help you make the correct decision.

Go to XRK driver test round 3: http://www.diyaudio.com/forums/full...ind-comparison-2in-4in-drivers-round-3-a.html

The reference file is VERY different from the test clips (so you can say that there is no reference file at all). And the differences between the clips are sooooo huge (much much bigger than in this opamp test). But we are not supposed to say whether we hear a difference; we are supposed to pick a preference!

So, go there and pick one driver that you prefer. Then you can defend yourself later if you pick the cheapest driver with the worst technical specifications. See my point?

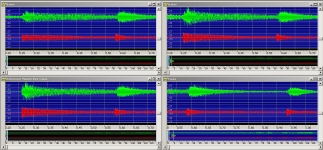

OK, I looked at the files in an editor. I don't find the same start times that you do, unless you were counting the noise floor. See below for what I found.

The waveforms do look different, tho. (notice the scale)

I haven't revealed the criteria I used to establish the timing numbers I provided, so any differences are completely understandable.

This may help:

http://www.diyaudio.com/forums/attachment.php?attachmentid=495579&stc=1&d=1437905632

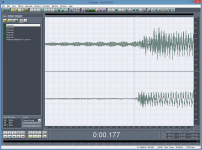

I'm just looking at them now and I can see what you are referring to. I lined all the files up visually by loading them into Audacity and I can see that the timing error you mention occurred and then carried over to the next files.

All I can say on that score is that the primary purpose of all the files is evaluation by listening, and this shows again (as in all the tests) that it always comes down to file analysis.

When you evaluate a source component or amp or whatever you don't have the opportunity to do millisecond perfect side by side comparisons. You just listen and form an opinion.

Gus listening here is one of the few to actually do just that. It's a purely subjective impression of what he heard.
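Lining files up by eye can also be approximated numerically. The following is a minimal sketch, assuming a simple amplitude-threshold criterion for "where the music starts". That criterion is an assumption for illustration only; it is not the criteria behind the cue times posted earlier, which were not disclosed. The signal is synthetic (a low-level noise floor followed by louder "music"), and the names `first_onset_index` and `trim_to_onset` are hypothetical.

```python
def first_onset_index(samples, threshold):
    """Return the index of the first sample whose absolute value reaches
    the threshold, or None if no sample does."""
    for index, value in enumerate(samples):
        if abs(value) >= threshold:
            return index
    return None


def trim_to_onset(samples, threshold):
    """Drop everything before the detected onset so files can be lined up."""
    index = first_onset_index(samples, threshold)
    if index is None:
        return list(samples)  # nothing detected, leave the file untouched
    return list(samples[index:])


# Synthetic example (assumed values): 100 ms of noise floor, then 50 ms of signal.
sample_rate = 1000  # samples per second, chosen only to keep the numbers readable
signal = [0.001] * 100 + [0.5] * 50

# A threshold above the noise floor finds the start of the louder material.
music_start = first_onset_index(signal, threshold=0.1) / sample_rate
# A threshold below the noise floor "finds" the very first sample instead.
noise_start = first_onset_index(signal, threshold=0.0005) / sample_rate

trimmed = trim_to_onset(signal, threshold=0.1)
print(f"start at threshold 0.1:    {music_start:.3f} s")
print(f"start at threshold 0.0005: {noise_start:.3f} s")
print(f"samples kept after trimming: {len(trimmed)}")
```

The same file gives two different start times depending on whether the noise floor counts, which is one way two people can read different cue times off the same waveform.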

Late to this as usual.

I found them all pretty similar but would be happiest listening to C. A was a bit flat.

The reference track was plainly a fraction clearer than all the others but I still found it fatiguing compared with what I am used to. As soon as the voice came in, it seemed unnatural to me and I wasn't comfortable with it.

I was listening on a MacPro via external DAC into Sony TA-F300 and Sennheiser HD518. I'll try something better later. This is my 'snug' system and the Quad ESLs may reveal more.
