Almost all published blind tests of amplifiers have come to a null result, unless there was a tube.

Do you have any examples of published tests coming to the former result?

ABX Double Blind Test Results: Power Amps

A Swedish magazine that uses what they call a "before and after" test has, however, come to a very different conclusion. Only three amps (a Bryston, an older modified NAD and an older Rotel) have been considered transparent and passed their blind test. All the other amps, and they have tested quite a few, have shown some kind of audible distortion and coloration.

Amplifier Test Method

I think a blind test can easily give the result one is looking for or give an answer that's not very objective. There are so many factors that can play a role.


It was not the models that were to blame, it was the decision makers, both in Congress and the board rooms.

I thought they all went hand in hand. 😉

Ah! There is a solution: national debts to fund these idiocies.

I think a blind test can easily give the result one is looking for or give an answer that's not very objective. There are so many factors that can play a role.

Of course sighted tests don't do that! Really!?

There are no perfect tests or absolute results, but using this excuse to throw out all data that you don't like is simply closed minded.


*Snip*

All that effort to make their cabs and they didn't bother rounding the outer edge? Polished amateur-hour is still amateur-hour!

Did you see this?

Avantgarde Zero in polyurethane

Earl,

There are no perfect tests or absolute results, but using this excuse to throw out all data that you don't like is simply closed minded.

After (finally) looking over your white paper, "Subjective Testing of Compression Drivers", I see that the test protocol you used and the one I used in:

http://www.diyaudio.com/forums/multi-way/212240-high-frequency-compression-driver-evaluation.html

are quite similar, with the following differences:

1) Rather than using a plane wave tube, I recorded the output of various drivers on a narrow conical horn on a large flat baffle.

The horn used has a response similar to a plane wave tube above 800 Hz, so using a horn rather than a plane wave tube seems to make little difference.

2) Rather than using one EQ for all the drivers, I used individual EQ for each driver. This reduces the frequency-response differences between the sources, subjectively minimizing or eliminating differences at low drive levels.

3) Rather than comparing three (high) voltage levels covering only a 6 dB range, each resulting in high levels of distortion (5-10% at 1000 Hz, rising to 10-35% in the 4-9 kHz range), my tests were done over an approximately 15 dB range, at levels producing distortion from much lower than to higher than in your test.

4) Rather than having college students compare three high levels of distortion with each other, my test allows anyone who cares to compare the original source recording with the equalized response of drivers played back at various levels.
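To put the distortion percentages in point 3 on the same dB scale as the level ranges, a percentage converts to a level relative to the fundamental as 20·log10(percent/100): 5% is about -26 dB, 10% is -20 dB and 35% is about -9 dB. A minimal Python sketch; the function name is only illustrative:

```python
import math

def thd_percent_to_db(percent: float) -> float:
    """Distortion percentage -> level in dB relative to the fundamental (amplitude ratio)."""
    if percent <= 0:
        raise ValueError("percent must be positive")
    return 20.0 * math.log10(percent / 100.0)

# The percentages quoted in point 3
for pct in (5, 10, 35):
    print(f"{pct:>3}% -> {thd_percent_to_db(pct):6.1f} dB")
```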

I don't doubt your conclusion that at the three high levels of distortion, the three tracks were statistically similar in rating.

However, the distortion attributes of the drivers may differ as much as their linear distortion (frequency response) does, so I question your conclusion that the subjects were only detecting linear, rather than non-linear, distortion differences.

I agree completely with your observation:

"In hindsight it is apparent that the addition of a dummy source, one that was in fact the reference, would have added value to the interpretation of the results. It is most unfortunate that this possibility was not seen beforehand."

My test allows for that possibility; you may revise your conclusion after listening to the different drivers at different voltage levels yourself.

All the drivers' equalized (linear) recorded responses sound virtually the same as the source recording at low drive voltages (if you hear differences, please comment), but they sound different at high levels (again, anyone not hearing those differences, please comment). My conclusion is therefore that differences in non-linear distortion do make drivers sound different at high input voltages.

Art


Art, I don't believe that this is true. There was statistical significance across drivers, but not across levels. Had the distortion been significant, even if it differed across the drivers, it should have been detected by the level change. As I recall, those level changes altered the measured THD by an order of magnitude. Based on the results, your claim simply cannot be correct.
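A sketch of the kind of comparison behind "significance across drivers, but not across levels": a one-way ANOVA F statistic, computed once with the ratings grouped by driver and once grouped by level. This is an illustration only. The paper's exact analysis isn't described here, and a driver × level design would properly call for a two-way (or repeated-measures) ANOVA. The function name and the ratings below are hypothetical.

```python
def one_way_f(groups):
    """One-way ANOVA F statistic for a list of groups of ratings.

    A large F means the group means differ more than the within-group
    scatter explains; compare it with an F table at (k - 1, N - k)
    degrees of freedom to judge significance."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more ratings than groups")
    means = [sum(g) / len(g) for g in groups]
    grand = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group scatter; F is undefined")
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

# Hypothetical ratings for three drivers, purely to show the call
by_driver = [[2, 3, 2, 3], [5, 6, 5, 6], [3, 4, 3, 4]]
print(f"F across drivers: {one_way_f(by_driver):.1f}")
```

Running the same function on the ratings regrouped by drive level gives the "across levels" figure.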

How do we know that the distortion in your test is not somewhere else in the system?

If neither of us made an error in our experimental design and implementation, then the only explanation that I can see is that we were testing at different absolute levels, yours being much higher than ours at your highest levels.

In neither of our experiments were we sensitive to diffraction effects, and that's a serious limitation, especially when dealing with horns.

After some ten years of researching this specific topic (not horns in general, just the audibility of "harshness/distortion" in them), my conclusion is that internal diffraction effects, HOMs (higher-order modes), etc. are the most likely cause of poor sound in a horn/waveguide device, and that nonlinear effects, while they may be present (although the data here is controversial), are not significant enough to outweigh the negative effects of diffraction.


Art, I don't believe that this is true. There was statistical significance across drivers, but not across levels. Had the distortion been significant, even if it differed across the drivers, it should have been detected by the level change.

1) As I recall, those level changes altered the measured THD by an order of magnitude. Based on the results, your claim simply cannot be correct.

2) How do we know that the distortion in your test is not somewhere else in the system?

3) If neither of us made an error in our experimental design and implementation, then the only explanation that I can see is that we were testing at different absolute levels, yours being much higher than ours at your highest levels.

1) The three levels you tested at are not an order of magnitude apart in terms of either distortion or level. They span only 6 dB, and the distortion differs by only around 4 dB to 6 dB.

Had you included the 2.83 V level, there would have been an order of magnitude difference.

Given three level choices, levels spanning orders of magnitude would reveal differences far better than three levels within only a 6 dB range.

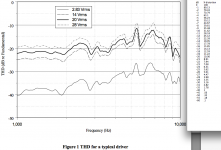

2) I am confident that the recording system is not the source of the distortion we hear: the B&K 4004 mic and the digital recorders used have virtually no distortion, while the driver distortion is easily measurable, just as you measured in "Figure 1" posted in #6605.

I was actually surprised that the distortion was not as apparent on the recordings as it was live.

3) The drive levels I recorded at were less than, equal to, and greater than your test levels, assuming you used 8 ohm nominal drivers.

This makes the difference in distortion far greater and more apparent than in your test protocol.
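The level arithmetic behind this exchange, as a small Python sketch. It assumes amplitude quantities (20·log10), so a 6 dB span is about 2× in voltage, a 15 dB span is about 5.6×, and an order of magnitude in voltage is 20 dB (in power terms it would be 10 dB). It also treats the nominal 8 ohm impedance as a pure resistance, which a real compression driver is not, and it does not model how THD grows with level, which is driver-specific. The 2.83 level is conventionally 2.83 V, about 1 W into 8 ohms. Function names are illustrative.

```python
import math

def db_to_amplitude_ratio(db: float) -> float:
    """Voltage/pressure ratio for a level change in dB (20*log10 convention)."""
    return 10.0 ** (db / 20.0)

def amplitude_ratio_to_db(ratio: float) -> float:
    """Level change in dB for a voltage/pressure ratio."""
    return 20.0 * math.log10(ratio)

def nominal_power(volts_rms: float, ohms: float = 8.0) -> float:
    """P = V^2 / R, treating the driver as a pure resistance (nominal only)."""
    return volts_rms ** 2 / ohms

print(f"6 dB span   -> x{db_to_amplitude_ratio(6):.2f} in voltage")
print(f"15 dB span  -> x{db_to_amplitude_ratio(15):.2f} in voltage")
print(f"x10 voltage -> {amplitude_ratio_to_db(10):.0f} dB")
print(f"2.83 V into 8 ohm -> {nominal_power(2.83):.2f} W")
```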

Art


Art

I accept your critique of our experiment; had I to do it over again, I would make changes.

My critique of your experiment is that it is not blind, which in my book is the first requirement for any study on audibility.

Your critique is valid, though the labeling for each of my recordings is so arcane that even I can't remember what all the recording conditions are without looking them up on the cheat sheet.

For the average person, the labels would have about the same value as diamonds, squares and triangles.

You could have Lidia play the recordings for you blind, with an A/B to the original source recording.

If your hearing is anything like mine, I'm sure you will be able to easily detect the recordings with high drive levels, and I doubt you will notice much if any difference between drivers played at the lower levels.

I have faith you will also hear the difference in the distortion "character" between the drivers.

I trust our old ears a lot more than 27 blind college kids from 2005 😉.

Art

You can test a lot of things without recruiting helpers. For example:

foobar2000: Components Repository - ABX Comparator
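An ABX tool like this reports how likely a score is under pure guessing. Below is a sketch of that calculation as a one-sided binomial test with a 0.5 chance of a correct answer per trial. This is the standard calculation, not necessarily the exact code the foobar2000 component uses, and the function name is illustrative.

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """One-sided binomial p-value: probability of scoring >= `correct`
    out of `trials` by guessing (p = 0.5 per trial)."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials

# Example: 12 of 16 correct
print(f"{abx_p_value(12, 16):.4f}")
```

A score of 12 of 16 works out to 2517/65536, roughly a 4% chance of doing that well by guessing.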


Your data could make for a very nice test, although I am not about to run it.

Randomize the data and present it, with repeats, to 20 people. You would have to control the playback conditions, however. According to your claims, which I suspect are true, the data will show audibility staying flat and then rising with level. You could then find the level above which the distortion becomes audible: the threshold. If this point is above where we did our tests, then we are in complete agreement. If not, then something is amiss. If it is close, then it's probably within experimental error. In any case, seeing that data would be very interesting to me, and certainly worth publishing (if you care about that sort of thing).
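A minimal sketch of that procedure in Python, under stated assumptions. Each trial is taken to be a two-alternative task (for example ABX against the source recording), so chance is 50%. Results are pooled across listeners as if the trials were independent. The threshold is taken as the lowest level whose score is significantly above chance, with no correction for testing several levels. The recording labels and scores are made up purely to exercise the functions.

```python
import random
from math import comb

def make_schedule(conditions, repeats, seed=None):
    """Randomized presentation order in which each condition appears `repeats` times."""
    rng = random.Random(seed)
    trials = [c for c in conditions for _ in range(repeats)]
    rng.shuffle(trials)
    return trials

def binomial_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more hits by guessing."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))

def estimate_threshold(results, alpha=0.05):
    """Lowest level at which detections are significantly above chance.

    `results` maps drive level (dB) -> (correct, total), pooled over listeners.
    Returns None if no level reaches significance."""
    for level in sorted(results):
        correct, total = results[level]
        if binomial_tail(correct, total) < alpha:
            return level
    return None

# Hypothetical recording labels and made-up scores, purely to show the calls
schedule = make_schedule(["driverA_-9dB", "driverA_0dB", "driverA_+6dB"], repeats=3, seed=1)
print(schedule)
scores = {-9: (22, 40), -3: (21, 40), 0: (24, 40), 6: (33, 40)}
print("threshold:", estimate_threshold(scores), "dB")
```

Giving each listener a different seed yields a different order per person while keeping the number of repeats of each condition fixed.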

I would even trust blind college students. Did I ever mention that in one of our tests, the blind college students were statistically more stable than the "pro" listeners at a major audio company? It takes a serious experimental design to determine that, and it was quite a shock. My only guess was that the "pros" were trying to second-guess the test and were hearing things that weren't there. Of course, that never happens ... does it!?!

In all of my subjective tests almost nothing ever comes out like you would expect it to. That's why I don't trust sighted tests. They always seem to come out just like the listener expects them to. Funny how that happens.

The Klippel pages carry a nice distortion listening test, to get an idea of what level of distortion is audible! The distortion is in the signal, so use good headphones or good speakers at moderate levels.

Listening Test

Klippel pages carry a nice distortion test, to get an idea of what level of distortion is audible!

For that character of distortion. A different character, i.e., a different shape of the transfer nonlinearity, will have a different level of audibility. This is paramount to understanding the perception of distortion.
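To illustrate that the shape of the transfer curve sets the harmonic signature, here is a stdlib-only Python sketch. It drives two hypothetical memoryless curves with a sine and reports harmonic levels relative to the fundamental. A symmetric curve (soft clipping) produces only odd harmonics, while a one-sided quadratic bend produces a 2nd harmonic. Real drivers are not memoryless, so this only shows why a single distortion figure hides the character.

```python
import math

def harmonic_levels(transfer, n_harmonics=5, n=4096, cycles=8):
    """Drive a memoryless `transfer` curve with a unit sine and return the
    levels of harmonics 2..n_harmonics in dB relative to the fundamental.

    An integer number of cycles per block puts every harmonic exactly on a
    DFT bin, so no window is needed. Harmonics at numerical-noise level are
    reported as -inf."""
    x = [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]
    y = [transfer(v) for v in x]
    amps = []
    for h in range(1, n_harmonics + 1):
        k = h * cycles  # DFT bin of harmonic h
        re = sum(y[i] * math.cos(2 * math.pi * k * i / n) for i in range(n))
        im = sum(y[i] * math.sin(2 * math.pi * k * i / n) for i in range(n))
        amps.append(2 * math.hypot(re, im) / n)
    fund = amps[0]
    return [20 * math.log10(a / fund) if a > 1e-12 * fund else float("-inf")
            for a in amps[1:]]

def soft_clip(v):
    """Hypothetical symmetric curve: odd harmonics only."""
    return math.tanh(1.5 * v) / 1.5

def one_sided(v):
    """Hypothetical asymmetric curve: adds a 2nd harmonic."""
    return v + 0.1 * v * v

for name, f in (("symmetric", soft_clip), ("asymmetric", one_sided)):
    print(name, ["%.1f" % d for d in harmonic_levels(f)])
```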

Changes of diffraction behavior based on amplitude will show in changing correlation between two measurement points.
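One possible way to quantify this, offered as a sketch rather than a prescribed method: record the same signal at two microphone positions at a low and a high drive level, then compare the normalized correlation between the two positions at each level. If the radiation (including diffraction) did not depend on level, the two correlations should match. The sketch assumes the recordings are time-aligned and of equal length; a fuller analysis would more likely look at coherence per frequency band. The variable names are hypothetical.

```python
import math

def normalized_correlation(a, b):
    """Zero-lag normalized correlation (Pearson r) of two equal-length,
    time-aligned recordings; 1.0 means identical waveform shape."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length signals with at least 2 samples")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if den == 0:
        raise ValueError("a constant signal has no defined correlation")
    return num / den

# Hypothetical usage with recordings at two mic positions:
# r_low = normalized_correlation(mic1_low_drive, mic2_low_drive)
# r_high = normalized_correlation(mic1_high_drive, mic2_high_drive)
# A level-independent radiation pattern should give r_low close to r_high.
```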

Yes, that Klippel distortion test is a sideline here. It is a recording of a full-range speaker. Full-range drivers usually have their worst distortion in the bass, unlike waveguide/horn mid-tweeters.

More generally, on the audibility of loudspeaker distortion: http://www.klippel.de/uploads/media/Speaker_Auralization-Subjective_Evaluation_of_nonlin_Dist_01.pdf

and Human Hearing - Distortion Audibility Part 3 | Audioholics (links at the end of the page)

I would even trust blind college students. Did I ever mention that in one of our tests, the blind college students were statistically more stable than the "pro" listeners at a major audio company? It takes a serious experimental design to determine that, and it was quite a shock. My only guess was that the "pros" were trying to second-guess the test and were hearing things that weren't there. Of course, that never happens ... does it!?!

This is the problem I have with every HiFi system review I have ever read. Much as I would love to take what they say at face value; much as I would love to believe that their conclusions will be reflected in my listening experience, it is impossible for me to suspend my disbelief. There is just no way around the fact that they are listening through their eyes and prejudices, just as I would be if I were in their shoes, sitting in front of megabuck audio eye candy.

Years ago, I came to the conclusion that there was only one type of subjective reviewer I would ever be able to believe--a blind one.

Wouldn't it be awesome if a blind person--or better yet a group of several, male and female over a range of ages--would audition gear and write reviews BEFORE being told anything about the systems they were reviewing? Now, THOSE reviews I would read with great interest!

Actually, it is a fantasy of mine to put on a blindfold and be led by the hand through a high-end show, room to room, listening and recording my observations without prejudice. It wouldn't rise to the level of a scientific study, but it would be a great personal education about my own listening prejudices, especially if I were to go back after the blind auditions and do sighted auditions and compare them to my first impressions.


Bill

I could not agree with you more.

Quite seriously, whenever I do listen, I close my eyes. Not sure why, I mean I already know what I am listening to so the biases are already there, but I just find the visual a distraction.

One interesting thing that happened to me once at a show: I was listening in a typically small room and I was seated with my eyes closed. I heard something odd in the playback, not something overt, kind of subtle. When I opened my eyes, I found that someone had walked between me and the speakers. What startled me was how subtle the effect was. For those who believe that the direct sound is all important, how do you explain that observation? Try it sometime - blind of course.

When I'm really listening, I tend to close my eyes, too.

Regarding the subtle truths of blind listening, that's why I'm afraid it will never become a widely accepted standard. Truly blind listening requires too much humility, as it reveals how subtle or even inaudible the differences are between things that our eyes and prejudices insist are huge differences. It faces us with the actual limits of human auditory perception, and it points out how heavily our listening experience is informed by our other senses and our worldview.

I expect the findings of a truly blind auditioner comparing two pieces of gear would often be inconclusive. In fact, I would doubt their veracity if they weren't!

Of course, this is diametrically opposed to the audiophile philosophy where we rate the golden-eared gurus by their ability to "identify" the subtlest of nuances, no matter whether real or imagined.

The blind shall lead the blind. 😀
