Hello Barleywater

Have you ever recorded a music signal through a speaker and compared it to the music signal? Or square waves? What level of accuracy have you achieved?

No

No

I use traditional measurements. What you posted looks good overall but it doesn't match. How can you be sure what your accuracy is based on what you posted?? WAG?? More importantly where is there any correlation between what you posted vs. what's audible and meaningful.

That's the point I was making when I said they don't match. Is the mismatch significant or not based on what??

Rob🙂

Barleywater, would you mind posting impulse responses (the data, not the graphics) recorded at the listening position, L and R speakers? That would be way more useful than those waveforms you presented. EDIT: those look really excellent, of course.

If you have achieved this level of input/output matching there is hardly any room for errors, don't you see?

More importantly where is there any correlation between what you posted vs. what's audible and meaningful.

What's audible and meaningful to you, you've got to find out yourself...

If you have achieved this level of input/output matching there is hardly any room for errors, don't you see?

Hello KSTR

No, I am being dense, so just poke me in the eye. I have thick skin so don't worry. What level has been achieved here? Where is your benchmark?

What's audible and meaningful to you, you've got to find out yourself...

From a subjective standpoint, yes; from a measurement standpoint, I don't agree. Measurements presented on a public forum should be in a standard format. It makes the conversation a lot easier if we are all talking the same language.

Rob🙂

Earl,

I am wondering whether you have tested your waveguide without the rounded lip termination? How do the measurements look, and how does it sound?

Mouth termination is the appropriate description. Yes, I have. The measurements are terrible, just like I expected. How does it sound? I don't know, why would I want to listen to something that measured that bad? 😕

On purpose I avoided any term like "sound" or "audible". Perhaps I should have written "The reproduction looks impressive".

My superposition is meant to show that even the most primitive visual comparison reveals differences in the acoustical domain.

Rudolf

I missed the subtlety. I should have known!

If you have achieved this level of input/output matching there is hardly any room for errors, don't you see?

What's audible and meaningful to you, you've got to find out yourself...

I see now - yes, I didn't see it before. There is just the right amount of room reverberation and coloration to offset the obvious emphasis on the high end. (Did I get that right?)

That was just a bad joke

It is indeed quite impressive.

Once the phase is linear, such comparisons are possible. Have you tried subtracting the two tracks to listen to the residual differences?

If the phase and amplitude are linear, all that should remain will be nonlinear distortions (IMD, harmonic, thermal, ...). It would be interesting to really "hear" these isolated from the original signal.

In an anechoic setting the difference signal would be nonlinear distortion only, but here the room reverberation from preceding signals is also involved. Also, the speaker's passband is narrower than the recording bandwidth. When the speaker's all-pass response is applied to the recording, the time-domain responses are more closely matched. With systems that are not aligned to linear phase, the system's underlying all-pass filtering may be applied to the test signal, producing a null for comparison with the response recording.
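For readers who want to try the subtraction discussed above, here is a minimal Python/NumPy sketch of a null test. It assumes the source and response are already time-aligned float arrays at the same sample rate, and that any all-pass correction has already been applied to the source. The least-squares gain match and the name `null_residual` are illustrative choices, not the poster's actual tooling. In a room, the residual will contain reverberation as well as nonlinear distortion, as noted above.

```python
import numpy as np

def null_residual(source: np.ndarray, response: np.ndarray):
    """Hypothetical null-test helper.

    Assumes `source` and `response` are already time-aligned.
    Returns (residual, gain, residual level in dB relative to the response).
    """
    n = min(len(source), len(response))
    s = np.asarray(source[:n], dtype=float)
    r = np.asarray(response[:n], dtype=float)
    # Least-squares gain g minimizing ||r - g*s||^2
    g = np.dot(s, r) / np.dot(s, s)
    residual = r - g * s
    # Energy of what is left after the null, relative to the response energy
    level_db = 10.0 * np.log10(np.sum(residual ** 2) / np.sum(r ** 2))
    return residual, g, level_db
```

Usage: `residual, g, level_db = null_residual(source, response)`; a more negative `level_db` means a deeper null. The residual array is what one would listen to.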

Alignment for the visual presentation was done manually, accurate only to within a single-sample offset of what visually looks like correct alignment. The alignment target was the apparent signal peaks; alignment may also target zero crossings. Narrow band-pass filters applied to the source and response signals may be used to check waveform fidelity within chosen bands.
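Manual peak alignment can be cross-checked numerically. The sketch below (hypothetical helper names, NumPy only) estimates the integer-sample lag from the peak of the cross-correlation, then compares source and response within one band using a crude FFT-mask band-pass. A real analysis would likely use proper filters and sub-sample interpolation. `np.correlate` is O(N·M), so this suits short excerpts such as a single drum hit.

```python
import numpy as np

def estimate_lag(source, response):
    """Integer-sample lag of response relative to source (positive = response is late)."""
    corr = np.correlate(response, source, mode="full")
    # Output index i corresponds to lag i - (len(source) - 1)
    return int(np.argmax(np.abs(corr))) - (len(source) - 1)

def align_to_source(source, response):
    """Shift response so it lines up with source; returns (lag, aligned response)."""
    lag = estimate_lag(source, response)
    if lag >= 0:
        aligned = response[lag:lag + len(source)]
    else:
        aligned = np.concatenate([np.zeros(-lag), response])[:len(source)]
    return lag, aligned

def bandpass_fft(x, fs, lo, hi):
    """Crude brick-wall band-pass by zeroing FFT bins outside [lo, hi] Hz (illustration only)."""
    X = np.fft.rfft(x)
    f = np.fft.rfftfreq(len(x), 1.0 / fs)
    X[(f < lo) | (f > hi)] = 0.0
    return np.fft.irfft(X, len(x))

def band_similarity(source, response, fs, lo, hi):
    """Normalized correlation (1.0 = identical shape) of the two signals within one band."""
    n = min(len(source), len(response))
    a = bandpass_fft(np.asarray(source[:n], float), fs, lo, hi)
    b = bandpass_fft(np.asarray(response[:n], float), fs, lo, hi)
    return float(np.dot(a, b) / np.sqrt(np.dot(a, a) * np.dot(b, b)))
```

Usage: `lag, aligned = align_to_source(source, response)` followed by `band_similarity(source, aligned, fs, 500, 2000)` for each band of interest.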

Indeed, I have subtracted the source signal from the response recording and listened to the result with ear buds. It sounds like my living room. I base this on what I hear in the room when playing very short broadband bursts. With broadband bursts I can hear echoes coming from the living room, from the dining room connected by a doorway, from the kitchen connected to the other end of the dining room by a second doorway, and from the stairwell in the corner of the living room that leads upstairs. Many of these reflections can be heard with a good solid hand clap too. The living room even has wall-to-wall carpet and an acoustic tile ceiling.

Also with ear buds, I've listened to the source in the left ear and the difference signal in the right. The sound is perceived as coming from the left ear, with some of the lower frequencies perceived as centered in my head. This is an excellent entry point into examining localization cues.
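A sketch of how such a listening file could be assembled: the source on the left channel and the difference signal (response minus aligned, level-matched source) on the right, written as 16-bit stereo WAV with Python's standard `wave` module. It assumes float signals scaled to roughly ±1 and an assumed 48 kHz rate. Both channels are normalized together so their relative level is preserved. The function names are hypothetical.

```python
import wave
import numpy as np

def to_stereo_pcm(left, right):
    """Interleave two float signals into int16 stereo frames, shape (n, 2)."""
    n = min(len(left), len(right))
    stereo = np.stack([np.asarray(left[:n], float), np.asarray(right[:n], float)], axis=1)
    peak = np.max(np.abs(stereo)) if n else 0.0
    if peak > 1.0:
        stereo = stereo / peak  # common gain keeps the L/R level relationship intact
    return np.round(stereo * 32767).astype("<i2")  # little-endian 16-bit, as WAV expects

def write_stereo_wav(path, left, right, fs=48000):
    """Write source (left) and difference (right) as a 16-bit stereo WAV file."""
    pcm = to_stereo_pcm(left, right)
    with wave.open(path, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)
        w.setframerate(fs)
        w.writeframes(pcm.tobytes())  # row-major (n, 2) array = interleaved L, R frames
```

Usage: `write_stereo_wav("source_vs_difference.wav", source, difference)`, where both arrays are hypothetical placeholders for your own aligned data.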

I agree.

However, this is a limited-bandwidth representation. It would be interesting to see a more complex music passage that stays consistent within 15 degrees off axis; that would be IMPRESSIVE!

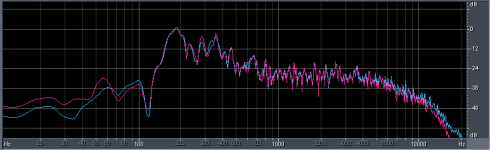

Percussion sounds are very broadband as evidenced by spectrum spanning drum strike from above waveform:

In the above, the white trace is the source.

The peak in the response spectrum at about 55 Hz is most likely intermodulation difference component(s).
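To make the "intermodulation difference component" idea concrete: two tones at f1 and f2 passing through a nonlinearity produce products at |m·f1 + n·f2|, and the second-order difference f2 − f1 is among them. The tone frequencies used below (100 Hz and 155 Hz) are purely hypothetical, chosen so that the difference lands at 55 Hz; they are not identified from the recording.

```python
def imd_products(f1, f2, max_order=3):
    """List (order, frequency) of intermodulation products |m*f1 + n*f2|.

    Pure harmonics (m == 0 or n == 0) are excluded; order = |m| + |n|.
    """
    products = set()
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            if m == 0 or n == 0 or abs(m) + abs(n) > max_order:
                continue
            f = abs(m * f1 + n * f2)
            if f > 0:  # skip a zero-frequency difference when f1 == f2
                products.add((abs(m) + abs(n), f))
    return sorted(products)

# Hypothetical tones: the second-order difference product sits at 55 Hz.
print(imd_products(100, 155))
# → [(2, 55), (2, 255), (3, 45), (3, 210), (3, 355), (3, 410)]
```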

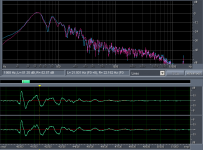

Here is picture of waveforms and spectra of kick drum from same response recording:

Spectral convergence is quite good, even for the low relative levels of the higher-frequency components. The waveforms retain high similarity out to 200 ms, where room interactions and noise finally become significant.
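One way to put a number on "high similarity out to 200 ms" is a windowed normalized correlation between the aligned source and response. Values near 1 mean the waveforms match within that window, and the point where they fall off shows where room interactions and noise take over. The 10 ms window is an arbitrary assumption. The sketch also presumes the signals are already aligned; normalized correlation ignores overall gain differences.

```python
import numpy as np

def windowed_similarity(source, response, fs, win_ms=10.0):
    """Normalized correlation of aligned source/response in consecutive windows.

    Returns (window start times in seconds, correlation per window).
    """
    n = min(len(source), len(response))
    w = int(round(win_ms * 1e-3 * fs))
    times, scores = [], []
    for start in range(0, n - w + 1, w):
        a = np.asarray(source[start:start + w], float)
        b = np.asarray(response[start:start + w], float)
        denom = np.sqrt(np.dot(a, a) * np.dot(b, b))
        # 1.0 = same waveform shape in this window; near 0 = unrelated
        scores.append(float(np.dot(a, b) / denom) if denom > 0 else 0.0)
        times.append(start / fs)
    return np.array(times), np.array(scores)
```

Plotting `scores` against `times` for a drum hit would show the similarity holding and then decaying once the room dominates.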

The speaker alignment included bass extension. For use as a two-way in close listening this works quite well; however, when driven hard, the bass extension leads to audible deterioration. But bass extension also makes integration with a sub straightforward.

The system alignment and response recording were done in a single session without movement of microphone or speaker system.

The correction filters are convolved in real time. For extended studies this need not be the case when system nonlinearity is small: offline convolution of the speaker's impulse response with the source signal produces virtually identical results.
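The offline route can be sketched as an FFT-based linear convolution of a measured impulse response with the source signal. The result can then be compared against the real-time recording, for example by subtraction. `fft_convolve` is a hypothetical helper written with NumPy only; `scipy.signal.fftconvolve` does the same job if SciPy is available.

```python
import numpy as np

def fft_convolve(x, h):
    """Full linear convolution of signal x with impulse response h via zero-padded FFT."""
    n = len(x) + len(h) - 1
    nfft = 1 << (n - 1).bit_length()  # next power of two >= n, avoids circular wrap
    y = np.fft.irfft(np.fft.rfft(x, nfft) * np.fft.rfft(h, nfft), nfft)
    return y[:n]  # same length and values as np.convolve(x, h)
```

Usage: `predicted = fft_convolve(source, measured_ir)`, with both arrays as placeholders for your own data at the same sample rate.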

Off-axis impulse responses were captured, and may be used as high-confidence predictors of the speaker's off-axis response to arbitrary stimuli.

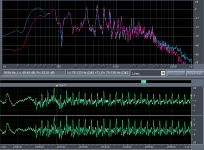

Here is convolution of 306ms impulse response with microphone 30° horizontal by 30° vertically above tweeter axis with part of same track from above that contains percussion, harmonica and guitar:

Sure, there are differences in the spectra and waveforms, but the consistencies are far greater, revealing a very high level of performance over 60°.
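A 306 ms impulse response corresponds to round(0.306 × fs) samples, e.g. 14688 samples at an assumed 48 kHz. The sketch below truncates a measured response to that length and tapers the last few milliseconds with a half-Hann fade so the cut does not add a step. The 20 ms fade length and the name `truncate_ir` are assumptions. The truncated response would then be convolved with the music excerpt (for instance with `scipy.signal.fftconvolve`) to predict the off-axis output.

```python
import numpy as np

def truncate_ir(ir, fs, length_ms=306.0, fade_ms=20.0):
    """Keep the first length_ms of an impulse response and fade its tail to zero."""
    n = min(len(ir), int(round(length_ms * 1e-3 * fs)))
    out = np.array(ir[:n], dtype=float)
    nf = min(n, int(round(fade_ms * 1e-3 * fs)))
    if nf > 0:
        # Half-Hann taper: just under 1 at the start of the fade, exactly 0 at the last sample
        out[n - nf:] *= 0.5 * (1.0 + np.cos(np.pi * np.arange(1, nf + 1) / nf))
    return out
```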

Mouth termination is the appropriate description. Yes, I have. The measurements are terrible, just like I expected. How does it sound? I don't know, why would I want to listen to something that measured that bad? 😕

Oh, just curious. I find it useful to correlate problems with actual sonic signatures detected during listening. I was also wondering if it was possible to have diffraction in higher frequency content than the driver.

Certainly can't complain about this. I am actually surprised to see data this good coming from a Pluto configuration, but it also reminded me of the configuration that inspired Joachim to work on a ZDL project. He must have heard something special and felt that diffraction reduction was of major importance. Seems like this Pluto configuration does not contribute much diffraction of concern, just looking at the data. I would love to listen to it when there is a chance.


Seems like this Pluto configuration does not contribute much diffraction of concern just looking at the data.

Wow. I wish I had that kind of vision.

I have always had a hard time seeing diffraction even under ideal measurement conditions where I knew exactly what I was looking for. To be able to see it in a time trace is truly an amazing feat.

I can't say I am correct, but it seems good enough to at least interest me in listening to them. If something sounds good but does not look good in the data, I feel tempted to improve the measured performance to see what change I can hear. It's a pretty time-consuming process. Sometimes I just live with it for a while to see if my opinion changes, then take the next step.

Competent Measurements

yeah right. like enabl, cable threads, beyond the (whatever), etc. that sort of thing...

What planet does that occur on??

John L.

Mouth termination is the appropriate description. Yes, I have. The measurements are terrible, just like I expected. How does it sound? I don't know, why would I want to listen to something that measured that bad? 😕

This is absurd? Time to set up and measure and not a moment of self indulgence. Science is stoked by imagination and playtime, often leading to great and coherent thoughts.

Oh, just curious. I find it useful to correlate problems with actual sonic signatures detected during listening. I was also wondering if it was possible to have diffraction in higher frequency content than the driver.

I think it is still one frequency in, one frequency out, discounting non linearity.

Driver breakup has the ability to completely change the radiating wavefront. Controlled breakup leads to a dynamically changing wavefront. For the stereo process this could not be good.

Time to set up and measure and not a moment of self indulgence.

You mean "self delusion" not "self indulgence". A waste of time is just a waste of time.

It would cost a fortune to develop something that gets closer to perfection. Ultimately, you need to factor room design in as well. I think the art is trying to get the most out of limited size, limited price, a specific maximum SPL, and bandwidth, while keeping the imperfections as inaudible as possible.

I think it is still one frequency in, one frequency out, discounting non linearity.

Driver breakup has ability to completely change radiating wavefront. Controlled break up leads to dynamically changing wavefront. For stereo process this could not be good.

Driver breakup is the beginning of modal operation. Lots of full-range drivers play on this, and some have surprisingly good qualities in their sound reproduction. The most annoying aspect of breakup and modal operation is that the delayed release of energy is hard to control, which is the main source of coloration in sound reproduction. I think it is challenging to design a driver with the modal operations as far out of the bandwidth as possible and without introducing a strong breakup mode. This is the main challenge for me, amongst other things going on.
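A simple way to see why high-Q modes are troublesome: if a breakup mode is modeled as a single second-order resonance at f0 with quality factor Q (a simplification; real cones have many interacting modes), its free ringing decays as exp(−t/τ) with τ = Q/(π·f0). The time to fall 60 dB is then about 2.2·Q/f0. The sketch below just evaluates that formula; `mode_decay_time` is a hypothetical name.

```python
import math

def mode_decay_time(f0_hz, q, drop_db=60.0):
    """Seconds for a single second-order resonance's ringing to decay by drop_db.

    The envelope is exp(-t / tau) with tau = Q / (pi * f0), so the amplitude
    reaches 10**(-drop_db / 20) at t = tau * (drop_db / 20) * ln(10).
    """
    tau = q / (math.pi * f0_hz)
    return tau * (drop_db / 20.0) * math.log(10.0)
```

For example, a 5 kHz mode with Q = 10 rings for roughly 4.4 ms before dropping 60 dB, five times longer than the same mode at Q = 2.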

the delayed release of energy is hard to control, which is the main source of coloration in sound reproduction

citation needed

This is my own experience. If I am the first to experience it, I'm not sure how to find references. Basically, reduce it and listen for yourself. FWIW, even my patents do not reference others, and I never do so in defending them either. In the past my patent attorney caused some complications by trying to do so, and I gave instructions never to do so without my acknowledgement, because I don't knowingly do that in development. I try to go deeper into the detail and figure things out from the basic phenomena.
