Indeed, however in the long run fools and trolls can be right. There may be a method for condensing time, in the form of hardware artificially maintained at the causal edge, providing the answer to any computation one microsecond after the question is asked.

He already said that a silent PC could do millions of taps - you're seriously not giving him a hard enough time here steph by limiting it to six thousand 😀

This way a one-million-tap FIR could execute in one microsecond, fast enough for six 96 kHz audio channels. Currently we have no idea how to do it because we don't know how the brain operates, how the brain stores data, and how the brain processes data.

On March 15th 2012, French scientist Jean-Marie Souriau died. From 1960 he kept suggesting that we are not in reality. He kept suggesting that we are equipped with hard-wired "group engines" of a mathematical nature, acting as perception devices. He kept suggesting that classical mechanics is a mathematical group (the Galilean group), that relativity is another group (the Poincaré group), and that general relativity is yet another group. He kept suggesting that we, as living organisms, are trapped in particular, complicated sections of "hidden groups". Indeed, the whole discipline of geometry is now considered in terms of mathematical groups.

According to Jean-Marie Souriau, from a geometrical and mathematical perspective, time and energy can be manipulated, even annihilated, using particular group sections seen as geometric sections. In an interview dated December 27th 2010, he said that monkeys have abilities that humans don't have, because they have a better "Euclidean group" wired into their brains. In the same interview Jean-Marie Souriau said that some day mathematics, geometry and physics will meet in the neuroscience playground and generate huge progress, but it won't happen rapidly because those sciences are usually kept apart.

A very optimistic theory - nothing to do with Jean-Marie Souriau's words here - would be that once you manage to operate the correct geometric transformation, you end up "virtually" connected to "reality", hence able to manipulate time, space and energy at will. There are people saying that humans can be brought to do this by accident (near-death experiences), by drugs (DMT), and possibly later on, by science. You'll always find people ready to say that a copper wire has infinite digital processing power because it permanently executes a 1-million-sample FFT then inverse FFT on the signal it is conveying, taking the required energy from nowhere, and exactly compensating the inherent delay by executing in the future. Or in the past? This is only about a copper wire. Imagine what they would say about a whole brain. Gosh, I'm completely lost!

You're welcome to contribute to that thread I already linked, this one clearly isn't for you 😀

CuTop is right and the point is this: if you guys intend to make extensive use of long FIRs, then you'd rather stop counting the MACS of the direct (or time-domain) implementation and look instead at the classic ways to implement FIRs in the frequency domain. You will save a lot of processing resources - but you'll have to implement a FFT and deal with output delay.

For you Abralixito:

An FIR filter is a convolution, denoted

y[n] = x[n]*h[n]

y is the output, x the input and h is your filter. n is the time index, and * is the convolution operator.

To do it in the frequency domain, you first compute the Fourier transform of x and h:

X = F(x) and H = F(h)

The convolution in time-domain translates into a simple multiply in the Frequency domain:

Y = X . H

where Y is the Fourier transform of your output and '.' is the multiply operator.

All you need to do now is to compute the inverse Fourier transform of Y in order to recover the time-domain samples.

So the global operation is:

y[n] = invF(F(x[n]).F(h[n]))

Now what's the fuss?

Well, the direct form of convolution is power hungry: each output sample costs one MAC per tap, so filtering a block as long as the filter makes the required resources grow with the *square* of the filter length.

The frequency-domain convolution, despite looking complicated, is much less power hungry if you compute the Fourier transform and its inverse using an FFT, whose cost grows roughly as N log N.

Hope it makes sense!
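For anyone who wants to check the identity y[n] = invF(F(x[n]).F(h[n])) numerically, here is a minimal Python sketch. It is only an illustration: the function names `fft`, `direct_convolve` and `fft_convolve` are made up for this example, the FFT is a plain recursive radix-2 routine rather than an optimised one, and both sequences are zero-padded to a power of two so that the product of spectra gives a linear (not circular) convolution.

```python
import cmath

def fft(a, inverse=False):
    """Recursive radix-2 FFT (unnormalised); len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], inverse)
    odd = fft(a[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def direct_convolve(x, h):
    """Time-domain convolution: one MAC per (input sample, tap) pair."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def fft_convolve(x, h):
    """y = invF(F(x) . F(h)), zero-padded to avoid circular wrap-around."""
    out_len = len(x) + len(h) - 1
    n = 1
    while n < out_len:
        n *= 2
    X = fft(list(x) + [0.0] * (n - len(x)))
    H = fft(list(h) + [0.0] * (n - len(h)))
    Y = [a * b for a, b in zip(X, H)]   # the "simple multiply"
    y = fft(Y, inverse=True)
    return [v.real / n for v in y[:out_len]]  # 1/n normalises the inverse

if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    h = [1.0, -1.0, 0.5]
    print(direct_convolve(x, h))
    print(fft_convolve(x, h))
```

Both routines should agree to within floating-point round-off. For a real-time stream the input would be processed block by block (overlap-add or overlap-save), which is where the output delay comes from.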

Indeed, however in the long run fools and trolls can be right.

Nice.

CuTop is right and the point is this: if you guys intend to make extensive use of long FIRs, then you'd rather stop counting the MACS of the direct (or time-domain) implementation and look instead at the classic ways to implement FIRs in the frequency domain.

Sure, but it's a very big 'IF' there from my pov. I'm content with FIRs in the range 3-128 taps (take a look at my blog for my 3 tap one). If I were doing bass control (no plans to, as yet) I'd decimate down first.

You will save a lot of processing resources - but you'll have to implement a FFT and deal with output delay.

That's debatable - I'm definitely interested if you can show how it all fits on a simple M0/M3 with 8k RAM and 32k flash (and no FPU). Otherwise you're tilting at windmills 😀

Yes indeed, that's a strong point. I'm very curious to see how this will look, practically.

CuTop is right and the point is this: if you guys intend to make extensive use of long FIRs, then you'd rather stop counting the MACS of the direct (or time-domain) implementation and look instead at the classic ways to implement FIRs in the frequency domain. You will save a lot of processing resources - but you'll have to implement a FFT and deal with output delay. Hope it makes sense!

Most of the time, there will be a Bode plot (gain and phase) associated with a given speaker driver, exhibiting a 2nd-order high-pass at something like 100 Hz (Q maybe 1.0), a few semi-random in-band irregularities in a 6 dB corridor from 100 Hz to 5 kHz, possibly a +10 dB resonance at 5 kHz, then a quite irregular low-pass above 5 kHz, possibly 3rd-order, with a few high-frequency resonances corresponding to the cone breaking up. Most of the time, the designer's ambition is to reshape that response into a desired Bode plot, such as a nice 4th-order double Butterworth high-pass at 300 Hz and a 4th-order double Butterworth low-pass at 3 kHz, with the phase exactly matching a minimum-phase behaviour.

The direct FIR method is very simple: you graph the actual driver impulse response, you graph the idealized driver impulse response, and by comparing them, you know the FIR coefficients that you need. You apply a windowing function, and you are done.

Based on this, how would you calculate the FFT coefficients (real and imaginary) for generating the exact same filtering function, both in magnitude and in phase?

Say we apply a 1024-point FFT at 48 kHz, leading to a frequency resolution of 47 Hz. Is the FFT + inverse FFT feasible, realtime, on a Cortex-M4 clocked at 72 MHz?
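To put rough numbers on that question, here is a back-of-the-envelope sketch in Python. The sampling rate, FFT length and clock come from the question above; everything else is an assumption made for the estimate: a radix-2 FFT with (N/2)·log2(N) butterflies, one forward and one inverse FFT per block, and a fresh block of N samples each time. With overlap-add or overlap-save only part of each block is new (typically half), which would halve the budget, and the spectrum multiply is not counted.

```python
FS = 48_000           # sampling rate in Hz (from the post)
N = 1024              # FFT length (from the post)
CPU_HZ = 72_000_000   # Cortex-M4 clock in Hz (from the post)

# Spacing between FFT bins: the "frequency resolution" quoted as 47 Hz.
bin_spacing_hz = FS / N

# CPU cycles available while N new samples arrive (assumes no block overlap).
cycles_per_block = CPU_HZ * N / FS

# Radix-2 butterfly count for one FFT of length N (assumed algorithm).
log2_n = N.bit_length() - 1          # exact log2 for a power of two
butterflies_per_fft = (N // 2) * log2_n

# Budget per butterfly if one forward and one inverse FFT run per block.
cycle_budget_per_butterfly = cycles_per_block / (2 * butterflies_per_fft)

if __name__ == "__main__":
    print(f"bin spacing      : {bin_spacing_hz:.3f} Hz")
    print(f"cycles per block : {cycles_per_block:.0f}")
    print(f"butterflies/FFT  : {butterflies_per_fft}")
    print(f"cycles/butterfly : {cycle_budget_per_butterfly:.0f}")
```

Whether a given FFT routine fits that budget on a particular Cortex-M4 is something to measure on the target; the sketch only gives the size of the budget.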

That's debatable - I'm definitely interested if you can show how it all fits on a simple M0/M3 with 8k RAM and 32k flash (and no FPU). Otherwise you're tilting at windmills 😀

I've seen FFTs running on tiny fixed-point processors, I don't think you're going to have a problem running it on your ARM.

It's not my purpose to show anything - I just reacted when CuTop was accused of trolling, which he wasn't. Sorry if I let you think I was trying to demonstrate something.

Now re. the FFT on the ARM, I know there's a DSP library from ARM themselves. There should be a FFT in it, and if it's the case you even won't have to implement it 😉

Based on this, how would you calculate the FFT coefficients (real and imaginary) for generating the exact same filtering function, both in magnitude and in phase?

Ok so your filter coefficients in time domain are

h[n] = h[0], h[1], ..., h[M] where M+1 is the length of your filter

Your coefficients are simply the Fourier transform of the sequence h:

H(k) = F(h[n])

Say we apply a 1024-point FFT at 48 kHz, leading to a frequency resolution of 47 Hz. Is the FFT + inverse FFT feasible, realtime, on a Cortex-M4 clocked at 72 MHz?

For the convolution itself, you don't need to care about 'frequency resolution'. The length of the filter will impact what you can achieve, but this is not the FFT convolution matter.

Now why do you want a 1024-point FFT?

Let's say your original filter is a 1024-point sequence. You can always split it into 8 successive 128-point sequences and thus use a 128-point FFT...
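The splitting idea can be sketched in Python as a uniformly partitioned overlap-add convolution. This is an illustration under stated assumptions, not anyone's actual implementation: the names `fft`, `direct_convolve` and `partitioned_convolve` are invented here, and the FFT length is taken as twice the partition length, because the linear convolution of a 128-sample block with a 128-tap partition is 255 samples long and needs that much room to avoid circular wrap-around.

```python
import cmath

def fft(a, inverse=False):
    """Recursive radix-2 FFT (unnormalised); len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], inverse)
    odd = fft(a[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def direct_convolve(x, h):
    """Reference time-domain convolution."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def partitioned_convolve(x, h, block):
    """Split h into partitions of `block` taps (block must be a power of two).

    Every input block is multiplied with every partition spectrum using
    FFTs of length 2 * block, and each result is added at its own delay.
    """
    n = 2 * block

    def spectrum(seg):
        return fft(list(seg) + [0.0] * (n - len(seg)))

    H = [spectrum(h[p:p + block]) for p in range(0, len(h), block)]
    out_len = len(x) + len(h) - 1
    y = [0.0] * (out_len + n)            # slack for the last overlap
    for b, start in enumerate(range(0, len(x), block)):
        X = spectrum(x[start:start + block])
        for p, Hp in enumerate(H):
            seg = fft([u * v for u, v in zip(X, Hp)], inverse=True)
            offset = (b + p) * block     # partition p is delayed by p blocks
            for i, v in enumerate(seg):
                y[offset + i] += v.real / n
    return y[:out_len]

if __name__ == "__main__":
    x = [float(i % 5) - 2.0 for i in range(10)]
    h = [0.5 ** i for i in range(12)]
    print(max(abs(a - b) for a, b in
              zip(direct_convolve(x, h), partitioned_convolve(x, h, 4))))
```

A real-time version would normally keep the spectra of past input blocks in a delay line, sum the products in the frequency domain and run a single inverse FFT per block; the sketch runs one inverse FFT per partition only to keep the code short.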

I've seen FFTs running on tiny fixed-point processors, I don't think you're going to have a problem running it on your ARM.

I've coded FFTs - on a 68k back in 1986. It did use up a fair amount of RAM (I had 64k bytes though) and that's quite limited in this application as I copy the code to RAM for increased speed. This is not just about writing the code, your argument was that it would be a better use of resources. That's what I'd like demonstrated - for you to back up that claim. Justify that for (say) a 128 point filter kernel, an FFT convolution would be a better use of resources in this particular instance.

It's not my purpose to show anything - I just reacted when CuTop was accused of trolling, which he wasn't.

He wasn't accused of trolling, his behaviour was described as trolling. Clearly he was and he's done nothing since which is not consistent with trolling.

Now re. the FFT on the ARM, I know there's a DSP library from ARM themselves. There should be a FFT in it, and if it's the case you even won't have to implement it 😉

I have no doubts there is. But the FFT is just part of the solution to FFT convolution, and a part I'm quite familiar with - enough for me to code it for myself. However this thread is about FIR filters which can be modelled using LTSpice.

Since you clearly know a fair amount about DSP, do you have any answer to my earlier posed question about round-off errors in FFT convolution when using fixed point and how those compare with FIR convolution? I'm curious to know whether Dr Smith's arguments hold up for fixed point.

Don't you have the impression that, operating with a 48 kHz sampling frequency, you need a 1024-point FFT (hence a 47 Hz frequency resolution) for exercising decent control over the low part of the spectrum, say between 30 Hz and 300 Hz? In the example I have supplied, you may have noticed that for accurately controlling the 300 Hz highpass (as target), we may need a better frequency resolution than the quoted 47 Hz.

For the convolution itself, you don't need to care about 'frequency resolution'. Now why do you want a 1024-point FFT?

Let's say your original filter is a 1024-point sequence. You can always split it into 8 successive 128-point sequences and thus use a 128-point FFT...

I have the impression that your assertions are correct from a mathematical point of view, but completely decoupled from, and potentially wrong for, the physical application.

He wasn't accused of trolling, his behaviour was described as trolling. Clearly he was and he's done nothing since which is not consistent with trolling.

Nice.

It's a real dilemma in these forums.

There's an interesting sounding thread about DSP and active crossovers called "Open Source DSP XOs". It doesn't mention "No PC allowed". It doesn't mention "No FFTs allowed".

There's 29 pages of stuff about specific hardware, and the difficulty of implementing a simple crossover with any number of esoteric processors.

Whereas I've got a system running on a PC using my own software which can implement millions of FIR taps. Personally, I'm using 65536 taps for each of my six channels and the PC isn't even breaking into a sweat. Why shouldn't I ask why people aren't doing the same thing? Mine is an "Open source DSP XO" (anyone is welcome to my code if they ask nicely) so I'm not even off topic.

Ah, but didn't I know? No FFTs and PCs are allowed in this thread because everyone here knows there's something mysteriously wrong with that approach. Eject the outcomer as a troll!

But then it turns out that some people here, at least, don't know about FFTs and how they can bestow upon you virtually unlimited FIR processing power. Maybe there is some theory about 32 bit floats not providing enough precision, but why not use 64 bits instead? Your PC can do it!

But anyway, I'll leave you to it. Good luck!

There's an interesting sounding thread about DSP and active crossovers called "Open Source DSP XOs". It doesn't mention "No PC allowed". It doesn't mention "No FFTs allowed".

Indeed, no objection to FFTs. PCs don't fit the bill here because they're not ARM and they're overkill for the job. Agreed the title of the thread is not specifically descriptive. However from reading the first post you'd note the reason I started the thread was because after Kendall Castor-Perry introduced a way of modelling FIR filters in LTSpice, I felt that could be a way-in for people to design filters. I later clarified that ARM was my preferred route for implementation.

There's 29 pages of stuff about specific hardware, and the difficulty of implementing a simple crossover with any number of esoteric processors.

That's a peculiarly selective review of the contents. Esoteric processors? Steph's introduced a few but the bulk of my contributions (as you might note I'm the thread initiator) is to do with the total opposite of esoteric processors - simple and cheap M0/M3/M4 MCUs.

Whereas I've got a system running on a PC using my own software which can implement millions of FIR taps. Personally, I'm using 65536 taps for each of my six channels and the PC isn't even breaking into a sweat. Why shouldn't I ask why people aren't doing the same thing? Mine is an "Open source DSP XO" (anyone is welcome to my code if they ask nicely) so I'm not even off topic.

Oh, I missed where you asked why people weren't doing the same thing as you. If I'd seen you ask that I'd definitely have answered you that it's overkill - in terms of power requirements, space requirements and money. It also very probably won't sound very good - which is a guiding principle for me on this thread. If your PC is a DSP then that's certainly something very interesting and you should start your own thread to explain how you run Windows (or Linux) on your DSP. I'd be very interested for one.

Ah, but didn't I know? No FFTs and PCs are allowed in this thread because everyone here knows there's something mysteriously wrong with that approach. Eject the outcomer as a troll!

Haha very clever but where did I eject you? Trolls can be welcome for bringing in new ideas - as steph has already pointed out. So all views are welcome but where were you when I asked you a question about your suggested approach? Still waiting for an answer to the precision question....

But then it turns out that some people here, at least, don't know about FFTs and how they can bestow upon you virtually unlimited FIR processing power. Maybe there is some theory about 32 bit floats not providing enough precision, but why not use 64 bits instead? Your PC can do it!

Introducing FFTs most certainly is an interesting topic, however its not been shown by either you or chaparK that its relevant. My invitation to you two to show that it is still stands. Trolling is characterised by failing to engage and then buggering off is it not?

It also very probably won't sound very good [...]

That one is at least arguable. 🙂

Introducing FFTs most certainly is an interesting topic, however its not been shown by either you or chaparK that its relevant. My invitation to you two to show that it is still stands. Trolling is characterised by failing to engage and then buggering off is it not?

I don't really get what you want. You pointed to a link where the author states that you can expect noise to be in relation with the routine execution time. It's an intuitive way of looking at round-off errors and it holds whether you're using a fixed-point or a floating-point CPU.

So according to this author again, if the filter length is such that frequency-domain is more efficient, then you can also expect the noise floor to be lower in frequency-domain compared to a time-domain implementation on the same CPU.

That one is at least arguable. 🙂

Go for it then 🙂

I don't really get what you want. You pointed to a link where the author states that you can expect noise to be in relation with the routine execution time. It's an intuitive way of looking at round-off errors and it holds whether you're using a fixed-point or a floating-point CPU.

That's the point I'm not yet convinced about. He himself doesn't claim that - but you are claiming it and I'd like to see support. Is that at all unreasonable?

He says that roundoff errors for FFT would be an order of magnitude better - presumably because of fewer multiplies. But with a fixed point FIR there's no need for any round-off errors in the calculation itself, just rounding off at the end where the accumulator gets truncated (or rounded) to the appropriate number of bits. (We're assuming no overflow from the accumulator which is not so hard to arrange when we have 64 bits, with 16bit data and 16bit coefficients) Of course the coefficients have round-off errors at the start - what's the equivalent in terms of FFT of FIR coefficient rounding?
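The fixed-point argument above can be sketched in Python. This is an illustration with assumed conventions (Q15 coefficients, round-to-nearest at the output, hypothetical helper names `to_q15` and `fir_q15`): the only round-off happens when the coefficients are quantised and when the accumulator is scaled back to 16 bits. Each 16x16 product has a magnitude of at most 2^30, so a 64-bit accumulator has room for billions of them.

```python
def to_q15(value):
    """Quantise a float in [-1, 1) to a 16-bit Q15 integer.

    This is where coefficient round-off happens, before any filtering.
    """
    q = int(round(value * 32768))
    return max(-32768, min(32767, q))

def fir_q15(samples, coeffs_q15):
    """16-bit samples times Q15 coefficients with exact accumulation."""
    out = []
    history = [0] * len(coeffs_q15)
    for s in samples:
        history = [s] + history[:-1]     # newest sample first
        acc = 0  # Python ints are unbounded; stands in for a 64-bit accumulator
        for c, v in zip(coeffs_q15, history):
            acc += c * v                 # exact integer MAC, no rounding here
        rounded = (acc + (1 << 14)) >> 15  # single rounding back to 16-bit scale
        out.append(max(-32768, min(32767, rounded)))
    return out

if __name__ == "__main__":
    coeffs = [to_q15(c) for c in (0.25, 0.5, 0.25)]
    print(coeffs)
    print(fir_q15([1000, 2000, 3000, 4000], coeffs))
```

An FFT-based version would additionally round the twiddle factors and every butterfly stage when run in fixed point, which is the comparison being asked for here; the sketch does not attempt to settle it.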

His argument for round-off doesn't appear to include round off errors before the start of computation, which I feel are still important. Perhaps this is related to steph's earlier point to you - he's not yet convinced that FFTs represent his desired frequency response with the required precision ISTM.

It's a little bit too easy, telling lies (the one million tap FIR on a PC) and qualifying as "esoteric" the ARM Cortex-A8, A9 and A15 used in millions of smartphones. Then saying good bye and good luck? What a joke! If you don't call this trolling, how would you call this? You asked for a reaction. Now you get a reaction.

There's 29 pages of stuff about specific hardware, and the difficulty of implementing a simple crossover with any number of esoteric processors. Whereas I've got a system running on a PC using my own software which can implement millions of FIR taps. Personally, I'm using 65536 taps for each of my six channels and the PC isn't even breaking into a sweat.

If you don't call this trolling, how would you call this? You asked for a reaction. Now you get a reaction.

I'd not expect a troll to actually self identify as such - to them their behaviour is just 'what I do'. It's largely unconscious, somehow they feel driven to seek attention in a dishonest manner. Enjoy the entertainment 😀

The key is not to react to trolling, just respond to it. Reaction is what the troll sets out to get - a response though is somewhat befuddling to them. Which is why CuTop's self justifications don't hold any water - the response is not fitting his script.

Don't worry, he is not supposed to come back. If he does, he will need to find an explanation for having introduced himself as being able to run millions of FIR taps on a PC (this is dishonest), then later on, changing his pitch, telling us that in reality, he is executing six 65536-tap FIRs at a xx kHz sampling frequency.

I'd not expect a troll to actually self identify as such - to them their behaviour is just 'what I do'. It's largely unconscious, somehow they feel driven to seek attention in a dishonest manner. Enjoy the entertainment 😀

Is he using Synthmaker? Was there a misunderstanding about the meaning of audio, about the meaning of realtime digital audio processing? Come on!

Is he doing bare FIRs, or is he taking advantage of the FFT then inverse FFT optimization? He said he was ready to expose his code "anyone is welcome to my code if they ask nicely", but he said this in the same message saying his big good bye to us. This is also dishonest. Since the beginning, he wants us to be frustrated, that's all.

Here is a valuable proposition. Let us write six stupid 65536-tap FIRs on a PC, and get them executed without a hiccup, outputting six audio channels thanks to the WinXP hdAudio subsystem on the motherboard, or by hooking a CM6206 based USB audio 5.1 attachment in case the PC only has stereo audio on the motherboard.

Preferably not using Synthmaker.

Preferably taking advantage of the SSE2 extensions.

Just for the fun.

Now, if he comes back here showing his software approach, showing his code, that's a completely different story and I apologise in advance.

Be warned that six 65536-tap FIRs at 48 kHz means 18.9 Giga MAC/s.

Even if you rely on SSE2 extensions, packing four channels per vector, the six channels still take two vector passes, so you need 6.3 Giga packed MAC/s.

If you run on a quadcore 3 GHz processor, you may theoretically attain 12.0 Giga MAC/s.

However, the above estimates are optimistic, because they ignore the loop overhead.

Thus, the guy is not executing bare FIRs.

He may be executing the FFT then inverse FFT optimization.

Which is not the way he presented himself.
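The arithmetic behind those figures can be reproduced with a few lines of Python. The tap count, channel count, sampling rate, core count and clock come from the estimate above; the assumptions are spelled out in the comments: four values per SIMD register, channels packed four at a time (so six channels need two vector groups), and a peak of one MAC per core per clock cycle.

```python
import math

TAPS = 65_536
CHANNELS = 6
FS = 48_000                # Hz
SIMD_LANES = 4             # assumed: four values per 128-bit register
CORES = 4
CLOCK_HZ = 3_000_000_000   # 3 GHz

# One MAC per tap, per channel, per output sample.
scalar_macs_per_s = TAPS * CHANNELS * FS

# Packing channels four at a time: six channels need two vector groups.
vector_groups = math.ceil(CHANNELS / SIMD_LANES)
packed_macs_per_s = TAPS * vector_groups * FS

# Assumed peak: one (packed) MAC per core per cycle, no loop overhead.
peak_macs_per_s = CORES * CLOCK_HZ

if __name__ == "__main__":
    print(f"scalar : {scalar_macs_per_s / 1e9:.1f} G MAC/s")
    print(f"packed : {packed_macs_per_s / 1e9:.1f} G MAC/s")
    print(f"peak   : {peak_macs_per_s / 1e9:.1f} G MAC/s")
```

The packed requirement is about half of the theoretical peak, which leaves little margin once loop overhead and memory access are included.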

Read again post #287 : "A £50 used PC (a quiet one) with suitable sound card provides enough computing power to implement millions of FIR taps (you don't have to emulate boring old analogue filters), provides a colourful GUI to display any info you want, allows you to develop on the actual target machine using free tools, and there's no re-sampling or jitter to worry about. What's with all the messing about with DSP boards etc.?"

Not my idea of fun, but in the absence of any evidence that FIRs implemented as MACs are noisier than FFT convolution, I'm certainly sticking with the former. After all, FIRs can be incorporated into DACs (no need for MACs at all!) which is my current line of development 🙂

I'm always astonished by statements made by consultants, about applications they don't know.

Around 1984, there was the question of how to use the Intel 8051 one-chip microcontrollers for managing telecom equipment, replacing discrete TTL gates by software. There were hardware blocks containing analog filters detecting the 50 Hz ring tone, the 440 Hz tone, and the 2280 Hz signalling tone. Those analog detectors delivered logic signals, to be interpreted by the 8031.

We asked consultants what was the optimal sampling frequency to be used by the Intel 8031 chip, for assessing if a tone was there, or not there. Depending on the consultant we got different answers.

There was a consultant saying that the 8051 had to sample at 8,000 Hz because of the telephone line conveying audio with a bandwidth of 4000 Hz.

There was another consultant saying that the 8051 had to sample at 10 times the maximum envelope variation, because the 8051 inherently did a square wave conversion. We said that the fastest envelope variation was on the 2280 Hz signal, the 2280 Hz detector having a bandwidth of approx 10 Hz. He then said that the 8051 needs to sample the 2280 Hz detector output at 100 Hz.

There was another consultant saying that, knowing that the 2280 Hz detector output had a 10 Hz bandwidth, the 8051 needed to sample it at 20 Hz.

We threw all three reports in the bin, set a 20 ms main timebase in the 8031, and executed the whole signalling logic at 50 Hz for years. With success.

The company was a Belgian subsidiary, selling military telephonic equipment to the Belgian military. The parent company was located in France.

Around 1987, the parent company located in France inherited some CNET assets, including CNET application engineers involved in bit-slice architectures executing DSP. The parent company bought the T.I. TMS32010 development kit, and transferred the CNET DSP experience to the TMS32010. For getting an immediate return on investment, the parent company started to sell TMS32010 DSP routines to the Belgian subsidiary, replacing the analog tone detectors. This was our second-generation military telecom interface, quite complicated as there was an 8031 chip and program, and a TMS32010 chip and program.

In the Belgian subsidiary we looked at the TMS320C25 potentially replacing the 8031+TMS32010 combination (only one program to manage), but unfortunately to our great frustration, we discovered that we were not anymore in the driving seat regarding the design. The parent company in France exercised full control, wanting to live as long as possible on the initial TMS32010 investment.

Back in those times, the recently introduced TMS320C25 was regarded as a promising chip, as it could address enough data and code for operating as DSP (on the 8,000 Hz interrupt), while executing combinatory and sequential logic (we were using finite state machines executing on a 50 Hz timebase) for managing the equipment inputs/outputs.

Back in 1987, this sounded incredible to engineers, even young engineers having two or three years of experience with one-chip microcontrollers like the Intel 8031. Microcontrollers had their own computer science, DSP had a different computer science, and an Intel 8031 specialist was not to be confused with a Motorola 68K specialist. Those were three different worlds. As a joke, a Motorola 68K specialist would always say to an Intel 8031 specialist "prove to me that your call return stack can't overflow", knowing in advance that 99% of the time, the Intel 8031 specialist's answer would be "I know it doesn't overflow because I never get watchdogs". Amazing, isn't it?

Again, if you paid external consultancy for addressing the practical impossibility to demonstrate that your application can't generate stack overflows, you were getting in big trouble. Some were advising solutions costing 10 times your budget, or inflicting a 10x performance penalty, and some others, a 10 times budget inflation, coupled to a 10x performance penalty.

There were intellectual barriers causing trouble like when asking an Intel 8031 specialist to write I/O routines for a TMS320C25. He would consider that you offer him a degraded job, that you don't allow him to exercise his microcontroller programming skills in a normal environment.

Back in those times, between 1984 and 1988, lots of things happened in the microcontroller industry, sometimes generating a degree of psycho-rigidity even within a team consisting of young developers.

When on top of this, each time you ask for external consultancy, you get contradictory conclusions, it doesn't help to stabilize and reassure a team of developers.

What didn't help was the arrival of the Transputer, around the same years, as another one-chip microcontroller coming with an incredible blend of ambitions like general control, DSP, and networking. Forcing you to adopt new programming paradigms, forcing you to adopt a new programming language.

We don't see that sort of thing nowadays.

Okay, perhaps we still see novelties, but they take 10 years to grow, instead of 2 or 3 years like in the late eighties. I'm convinced that Xilinx Zynq-7000 is the way forward, and we still need to see a Xilinx Zynq-7000 featuring a low pin count BGA, featuring a top connectivity for a stacked SDRAM. A stacked SDRAM that you decide to mount, or not to mount.

Why can't diyAudio enthusiasts use modern silicon and PCB assemblies we find in China Smartphones?

Why can't we load Linux on a Xilinx Zynq-7000, scrap all the Linux components we don't need, eventually get rid of the SDRAM, load a fresh ALSA patch (Advanced Linux Sound Architecture), and start building something, talking to custom peripherals built with the on-chip FPGA?

You would answer: because Linux is top notch technology, because ALSA is a special Linux area only mastered by a few specialists, because FPGA design is a completely different discipline, because writing custom Linux drivers for custom FPGA peripherals is a completely different discipline, because the chips are so small they get difficult to assemble, and because the resulting PCB price, produced using batches of 10 (instead of 10,000) is going to repel most diyAudio enthusiasts.

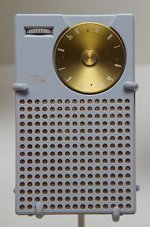

Okay, now look at the 1954 Regency TR-1, the first commercially produced transistor radio. Do you see anything complicated in this, now?

Retrospectively, you could build one as early as 1958, after carefully studying the people and companies present at the 1958 Brussels Fair, involved in semiconductor distribution and PCB manufacturing.

We need the same dynamic about Linux, ALSA, FPGA design, Linux drivers, Xilinx Zynq-7000, PCB design, PCB assembly. And 20 years later, our successors will see nothing complicated in this.

I worked for about 4 years in a consultancy role. It surprised me when my colleagues complained about how idiotic their clients were. To me it was obvious we'd have idiots as clients because that was why they hired us. Anyone with smarts would do it themselves.

Since I'm now a part-time teacher, I believe the time-honoured dictum of G B Shaw needs an overhaul -

'Those who can, do. Those who can't, consult.'
