Roughly true in theory, sometimes not true in reality. Timing errors of I2S and MCLK signals, including noise mixed with those signals, are known to have audibly affected the analog outputs of some DACs.

This is basically true, but a straw man. But where's the source of the timing errors, and to what degree?

The effects of jitter are well documented in the DAC literature.

No, they are not. DAC literature is written to market a product. The audible effects of jitter are not well quantified at all, for the reasons in my previous post. You can only say that less jitter is likely better, but to what extent depends on many factors.

If I had any other point here, it would be that if jitter is suspected and reduction is desired, then jitter must first be measured, either directly or through its results. Direct jitter measurement is outside the capabilities of most in the DIY community, but measuring its result in audio is absolutely doable with free spectrum analyzer software like REW. Until that's been done, and done with the total network transmission system included, there's no problem to work on.
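To give a feel for what such a measurement would show, here is a minimal sketch in plain Python (not REW itself, and not anyone's actual test setup). It assumes a purely sinusoidal jitter component, which is a simplification, and the names `tone_with_jitter` and `bin_level_db` are made up for this illustration. It samples a 12 kHz tone at jittered instants and reads the level of the resulting sideband, the same thing you would look for next to the test tone on a spectrum analyzer.

```python
import math

def tone_with_jitter(f0, fs, n, jitter_rms_s=0.0, jitter_freq=0.0):
    """Sample sin(2*pi*f0*t) at instants displaced by sinusoidal jitter."""
    peak = jitter_rms_s * math.sqrt(2)  # RMS to peak for a sine
    samples = []
    for k in range(n):
        t = k / fs
        t += peak * math.sin(2 * math.pi * jitter_freq * t)
        samples.append(math.sin(2 * math.pi * f0 * t))
    return samples

def bin_level_db(x, f, fs):
    """Level at frequency f in dB relative to a full-scale sine (single-bin DFT)."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * f * k / fs) for k, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * f * k / fs) for k, v in enumerate(x))
    amplitude = 2 * math.hypot(re, im) / n
    return 20 * math.log10(max(amplitude, 1e-15))

# Assumed example values: 48 kHz sampling, 0.1 s capture (10 Hz bins),
# 12 kHz tone, 1 ns RMS jitter at 1 kHz.
FS, N, F0, FJ = 48_000, 4_800, 12_000, 1_000

clean = tone_with_jitter(F0, FS, N)
jittered = tone_with_jitter(F0, FS, N, jitter_rms_s=1e-9, jitter_freq=FJ)

print("sideband, no jitter  :", round(bin_level_db(clean, F0 + FJ, FS), 1), "dB")
print("sideband, 1 ns jitter:", round(bin_level_db(jittered, F0 + FJ, FS), 1), "dB")
```

For these assumed numbers, narrowband phase-modulation theory puts each sideband at roughly -85 dB relative to the tone. Real jitter is rarely a single sine, so treat this only as a way to relate a sideband level on the analyzer to an order of magnitude of timing error.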

I am sure electronic noise affects a DAC in many ways, but the two I know of are:

1. The clock oscillator will decrease in accuracy, which will mean more clock jitter (google "audio jitter" or something similar if you want to read up on what this is)

You know, this is where you go wrong. What you don't understand is that there is more than one clock domain involved, and they don't affect each other. The clock driving the Ethernet has nothing to do with the I2S clock. It's easy to understand if one ponders what would happen if the Ethernet link were changed from 100BASE-T to 1 Gbps... would the I2S clock go 10 times faster?

No.

QED.

//
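The arithmetic behind that argument can be sketched in a few lines of Python. This is only an illustration: the helper `i2s_bit_clock_hz` is a made-up name, and 32-bit slots with two channels are an assumed (though common) framing. The point is that the I2S bit clock is a function of the audio format alone, so the Ethernet link rate never enters the calculation.

```python
def i2s_bit_clock_hz(sample_rate_hz, bits_per_slot=32, channels=2):
    """I2S bit clock = sample rate x bits per slot x channels (assumed framing)."""
    return sample_rate_hz * bits_per_slot * channels

# Ethernet link rates, listed only to show they play no part in the result.
ETHERNET_LINK_RATES_BPS = {
    "100 Mbps": 100_000_000,
    "1 Gbps": 1_000_000_000,
}

for name, link_rate in ETHERNET_LINK_RATES_BPS.items():
    bclk = i2s_bit_clock_hz(44_100)  # link_rate is deliberately unused
    print(f"{name} link: I2S bit clock for 44.1 kHz audio = {bclk} Hz")
```

Both lines report the same 2,822,400 Hz bit clock for 44.1 kHz stereo audio.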

Again, if the data is correctly presented to the DAC, there can be no change by definition.

Didn't I already answer this? From a data-integrity standpoint, there is no change, but we are talking about audible changes in a DAC.

I am sure electronic noise affects a DAC in many ways, but the two I know of are:

1. The clock oscillator will decrease in accuracy, which will mean more clock jitter (google "audio jitter" or something similar if you want to read up on what this is)

This is so general as to be both correct and incorrect at the same time. You must first define "clock accuracy". You can have accuracy in frequency, accuracy in stability, and stability over both the long and the short term. Then you must... MUST include the specific spectrum of the clock variation, or you don't have even the start of enough information here. Clock accuracy and jitter are two different things with two different causes.

2. The analogue output section of the DAC, which deals with generating a very accurate analogue signal to the amplifiers, will add electronic noise into the mix and end up doing it less accurately.

True, but only in really badly designed systems. I would think an audiophile-grade DAC would have none of these problems.

However, we've been discussing Ethernet and TCP/IP, not DACs. The data presented to the DAC post network transmission has already been conditioned correctly by definition of the transmission system.

Do you have the capability to measure the signals on the 8 (or 4) Ethernet conductors?

Depends on what you would like to measure. What aspect are we looking for?

Again, if the data is correctly presented to the DAC, there can be no change by definition.

Weeeell, we'd better stick to facts here... timing can have an impact on a DAC, depending on the DAC. A jitter-sensitive DAC will produce distortion as a consequence of jitter even if the data is correct.

//

Weeeell, we'd better stick to facts here... timing can have an impact on a DAC, depending on the DAC. A jitter-sensitive DAC will produce distortion as a consequence of jitter even if the data is correct.

//

Correct, no dispute there at all. The audibility depends on the degree and spectrum of the jitter signal.

The point is, the network transmission system has already dealt with jitter because datagrams and packets are not expected to be either synchronous or sequential.

All correct, but look at the context. Network data transmission with Ethernet and TCP/IP layers assumes there will be issues in the time domain, sometimes massive latency, and some packets won't even arrive in sequential order because of a retransmission request. There must be a buffer somewhere to reassemble this mess into coherent and error-free data. The buffer size could be very significant, even for local data links from PC to DAC over Ethernet. But the buffer implies reclocking, by definition. Reclocking is where the jitter would be removed, or if badly done, introduced. Bad reclocking is highly unlikely.
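As a rough illustration of that buffer-then-reclock idea, here is a small Python sketch. It is not how any particular DAC or network stack is implemented: `ReorderBuffer`, `playout_schedule`, the sequence numbers and the arrival times are all invented for the example. Packets arrive late and out of order, the buffer puts them back in sequence, and the playout instants are then computed from the local sample clock only, so the arrival timing does not appear in the output timing.

```python
import heapq

class ReorderBuffer:
    """Hypothetical reassembly buffer: releases payloads in sequence order."""

    def __init__(self):
        self._heap = []      # (sequence number, payload), smallest first
        self._next_seq = 0   # next sequence number we are allowed to release

    def push(self, seq, payload):
        if seq >= self._next_seq:  # ignore packets that were already released
            heapq.heappush(self._heap, (seq, payload))

    def pop_ready(self):
        """Return every payload that is next in line, in order."""
        ready = []
        while self._heap and self._heap[0][0] <= self._next_seq:
            seq, payload = heapq.heappop(self._heap)
            if seq == self._next_seq:  # anything else here is a duplicate
                ready.append(payload)
                self._next_seq += 1
        return ready

def playout_schedule(n_samples, sample_rate_hz, start_s):
    """Output instants come from the local clock, not from arrival times."""
    return [start_s + i / sample_rate_hz for i in range(n_samples)]

# (arrival time in seconds, sequence number, samples): late and out of order
arrivals = [
    (0.0010, 0, [0, 1]),
    (0.0090, 2, [4, 5]),
    (0.0140, 1, [2, 3]),
    (0.0141, 3, [6, 7]),
]

buffer = ReorderBuffer()
samples = []
for _arrival_time, seq, payload in arrivals:
    buffer.push(seq, payload)
    for chunk in buffer.pop_ready():
        samples.extend(chunk)

times = playout_schedule(len(samples), 48_000, start_s=0.050)
print(samples)
print([round((b - a) * 1e6, 3) for a, b in zip(times, times[1:])], "us spacing")
```

The samples come out in their original order with uniform spacing. The sketch says nothing about the quality of the local clock itself, which is a separate question from network timing.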

But again, since packet transmission is not synchronous or even sequential, there must be buffered reclocking. Any reclocking completely takes care of data time domain issues.

Correct, there are jitter buffers. At the hardware level, most NICs have settings that can be changed via the device manager and that affect all data packets in and out of the NIC. Additionally, there can be other buffers at the software application layer for applications like audio and VoIP. I would imagine that any changes to audio rates and clocking would be applied by the software application for an attached audio device like a PCIe audio card or an I2S/USB DAC.

It appears that the OP has moved the goalposts from Ethernet clocks to noise problems with DACs.

All correct, but look at the context. Network data transmission with Ethernet and TCP/IP layers assumes there will be issues in the time domain, sometimes massive latency, and some packets won't even arrive in sequential order because of a retransmission request.

He hasn't said if the link uses TCP/IP, UDP or some form of non-retry protocol...

No they are not. DAC literature is written to market a product.

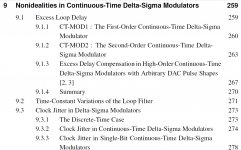

Please see Understanding Delta-Sigma Data Converters, 2nd Edition: https://www.wiley.com/en-us/Understanding+Delta+Sigma+Data+Converters,+2nd+Edition-p-9781119258278

Chapter 9 table of contents attached below.

measuring its result in audio is absolutely doable with free spectrum analyzer software like REW.

J-Test only shows deterministic jitter, which is not the only kind that can have an audible effect. The test is overly relied upon because it is cheap and easy to do.

He hasn't said if the link uses TCP/IP, UDP or some form of non-retry protocol...

He doesn't have to. If it's a commercial DAC on a home network, the principles all apply.

Again, if you really want to improve your audio, look into what's happening in the speakers and room. There are huge problems to solve there that will be very audible to anyone.

That's the real truth.

That's the real truth.

"I am not aware of any peer reviewed published research on the subject."

Hopefully people here understand that the term 'threshold of audibility' as used in psychoacoustics is not a hard limit. Rather, it is an estimate of an average value for a particular population (i.e. half the people in the population are not expected to be able to hear below the threshold).

DAC literature is written to market a product.

That (in bold) is the key word which many unsuspecting consumers / DIYers fall for.

Please see Understanding Delta-Sigma Data Converters, 2nd Edition: https://www.wiley.com/en-us/Understanding+Delta+Sigma+Data+Converters,+2nd+Edition-p-9781119258278

Chapter 9 table of contents attached below.

J-Test only shows deterministic jitter, which is not the only kind that can have an audible effect. The test is overly relied upon because it is cheap and easy to do.

I'm not disputing that jitter can have an audible effect. That's not in question. The degree of audibility is not a fixed single figure because the jitter signal is not of a known spectrum and magnitude. And then there's masking.

I'm stating, and re-stating, the fact that network data transmission assumes asynchronous and non-sequential datagrams and data packets. Any data corruption of any kind, including in the time domain, must by definition be addressed by the data transmission system and the data interface of a network-connected DAC. Jitter, in this case, is simply not a factor, and more to the original point of the thread, cannot and will not be addressed by attempting to change the power regulation and power circuit topology in a NIC.

The buffer size could be very significant, even for local data links from PC to DAC over Ethernet. But the buffer implies reclocking, by definition. Reclocking is where the jitter would be removed, or if badly done, introduced. Bad reclocking is highly unlikely.

I was talking about the DAC clock, which handles the actual audio stream (PCM or DSD), and in this case jitter can be very audible. Electronic noise will decrease the accuracy of the DAC clock, which will result in more so-called clock jitter.

I was talking about the DAC clock, which handles the actual audio stream (PCM or DSD), and in this case jitter can be very audible. Electronic noise will decrease the accuracy of the DAC clock, which will result in more so-called clock jitter.

I know you were. But electronic noise affecting a DAC clock is entirely situational, relating to a specific design flaw. Your suggested mods have nothing to do with that issue at all.

It appears that the OP has moved the goalposts from Ethernet clocks to noise problems with DACs.

No, other people did that for me 🙂

But they are related, since electronic noise reduces the accuracy of the clocks, and clocks in turn produce electronic noise.

But let's take a step back. Here are the 3 things in a digital transmission that can affect sound (this is true for Ethernet, USB, coax and even I2S):

1. Bit errors, which is typically not a problem (tweaks or no tweaks)

2. Audio stream jitter for SPDIF, and internal clock jitter inside the DAC

3. Electronic noise coming into the DAC with the digital data

1 is usually not a problem unless cables or equipment are faulty, and we can disregard it in this context. 2 is not applicable to Ethernet since it's not time-dependent (note that Ethernet has jitter, but it's not the jitter mentioned in 2).

But Ethernet and all electrical connections into a DAC contribute to 3, and bad timing, clock phase noise, switching regulators, RFI, pollution from the mains grid and much more affect this. And it's very audible on a transparent hi-fi system, even with well-designed DACs like the RME ADI-2, which I use.

Hopefully people here understand that the term 'threshold of audibility' as used in psychoacoustics is not a hard limit. Rather, it is an estimate of an average value for a particular population (i.e. half the people in the population are not expected to be able to hear below the threshold).

Yes, agreed. I myself take no hard position on the audibility of conversion jitter. Indeed, as I indicated, this topic is in dispute.

I was talking about the DAC clock, which handles the actual audio stream (PCM or DSD), and in this case jitter can be very audible. Electronic noise will decrease the accuracy of the DAC clock, which will result in more so-called clock jitter.

As a consequence of the clock quality in a switch... right?

//

As a consequence of the clock quality in a switch... right?

//

Those are only indirectly related: the accuracy of an oscillator is decreased by electronic noise, and noise can come from Ethernet and the switch/NIC. And all clocks produce phase noise (some very much so).

So in a roundabout way, the clock in the switch/NIC can affect the clock in the DAC.
