So the question is not "can we localize on this or that frequency range", but "what frequency range does this the fastest". I think that there is only one answer to that question.

I have the feeling that you're looking for a question that will fit your predetermined answer.

As Pano's experiment clearly showed, the brain doesn't necessarily latch on to the "fastest" signal. It does get confused when slower components don't follow its initial formulation.

The problem is 2-speaker stereo itself. The room can make matters worse, much worse.

See the Benjamin/Brown graphs I had posted.

Griesinger shows the same:

(Griesinger, "Pitch, Timbre, Source Separation")

I think all research tells us we need two ears to localize. Yet I have met people with one good ear who know where I am whenever I speak to them. Does anyone have a reference to research on single-ear localization?

Which is faster and most accurate - a crossbow bolt, or bullet? Both can kill you if they hit you in the head. To ignore one, just because the other is faster, is a risk some may not be willing to take.

I am asking "What frequency range provides the fastest and most accurate determination of sound localization."

What is the practical need to know which frequency range is more quickly localized? To design a "Home Theater in a Box" system?

The received knowledge in audio is that bass is omnidirectional, so it doesn't matter where the low frequency parts of the spectrum come from, as long as they exist. While that may be a good cheat for size, price, WAF, etc., it isn't high fidelity. I'm so tired of hearing and reading what is clearly wrong, that I've done tests to prove (at least to myself) just how wrong it is.

When the research showed up in this thread indicating that localization is dominated by content >700Hz, it was extrapolated into the idea that <700Hz is not important to localization, therefore we can safely ignore it. I, and others, remained skeptical about how well this would apply to a loudspeaker system. I did some simple experiments to verify this.

Yes, the upper mid-range of frequencies gets our attention, for whatever reason. Yes, if we have to cheat with our systems, that's the range to favor. But ignoring the directivity of the lower registers is no way to achieve high fidelity.

My intent is simple: to debunk the audio myth that bass is omnidirectional and can't be localized, therefore we can put it anywhere and not worry. Raising the disregard for directivity to 700Hz is just plain silly. You may dodge the bullet, but that arrow sticking out of the back of your head is going to hurt.

... the brain doesn't necessarily latch on to the "fastest" signal.

It will if it can, that's the whole point. If it can, then it will lock into the image; if it can't, then it resorts to other, less effective methods and the image becomes "muddy".

But ignoring the directivity of the lower registers is no way to achieve high fidelity.

No one "ignores" it, it's just not as important. Why do you keep saying otherwise?

I have the feeling that you're looking for a question that will fit your predetermined answer.

I am looking for the truth, not just to "win" the argument.

Raising the disregard for directivity to 700Hz is just plain silly. You may dodge the bullet, but that arrow sticking out of the back of your head is going to hurt.

mmm 700 does seem a bit high, yes. 100 or 200, in a room, why not after all.. but 700? Basically nearly all the vocal fundamentals 😕

I am looking for the truth, not just to "win" the argument.

Did you try the barrier? Did you try Pano's files?

Looking for the truth means trying things that you consider unimportant but you only do the things you consider important. That's not how it works.

Just out of curiosity, what frequencies are in play when a baby cries?

Looked at some samples and the fundamentals were around 400Hz.

No one "ignores" it, it's just not as important. Why do you keep saying otherwise?

Not testing for its effect, continually advocating the same design that doesn't account for it, and arguing against it..

-last time I checked, that's actually a bit beyond simply ignoring it. 😉

Again though, its importance (if indeed it is important) would relate to the bandwidth of reproduced sources (in our stereo context).

In the context of <700 Hz or >700 Hz, it really doesn't matter if an "image's" localization would otherwise be dominated by spectral content >700, IF in fact it doesn't have any significant spectral content >700 Hz.

Funny, I understood it completely.

One point on the sphere absorption calculation presented earlier: it's wrong. The SPL reaching the boundary of the larger sphere has dropped by a large amount (depends on the radius), and so taking 1% of this smaller amount results in a smaller loss of energy, not a greater loss. The whole derivation is wrong.

My math is simple and complete. Use your math to prove mine wrong. Dropped by a large amount, depends on radius..... blah blah blah. The sound energy reflected back when a given amount of energy is projected on a given surface will be twice as much as when the same amount of energy is projected on a surface with the same absorption coefficient and twice the area.
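For anyone who wants to check the arithmetic on a computer, here is a minimal sketch. It does not reproduce the earlier derivation (which isn't quoted here); it assumes an ideal point source at the center of a sphere, no air loss, and looks only at the first reflection. The function name and the 1 W source power are made up for illustration; the 1% absorption figure is the one mentioned above.

```python
import math

def first_reflection_power(source_power_w, radius_m, absorption):
    """Power absorbed and reflected when a centered point source's
    output first reaches an enclosing sphere (idealized, lossless air)."""
    # Inverse-square law: intensity at the wall falls with radius squared.
    intensity = source_power_w / (4.0 * math.pi * radius_m ** 2)  # W/m^2
    # The wall area grows with radius squared.
    area = 4.0 * math.pi * radius_m ** 2                          # m^2
    incident = intensity * area                                   # W
    absorbed = absorption * incident
    reflected = incident - absorbed
    return absorbed, reflected

small = first_reflection_power(1.0, 1.0, 0.01)  # 1 m radius, 1% absorption
large = first_reflection_power(1.0, 2.0, 0.01)  # 2 m radius, 1% absorption
print(small, large)
```

Under these assumptions the lower intensity and the larger area cancel, so the power absorbed on the first bounce comes out the same for both radii. What happens over time (how often the sound hits the wall) is a separate question that this sketch does not cover.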

Markus,

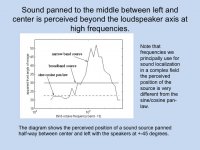

That is an easy experiment which I just did, the 'Sound panned to the middle between left and right... etc.' I suppose everybody will have a tone generator in his PC as well, plus some speakers hooked up, so it only takes 5 minutes.

When I sweep through the frequencies panned 1/2 left, the location of the tone remains exactly at the same spot.

I am interested to hear if others share this experience. What to make of it?

In the context of <700 Hz or >700 Hz, it really doesn't matter if an "image's" localization would otherwise be dominated by spectral content >700, IF in fact it doesn't have any significant spectral content >700 Hz.

So what does not have "significant" spectral content >700Hz?

Markus,

That is an easy experiment which I just did, the 'Sound panned to the middle between left and right... etc.' I suppose everybody will have a tone generator in his PC as well, plus some speakers hooked up, so it only takes 5 minutes.

When I sweep through the frequencies panned 1/2 left, the location of the tone remains exactly at the same spot.

I am interested to hear if others share this experience. What to make of it?

Play single sines with pauses in between. Is localization still rock solid?

Did you also try narrow band noise?
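If your tone generator can't do bursts with pauses, a test file is easy to make. The following is only a sketch: the constant-power pan law, the reading of "1/2 left" as a pan position of -0.5, the burst and pause lengths, and all function names are my assumptions, not anything specified in the posts above.

```python
import math
import struct
import wave

def pan_gains(pan):
    """Constant-power pan: -1 is hard left, 0 is center, +1 is hard right."""
    angle = (pan + 1.0) * math.pi / 4.0  # maps [-1, 1] onto [0, pi/2]
    return math.cos(angle), math.sin(angle)

def tone_bursts(freqs_hz, pan=-0.5, tone_s=1.0, pause_s=0.5,
                sample_rate=48000, level=0.5, fade_s=0.02):
    """Return (left, right) float frames: one sine burst per frequency,
    each followed by a stretch of silence."""
    gain_l, gain_r = pan_gains(pan)
    n_tone = int(round(tone_s * sample_rate))
    n_pause = int(round(pause_s * sample_rate))
    n_fade = max(1, int(round(fade_s * sample_rate)))
    frames = []
    for f in freqs_hz:
        for n in range(n_tone):
            # Linear fade in and out so the burst has no click at its edges.
            env = min(1.0, n / n_fade, (n_tone - 1 - n) / n_fade)
            s = level * env * math.sin(2.0 * math.pi * f * n / sample_rate)
            frames.append((gain_l * s, gain_r * s))
        frames.extend([(0.0, 0.0)] * n_pause)
    return frames

def write_wav(path, frames, sample_rate=48000):
    """Write float frames in [-1, 1] as 16-bit stereo PCM."""
    data = b"".join(struct.pack("<hh", int(l * 32767), int(r * 32767))
                    for l, r in frames)
    with wave.open(path, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(data)
```

Something like `write_wav("bursts.wav", tone_bursts([100, 200, 400, 700, 1000, 2000]))` gives a file to play while keeping your head still. The fades matter: a hard onset click is broadband and could be localized on its own.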

Markus, I sweep manually and there is an automatic pause when the generator locks onto the next frequency, so that came free with the setup. Of course it is important to keep your head in the same position.

I have to do a lot of fiddling to generate narrow band noise, and I don't see how that could influence the outcome, so for the moment I hope someone else is set up for this and will share his experience.
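Narrow band noise doesn't have to mean a lot of fiddling with filters. One rough way, sketched below, is to add up many sines with random frequencies and phases inside the band. This is an approximation, not filtered white noise, and the function name, the number of components and the defaults are all just my choices.

```python
import math
import random

def narrowband_noise(center_hz, bandwidth_hz, duration_s=1.0,
                     sample_rate=48000, n_components=50, seed=0):
    """Approximate band-limited noise as a sum of random-phase sines
    whose frequencies all lie inside the requested band."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    lo = center_hz - bandwidth_hz / 2.0
    hi = center_hz + bandwidth_hz / 2.0
    comps = [(rng.uniform(lo, hi), rng.uniform(0.0, 2.0 * math.pi))
             for _ in range(n_components)]
    n_samples = int(round(duration_s * sample_rate))
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        out.append(sum(math.sin(2.0 * math.pi * f * t + p) for f, p in comps))
    peak = max(abs(s) for s in out) or 1.0
    return [s / peak for s in out]  # normalized to a peak of 1.0

noise = narrowband_noise(500.0, 100.0, duration_s=0.1)
print(len(noise))
```

The output is a mono list of floats; it can be panned and written to a file the same way as any other test signal.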

So what does not have "significant" spectral content >700Hz?

Depends on the notes created/played, and the instrument.

A lot of bass guitar, lower synths, and likely a fair amount of the lower-range of acoustic instruments (or at least those with a muted harmonic signature).

There are probably spectrograms available for various instruments..
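Short of digging up spectrograms, "significant content above 700 Hz" can be put into a number for any recording you have. Here is a rough sketch: a naive DFT (slow, but no libraries needed) that reports the fraction of spectral energy above a cutoff. The function name is made up, and the test signal is synthetic, a 100 Hz tone with a weaker 1000 Hz component, not a measurement of any real instrument.

```python
import cmath
import math

def energy_fraction_above(samples, sample_rate, cutoff_hz):
    """Fraction of spectral energy above cutoff_hz, using a naive DFT.
    DC and the Nyquist bin are skipped."""
    n = len(samples)
    below = above = 0.0
    for k in range(1, (n + 1) // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        energy = abs(coeff) ** 2
        if k * sample_rate / n > cutoff_hz:
            above += energy
        else:
            below += energy
    total = above + below
    return above / total if total else 0.0

# Synthetic example only: 100 Hz at amplitude 1.0 plus 1000 Hz at amplitude 0.5.
rate = 4000
signal = [math.sin(2 * math.pi * 100 * i / rate)
          + 0.5 * math.sin(2 * math.pi * 1000 * i / rate)
          for i in range(400)]
print(energy_fraction_above(signal, rate, 700.0))
```

For this synthetic signal the energies are in the ratio 1 : 0.25, so about 20% of the energy sits above 700 Hz. Keep the analysis window short (a few hundred samples), since the naive DFT gets slow quickly.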

To do Griesinger justice: I didn't quote him. For quotes I would definitely use a quote frame or at least quotation marks.

Since I now understand this was directed at me, let me again state that Griesinger is kitchen sink science, and this quote only proves it.

Your "kitchen sink science" remark tells me too much about your personality and not enough about your scientific qualification to judge this man's work in this way.

I'm not sure that I understand you here. Are you saying that the phase relation between a fundamental and its harmonics is not stable over time?

No way each harmonic is glued to its fundamental frequency since all speaker systems exhibit phase shifts. Experiments, even with excessive phase shifts, have not demonstrated any detrimental effect on localization.

With this sort of listening experiment everybody can be right on his money. Which is in no way criticizing Pano's work. I always learn a lot from listening to (or measuring from) his files. 🙂

But what I really don't understand is how my advice on phase panning can be seen as counterproductive, because Pano's .wav files demonstrate I was right on the money there.

But didn't you say that "5ms time delay is so long, that the signal from that side gets strongly masked. It becomes almost completely inaudible to me, unless I make an effort to hear it" in post #2585?

I would put it this way: If something is coming 5 ms late to my ears and doesn't even fully resemble my original signal, my brain would think of it as an independent new sound.

Rudolf
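To put the 5 ms figure in perspective, or to make your own delayed test files, a small sketch follows. The 48 kHz sample rate, the 343 m/s speed of sound (dry air at about 20 °C) and the function names are my assumptions; this is not how Pano's files were made.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C

def delay_samples(delay_ms, sample_rate=48000):
    """Number of whole samples corresponding to a delay in milliseconds."""
    return int(round(delay_ms * sample_rate / 1000.0))

def delay_right_channel(mono, delay_ms, sample_rate=48000):
    """Return (left, right) frames where the right channel carries the
    same signal as the left, delayed by delay_ms."""
    d = delay_samples(delay_ms, sample_rate)
    left = list(mono) + [0.0] * d   # pad the end so both channels match in length
    right = [0.0] * d + list(mono)  # leading silence is the delay
    return list(zip(left, right))

def path_difference_m(delay_ms):
    """Extra path length that would produce the same arrival delay."""
    return SPEED_OF_SOUND_M_S * delay_ms / 1000.0

print(delay_samples(5.0), path_difference_m(5.0))
```

At 48 kHz a 5 ms delay is 240 samples, and it corresponds to a path difference of roughly 1.7 m, far more than the spacing between two ears.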

Depends on the notes created/played, and the instrument.

A lot of bass guitar, lower synths, and likely a fair amount of the lower-range of acoustic instruments (or at least those with a muted harmonic signature).

There are probably spectrograms available for various instruments..

Is that shorthand for "I dunno"?
