Follow up on Real-Time Clock hardware on the Pis:

Earlier in this thread (somewhere) I mentioned that I had purchased and was installing a real time clock (RTC) on each of the Pis I use for playback. I wanted to see how this influenced the time synchronization performance. I have some new info on this that I thought I would share.

First, some background about timekeeping on the Pi (and most other computers). The operating system has mechanism(s) for keeping time. When the computer is shut off, these cease to function. When the computer is powered up again, it has no way to know what time it is, so it needs to refer to something that kept time while it was "asleep". Typically this has been a battery-powered hardware clock. For the Pis there are a couple of versions that use either the DS1307 or DS323x ICs. If you want the Pi to have a clue about the date and time when it is powered on and there is no connection to the outside world (e.g. an internet connection of some kind) then you must install one of these. But if you have an internet connection, the Pis can simply ask a helpful time server what time/date it is and then set the software clock to that. Both the RTC and the mechanism to set the "fake" clock via the internet only apply to the time at bootup - after that they are not used at all by the OS unless specifically told to do so.

So, is a hardware RTC helpful for accurate time keeping on the Pi? Well, it depends on what you mean by "accurate". I want/need time on my Pis to be the same down to at least 1 msec (of each other). It turns out that the RTCs that I have used are not good enough for this, because the RTC does not keep perfect time AND it seems that there is a time lag introduced when the time is stored to the RTC on system halt and then read back into the OS on bootup. On my Pis this seems to be around 0.5 seconds in total, so the time set by the RTC at bootup would be no better than 500 milliseconds away from "true time". So the RTC is not helpful for getting the accuracy that I seek.

There is software called NTP (network time protocol) that can continuously and periodically query internet time servers for the "true time" and try to compensate for the drift of the local software clock. I next tried to see if using NTP on each Pi would help things. NTP does take some time (up to a few hours) to settle down and get the time sync'd up to "true time". I was able to get about 1-3 msec synchronization via internet time servers using a wireless internet connection (that ain't bad!). But what I found was that this drifted around and between the machines one could drift away from the other so that there was up to 5-6 msec of offset.
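For anyone curious where an offset figure like the ones above comes from: each exchange with a time server yields four timestamps, and the standard NTP calculation on them is simple arithmetic. Here is a minimal sketch. The function name and the sample numbers are made up for illustration and are not taken from my logs.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Standard NTP calculation from the four timestamps of one exchange.

    t1: client transmit time (read from the client clock)
    t2: server receive time  (read from the server clock)
    t3: server transmit time (read from the server clock)
    t4: client receive time  (read from the client clock)
    All values are in seconds.
    """
    # How far the client clock is behind (+) or ahead (-) of the server
    offset = ((t2 - t1) + (t3 - t4)) / 2.0
    # Time spent on the network, excluding the server's processing time
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


# Illustrative numbers only: a client running 3 ms behind the server,
# 10 ms of network travel each way, 1 ms of processing on the server.
offset, delay = ntp_offset_and_delay(t1=100.000, t2=100.013, t3=100.014, t4=100.021)
print(f"offset = {offset * 1e3:.1f} ms, round-trip delay = {delay * 1e3:.1f} ms")
```

The calculation assumes the outbound and return trips take the same time. Any asymmetry between the two lands directly in the offset, which is probably one reason a jittery wireless path to distant servers gives noisier results.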

Finally, I decided to implement a strategy where I used NTP to sync up my music server (which is connected to my DSL modem by hard cabling) to internet time servers. I sync all other music systems (the Pis) to my music server over WiFi. This approach proved to be much more accurate and stable. The hardwire connection of the server reduces the jitter of the time data provided by the internet time servers. I am able to get its offset to continuously be below about 500 MICRO seconds (0.5 msec) from true time. That's amazing. It's my own personal Stratum 2 time server for the rest of my audio system. Because the Pis' NTP daemons only need to get info over the LAN and not via the internet, they also experience very low jitter and I am able to get them to sync to my music server's time to better than 1 msec, including when they heat up and cool down, CPU demand changes, etc. So the entire music system is now synchronized to better than 1 msec, often to within 500 usec.

But there is one practical problem - whenever the systems are shut down and have to boot up again (including the music server), if there is an RTC onboard they initialize the date and time to something close to but not exactly the "true time". If the time after bootup is tens to hundreds of milliseconds away from true time then NTP has to work hard to adjust the local clock, and the error goes through some decaying oscillations before finally establishing the ultimate close sync that I mentioned above. That process may take 6 hours or more to settle. This means that any two machines will be too far off for audio playback to be completely glitchless, and now and then there is a very brief "glipp" sound as the audio is re-sync'd when the clock adjustments have gone too far for the buffer to compensate (in my estimation). Sometimes the (currently two) machines do not get back into sync, and I have to reset the streaming audio to get them to behave (this is only during the settling process after a large startup error). Once everything is well sync'd there are no glitches and audio is perfect.

It seems that having an RTC onboard actually makes things worse. With an RTC the time after bootup is hundreds of msec off and NTP might take hours to settle. Without an RTC the system simply queries the time from some server, sets the clock to that, and then NTP takes it from there. It turns out that this process syncs the local clock to within a couple of msec or better of "true time" so NTP settles much faster and you can be up and running quickly, e.g. in a matter of a few minutes. The best case scenario seems to be a local time server (e.g. my Stratum 2 server implementation) that is never shut off. When the Pis boot up they can set their time to that over the local connection and it's very accurate. The systems are then all on the same timebase almost immediately. NTP then takes care of the rest from there.
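To give an idea of what "ask a server and set the clock" amounts to, here is a rough sketch of a one-shot query in the style of SNTP. This is not what my systems actually run; in practice a tool such as ntpdate or sntp does this job properly. The function names are my own, and the hostname in the usage note is a placeholder.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01)
NTP_EPOCH_OFFSET = 2208988800


def build_request():
    """48-byte client request: leap=0, version=3, mode=3 (client)."""
    return b"\x1b" + 47 * b"\x00"


def parse_transmit_time(packet):
    """Return the server's transmit timestamp as Unix seconds (float)."""
    # The transmit timestamp sits in bytes 40..47:
    # 32-bit seconds followed by a 32-bit binary fraction of a second.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def query_offset(host, port=123, timeout=2.0):
    """Rough offset in seconds of the local clock relative to `host`.

    Positive means the local clock is behind the server.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        t_send = time.time()
        sock.sendto(build_request(), (host, port))
        packet, _ = sock.recvfrom(512)
        t_recv = time.time()
    # Simplification: assume the reply was stamped halfway through the round trip
    return parse_transmit_time(packet) - (t_send + t_recv) / 2.0
```

Calling something like `query_offset("musicserver.local")` (a made-up hostname) on a Pi would return the error a one-shot clock set at boot could remove. Over a quiet LAN the round trip is short, so the halfway assumption costs very little accuracy.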

Hopefully this info is helpful for someone who is trying to set up a music system or who needs good synchronized local time across several machines.

Of the Volumio/Moode/Rune trio you mention, do any of those satisfy all my criteria above? I think I have looked at these, and lots of others, and none of the ones I found really met all my needs/desires. But I am not married to VLC and if something better comes along I would be more than happy to try it out.

These three are raspberry pi images that are set-up with mpd as the music server (same as the one Richard Taylor wrote about), plus webserver for gui and other nice things (including syncing between different pi - haven't tried this but assume it's not a tight tolerance as you have). My reason for mentioning these was more for the slick interface solutions than the actual player itself. Volumio has the latest mpd version allowing pipe-out while Moode requires an update. Rune is based on arch, and I haven't used it much. I like the moode interface the best. They can stream web radio, dlna or airplay so I assume they can be set-up to stream any website. Their strength is local playback. But, the nicest things with these very similar set-ups are the clients you can download to android, etc. or just use the browser interface. You can set the output to any alsa device you wish plus straight to ecasound.

When I played music from ubuntu, I liked Clementine, Banshee, DeaDBeef and Audacious. They can all set alsa devices directly, but I never tried streaming or remote control so I'm not sure if they can handle that.

Back to the ladspa filters - trying to set it up and measure both before and after implementation. In the past I have always measured using REW on a windows laptop with a USB mic, but I haven't figured out how to do this via the pi2 in headless mode. In other words, REW sweep signal to ecasound to amplifier. On a normal laptop/desktop with line-in I could just have ecasound listen to the input, but the raspberry pi doesn't have line-in without adding extra hardware. Is it doable to run REW directly from the pi from commandline to get the measurements or via wifi/ethernet from my laptop?

The whole purpose of my streaming audio system is to implement multiple, independent, synchronized playback devices...

I applaud your efforts toward this goal, Charlie. The time synch work has been an interesting read.

In the past I have implemented wired 'extensions' of a main system and I have to say, it was not satisfying sonically. With phase and cancellation transitions among speakers, it was inferior to simply cranking up the well-implemented 'main' stereo pair. So I find myself wondering what kind of space-filling and acoustic issues you may be facing. In my imagination, I guess that a multi-source wifi linkage system might better suit a sound reinforcement system rather than a system intended for accurate reproduction of carefully staged recordings. Are uses like that in the cards for you?

Cheers!

When I was testing out my LADSPA plugins I used a computer that had onboard audio, including an input jack. This allowed me to send an impulse/get the filtered output to/from the mobo connected to my test gear. Since the Pi doesn't have any input functionality you are kind of out of luck if you need to input analog audio to it unless you add on an ADC somehow. If you streamed to it over ethernet you could achieve the same thing. I have done this and it works great.

I'm not sure what you did exactly when you refer to "wired extensions". Let's be clear - streaming over WiFi is NEVER going to be as good as a direct connection using a wire. With a WiFi connection you can't have the highest bit rates. You can't have the highest sampling rates. You may need to accept some level of compression of the audio stream to have a sufficiently low bandwidth requirement when you are using inexpensive 802.11n WiFi dongles. But all of that doesn't matter because, judging from my own system after extended listening and testing, it works great, sound quality is great, and there are few to no problems with playback, synchronization, and so on.

I'm not trying to push the envelope in terms of performance - there is no need for such flamboyance. It's not a "37-50" 2011 Screaming Eagle Oakville Cab, it's a light and enjoyable Cupcake prosecco. Refreshing, enjoyable, affordable (and I highly recommend it!). This is DIY after all and there is lots of expensive commercial gear to spend your money on. Just don't ask the waitress, er..., salesperson for a recommendation! 😱

So many approaches to DIY and they are all good! My usual M.O. is to build, as cheaply as possible, a sound that I could never ever afford from commercial gear. The current project (6ch top-performing DAC) is way over budget, however! 😱

...wish I could make it to Burning Amp but... perhaps another year...

I finally took the plunge and bought a tablet, the Lenovo Tab 2 A10-70 (WiFi only version). I'm not a user of modern "mobile" devices and this was my first experience with something powered by Android, without a hard keyboard or hardware pointing device, etc. I'm really impressed with it on many levels!

The tablet was purchased for the sole purpose of working as a portable interface for my music server, to control VLC remotely. Until this point, I have been connecting from my Windows laptop using an X-windows server over SSH and I wanted to try the same thing from the tablet. After a few false starts I managed to get ConnectBot and XSDL X-windows server working pretty well for this purpose. I can choose a low resolution and have VLC fill up the entire tablet screen. This makes it a little easier to click on the right thing using a fat finger. The one problem that I have with the native Qt interface for VLC is that the volume control is pretty small. It's difficult to make small adjustments to the volume, all too easy to accidentally set the volume to max, etc. VLC has keyboard shortcuts, but the default for the volume is CONTROL+up-arrow/down-arrow and the native Android keyboard didn't come with these keys. I did manage to find an add-on called the "Hacker's Keyboard", and after I downloaded and installed it volume control is a total breeze. HK is also pretty useful in general, e.g. in ConnectBot.

While I was looking into ways around the volume control size problem, I discovered that you can install custom skins for the Qt interface. The VLC web site has about 20 of these ready-made, but there is also a skin editor that you can use to build your own custom skin. I might do this so I can make my own large volume control slider and customize other aspects of the interface. I'm not so much into making it LOOK a certain way as much as I want to make it FUNCTION a certain way. It's pretty cool that VLC makes this kind of customization possible!

Finally a break-through!

What has been holding me back from implementing the ladspa based cross-overs in my living room is that it has to be kids/wife friendly plus be able to play spotify and other closed systems. Most programs that I have found can pipe out local files or public webstreams to ecasound, but I hadn't been able to find a way to get spotify and other paid streaming services to pipe out the audio to ecasound in headless mode (without messing with the alsa config files). I have also found that many of the systems require a little bit of tweaking to play, which I don't mind, but which will not pass the "ease-of-use" test for the rest of the family.

When I looked into some of the available syncing schemes out of curiosity from the discussion in the thread, I came across shairport-sync which can sync multiple devices over the apple airplay protocol. I also found out that recent versions can be compiled to pipe out raw pcm to stdout which can be set-up as an input to ecasound. Even better, the config files for shairport-sync are already set-up with a wait command that doesn't let it start until a second program (e.g. ecasound) has started.

The biggest benefit is that I can now have the shairport-sync with ecasound listen on stdin until airplay is connected with just a click on my ipad (for windows it requires something like airfoil) and it can then forward all audio from the ipad to the raspberry pi - spotify, youtube, TV apps, web radio stations, etc. In other words, my kids can use their favourite app and then click and play whatever they wish (with hopefully no tweaking required). Volume is even controlled by the physical volume buttons on the tablet.

The airplay protocol doesn't do any high resolution format, but neither do most streaming services or the DAC in my living room set-up, so this is a good workable solution for me. I have to admit that I'm not the biggest fan of Apple's products, and will continue to look for something android friendly, but this will work for now. I have only tried it so far with one simple test filter so I hope it will work with a more complex multi-channel stream.

It also has a built-in NTP sync protocol set-up with verbose output showing the sync corrections in real time. The wiki claims: "the limits are ± 88 frames – i.e. plus or minus two milliseconds". I haven't tried this yet, but hope to play around with it later. Now it's time to write some ladspa filters!
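As a quick sanity check on that figure, here is the frames-to-time arithmetic, assuming the usual 44.1 kHz airplay sample rate (my assumption, the quote itself doesn't state the rate). The helper names are just for illustration.

```python
# Assumed sample rate: 44.1 kHz (CD-rate audio); not stated in the wiki quote
SAMPLE_RATE_HZ = 44100


def frames_to_ms(frames, rate=SAMPLE_RATE_HZ):
    """Duration in milliseconds of a number of audio frames."""
    return frames / rate * 1000.0


def ms_to_frames(ms, rate=SAMPLE_RATE_HZ):
    """Number of audio frames (possibly fractional) in a time span."""
    return ms / 1000.0 * rate


print(f"88 frames = {frames_to_ms(88):.2f} ms")
print(f"0.5 ms    = {ms_to_frames(0.5):.2f} frames")
```

So 88 frames is indeed just under 2 msec at that rate, and the roughly 0.5 msec sync mentioned earlier in the thread corresponds to about 22 frames.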

@Charlie - do you have a forum thread somewhere with good discussions and hints for your ACD spreadsheet tool?

I hope you like the tablet. It's great for viewing and clicking but I agree, typing is a bit tricky. A good android app for connecting via SSH is JuiceSSH. It has a lot of functionality and the best part is that it adds two rows of special keys to the standard keyboard. I have only used the smartphone version but I assume they are the same.

I'd like to know what you think of shairport for multiple speakers. You may find that the stereo center shifts around with that level of sync. If the actual sync that you are able to achieve is much better than the quoted +/-2msec, then you've definitely got something there. I am able to get synchronization to within about 500 usec in my setup, and I find that works very well.

Thanks for mentioning JuiceSSH. I had looked at it, but to allow X forwarding you need to get the pro version (OK it's only $1.50) so I tried ConnectBot first and found that it works just fine. Honestly, I would rather have a true Android client interface to my server player and not have to use X-windows but so far just about everything I look into lacks something or another. My best bet might be MPD if I decide to go that route.

As far as info on my ACD package, there is a pretty extensive technical manual and a tutorial. Unfortunately I haven't released a version that has the exact same filter implementation used in the LADSPA plugins. It's on my to-do list! A couple of the LADSPA filters are slightly different (the shelving filters for instance) and I added a couple extra types with the LADSPA plugin. Otherwise the current ACD digital filters (symmetric type) should be identical. I'm hoping to release the LADSPA version of ACD by December. Earlier if people bug me about it...

What has been holding me back from implementing the ladspa based cross-overs in my living room is that it has to be kids/wife friendly plus be able to play spotify and other closed systems.

Sounds great! I'm on a relatively parallel track, but slightly different priorities. The need for easy use and access is fundamental, of course, so wireless control is essential. I have nothing against the MPD-based renderers, but found that a combo of Squeezelite and Logitech Media Server were just the ticket because I liked the control app iPeng HD.

To contribute here - I also found that some of the tablet SSH clients have features that make life super convenient for command line interactions. For iOS I'm using WebSSH. Complex commands can be stored, given nicknames, and executed with a mere touch! So for example, you could have a choice of different ecasound configurations and easily switch among them using a simple tap within an SSH window.

In my case, I'm building a BBB player appliance and I was thinking about how to incorporate and program a nice OLED display to report basic system operational parameters [e.g., sample rate, program source, etc.] Then it struck me - doh! All the info I could ever want is available with simple touch commands via the tablet SSH interface! ...just have to store the commands once. What a lot of work THAT saved!

You guys keep having fun! 🙂

I am working on an updated version of the Active Crossover Designer tools that will replace the miniDSP filter calculations with their LADSPA counterparts. This will make it possible to design a loudspeaker crossover (up to 4-way) and implement it using the LADSPA filters. Because I am adapting the existing ACD format, the general principles of ACD will still apply (e.g. I don't have to write a new manual!).

I will announce the release of the new ACD version in the software forum when it is ready for download.

Well, after a good day's work I have managed to get LADSPA filters working in ACD. I've decided to name the new tools "ACD-L" so that I can distinguish them from the original version. ACD-L fully supports the ACDf and mTAP LADSPA plugins that I wrote for doing crossovers in software under Linux. I no longer have a use for the original "miniDSP" version, but I will keep it around for others to use.

Once the crossover is developed it's very easy to implement it. ACD-L generates the text string that describes all of the LADSPA filters for ecasound. Copy the text out of the ACD-L tool and paste it into a text file on the machine that is running ecasound. Configure ecasound to read and use the filters in the text file (you do this only once, really). That's it.
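As a very rough illustration of that last step, the sketch below takes the pasted filter text and assembles an ecasound argument list around it. It assumes the text is a whitespace-separated run of ecasound chain operators (such as `-el:` LADSPA entries) and that a single chain is enough. The function name, chain name, filter labels and device strings are all hypothetical placeholders, and this is not necessarily how the setup described above is wired.

```python
import shlex


def build_ecasound_args(filter_text, input_spec, output_spec, chain="xover"):
    """Assemble an ecasound argument list for one chain (illustrative only).

    filter_text is assumed to hold operators such as "-el:label,param,...",
    separated by whitespace, exactly as pasted into the text file.
    """
    operators = shlex.split(filter_text)
    unexpected = [op for op in operators if not op.startswith("-")]
    if unexpected:
        raise ValueError(f"not ecasound options: {unexpected}")
    # -a: names the chain, -i: and -o: set its input and output objects.
    return ["ecasound", f"-a:{chain}", f"-i:{input_spec}", *operators,
            f"-o:{output_spec}"]


if __name__ == "__main__":
    # Placeholder filter labels and parameters; real ones come from ACD-L.
    pasted = "-el:hypothetical_lowpass,2000 -el:hypothetical_delay,0.5"
    args = build_ecasound_args(pasted, "song.wav", "alsa,default")
    print(" ".join(shlex.quote(a) for a in args))
```

A real multi-way crossover would need one chain per driver output, so treat this only as a picture of where the pasted text ends up on the command line.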

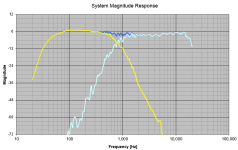

As an example of what you can do with the tools, attached is ACD-L's system response plot for the MTM that I am bringing to Burning Amp. I used it as a test bed to verify that everything in ACD-L was working correctly, so I took the opportunity to make a few changes to the previous version of the crossover.

Hi there. I'm the developer of Shairport Sync -- thanks for the mention -- and it implements a synchronisation protocol developed by Apple, based on NTP-type synchronisation. Just to be clear, it isn't actually time-of-day synchronisation; instead, the Shairport Sync device's local clock can be calibrated against the iTunes etc. source and very accurate timing estimations can be made. I set the default inaccuracy that is tolerated to 88 frames -- 2 milliseconds -- but it will comfortably go down to 33 frames on a wired connection to a Raspberry Pi. To be honest, the synchronisation algorithm could probably be improved to yield a drift of only a few frames -- less than 10 -- even on WiFi. All the same, I have never tried to drive left and right speakers from different devices. All the best in your endeavours!
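For anyone wanting to compare the frame counts above with timing figures in milliseconds, here is a small conversion sketch. It assumes a 44.1 kHz sample rate, which is consistent with 88 frames being about 2 milliseconds; the function names are only illustrative.

```python
SAMPLE_RATE_HZ = 44_100  # assumed sample rate; 88 frames is then about 2 ms


def frames_to_ms(frames: float, rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Convert a number of audio frames to milliseconds."""
    return frames * 1000.0 / rate_hz


def ms_to_frames(ms: float, rate_hz: int = SAMPLE_RATE_HZ) -> float:
    """Convert milliseconds to a (possibly fractional) number of frames."""
    return ms * rate_hz / 1000.0


if __name__ == "__main__":
    # The tolerances mentioned in the post: 88, 33 and 10 frames.
    for frames in (88, 33, 10):
        print(f"{frames} frames is about {frames_to_ms(frames):.2f} ms")
```

Under that assumption, 33 frames works out to roughly three quarters of a millisecond and 10 frames to a bit under a quarter of a millisecond.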

Hi Mike, thanks for posting! I've obviously heard good things about Shairport Sync, and it looks like it and my efforts have some things in common (e.g. NTP). NTP seems to bring all devices onto the same time base to within a small enough error margin for audio, and it's open source. I need to redo my timing measurements and post the updated info to show how this has been improved over my earlier efforts.

Now that the LADSPA based crossover design tools are working it's nice to be back to loudspeaker/crossover design for a change!

Meant to post this with the MTM frequency response, above - it's the phase alignment of the drivers in the system - please see the attached plot.

Well, after a good day's work I have managed to get LADSPA filters working in ACD. I've decided to name the new tools "ACD-L"

I'm excited to try it out. Have you uploaded it to your website? I wasn't able to find it.

ACD-L is not yet released. Hopefully it will be in a few days' time. If you want or need to use it ASAP, send me a PM. In the meantime, you can download the current version of ACD to familiarize yourself with the interface, learn how to define filters, etc. There is an extensive technical manual and a tutorial - 95% of this stuff will be the same for ACD-L.

On second thought, the ACD-L version really should be released along with a manual and tutorial that explain how to use it. It's similar to ACD in many ways, but also has new and different features and components. I have started working on these but it will take some time to complete them.

So, if you want ACD-L ASAP you can contact me for an "advance copy". Otherwise, stay tuned. I will announce when it is released.

Hello Charlie,

First I want to thank you for the filters! Great work.

I need your help, or help from others: please post your ecasound command line that sets the input as Loopback and the output to a hw soundcard.

I can't make the Loopback send sound to my hardware card. I am using VLC configured to send audio to the Loopback card.

Ecasound is running but no sound is coming out of the 6 outputs.

If I give an MP3 file as input, all is OK, but when I choose the Loopback device, no sound comes out.

If this kind of info will be in the manual, I will wait for that.

My Linux skills are way behind my Win7 skills... I have things to learn.

Regards,

Daniel
