The question is: how sensitive is the ear to deviations from phase linearity? How much deviation from flat amplitude would you tolerate to improve phase linearity?

Yes, that's the question. I don't think we know how sensitive the ear is to phase linearity... I think we are just now exercising the tools to find out, as I said in a prior post.

One thing that I keep hearing... the finer the mag and phase response I achieve, the more perceptible even slight imbalances become...

It's like a plank, which turns into a balance beam, which turns into a tightrope... as the tuning gets more linear...

The better the sound gets, which IME coincides with the mag and phase measurements getting better, the easier it is to fall off the balance wire... I dunno.

Yes, the phase shift of the harmonics could cause a change in the timbre, so minimising it is a good idea. It's impossible to know, though, how much the sound has already been changed by phase shifts through the whole preceding signal chain. And aren't harmonics originating off that primal movement...? Not to mention the further movements... all with their own mag/phase relationship to the primal movement?

I suspect it could be a reason why some mono recordings, particularly of small jazz combos in the 1950s, can sound so immediate: very little processing was done, almost straight to tape.

I think everybody also agrees that amplitude response matters more than the phase response. With FIR, we aren't faced with that choice between optimizing FR or phase. We still need to look carefully at IR, step, and phase response to see issues with the room or with time alignment in a multiway and be careful not to over-correct.

If the phase curve isn't flat because the minimum phase of the house curve to which we've equalized isn't flat, we probably want to leave it alone. If it shows a change that is indicative of a time alignment issue, we definitely want to fix the time alignment issue.

Fortunately, everyone agrees that phase matters 🙂 Certainly when 2 or more signals are added, and even for a single speaker with a single driver. The question is: how sensitive is the ear to deviations from phase linearity? How much deviation from flat amplitude would you tolerate to improve phase linearity?

Yeah, it looks that way, probably because nowadays we have tools that can manipulate phase, so many people, myself included, find that some phase schemes matter more or less 🙂.

As for how much deviation from flat amplitude one would tolerate to improve phase linearity: well, I don't know, and I may be misunderstanding, but I think I see it the way mark100 does. The closer the corrected impulse and step responses come to the synthetic textbook ones, the smoother the real minimum-phase pass band we get compared with before correction. If the correction is right, including the slopes of the roll-off curves, it pays off with above-average snappy attacks and decays and better-looking waterfalls. I'm not sure either amplitude or phase needs to be flat. I think amplitude needs to be as smooth as it is possible to correct for, including a tonality curve that suits the room, and if that amplitude correction is right, phase should smooth out too and be the minimum-phase portion that belongs to the amplitude domain.

Can we mix a floor-to-ceiling array pass band with a point-source pass band correctly, if a floor-to-ceiling array falls off at -3 dB per doubling of distance versus -6 dB for a point source?

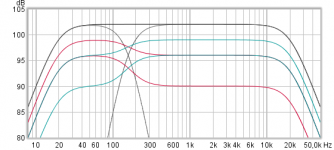

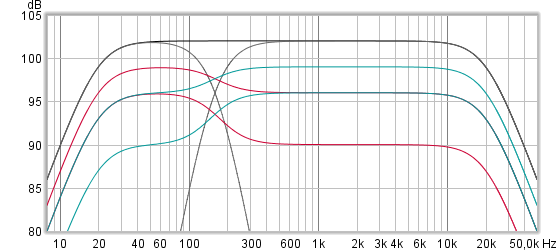

What I mean is shown in the attached plots, where two pass bands are summed at 160 Hz with 4th-order LR slopes inside a 20 Hz-20 kHz system domain. The black trace is 102 dB SPL at 1 meter, where summing is perfect. But if we use a floor-to-ceiling array for the LF pass band and sum it with a point-source HF pass band, do we get non-smooth summing at 2 meters (one doubling of distance) and again at 4 meters? The red traces show the sum with the LF part as a floor-to-ceiling array and the HF part as a point source; for the turquoise traces, the LF part is a point source and the HF part is a floor-to-ceiling array.
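A rough way to check this numerically is sketched below. It is only an idealised model, not the simulation used for the plots: it assumes a textbook 4th-order Linkwitz-Riley pair at 160 Hz, levels matched at 1 m, and exact -3 dB (array) and -6 dB (point source) falloff per doubling of distance. The function names are made up for illustration.

```python
import math

def lr4_pair(f, fc):
    """Complex (lowpass, highpass) responses of an ideal 4th-order Linkwitz-Riley crossover."""
    s = 1j * f / fc                       # normalised complex frequency
    b2 = s * s + math.sqrt(2) * s + 1     # 2nd-order Butterworth denominator
    return 1 / b2**2, s**4 / b2**2        # LR4 = two cascaded Butterworth sections

def falloff_db(r, r_ref, db_per_doubling):
    """Level change (dB) going from r_ref to r for an idealised source."""
    return -db_per_doubling * math.log2(r / r_ref)

def summed_level_db(f, fc, lf_gain_db, hf_gain_db):
    """Level (dB) of the summed pass bands after applying a gain to each band."""
    lp, hp = lr4_pair(f, fc)
    total = 10 ** (lf_gain_db / 20) * lp + 10 ** (hf_gain_db / 20) * hp
    return 20 * math.log10(abs(total))

# LF band as floor-to-ceiling array (assumed -3 dB/doubling),
# HF band as point source (assumed -6 dB/doubling), matched at 1 m.
for r in (1.0, 2.0, 4.0):
    lf = falloff_db(r, 1.0, 3.0)
    hf = falloff_db(r, 1.0, 6.0)
    levels = [round(summed_level_db(f, 160.0, lf, hf), 2) for f in (40.0, 160.0, 640.0)]
    print(f"{r} m: relative level at 40/160/640 Hz = {levels} dB")
```

In this idealised model the sum is flat at 1 m and turns into a shelf-like step across the crossover region at greater distances, which matches the kind of non-smooth summing described above.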

Regarding pitch perception as occurring in the ear rather than the brain, a topic we touched on earlier, here is some research regarding the brain's role: http://www.physiology.org/doi/pdf/10.1152/jn.00104.1999

A brief quote from the article:

"... we report psychophysical and anatomical evidence in favor of the hypothesis that fine-grained frequency resolution at the perceptual level relies on neuronal frequency selectivity in auditory cortex."

"in the auditory cortex" i.e. in the brain NOT the ear. That's what auditory cortex means!

Yes, that's the question. I don't think we know how sensitive the ear is to phase linearity... I think we are just now exercising the tools to find out, as I said in a prior post.

Most studies have concluded that it is group delay that we are sensitive to, not the phase itself. And we also know that group delay sensitivity depends on absolute SPL. So this subject is far more complex than just "can we hear phase" because you now have to ask "at what signal level?"

I would love to study this in more depth, but as Lidia and I found out, it is problematic for the researcher. When we tried to study it we ran into OSHA which called our attempt to study louder sounds a "subject risk" and the university would not sign off on what we wanted to do. If universities can't do this kind of work then who can?

There has been a lot of talk about doing the finite line array and I did describe the differences to an infinite line array earlier in this thread. Is there more to this topic that people want to understand? A finite line array between two reflective surfaces is easy enough as well, and I did describe this as well. But unlike werewolf my patience for doing math equations in ASCII characters is very low.

"in the auditory cortex" i.e. in the brain NOT the ear. That's what auditory cortex means!

That's right. Previously you told me I was wrong when I said pitch perception occurs in the brain. That's why I just said, here is the "brain's role."

If universities can't do this kind of work then who can?

Travel to a third world country, without going into too much detail about plans for maximum SPL? Obviously, there are safe exposure levels for short periods of time. We are a very litigious society.

Most studies have concluded that it is group delay that we are sensitive to, not the phase itself. And we also know that group delay sensitivity depends on absolute SPL.

Group delay is the (negative) rate of change of phase with frequency. Most people here probably understand that description of the kind of phase behavior we are most sensitive to. Maybe not all know it's referred to as group delay.
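For anyone who wants to see that definition in numbers, here is a minimal sketch that estimates group delay as -(1/2π)·dφ/df by finite difference. The two example responses (a pure delay and a first-order low-pass) and all function names are just illustrative assumptions, not anything measured in this thread.

```python
import cmath
import math

def group_delay(response, f, df=0.01):
    """Group delay in seconds at frequency f (Hz): -(1/2*pi) * dphi/df.

    The phase step is taken from the ratio of two nearby response values,
    so no phase unwrapping is needed as long as df is small.
    """
    dphi = cmath.phase(response(f + df) / response(f - df))
    return -dphi / (2 * math.pi * 2 * df)

def pure_delay(tau):
    """Frequency response of an ideal delay of tau seconds (linear phase)."""
    return lambda f: cmath.exp(-2j * math.pi * f * tau)

def first_order_lowpass(fc):
    """Frequency response of a first-order low-pass with corner frequency fc (Hz)."""
    return lambda f: 1 / (1 + 1j * f / fc)

# A pure delay has the same group delay at every frequency.
print(group_delay(pure_delay(0.002), 100.0))
# A minimum-phase filter does not: its group delay varies with frequency.
print(group_delay(first_order_lowpass(100.0), 10.0))
print(group_delay(first_order_lowpass(100.0), 100.0))
```

The linear-phase case gives a constant group delay (all frequencies arrive equally late), while the low-pass gives a frequency-dependent one, which is the kind of deviation being discussed.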

By the way, it's not too hard to hear at low SPL. One can play with a couple of EQs, one minimum phase and one linear phase. Add linear distortion of phase and FR with the minimum phase EQ, then restore FR with the linear phase EQ. The characteristic sound of the phase distortion is left, and it doesn't sound good. It's why mastering EQ is usually applied very broadly and not surgically. It's also why the built-in EQ in GarageBand is low Q. Hard to make it sound bad that way.

Most studies have concluded that it is group delay that we are sensitive to, not the phase itself. And we also know that group delay sensitivity depends on absolute SPL. So this subject is far more complex than just "can we hear phase" because you now have to ask "at what signal level?"

I would love to study this in more depth, but as Lidia and I found out, it is problematic for the researcher. When we tried to study it we ran into OSHA which called our attempt to study louder sounds a "subject risk" and the university would not sign off on what we wanted to do. If universities can't do this kind of work then who can?

Haha ...you ran into SPL wimps!

Very interesting that sensitivity to group delay varies with SPL.

My interest / application is what I call Hi-Fi PA. I use group delay as a proxy for the least amount of FIR time/latency that I know it will take to flatten phase down to a given frequency.

As is well known, live sound only allows so much latency, so I'm always looking for the most effective way to flatten phase as low as possible. It makes integrating mains to subs so much easier, as well as matching different types of subs.

But it becomes ever more apparent that it takes time to fix time, no magic fixes...

It may be complete bias because I've spent so much effort on it, but I do believe I'm enjoying ever greater dynamics and clarity with phase flattening... especially at crankin' levels...

Are you sure you can't talk them into manning up for that research ?? 😀

That's right. Previously you told me I was wrong when I said pitch perception occurs in the brain. That's why I just said, here is the "brain's role."

I remember it exactly the opposite. I agreed that "absolute" pitch happens in the brain, but not other aspects. Basic pitch is determined in the cochlea.

I think one disputed point had to do with phase-locking. You said phase locking only occurs in the ears. I said it can occur with neurons in the brain, which you said was incorrect. My understanding has been that neurons in the brain can do phase-locking type recognition too, although I don't remember where I came across it. If necessary, I can go see if I can find a reference for it.

By the way, I never said basic pitch recognition doesn't occur in the ears. It does. But there is a lot more to real-world musical pitch perception than what goes on in the ears. It was the part that goes on in the brain that I am interested in and was referring to.

How about pitch of all the notes in a chord at once played on an instrument with natural intermodulation products: beat notes, and chordal textures... all while part of a band playing?

How about highly distorted electric guitar double-stops?

EDIT: From looking over some related literature, it looks like no one really knows for sure how much of pitch processing occurs in the brain and under what circumstances. The problem is that it's always hard to determine exactly what is happening in the brain, because non-invasive tools such as MRI are kind of blunt instruments. There appear to be various theories of pitch perception, including dual models. In other words, we know more about ears than brains, but all we really have are theories about how the whole hearing system works, including pitch perception under various circumstances. The older theories focus more on what is better known, the ears, and as more is learned about brains there is increasing evidence suggesting more of a role for them than early theories allowed for. (Which, by the way, is perfectly in keeping with Kahneman: people construct theories, or causal stories, based on what they know, and tend to ignore that there may be important factors that are unknown.)

All of this information is contained in a single varying voltage in the signal path, and presumably the same in the aural nerve to the brain? What happens in between seems a little more complicated, or is it?

No, there are 30,000 nerve fibres, ok! Just ignore me haha

Markw4

I agree with the discussion, but misunderstood you at the time. There is phase locking (apparently) in both the cochlea itself and the brain. In the cochlea the phase locking causes the outer hair cells to modulate in response to a signal and in synchrony with the signal, thus causing a kind of local resonance at that point of the cochlea. This then increases the cochlear motion, thus increasing the firing rate of the inner hair cells that go to the brain. This is necessary for the enhanced dynamic range that we see for hearing.

The neural locking in the brain is a different effect that takes the broad/chaotic neural firings from the principal pitch detection of the inner hair cells on the cochlea and sort of "filters" them to allow for enhanced pitch perception. Since this is in the brain it must be learned and can result in "perfect pitch" if the training is substantial. I don't have it, which is why I cannot hear the exact pitch of the Chinese language and as such found it impossible to learn.

All of this information is contained in a single varying voltage in the signal path, and presumably the same in the aural nerve to the brain? What happens in between seems a little more complicated, or is it?

None of this is analog; it's all encoded in nerve pulses, i.e. digital.

Can we mix a floor-to-ceiling array pass band with a point-source pass band correctly, if a floor-to-ceiling array falls off at -3 dB per doubling of distance versus -6 dB for a point source?

What I mean is shown in the attached plots, where two pass bands are summed at 160 Hz with 4th-order LR slopes inside a 20 Hz-20 kHz system domain. The black trace is 102 dB SPL at 1 meter, where summing is perfect. But if we use a floor-to-ceiling array for the LF pass band and sum it with a point-source HF pass band, do we get non-smooth summing at 2 meters (one doubling of distance) and again at 4 meters? The red traces show the sum with the LF part as a floor-to-ceiling array and the HF part as a point source; for the turquoise traces, the LF part is a point source and the HF part is a floor-to-ceiling array.

At low frequencies the farfield to nearfield transition point would be closer to the line source, maybe even before reaching the listening distance. So it should be relatively easier to crossover to a point source above that.

Now if we cross a line and a point source at a frequency (450 Hz, for example) where the nearfield of the line source extends way beyond the listening distance, we should match their levels at 4 m, not 1 m.

That way, at 2.5 m the point source will be 2 dB hotter and at 6.5 m the line source will be 2 dB hotter.

This gives ±2 dB between 2.5 m and 6.5 m. Not bad given the situation.
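Those numbers can be checked with a couple of lines, assuming ideal behaviour: the point source falls exactly 6 dB and the line source (still in its nearfield) exactly 3 dB per doubling of distance, with levels matched at 4 m. The function name is just for illustration.

```python
import math

def level_difference_db(r, r_match):
    """Point-source level minus line-source level (dB) at distance r,
    when both are matched at r_match.

    Assumes ideal 1/r (point, -6 dB per doubling) and 1/sqrt(r)
    (line nearfield, -3 dB per doubling) falloff.
    """
    point = -20 * math.log10(r / r_match)
    line = -10 * math.log10(r / r_match)
    return point - line

for r in (1.0, 2.5, 4.0, 6.5):
    print(f"{r} m: point source is {level_difference_db(r, 4.0):+.2f} dB relative to the line")
```

Positive values mean the point source is hotter, negative values mean the line source is hotter. Changing `r_match` shows how the choice of matching distance moves the window of acceptable balance.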

Crossing a line source to a subwoofer or a supertweeter (say, above 14 kHz or so) is understandable. But why would anyone cross a line to a point source at 450 Hz?

To avoid another long line of midbass drivers, or is it a well-executed compromise for improving something else? Or both?

Check out the new McIntosh xrt2.1k. They use point source for 150Hz- 450Hz and line source for bandwidth below and above that.

There has been a lot of talk about doing the finite line array and I did describe the differences to an infinite line array earlier in this thread. Is there more to this topic that people want to understand? A finite line array between two reflective surfaces is easy enough as well, and I did describe this as well. But unlike werewolf my patience for doing math equations in ASCII characters is very low.

I would love to go deep enough to make at least approximate quantitative tradeoffs when choosing drivers, numbers and sizes of drivers for a finite line array.

I wouldn't ask you to translate the greek letters, integral signs and such to ascii characters; in fact I would prefer you didn't. That way the equations would look more familiar to me (stylistically) and be easier to understand.

The question becomes how to work around character set limitations on the forum. You can always take screen shots of your work in your favorite equation editor and embed the resulting JPEGs in your posts.

I'd love to learn more about finite line arrays if the math component can be explained in English (I would love to get a math-to-English translation so I can use what I learn).

I would love to go deep enough to make at least approximate quantitative tradeoffs when choosing drivers, numbers and sizes of drivers for a finite line array.

I'm not sure that a mathematical discussion of a finite line array could tell you much of that. Basically a look at finite line arrays simply brings in a discussion of directivity since the infinite line array has no directivity at all.

The first issue becomes what happens when the line source becomes finite in radius as opposed to having an infinitesimal radius. Simply put, basically the exact same thing as with a spherical source: as the frequency goes up the angular response narrows. It does so at a slightly different rate if the line is very long, but for the most part this is no different from what we are used to.

What happens if the vertical part of the array is finite as well? If we are interested only in the far field this is pretty simple. First, the falloff is back to 6 dB per doubling of distance; the horizontal directivity is the same as for any other source, but the vertical response is now different. It can be shown that the vertical polar response is the Fourier transform of the vertical velocity distribution. This is where shading comes from. If you shade the array and allow each element to have its own value, then the final vertical directivity will be the Fourier series of the array of element values.
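As a rough illustration of that Fourier-series statement, here is a minimal sketch that sums the far-field contributions of equally spaced elements with per-element weights. It assumes ideal point-like elements, a speed of sound of 343 m/s, and made-up driver counts and spacing; none of that comes from the posts above.

```python
import cmath
import math

def vertical_directivity(weights, spacing, freq, theta_deg, c=343.0):
    """Normalised far-field vertical response of a finite line of elements.

    weights   : per-element drive levels (the "shading")
    spacing   : centre-to-centre element spacing in metres
    theta_deg : vertical angle from the array's normal, in degrees
    The result is the magnitude of the Fourier series of the weights,
    evaluated at k * spacing * sin(theta), normalised to 1 on axis.
    """
    k = 2 * math.pi * freq / c
    n = len(weights)
    sin_theta = math.sin(math.radians(theta_deg))
    total = 0j
    for i, w in enumerate(weights):
        z = (i - (n - 1) / 2) * spacing        # element height about the array centre
        total += w * cmath.exp(1j * k * z * sin_theta)
    return abs(total) / sum(weights)

uniform = [1.0] * 8                                      # unshaded array
tapered = [0.3, 0.6, 0.9, 1.0, 1.0, 0.9, 0.6, 0.3]       # hypothetical shading values

for theta in (0, 15, 30, 45, 60):
    u = vertical_directivity(uniform, 0.1, 857.5, theta)
    t = vertical_directivity(tapered, 0.1, 857.5, theta)
    print(f"{theta:2d} deg: uniform {u:.3f}, tapered {t:.3f}")
```

Swapping in different weight lists shows the usual trade: tapering the ends generally widens the main vertical lobe and lowers the side lobes.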

In the near field, line arrays are very complex, with levels changing with frequency, distance, angle; every conceivable variable changes things. Basically, a smooth field of constant level it is not. As much as it would be nice to know the details of this, the fact is that it is always bad. There is no possibility of an EQ that is anywhere near globally effective.

So I think that you can see that there is nothing magic here; it's all quite familiar. If anyone wants to see the full math behind any of these claims, I can probably do that, although I will say that this is all shown in my book (including near field problems), so it would simply be a repeat of what is there in full detail.
