The mid/side EQ would be easier with some plugins I guess. I'm using Voxengo's mid-side plugin as shown earlier in this thread, like this:

But with EQ on the other leg as well. The biggest challenge was adjusting the tonality to counter the change in overall tonal balance, something Pano mentioned is necessary for the phase shuffler as well.

I do agree with your results and perception; they match my experience. I'm glad you took the test. We could have talked about it for ages, but hearing it is much more powerful.

I might even go back and give that last rePhase 2 shuffler another shot. Last time I tried, I had to convolve songs offline to hear it. It should be possible to run two convolutions, though: I need the first one for my base EQ/time alignment.
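
If the convolver only takes one IR per channel, one option (an assumption about the setup, not something tested in this thread) is to pre-convolve the base EQ/time-alignment IR with the shuffler IR, since two convolutions in series equal one convolution with the combined IR. A minimal numpy sketch, with random arrays standing in for the real IRs (`combine_irs` is a made-up name):

```python
import numpy as np

def combine_irs(base_ir, shuffler_ir):
    """Fold two FIR stages run in series into one impulse response.

    Convolution is associative: (x * a) * b == x * (a * b), so one pass
    through the combined IR equals the two convolutions back to back.
    """
    return np.convolve(base_ir, shuffler_ir)

# Toy check: random arrays stand in for the real base-EQ and shuffler IRs.
rng = np.random.default_rng(0)
base = rng.standard_normal(64)
shuffler = rng.standard_normal(32)
x = rng.standard_normal(1000)

two_stage = np.convolve(np.convolve(x, base), shuffler)
one_stage = np.convolve(x, combine_irs(base, shuffler))
print(np.allclose(two_stage, one_stage))   # True
```

Both IRs must be at the same sample rate, and for a stereo shuffler you would combine each channel's base IR with that channel's shuffler IR. The combined IR is len(a) + len(b) - 1 samples long.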

Fun stuff, isn't it?

Yeah, I figured that was how it was set up. That Voxengo plugin sure is useful. Could we see the EQ you have on the side channel vs. the mid channel?

As I listen to it more, the "rephase-2" phase-only shuffler is just incredible. It tightens up the imaging of mono (center) material so much. I don't hear much talk about mono imaging, because it usually sucks I guess. It has real depth now!

Pano, do you have the settings file for rePhase? I'd like to load it up, make some IRs at other sample rates, and experiment with it a bit from a known starting point. I may just try to recreate it from the image you posted.

Similar effects on the Infinity tower speakers (out from walls, 1.3m equilateral triangle).

At times it tightens up the center image an uncomfortable amount, e.g. hip hop with a lot of extremely dry center elements (dead vocal booth, direct drum machine...). When mixes actually rely on the fuzziness of the phantom center to give center elements greater ASW (apparent source width), it can eat up some of the magic. I still prefer it pretty much all the time, even when the extra transparency reveals "the man behind the curtain"...

The mid/side EQ I used to judge was something like this:

Side signal: boost at 400 Hz (Q = 0.5, gain = +1 dB), cut at 4000 Hz (Q = 0.4, gain = -1 dB)

Mid signal: cut at 400 Hz (Q = 0.5, gain = -1 dB), boost at 4000 Hz (Q = 0.4, gain = +1 dB)

This was used to listen to center and side vocals for tonal balance. I have since changed the overall balance, so these settings are no longer current, but it wouldn't make much sense to post them separately from the rest of what I do, which includes ambient channels where I have an opposing cut at 400 Hz in the side channels, for example.
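
For anyone who wants to try a mid/side EQ like the one above outside a plugin, here is a rough sketch. It assumes the common encoding M = (L+R)/2, S = (L-R)/2 and uses the peaking filter from Robert Bristow-Johnson's Audio EQ Cookbook; the Q convention in Voxengo's plugin or any other EQ may differ, and the 48 kHz sample rate is an arbitrary choice.

```python
import numpy as np

FS = 48000  # assumed sample rate

# (center Hz, Q, gain dB), taken from the settings listed above
SIDE_EQ = [(400, 0.5, +1.0), (4000, 0.4, -1.0)]
MID_EQ = [(400, 0.5, -1.0), (4000, 0.4, +1.0)]

def peaking(f0, q, gain_db, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalized so a[0] == 1."""
    A = 10 ** (gain_db / 40)
    w0 = 2 * np.pi * f0 / fs
    alpha = np.sin(w0) / (2 * q)
    b = np.array([1 + alpha * A, -2 * np.cos(w0), 1 - alpha * A])
    a = np.array([1 + alpha / A, -2 * np.cos(w0), 1 - alpha / A])
    return b / a[0], a / a[0]

def biquad(x, b, a):
    """Direct form I filter; a plain loop is slow but easy to follow."""
    y = np.zeros(len(x))
    x1 = x2 = y1 = y2 = 0.0
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, xn, y1, yn
        y[n] = yn
    return y

def gain_db_at(f, b, a, fs=FS):
    """Magnitude response of one biquad at frequency f, in dB."""
    zinv = np.exp(-2j * np.pi * f / fs)
    h = (b[0] + b[1] * zinv + b[2] * zinv**2) / (a[0] + a[1] * zinv + a[2] * zinv**2)
    return 20 * np.log10(abs(h))

def ms_eq(left, right, fs=FS):
    """Encode to mid/side, EQ each leg, decode back to left/right."""
    mid, side = 0.5 * (left + right), 0.5 * (left - right)
    for f0, q, g in MID_EQ:
        mid = biquad(mid, *peaking(f0, q, g, fs))
    for f0, q, g in SIDE_EQ:
        side = biquad(side, *peaking(f0, q, g, fs))
    return mid + side, mid - side
```

`gain_db_at(400, *peaking(400, 0.5, 1.0))` comes out at 1.0 dB, since a cookbook peaking filter reaches its full gain at the center frequency. A center-panned (mono) input has S = 0, so it only ever sees the mid EQ.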

I compared this to the first shuffler presented in this thread. I still need to revisit that last rePhase shuffler. What I did notice in my brief test was that it was a big improvement over the first one presented in this thread. Meanwhile, I moved on to the ambient experiments, which can create their own troubles with tonal changes. So I never gave the second shuffler a fair chance, but I haven't forgotten it and will pick it up at some point.

I'm glad you're liking the results. I suspect the mid/side EQ might not give the same benefit with more traditional speakers. The way I see it, line arrays already have a lot of tiny phase shifts due to their multiple drivers. But it can't hurt to try.

I do have some extra cuts at 3700 Hz and 7270 Hz in the mid signal (very specific to my speakers and listening distance) after some crosstalk cancellation experiments. They loosely relate to the dips created by introducing an opposing signal in each channel with a delay of 0.270 ms (the time difference between the ears at the listening spot). This improved the intelligibility of some specific material.
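
The dips mentioned above can be estimated with a simple model (an assumption, not necessarily how the poster's crosstalk cancellation was built): for center-panned material both channels carry the same signal x, so each channel ends up with x(t) - g·x(t - τ). With g = 1 this cancels completely wherever f·τ is a whole number, i.e. at f = k/τ. A quick check with τ = 0.270 ms:

```python
import numpy as np

TAU = 0.270e-3  # s, the interaural delay quoted above

def null_frequencies(tau, f_max=20000.0):
    """Frequencies where x(t) - x(t - tau) cancels completely: f = k / tau, k = 1, 2, ..."""
    k = np.arange(1, int(f_max * tau) + 1)
    return k / tau

def response_db(f, tau, g=1.0):
    """Level of center material after adding the opposing, delayed feed at gain g."""
    h = 1 - g * np.exp(-2j * np.pi * np.asarray(f) * tau)
    return 20 * np.log10(np.abs(h))

print(np.round(null_frequencies(TAU)))   # about 3704, 7407, 11111, 14815, 18519 Hz
print(response_db(1 / (2 * TAU), TAU))   # about +6 dB peak midway between nulls
```

The first null sits at about 3.7 kHz and the second at about 7.4 kHz, in the neighborhood of the cuts quoted above. With g < 1 the nulls become finite dips of 20·log10(1 - g) dB rather than complete cancellations.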

Hi Mike and thanks for trying it and posting your results. Stereo is a wonderful illusion, but it isn't perfect, especially for the phantom center. This shuffler seems a good way of fixing some of those problems.

The second of the phase-only shuffler files should, in theory, be the best. So far it has been found to be so in the very limited number of tests we have made. I'll dig to see if I can find the settings file; not sure if I saved it. I hadn't thought about adding EQ or other effects to it, that's a good idea.

As for EQing the system after the shuffle, it will probably need to be individualized. Since the comb filtering is different for different head sizes and shapes, what the shuffler does will be different for different heads. That said, a good compromise could probably be reached.
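
To put rough numbers on how head size moves the comb filtering, here is a simple free-field estimate. Assumptions: speakers at ±30°, ears treated as two points spaced d apart, and no diffraction around the head. For a phantom center, each ear hears the far speaker delayed by τ ≈ d·sin(30°)/c relative to the near one, and x(t) + x(t - τ) first cancels at f = 1/(2τ), which at ±30° works out to c/d.

```python
import math

C = 343.0  # speed of sound in m/s, roughly room temperature

def interaural_delay(ear_spacing_m, speaker_angle_deg=30.0, c=C):
    """Far-field path difference between the two speakers at one ear, as a delay in seconds."""
    return ear_spacing_m * math.sin(math.radians(speaker_angle_deg)) / c

def first_null_hz(ear_spacing_m, speaker_angle_deg=30.0, c=C):
    """First cancellation of x(t) + x(t - tau): f = 1 / (2 * tau)."""
    return 1.0 / (2.0 * interaural_delay(ear_spacing_m, speaker_angle_deg, c))

for d in (0.15, 0.18, 0.21):
    tau_ms = interaural_delay(d) * 1e3
    print(f"ear spacing {d:.2f} m: tau {tau_ms:.3f} ms, first null {first_null_hz(d):.0f} Hz")
```

With a real head in the way, the far speaker is attenuated at high frequencies, so real dips are shallower than these ideal nulls, but the trend holds: a smaller head pushes the first dip up in frequency.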

In the case of a 3 speaker arrangement (like I'm building), whatever else I do with delays, I would want the center speaker acoustic signal to arrive first, so precedence effect would put it out front of the rest of the band.

What I was getting at in a previous comment was that using a very short delay (1-20 ms) to decorrelate the center from the L&R side speakers would also create comb filter effects, due to crosstalk in the extraction process (L+R for the center and L-XR/R-XL for the sides, with X continuously variable so it can be better optimized). But if, instead of a single delay, several delays in that range (1-20 ms) were used, with a 1.4 or 1.62 ratio to each other and to the other channel(s), then maybe the cancellations would largely get filled in, with minimal doubling up of cancellations and/or their integer multiples. Much like listening room acoustics, where the worst case is a single reflection, and many reflections largely fill in each other's cancellations, leaving you with something listenable.
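
The "many reflections fill in each other's nulls" idea can be tried numerically. The sketch below compares one delayed copy against the same total level spread over three delays in a 1.62 ratio (1, 1.62 and about 2.62 ms). It is a simplified, assumed model (direct sound plus equal-weight delayed copies, no extraction matrix), so it only illustrates the null-filling, not the full 3-channel scheme.

```python
import numpy as np

def comb_response_db(freqs, delays):
    """Magnitude of x(t) + mean_i x(t - delays[i]), in dB.

    One delayed copy gives a classic comb with true nulls; spreading the
    same total level over several delays is the idea being tested.
    """
    taps = np.exp(-2j * np.pi * np.outer(freqs, delays))   # shape (n_freqs, n_delays)
    h = 1 + taps.mean(axis=1)
    return 20 * np.log10(np.maximum(np.abs(h), 1e-12))      # floor avoids log(0)

def ratio_delays(first_s, ratio, count):
    """Delays first, first*ratio, first*ratio**2, ... (e.g. ratio 1.4 or 1.62)."""
    return first_s * ratio ** np.arange(count)

freqs = np.arange(20, 20001, dtype=float)   # 1 Hz grid
single = comb_response_db(freqs, ratio_delays(1e-3, 1.62, 1))
multi = comb_response_db(freqs, ratio_delays(1e-3, 1.62, 3))
print(f"deepest dip, one 1 ms delay:     {single.min():.1f} dB")
print(f"deepest dip, 1 / 1.62 / 2.62 ms: {multi.min():.1f} dB")
```

With a single delay the dips are true nulls (the printout hits the numerical floor), while with the spread delays the deepest dip stays finite. Swapping 1.62 for 1.4 in `ratio_delays` lets you compare the two ratios mentioned above.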

This technique might work with only two speakers too, to make the phantom center image more stable when you're a bit off axis. You'd pull the L+R out of the L and R (with the stereo matrix and L+R mentioned above), add delays strategically to each of the 3 signals as described above, and then recombine them somehow... Or you might just add the multiple delays to the center signal, and only a single, slightly longer delay (~16 ms) to the L and R signals, so the center image would stay out front (precedence effect). 'Course, I could be wrong... (?) My understanding of decorrelation is that it creates more of a sense of separateness from other signals. I haven't found a detailed explanation of how it's done in the real world (in stereo-output digital reverbs, for example), but I'm guessing it's about using multiple delays too short to perceive, yet long enough to do the job (?)...
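
A rough sketch of the second variant above, only to make the signal flow concrete. Everything here is an assumption for illustration: the matrix (center = (L+R)/2, sides = L - x·R and R - x·L), the three center delays, the 16 ms side delay, and all function names. The center's direct sound is left undelayed, so it is always the first arrival and should win the precedence effect. It outputs three feeds, as for a 3-speaker layout; folding them back into two channels would be a further step.

```python
import numpy as np

FS = 48000  # assumed sample rate

def delay(sig, seconds, fs=FS):
    """Pure delay by a whole number of samples, keeping the original length."""
    n = int(round(seconds * fs))
    return np.concatenate([np.zeros(n), sig])[: len(sig)]

def extract(left, right, x=1.0):
    """Center = (L + R) / 2; sides = L - x*R and R - x*L, with x the adjustable factor."""
    return 0.5 * (left + right), left - x * right, right - x * left

def three_channel(left, right, x=1.0,
                  center_delays=(1e-3, 1.62e-3, 2.62e-3), side_delay=16e-3):
    """Hypothetical signal flow: the center keeps its direct sound plus delayed copies,
    the sides get one longer delay so the center is always the first arrival."""
    center, side_l, side_r = extract(left, right, x)
    copies = sum(delay(center, d) for d in center_delays) / len(center_delays)
    center_out = 0.5 * (center + copies)
    return delay(side_l, side_delay), center_out, delay(side_r, side_delay)
```

With x = 1, a mono input produces silent side feeds, and a left-only impulse reaches the left side feed 768 samples (16 ms at 48 kHz) after the center's first arrival.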

.....Pano do you have the settings file for Rephase? .....

.....I'll dig to see if I can find the settings file, not sure if I saved it......

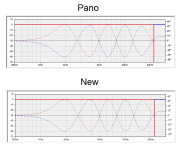

The rePhase settings file is attached below. I created it visually by comparing against Pano's posted graph. After that I tried importing the WAV file into REW, exporting it as an FRD file and importing that into rePhase to flatten the phase and find the numbers that way, but the result didn't get any closer than my first try.

Nice, thanks. I ended up doing the Wav -> REW -> txt -> rePhase method myself, but couldn't figure out how to get 2 channels showing at once in rePhase...

How did Pano come up with those "shuffle frequencies" anyway? They seem related to the ear-spacing comb filter frequencies?

To cheat and get rePhase to show two channels at once: load the settings file and hit the "Generate" button, which turns the blue trace in the plot red and stores it in memory. Then, on the "General" tab, invert time, and you will see the new settings as a blue trace together with the stored red trace.

One option is to generate a separate mono or stereo IR WAV file for the non-inverted and the inverted correction, one at a time, and then have a JRiver config file point to the left and right IR WAV files. How Pano created a single stereo IR WAV file holding the non-inverted and inverted corrections for left and right, I don't know; I can only guess that Audacity might be able to combine them.

.....How did Pano come up with those "shuffle frequencies" anyway? They seem related to the ear-spacing comb filter frequencies?

I'm blank on that subject :) but I can easily hear that his IR WAV file works.

While obviously I didn't play with a 3-speaker setup close together, I did play with delaying the sides relative to the center. What I ended up with were strange effects and 3 sources: left, right and center... no real imaging in between. It was fun to play with, but it didn't impress 🙂. I only tried very short delays.

I can see crosstalk cancellation working with such a setup, though. I never got it to work properly with a stereo pair of speakers, at least not for the complete stereo field. I tried several variants of available plugins and convolution files. Mess with the stereo mix too much and you end up with only 3 major sources of sound.

I'd like to try that last shuffler again with a slight EQ at ~400 Hz to the sides.

I'm in no hurry though as I do enjoy what I've got right now. The ambient addition was one of my most successful experiments, besides cleaning up the early waterfall plots with various measures. The biggest of those measures being time coherency.

BYRTT, did you check in a loop how this shuffle looks in an early waterfall taken from a perfect signal? It's a tiny wiggle within 30 degrees, but it alternates in timing between left and right.

Thanks BYRTT, I was not able to find my rePhase file. Looks like you got a perfect match.

The phase alternations were made to mimic the phase of the original multi-tap shuffler. Done by hand and by eye.

I exported a left and a right wave in 32 bit format, then combined them into a single 24 bit stereo file in a wave editor. Audacity will work for combining. You can leave them in 32 bit, it works fine in the JRiver convolver.
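
For anyone who prefers a script to a wave editor, here is a sketch of that combining step using only Python's standard library. Assumptions: both IRs are already float lists or arrays in the range -1..1 at the same sample rate, and 24-bit integer PCM is the target. The stdlib `wave` module only writes integer PCM, so keeping 32-bit float needs another tool, such as a wave editor. The function names are made up for this example.

```python
import struct
import wave

FULL_SCALE = 2 ** 23  # 24-bit signed PCM spans -8388608 .. 8388607

def to_int24(sample):
    """Scale a float to 24-bit, clip, and pack as 3 little-endian bytes."""
    v = max(-FULL_SCALE, min(FULL_SCALE - 1, int(round(sample * FULL_SCALE))))
    return struct.pack("<i", v)[:3]   # low 3 bytes of a 32-bit int = 24-bit two's complement

def write_stereo_ir(target, left_ir, right_ir, fs=48000):
    """Interleave two equal-length IRs into one 2-channel, 24-bit WAV (channel 1 = left)."""
    if len(left_ir) != len(right_ir):
        raise ValueError("pad the shorter IR with zeros so both have the same length")
    frames = bytearray()
    for l, r in zip(left_ir, right_ir):
        frames += to_int24(l) + to_int24(r)
    with wave.open(target, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(3)   # 3 bytes per sample = 24-bit
        w.setframerate(fs)
        w.writeframes(bytes(frames))
```

Usage would be something like `write_stereo_ir("shuffler_48k.wav", left_ir, right_ir, fs=48000)`, with a hypothetical filename. Samples outside -1..1 are clipped, so scale the IRs first if their peaks exceed full scale.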

I am away on business for a couple of days; I look forward to seeing what you guys get up to.

Pano, BYRTT, with your authorization I'd like to implement these settings as phase EQ presets (L and R versions) in rephase's next release.

Dear Abby,

I am mastering a solo piano recording, predominantly using Royer R121 ribbons in Blumlein configuration (plus another spaced pair over the hammers, mixed lower). First I did some gentle analog tone shaping (by ear of course) at a fancy mastering facility with a Sontec EQ, Titan compressor, Burl tube ADC etc. to get that $$ sound, haha.

Back in the digital domain, following Gerzon's advice, I applied a 2 dB low-frequency boost to the side channel to compensate for the widening polar pattern of the mics at those frequencies, using a linear-phase EQ. This made a big difference! I also shelved down the low frequencies (both channels) to compensate for the proximity effect of the microphones, again linear phase. For both of these corrections I referenced the polar and proximity plots from the microphone manufacturer and adjusted by ear.

Now herein lies the problem: the rephase shuffler on my playback system sounds so good that I am tempted to apply it while mastering, to gain the benefits on most playback systems.

But then, while listening through the filter on MY playback system, I get the effect applied twice, which thins out the stereo image too much. This is what troubles me about the implementation of novel filters on playback. Not sure what to do...

Sincerely,

Addicted to phase shuffling
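
As an illustration of the side-channel low-frequency boost described in the letter, here is a sketch of a linear-phase version made by frequency sampling: the magnitude of an RBJ Audio EQ Cookbook low shelf is turned into a symmetric FIR and applied to S only. The 200 Hz corner, the 4095-tap length and the 48 kHz rate are assumptions for the example, not the settings used on the recording.

```python
import numpy as np

def low_shelf_mag(f, f0, gain_db, fs):
    """Magnitude of an RBJ Audio EQ Cookbook low shelf (slope S = 1) at frequencies f."""
    A = 10 ** (gain_db / 40)
    w0 = 2 * np.pi * f0 / fs
    cw = np.cos(w0)
    alpha = np.sin(w0) / 2 * np.sqrt(2)   # cookbook alpha with S = 1
    sa = 2 * np.sqrt(A) * alpha
    b = A * np.array([(A + 1) - (A - 1) * cw + sa,
                      2 * ((A - 1) - (A + 1) * cw),
                      (A + 1) - (A - 1) * cw - sa])
    a = np.array([(A + 1) + (A - 1) * cw + sa,
                  -2 * ((A - 1) + (A + 1) * cw),
                  (A + 1) + (A - 1) * cw - sa])
    zinv = np.exp(-2j * np.pi * np.asarray(f) / fs)
    # np.polyval wants highest power first, so reverse to get b0 + b1*z^-1 + b2*z^-2
    return np.abs(np.polyval(b[::-1], zinv) / np.polyval(a[::-1], zinv))

def linear_phase_fir(mag, n_taps):
    """Frequency sampling: zero-phase target magnitude -> symmetric (linear-phase) FIR.
    n_taps should be odd so the impulse centers on a whole sample."""
    h = np.fft.irfft(mag, n=n_taps)        # real, circularly even impulse response
    h = np.roll(h, n_taps // 2)             # center it: latency of n_taps // 2 samples
    return h * np.hanning(n_taps)           # taper the ends to limit ripple

def side_lf_boost(left, right, fs=48000, f0=200.0, gain_db=2.0, n_taps=4095):
    """Low-shelf boost on S only; output trimmed so it stays time-aligned with M."""
    mag = low_shelf_mag(np.fft.rfftfreq(n_taps, d=1 / fs), f0, gain_db, fs)
    fir = linear_phase_fir(mag, n_taps)
    mid, side = 0.5 * (left + right), 0.5 * (left - right)
    start = n_taps // 2                     # drop the FIR's latency
    side = np.convolve(side, fir)[start:start + len(side)]
    return mid + side, mid - side
```

Because only S is filtered, a mono (center-only) signal passes through untouched, and the symmetric FIR keeps the boosted side content time-aligned with the mid.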

pos,

You're very welcome to use the settings file from post 247. Credit for the settings goes to Pano's work and what he shared in this thread, plus thanks for the existence of the rePhase tool.

Dear Addicted to phase shuffling,

I would advise against using any kind of shuffler in the mastering process, as most setups we've come across suffer from a lot of early reflections and as a result do not get to experience the benefits that this type of shuffling brings.

It's better to reserve this type of shuffling for the reproduction stage, where serious listeners such as yourself know how to extract the most out of their system.

Sincerely,

Abby
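
A crude way to see why applying the shuffler twice (once in mastering, once on playback) matters, under loudly hypothetical assumptions: treat the shuffler as a phase-only pair where, at some frequency, L is shifted by +φ and R by -φ, with φ = 30° (the "within 30 degree" wiggle mentioned in this thread, used here as a worst case). Running it twice doubles φ, and the electrical sum of a centered source, (L+R)/2, drops from cos φ to cos 2φ.

```python
import numpy as np

def center_sum_db(phi_deg, passes=1):
    """Level of (L + R) / 2 for a mono source after `passes` runs of a hypothetical
    mirrored phase-only pair (per pass: L shifted by +phi, R by -phi)."""
    phi = np.deg2rad(phi_deg) * passes
    left, right = np.exp(1j * phi), np.exp(-1j * phi)
    return 20 * np.log10(np.abs((left + right) / 2))

print(f"applied once:  {center_sum_db(30, 1):.2f} dB")   # about -1.25 dB
print(f"applied twice: {center_sum_db(30, 2):.2f} dB")   # about -6.02 dB
```

This ignores the acoustic path and the ear entirely, so it says nothing definitive about how the image will sound; it only shows that the second pass more than doubles the effect in this model, from about -1.25 dB to about -6 dB.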

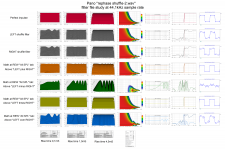

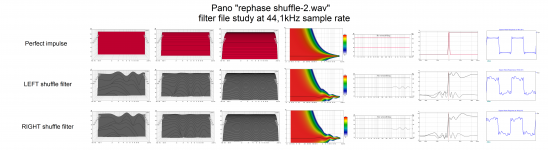

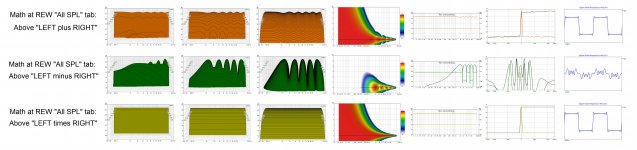

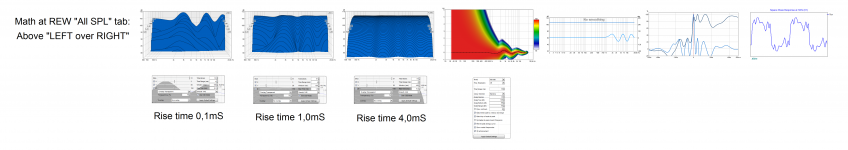

I thought I could spare myself the time of running a live sound card S/PDIF I/O loop, which gives exactly the perfect signal you describe (unlike an analog sound card I/O loop): since we have Pano's IR WAV file here, I think it's enough to let REW's math on the "All SPL" tab do its magic :)

The most interesting result, I think, is row five, which shows that the filter "LEFT times RIGHT" sums perfectly if done in the electrical domain, with no speaker spacing as in real life, and probably also at the listening position in a symmetric listening environment. In a real-world acoustic environment, though, the other three ("LEFT plus RIGHT", "LEFT minus RIGHT", "LEFT over RIGHT") may sneak in a bit when you're not at a symmetrical listening position.
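
These trace-arithmetic results can be reproduced in miniature with an idealized model (an assumption, not Pano's actual filter): if the left IR has unit magnitude and phase +φ(f) and the right IR has unit magnitude and phase -φ(f), then LEFT times RIGHT cancels the phase exactly, while LEFT plus RIGHT and LEFT over RIGHT keep it. Here φ sweeps ±30°:

```python
import numpy as np

# Hypothetical mirrored pair: unit magnitude, phase +phi on the left, -phi on the right.
phi = np.deg2rad(np.linspace(-30, 30, 121))
left, right = np.exp(1j * phi), np.exp(-1j * phi)

product = left * right          # "LEFT times RIGHT"
avg_sum = (left + right) / 2    # "LEFT plus RIGHT", scaled so phi = 0 gives 0 dB
ratio = left / right            # "LEFT over RIGHT"

print(np.allclose(product, 1.0))                  # True: flat, zero phase
print(20 * np.log10(np.abs(avg_sum).min()))       # about -1.25 dB worst case
print(np.rad2deg(np.angle(ratio)).max())          # about 60 degrees L/R difference
```

Under this model the product is flat with zero phase, the sum dips by at most about 1.25 dB, and the interchannel phase difference reaches 60°, which fits the observation that the product looks perfect in the electrical domain while the other combinations depend on where you sit.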

The graph file size grew, so picture 1 shows the same as pictures 2, 3 and 4; the latter are meant for reading the small details.

APL_TDA is not bad as a kind of alternative waterfall when the time span is set from 0 to 5 ms: it visually shows both attack and decay ringing, whereas REW's "Waterfall" tab shows only the decay and not what happens before the first slice at time zero, unless you look at the "GD" or "Spectrogram" tab. Below is what APL_TDA makes of the IR WAV files from post 258.

.....Most interesting result think is row five that show filter "LEFT times RIGHT".....

Quoting myself because of a blunder in the text of post 258 😱: the words "row five" are nonsense and should read "row six".