It misses Moving Iron cartridges because it hasn't "heard" much about them, even though they may be the superior cartridge type.

Cheers!


The engineer who designed this phono stage clearly knows a thing or two about audio, and likely built it for their own rig before sharing it with others. It is interesting that the magic formula isn't explicitly documented. There are simply things that feedback can and cannot do, and the choice of whether or not to use it, or to use a voicing amplifier like Hegel's Sound Engine 2, is a matter of taste and perception.

The Pearl 2 phono stage has no feedback in its first input stage, whereas many other phono stages do, and they make the mistake of also using that feedback as the RIAA filter at the same time. (The Pearl 3 is a little different, and Wayne also seems to know the formula; however, the preamplifier on page 12 can easily take on the Pearl 3.) Voicing amplifiers cannot be used in phono stages because of the added complexity and noise they introduce.

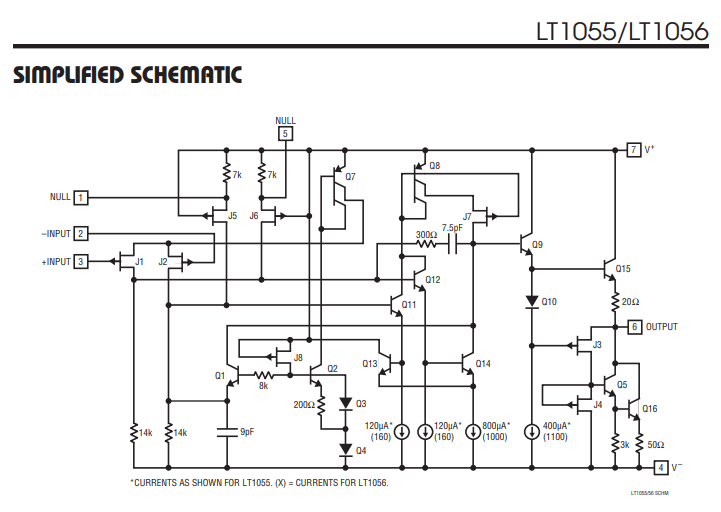

For the second gain stage, you can either use the LT1055/56 or a discrete op-amp like the second stage of the Pearl 2. This op-amp could also be very good for all line-level processing.

The sound signature of your phono stage will change considerably depending on whether you choose to use the first stage of the Pearl 2 or the LT1115. I think using a signal relay to switch between the two types of input stages could be useful. However, if you don't want to be constrained by proprietary chips, you can go discrete with SMT.

The first input stage can make a big difference in the sound of a phono stage.

Feel free to critique and correct any claim.



> ...feedback at the first input stage, while many other phono stages do, but make the mistake of also using it as a RIAA filter at the same time

Using a phono input stage with overall RIAA NFB has no disadvantages in terms of either overload capacity or noise, and it has the added advantage of no Miller capacitance.

You may well be right, and in some scenarios it may just be a matter of taste, especially where there is no measurable difference.

More GPT-3.5 fun. Just how far it can go and how well it can do it, we shall see, but computers do make stuff more fun.

The STM32 microcontroller is the central processing unit of the system and is responsible for controlling all of the other components. The motorized potentiometer or PGA2311 is used to control the volume of the system. The display provides information to the user, such as the current volume level and selected source. The IR receiver and transmitter are used to communicate with the remote control. The ESP32 Wi-Fi module is used to provide streaming connectivity. The ES9038 DAC converts the digital audio signal from the microcontroller to an analog signal that can be played through the speakers. Flash memory is used to store additional data, such as firmware or audio files. The rotary encoder is used to select different sources or adjust the volume. The power supply provides power to all of the components in the system.

Microcontroller:

STM32 series, e.g., STM32F4 or STM32F7, for its versatility and performance.

Volume Control:

Motorized Potentiometer: ALPS RK168 or similar.

Alternatively, digital volume control IC: PGA2311.
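If the PGA2311 route is taken, the firmware has to turn a requested gain into the chip's 8-bit gain code. A minimal sketch, assuming the gain law as I understand it from TI's PGA2311 datasheet, Gain (dB) = 31.5 - 0.5 × (255 - N) for N = 1...255, with N = 0 meaning mute (verify against the datasheet before relying on it). The function names are hypothetical, and the SPI write that actually sends the code to the chip is left out.

```python
# Hypothetical helpers for the PGA2311 gain code (SPI transfer not shown).
# Assumed gain law: gain_dB = 31.5 - 0.5 * (255 - N), N = 1..255; N = 0 mutes.
MAX_GAIN_DB = 31.5
MIN_GAIN_DB = -95.5   # value of the formula at N = 1
STEP_DB = 0.5


def pga2311_code(gain_db: float) -> int:
    """Return the 8-bit gain code nearest to gain_db (0 = mute)."""
    if gain_db < MIN_GAIN_DB:
        return 0                          # below the range: mute
    gain_db = min(gain_db, MAX_GAIN_DB)   # clamp to the top of the range
    n = round(255 - (MAX_GAIN_DB - gain_db) / STEP_DB)
    return max(1, min(255, n))


def pga2311_gain_db(code: int):
    """Inverse mapping; returns None for the mute code."""
    if code == 0:
        return None
    return MAX_GAIN_DB - STEP_DB * (255 - code)
```

With this law, unity gain (0 dB) lands on code 192, which is a handy sanity check when first bringing the chip up.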

Display:

OLED or TFT display based on project requirements.

Example: SSD1306 for OLED.

Remote Control:

Infrared (IR) receiver module: TSOP4838 or similar.

IR remote control transmitter.
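The list above does not say which IR protocol the remote uses. Assuming the widely used NEC format (a 9 ms leader mark and 4.5 ms space, then 32 bits sent LSB first as address, inverted address, command, inverted command, each bit being a 562.5 µs mark followed by a 562.5 µs space for 0 or a 1687.5 µs space for 1), the decoding logic can be sketched as below. It is written in Python for readability and assumes the mark/space widths have already been measured from the receiver output (for example with a timer capture); all names are hypothetical, and extended-NEC remotes that send a 16-bit address without an inverted copy would need a small change.

```python
# Sketch of an NEC IR frame decoder (protocol choice is an assumption).
# Input: list of (mark_us, space_us) pairs measured from the IR receiver.
LEADER_MARK_US = 9000
LEADER_SPACE_US = 4500
BIT_MARK_US = 562
ZERO_SPACE_US = 562
ONE_SPACE_US = 1687
TOLERANCE = 0.25  # accept +/-25 % timing error


def _near(value, target):
    return abs(value - target) <= target * TOLERANCE


def decode_nec(pulses):
    """Return (address, command), or None if the frame is invalid."""
    if len(pulses) < 33:
        return None
    lead_mark, lead_space = pulses[0]
    if not (_near(lead_mark, LEADER_MARK_US) and _near(lead_space, LEADER_SPACE_US)):
        return None
    value = 0
    for i, (mark, space) in enumerate(pulses[1:33]):
        if not _near(mark, BIT_MARK_US):
            return None
        if _near(space, ONE_SPACE_US):
            value |= 1 << i               # bits arrive LSB first
        elif not _near(space, ZERO_SPACE_US):
            return None
    address = value & 0xFF
    address_inv = (value >> 8) & 0xFF
    command = (value >> 16) & 0xFF
    command_inv = (value >> 24) & 0xFF
    # The inverted copies let the receiver reject corrupted frames.
    if address ^ address_inv != 0xFF or command ^ command_inv != 0xFF:
        return None
    return address, command


def encode_nec(address, command):
    """Build an ideal pulse list (handy for testing the decoder)."""
    frame = (address | ((address ^ 0xFF) << 8)
             | (command << 16) | ((command ^ 0xFF) << 24))
    pulses = [(LEADER_MARK_US, LEADER_SPACE_US)]
    for i in range(32):
        bit = (frame >> i) & 1
        pulses.append((BIT_MARK_US, ONE_SPACE_US if bit else ZERO_SPACE_US))
    return pulses
```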

Streaming:

For Wi-Fi connectivity, consider an ESP32 module, e.g., the ESP32-WROOM-32.

Audio DAC:

ES9038 or similar high-quality DAC.

Example: ESS Sabre ES9038PRO.

Memory:

Depending on your application, you may need Flash memory.

Example: an SPI NOR flash such as the W25Q64 series.

Rotary Encoder:

ALPS rotary encoder or similar.

Example: EC11 series.
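Reading the encoder in firmware usually comes down to a quadrature state table. The sketch below is in Python for readability (on the STM32 it would typically be a few lines of C in a pin-change interrupt). It assumes A and B are read as 0/1 levels and that one detent equals four transitions, which is common but varies between EC11 variants; the class and table names are hypothetical, and the direction convention is arbitrary (swap A and B if it counts backwards).

```python
# Quadrature decoding via a 16-entry transition table.
# State = (A << 1) | B. Index = (previous_state << 2) | new_state.
# Valid single steps give +1 or -1; no change or an invalid jump
# (e.g. from contact bounce skipping a state) gives 0.
_QUAD_TABLE = [0, +1, -1, 0,
               -1, 0, 0, +1,
               +1, 0, 0, -1,
               0, -1, +1, 0]


class QuadratureDecoder:
    def __init__(self, a=0, b=0, steps_per_detent=4):
        self.state = (a << 1) | b
        self.count = 0                        # raw quarter-steps
        self.steps_per_detent = steps_per_detent

    def update(self, a, b):
        """Call on every change of A or B (or from a periodic poll)."""
        new_state = (a << 1) | b
        self.count += _QUAD_TABLE[(self.state << 2) | new_state]
        self.state = new_state

    @property
    def detents(self):
        """Whole detents moved since start (truncated toward zero)."""
        return int(self.count / self.steps_per_detent)
```

One clockwise detent in this convention is the Gray sequence 00 → 01 → 11 → 10 → 00, which adds 4 to the raw count.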

Firmware:

Depending on the microcontroller, you can use STM32CubeIDE for STM32 or PlatformIO for ESP32.

Implement communication protocols like I2C, SPI, UART for various peripherals.

App Control:

For app control, you might consider using Bluetooth or Wi-Fi.

Example: For Wi-Fi, you can use the ESP32's capabilities with a mobile app.

Bill of Materials (BOM):

STM32 Microcontroller

Motorized Potentiometer or PGA2311

Display (OLED or TFT)

IR Receiver and Transmitter

ESP32 Wi-Fi Module

ES9038 or similar DAC

Flash Memory (if needed)

Rotary Encoder

Various passive components (resistors, capacitors)

Power supply components (voltage regulators, etc.)

Assembly Instructions:

Microcontroller and Peripherals:

Connect the STM32 to the peripherals using appropriate communication protocols (I2C, SPI, UART).

Volume Control:

Connect the motorized potentiometer or PGA2311 according to the datasheets.

Display:

Connect the display to the microcontroller using the appropriate interface.

Remote Control:

Connect the IR receiver and transmitter to the microcontroller.

Streaming (Wi-Fi):

Integrate the ESP32 module and establish communication with the microcontroller.

Audio DAC:

Connect the ES9038 or similar DAC to the microcontroller.

Memory:

Connect the flash memory if additional storage is needed.

Rotary Encoder:

Connect the rotary encoder to the microcontroller.

Power Supply:

Design and implement a suitable power supply for the entire system.

Firmware:

Develop firmware using the chosen IDE (STM32CubeIDE or PlatformIO).

Implement drivers for each peripheral.

Write code for volume control, display management, remote control, and streaming.

Ensure compatibility with mobile apps for remote control.

App Control:

Develop a mobile app for iOS/Android.

Use Wi-Fi or Bluetooth protocols to communicate with the receiver/amplifier.

Implement features like volume control, source selection, etc., in the app.

Please note that this is a simplified overview, and the actual implementation would require detailed knowledge of the selected components and programming languages. Additionally, thorough testing and compliance with industry standards are essential for a successful consumer electronics product.


> For the second gain stage, you can either use the LT1055/56

The OPA192 is much better (better Voffset, lower noise and THD, lower price).

> Motorized Potentiometer or PGA2311

The MUSES72323 is much better.

There are very good implementations of the above on the forum as well as online. I just came across this: https://www.diyaudio.com/community/threads/digital-control-of-attenuation-repository-for-diy.404377/ There was a time when Raspberry Pi streamers were all the rage, and they still are. The optimizations I did on Arduino were in timer utilization, bitwise operations and register shifting, as well as in layout and grounding. Some volume control implementations here use precision resistor ladders switched by small-signal relays; DIYers and audiophiles with an engineering inclination do know how to have fun.

Title: Navigating the Impact of Jitter in Audio: Unraveling the Digital Symphony

Introduction

In the realm of digital audio, the subtle yet significant issue of jitter has captured the attention of audiophiles, engineers, and enthusiasts alike. Jitter, defined as timing variations in the arrival of digital audio bits, has the potential to influence the perceived quality of reproduced sound. Its impact on audio playback quality is a subject of ongoing debate and scrutiny in the audiophile community.

Defining Jitter

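Jitter refers to deviations from the precise timing of a periodic signal. In digital audio it manifests as timing variations between individual bits or blocks of bits in a digital audio stream. What ultimately matters is jitter on the clock that times the DAC's conversion: a sample converted slightly early or late produces an output error roughly equal to the signal's slope multiplied by the timing error. The consequences of jitter are most notable in high-resolution audio systems, where the precision of timing becomes paramount.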
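The slope-times-timing-error relationship can be checked numerically. This sketch converts a full-scale sine at jittered instants and compares the worst error with the bound 2πfA·Δt. The uniform random jitter model and the function names are assumptions for illustration; real clock jitter has its own spectrum, which changes what the error looks like but not this bound.

```python
import math
import random


def peak_jitter_error(freq_hz, sample_rate_hz, peak_jitter_s, n_samples=2000, seed=1):
    """Worst error when a unit-amplitude sine is converted at jittered instants.

    Timing errors are drawn uniformly from [-peak_jitter_s, +peak_jitter_s]
    (an assumed model, chosen only to keep the example simple).
    """
    rng = random.Random(seed)
    worst = 0.0
    for k in range(n_samples):
        t = k / sample_rate_hz                           # ideal conversion instant
        dt = rng.uniform(-peak_jitter_s, peak_jitter_s)  # clock edge early or late
        ideal = math.sin(2 * math.pi * freq_hz * t)
        actual = math.sin(2 * math.pi * freq_hz * (t + dt))
        worst = max(worst, abs(actual - ideal))
    return worst


def jitter_error_bound(freq_hz, peak_jitter_s):
    """A unit sine's slope never exceeds 2*pi*f, so the error is at most 2*pi*f*dt."""
    return 2 * math.pi * freq_hz * peak_jitter_s


bound = jitter_error_bound(10_000, 1e-9)
measured = peak_jitter_error(10_000, 44_100, 1e-9)
print(f"bound {20 * math.log10(bound):.1f} dBFS, measured {20 * math.log10(measured):.1f} dBFS")
```

For a 10 kHz full-scale tone and 1 ns of peak jitter the bound is about 6.3e-5 of full scale, roughly -84 dBFS; doubling either the frequency or the jitter raises it by about 6 dB.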

PCM and DSD Playback

In audio protocols like I2S and SPI, jitter can manifest differently for PCM (Pulse Code Modulation) and DSD (Direct Stream Digital) playback. In PCM systems, timing errors can cause distortion in the reconstructed analog signal, thus affecting audio quality. DSD, being a 1-bit audio format, is generally less susceptible to jitter, but significant levels can still impact playback accuracy.

Audibility of Jitter

The audibility of jitter is a nuanced aspect that depends on various factors, including the resolution of the audio system, the sensitivity of the playback equipment, the individual listener's sensitivity, the listening environment, and the type of audio signal being transmitted.

Mitigation Strategies

Audio engineers employ various strategies to mitigate the effects of jitter, such as dedicated clocking mechanisms, buffering, and re-clocking devices. These techniques are used in high-end audio equipment to ensure precise timing and minimize the impact of jitter on audio quality.

Resistor Ladder DACs and Subjectivity

Certain DAC architectures, such as resistor ladder DACs (R-2R ladder DACs), have been associated with delivering a more natural and musical sound. However, the choice between DAC types is highly subjective, and factors such as implementation quality and system compatibility play crucial roles in shaping the overall audio experience.

Conclusion

While the impact of jitter on audio quality is a topic of ongoing exploration and discussion, it is clear that minimizing jitter is a priority in high-quality audio systems. The pursuit of pristine timing and precision continues to drive advancements in audio technology, ensuring that the digital symphony reaches the ears of listeners with fidelity and accuracy. As audiophiles navigate the intricate world of digital audio, the quest for an optimal balance between technical precision and subjective preference remains at the forefront of the audio experience.

more cohesive output

Introduction:

When exploring the technical journey of streaming audio, it's crucial to consider how different protocols and interfaces handle challenges like jitter. Let's examine the role of Bluetooth, WiFi, Ethernet, and SPDIF in the context of streaming audio and address jitter concerns, bearing in mind that when pops and clicks are absent, the audibility of jitter may be low.

Establishing Internet Connection:

When you open a streaming app on your device, it connects to the streaming service over the internet, typically using HTTPS.

Selecting a Song:

Upon selecting a song, the app sends a request to the streaming service's servers to retrieve information about the chosen track.

Receiving Metadata:

Metadata about the selected song, including title, artist, and a unique identifier, is received from the streaming service's servers.

Requesting Audio Data:

Another request is sent to retrieve the compressed audio data for the selected song, typically using streaming protocols like HLS or DASH.

Audio Compression and Format:

The server delivers compressed audio data in formats like Ogg Vorbis, which is then transmitted to the app in chunks for smooth streaming.

Local Buffering:

The app buffers a small amount of audio data locally to ensure uninterrupted playback, especially during network fluctuations.
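Why a local buffer smooths out network fluctuations can be shown with a toy FIFO: data arrives in irregular bursts, while the playback side reads at a steady rate. A minimal sketch, assuming one sample per "tick" and a made-up bursty arrival pattern; the class and function names are hypothetical.

```python
from collections import deque


class PlaybackBuffer:
    """FIFO between a bursty network writer and a steady playback reader."""

    def __init__(self, prefill_samples):
        self.fifo = deque()
        self.prefill = prefill_samples
        self.started = False
        self.underruns = 0

    def write(self, samples):
        self.fifo.extend(samples)

    def read(self, count):
        # Playback starts only once the prefill level has been reached.
        if not self.started and len(self.fifo) >= self.prefill:
            self.started = True
        if not self.started:
            return [0] * count            # still prefilling: silence, not an underrun
        out, short = [], False
        for _ in range(count):
            if self.fifo:
                out.append(self.fifo.popleft())
            else:
                out.append(0)             # nothing to play: a dropout (pop/click)
                short = True
        if short:
            self.underruns += 1
        return out


def simulate(arrivals, prefill):
    """arrivals[i] = samples arriving at tick i; one sample is played per tick."""
    buf = PlaybackBuffer(prefill)
    for n in arrivals:
        buf.write([1] * n)
        buf.read(1)
    return buf.underruns


bursty = [0, 0, 3] * 10                   # same average rate, irregular timing
print(simulate(bursty, prefill=0), simulate(bursty, prefill=2))
```

With no prefill the reader runs dry before the first burst arrives; a two-sample prefill removes the dropouts at the cost of two ticks of added latency, which is the usual buffering trade-off.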

Transmission with Interconnect Cable:

The app decodes the compressed audio data into uncompressed PCM, a digital format compatible with the device's DAC.

The digital audio data is transmitted to the DAC using an interconnect cable, such as USB, SPDIF, or HDMI.
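Once decoded, the interconnect carries raw PCM, whose bit rate is simply sample rate × bit depth × channels. A small helper (hypothetical name) for that arithmetic:

```python
def pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Uncompressed PCM payload rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


print(pcm_bitrate(44_100, 16, 2))   # CD-quality stereo
print(pcm_bitrate(96_000, 24, 2))   # 96 kHz / 24-bit stereo
```

CD-quality stereo works out to 1,411,200 bit/s (about 1.4 Mbit/s) and 96 kHz/24-bit stereo to 4,608,000 bit/s, several times more than a typical compressed stream, which is why the decoded data goes over a dedicated link such as USB or SPDIF.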

DAC Communication:

The DAC, utilizing a specific communication protocol (e.g., I2S, USB), receives and processes the digital audio data.

Digital-to-Analog Conversion (DAC):

The DAC converts the digital audio data into an analog signal.

Amplification and Speaker Output:

The analog signal is sent to an amplifier, which powers the speakers, producing the final sound output.

Interconnect Cable Options:

USB:

Commonly used for transmitting digital audio data from the device to the DAC.

SPDIF:

Widespread support with options for both optical (Toslink) and coaxial cables.

Limited bandwidth and susceptibility to jitter.

Lacks significant inherent buffering; buffering is often implemented elsewhere in the audio chain.

HDMI:

High bandwidth, supports high-resolution audio formats.

Clock synchronization features potentially reduce jitter.

Complexity and potential HDCP issues.

I2S:

Dedicated audio interface designed for high-fidelity applications.

Carries the bit clock and the word-select (left/right) clock on lines separate from the data, which may reduce jitter.

May require careful implementation.
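The I2S clock rates follow directly from the sample format. This sketch assumes 32-bit slots per channel and a 256 × fs master clock; both are common but device-specific choices, so check the DAC datasheet. The function name is hypothetical. Whichever of these clocks the DAC actually uses to time its conversion is the one whose jitter matters most.

```python
def i2s_clocks(sample_rate_hz: int, bits_per_slot: int = 32,
               channels: int = 2, mclk_ratio: int = 256):
    """Return (bit clock, word-select clock, master clock) in Hz."""
    bclk = sample_rate_hz * bits_per_slot * channels  # one BCLK cycle per bit
    lrclk = sample_rate_hz                            # one WS period per stereo frame
    mclk = sample_rate_hz * mclk_ratio                # only if the DAC needs an MCLK
    return bclk, lrclk, mclk


print(i2s_clocks(44_100))   # 32-bit slots, 256 fs MCLK
```

At 44.1 kHz this gives a 2.8224 MHz bit clock and an 11.2896 MHz master clock.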

Types of DACs:

Resistor Ladder DAC:

Utilizes a network of resistors to create voltage steps corresponding to the digital input.

Known for its simplicity and transparency in audio reproduction.
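The "voltage steps" of an ideal N-bit ladder are binary-weighted: bit i contributes Vref / 2^(N - i), so the output is Vref × code / 2^N. The sketch below computes it both ways; it is an idealization (names are hypothetical), and real ladder DACs are limited mainly by resistor matching, which it ignores.

```python
def r2r_output(code: int, bits: int, vref: float) -> float:
    """Ideal output of an N-bit binary-weighted (R-2R) ladder."""
    if not 0 <= code < (1 << bits):
        raise ValueError("code out of range")
    return vref * code / (1 << bits)


def r2r_output_by_bits(code: int, bits: int, vref: float) -> float:
    """Same result, built up from each bit's binary-weighted contribution."""
    total = 0.0
    for i in range(bits):
        if (code >> i) & 1:
            total += vref / (1 << (bits - i))   # MSB adds Vref/2, LSB adds Vref/2^N
    return total


print(r2r_output(5, 3, 8.0), r2r_output_by_bits(5, 3, 8.0))
```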

Oversampling DAC:

Increases the sample rate of the digital audio signal before conversion.

Aims to improve audio quality by reducing quantization errors and enhancing the resolution of the analog signal.

Sigma-Delta DAC:

Uses a delta-sigma modulation technique to convert digital signals to analog.

Known for high resolution and low distortion, often employed in high-end audio applications.
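The delta-sigma idea can be illustrated with the simplest possible loop: a first-order modulator that integrates the difference between the input and its previous 1-bit output. The density of +1s then tracks the input level. This is only an illustration under that assumption (the name is hypothetical); DAC chips use higher-order modulators, often with multi-bit quantizers.

```python
def delta_sigma_1bit(samples):
    """First-order delta-sigma modulator: inputs in [-1, 1] -> stream of +1.0/-1.0."""
    integrator = 0.0
    feedback = 0.0
    out = []
    for x in samples:
        integrator += x - feedback               # accumulate the error so far
        y = 1.0 if integrator >= 0 else -1.0     # 1-bit quantizer
        out.append(y)
        feedback = y                             # fed back on the next sample
    return out


bits = delta_sigma_1bit([0.5] * 1000)
print(sum(bits) / len(bits))                     # average of the 1-bit stream
```

For a constant input of 0.5 the output settles into the repeating pattern +1, +1, +1, -1, whose average is 0.5; the analog filter after the modulator performs that averaging.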

I2S DAC:

Uses the I2S protocol for communication.

USB DAC:

Utilizes a USB connection for digital audio input.

Coaxial DAC:

Accepts digital audio signals through a coaxial cable.

Optical DAC:

Accepts digital audio signals through an optical cable (Toslink).

Balanced DAC:

Uses a balanced audio signal with two conductors and a ground.

Jitter Concerns in Streaming Technologies:

Bluetooth:

Jitter may occur due to variations in the timing of data transmission.

Devices often implement buffer mechanisms to mitigate jitter effects.

WiFi:

Jitter can result from variations in network conditions.

Buffer mechanisms are often employed to smooth out irregularities in data transmission.

Ethernet:

Lower jitter compared to wireless protocols.

Stable data transmission reduces the impact of jitter.

SPDIF:

Susceptible to jitter, especially in longer transmissions.

Limited inherent buffering; buffering may be implemented elsewhere in the audio chain.

Jitter Reduction Measures:

Bluetooth and WiFi:

Ensure a stable and interference-free wireless environment.

Use devices with advanced buffering capabilities.

Opt for higher-quality Bluetooth codecs (e.g., aptX HD) and WiFi protocols.

Ethernet:

Choose high-quality Ethernet cables.

Ensure proper network configuration and stability.

SPDIF:

Use high-quality cables for SPDIF connections.

Choose devices with built-in jitter reduction technologies.

Implement local buffering mechanisms in the audio playback chain.

In summary, the journey from opening a streaming app to playing the first note involves a complex interplay of protocols, media types, and technologies, each with its considerations for addressing jitter in the streaming process. Careful selection of components, including different types of DACs, and implementation of buffering strategies are key to achieving optimal audio quality and user experience.

Oversampling at a Glance:

Definition:

Oversampling involves increasing the sample rate of a digital signal before conversion. In digital audio, this means producing more samples per second than the original signal contains.

Mathematics of Oversampling:

Original Sample Rate: A 16-bit audio signal sampled at 44.1 kHz (standard CD quality).

Oversampling Ratio (N): Deciding to oversample by a factor of 4 (N = 4).

New Sample Rate: The new sample rate becomes 4 * 44.1 kHz = 176.4 kHz.

Worked Example:

Original Audio Signal:

Original bit depth: 16 bits

Original sample rate: 44.1 kHz

Oversampled Audio Signal:

New bit depth: Remains 16 bits (no change)

New sample rate: 176.4 kHz

Steps:

Original Signal Representation:

In a simplified example, a single 16-bit sample is represented as a binary number.

Example: Original 16-bit sample = 0101010111001100

Oversampling Process:

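For each original sample, three extra "in-between" samples are added (N - 1 = 3 for N = 4). In this simplified example they are plain copies of the original sample (a zero-order hold); a real oversampling filter instead computes interpolated values from the neighboring samples.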

Example:

Original: 0101010111001100

Extra samples: 0101010111001100, 0101010111001100, 0101010111001100

New Signal Representation:

The oversampled signal now has four samples for every original sample.

Example: Oversampled signal = 0101010111001100 0101010111001100 0101010111001100 0101010111001100
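Interpolating oversampling is usually described as zero-stuffing (inserting N - 1 zeros after each sample) followed by a low-pass FIR filter. The sketch uses a triangular FIR kernel, which turns zero-stuffing into plain linear interpolation. That choice is made only so the output is easy to check by eye; real oversampling filters are much longer (for example windowed-sinc or half-band designs). The function names are hypothetical.

```python
def zero_stuff(samples, n):
    """Insert n - 1 zeros after every sample, raising the rate by n."""
    out = []
    for s in samples:
        out.append(s)
        out.extend([0.0] * (n - 1))
    return out


def triangular_kernel(n):
    """FIR taps that turn a zero-stuffed signal into linear interpolation."""
    return [1.0 - abs(k) / n for k in range(-(n - 1), n)]


def fir_centered(x, taps):
    """Convolve x with symmetric taps, keeping the output aligned with x."""
    half = len(taps) // 2
    y = []
    for i in range(len(x)):
        acc = 0.0
        for j, h in enumerate(taps):
            idx = i + j - half
            if 0 <= idx < len(x):
                acc += h * x[idx]
        y.append(acc)
    return y


def oversample_linear(samples, n):
    return fir_centered(zero_stuff(samples, n), triangular_kernel(n))


print(oversample_linear([0.0, 4.0, 8.0], 4))
```

The original samples pass through unchanged at every fourth position, and the three new samples between 0 and 4 become 1, 2 and 3 rather than copies. Repeating samples, as in the simplified example, corresponds to a rectangular kernel instead of a proper low-pass filter.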

Pros and Cons of Oversampling:

Pros:

Increased Resolution: Improved resolution and accuracy in representing the audio signal.

Better Filtering: Moves the unwanted images further above the audio band, allowing gentler and more effective reconstruction (anti-imaging) filtering and reducing unwanted artifacts.

Cons:

Increased Processing Load: Requires additional computational power, a concern in resource-limited devices.

Storage Requirements: Increases data amount, potentially impacting storage.

In oversampling, original samples are interpolated to create additional samples, effectively increasing the sample rate.

Smoother Curves:

Oversampling contributes to smoother curves in the audio signal by providing more data points, especially in capturing rapid changes.

Noise Reduction:

The increased sampling rate allows for more precise filtering of high-frequency noise during digital-to-analog conversion, enhancing the signal-to-noise ratio.
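The size of this effect can be estimated with two textbook formulas: the ideal SNR of an N-bit quantizer for a full-scale sine, 6.02N + 1.76 dB, and the in-band noise reduction from oversampling by a ratio R, 10·log10(R) dB. The second assumes white quantization noise spread evenly up to half the new sample rate, with no noise shaping. The function names are hypothetical.

```python
import math


def ideal_snr_db(bits: int) -> float:
    """Ideal SNR of an N-bit quantizer for a full-scale sine."""
    return 6.02 * bits + 1.76


def oversampling_gain_db(ratio: float) -> float:
    """In-band quantization-noise reduction from oversampling alone."""
    return 10 * math.log10(ratio)


def equivalent_extra_bits(ratio: float) -> float:
    """The same gain expressed in bits (about 6.02 dB per bit)."""
    return oversampling_gain_db(ratio) / 6.02


print(ideal_snr_db(16), oversampling_gain_db(4), equivalent_extra_bits(4))
```

By this estimate, 4× oversampling of a 16-bit signal buys about 6 dB, roughly one extra bit. The much larger gains seen in practice come from noise shaping, as in delta-sigma DACs, rather than from oversampling alone.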

Higher Frequency Distortion:

While reducing noise, oversampling may introduce higher frequency components, potentially leading to higher frequency distortion.

Potential Benefits:

Improved Resolution: Can increase the effective resolution of the digital signal.

Reconstruction (Anti-Imaging) Filtering: Higher sample rates allow more effective filtering of the images above the audio band, reducing artifacts.

Considerations:

Processing Load: Computational demand for oversampling can be higher, especially for devices with limited processing power.

Storage and Bandwidth: Increases data processing and transmission requirements, impacting storage and bandwidth.

Summary:

In theory, oversampling contributes to a more accurate representation of the audio signal by providing additional samples and improving filtering. Trade-offs involve considerations such as increased processing demands and potential higher frequency distortion.

In real-world scenarios, the effectiveness of oversampling depends on implementation, including the quality of interpolation algorithms, anti-aliasing filter design, and digital-to-analog converter capabilities. It's a tool used in audio engineering with careful consideration of system requirements and performance goals.


One could say oversampling provides the same benefits as spread spectrum, minus the chipping code.

> DSD, being a 1-bit audio format, is generally less susceptible to jitter,

False. Timing errors are more critical in DSD.

> ...oversampling may introduce higher frequency components, potentially leading to higher frequency distortion.

How so? Is something turning shaped noise into distortion?

> Jitter Reduction Measures:
>
> Bluetooth and WiFi:
>
> Ensure a stable and interference-free wireless environment.
>
> Use devices with advanced buffering capabilities.
>
> Opt for higher-quality Bluetooth codecs (e.g., aptX HD) and WiFi protocols.

Of little practical meaning/applicability. Jitter (close-in and/or far-out phase noise) should be controlled by putting master clocks very close to the DAC. ASRC and/or FIFO buffering can be used to attenuate jitter or to pretty much eliminate incoming jitter, respectively.

> Balanced DAC:
>
> Uses a balanced audio signal with two conductors and a ground.

'Balanced DAC' is a term that usually refers to a DAC which outputs in-phase and inverted-phase analog signals, which can then be used to attenuate common-mode noise coming out of the DAC.
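The in-phase/inverted-phase idea can be sketched in a few lines: the wanted signal appears with opposite signs on the two legs, noise common to both legs appears with the same sign, and taking the difference keeps the first while cancelling the second. This is an idealization with perfectly matched legs (real circuits have finite common-mode rejection), and the function name is hypothetical.

```python
def differential_receive(positive, negative):
    """Recover the signal from in-phase and inverted legs; common-mode noise cancels."""
    return [(p - n) / 2 for p, n in zip(positive, negative)]


signal = [0.1, -0.2, 0.3]
noise = [0.05, 0.05, -0.02]                      # identical on both legs
pos_leg = [s + c for s, c in zip(signal, noise)]
neg_leg = [-s + c for s, c in zip(signal, noise)]
print(differential_receive(pos_leg, neg_leg))    # approximately equal to signal
```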


> One can say oversampling provides the same benefits as spread spectrum, minus the chipping code

First time I've seen that claim. Care to explain more?

> False. Timing errors are more critical in DSD.

Mark, you're spot on; thanks for your response. As for oversampling, there's a reason some DACs have a switch to turn it on and off. Especially on good R2R DACs, some may prefer no oversampling or x2; maybe x4 is a tad too much, and x8, well, that must be a crappy DAC.

