Woo... So I can't read the small xterm and differentiate between 1.8 and 1.0 (with the slash).

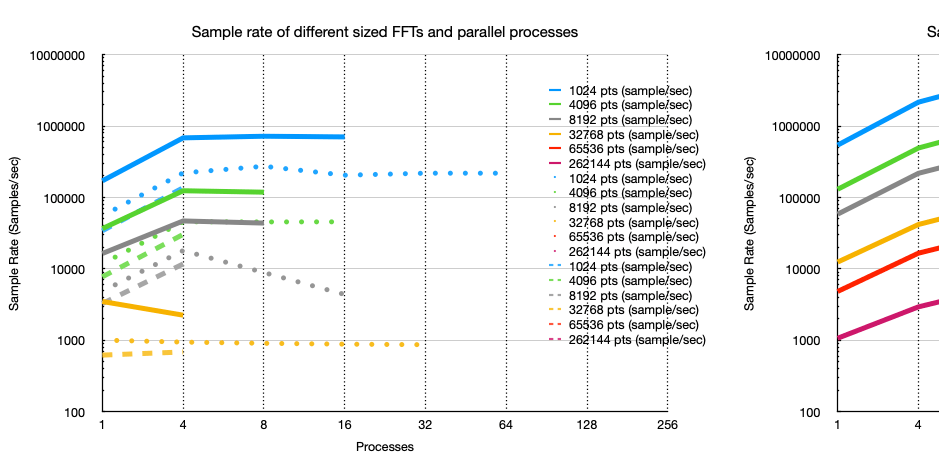

CPU only results for RP 4 at 1.8GHz (stock board and image) vs zero 2 (1GHz) vs ODroid C2 (1.5GHz) vs i7 (3.2GHz). The RP4 I noted is running in 32bit ("arm7") and the Zero is running 32bit.

On the left side: RP4 line, Zero 2W dash and C2 as dots. On the right i7 with 4 cores in the VM.

So first things - the RP4 outperforms the C2 and, unsurprisingly, blows the zero out of the water. There's no heatsink attached and it didn't throttle below 1.8GHz. And it's a decent performer for a simple SoC - the CM4 shares the same IC so that looks promising - but they must increase memory performance if they want to enter the compute space (or "compute" is just a load of marketing hogwash).

I don't know what was going on with the 32Kpts FFT, perhaps cache thrashing, but also this is a 2GB RP4 running a desktop and desktop OS image. It is running a faster 64GB SD card (although this isn't used). Swap use is non-existent. It may be the CPU throttling (although it's not reporting it).

So a single RP4 isn't going to do 1MSPS 1Mpt FFT in realtime (even the i7 won't). A couple of RP4s will do 1MSPS 1024pts FFT if the FFT is split and, with a 10 sample average running, an RP could do 4096pts FFT at 100Ksps.

In other news - after the 3 hour build I have the zero with a vkFFT compiled for OpenCL but it was giving me an odd exit return code and I got distracted.

The Mrs has started asking questions.. like "I thought that you were making something for headphones" and the telling "that's big for headphones" looking at the 16 tube OTL HP amplifier that will need some power transformers at some point.. Given some of the electronics retailers are showing out of stock for MG SAC305 solder.. and she found it difficult sourcing an iPad for her large new Cricut gadget.. that's going to be done next year.. and I'm slumming it with this:

So after fighting the RP4 onscreen keyboard 'matchbox'.. which has issues, I've switched to 'onboard' which is far better. Recalibrated the touchscreen and I'm now installing Vulkan... which will take a while to download, build and install. Then I can get vkFFT running and we can see if trying to make an OpenGL ES2.x cross compile is worth the effort.

The test FFT program I've been using at the moment only runs CPU and performs a full FFT per sample in single precision float. So it will be a good comparison with vkFFT.

However the OS is still 32bit.. so I'll probably have to start over again with a 64bit image.

EDIT: nope.. only needs an rpi-update for the firmware and a boot.ini update: https://medium.com/for-linux-users/...pi-4-faster-with-a-64-bit-kernel-77028c47d653

It runs faster 🙂

Just running the same arm test on 1024, 4096, 8192 and 32k point FFTs it seems that switching from 32bit to 64bit kernel and using aarch64 compiled version gives a 20% boost to performance. Not to be sniffed at.

I had a look at apt and the RPi RP4 OS desktop image is all 'arm' ie 32bit, that includes the desktop server and the Vulkan install. Doing the same search with aarch64 shows the GNU 10 compiler chain but nothing else. I'd need to somehow force linux to switch the installed components - or reinstall a full 64bit version of the OS, including the drivers etc.

I'm just letting vkFFT compile.. it's faster than the zero but I suspect that it'll also compile 32bit..

Code:

pi@raspberrypi:~/VkFFT/build $ ./Vulkan_FFT -benchmark_vkfft -X 32768 -B 1 -N 100

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

VkFFT System: 32768x1x1 Batch: 1 Buffer: 0 MB avg_time_per_step: 3.475 ms std_error: 0.007 num_iter: 100 benchmark: 73 scaled bandwidth: 0.3 real bandwidth: 0.6

So that's with a 32bit Vulkan and 32bit OS, plus 32bit vkFFT.

So a 32K FFT on the GPU at 3.475ms per FFT, so 0.003475s per FFT = 287.7 FFTs per second.

Code:

pi@raspberrypi:~/VkFFT/build $ ./Vulkan_FFT -benchmark_vkfft -X 1048576 -B 1 -N 100

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

VkFFT System: 1048576x1x1 Batch: 1 Buffer: 8 MB avg_time_per_step: 118.718 ms std_error: 0.027 num_iter: 100 benchmark: 69 scaled bandwidth: 0.3 real bandwidth: 0.8

So 1Mpt FFT processed in 118.7ms .. so that's 0.118718s which is 8.4 FFTs per second.

So those are single shot (ie not batched) but running 100 FFTs on it for the benchmark.
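The conversion used for the two runs above is plain arithmetic, and a minimal sketch of it follows. The function name and the `ffts_per_step` parameter are illustrative only and are not part of VkFFT; the default assumes one benchmark step is a single transform, which may not match what the benchmark actually times.

```python
def ffts_per_second(avg_time_per_step_ms: float, ffts_per_step: int = 1) -> float:
    """Convert a per-step time in milliseconds into transforms per second.

    ffts_per_step is an assumption about what one benchmark step contains.
    """
    if avg_time_per_step_ms <= 0:
        raise ValueError("step time must be positive")
    return ffts_per_step * 1000.0 / avg_time_per_step_ms


if __name__ == "__main__":
    # avg_time_per_step values copied from the two benchmark runs above
    print(round(ffts_per_second(3.475), 1))    # 32K point run
    print(round(ffts_per_second(118.718), 1))  # 1M point run
```

If a step turns out to cover more than one transform, passing that count as `ffts_per_step` scales the rate accordingly.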

Not bad but I suspect 64bit will make them faster.

For reference the pipeline code does 3,468 32k FFT per second on the 64bit CPU. However I've not tried a 1Mpt on the CPU code.. let me try.

Ok, so I've done a 'scaled' 1M on the CPU with 1024 samples into a 1M FFT space. The scaling shows 18 FFT/sec for the 1M FFT which aligns with the testing results for the other sizes (or in the graph-speak above that's 24 samples/sec on a 1Mpt FFT - way off the bottom of the graph).

So it seems that vkFFT is slower than the 'adjust coefficients'. Also I'm unsure if that is the GPU kernel execution time or includes the data transfer time between CPU and GPU memory areas.

The discrepancy (ie you'd expect GPUs to be faster) is down to how well the GPU works on the memory bus. Standalone GPU graphics cards outperform the i7 memory bus and usually use specialist formats of RAM for that reason. The VideoCore may not be working at its best, what with the drivers etc running 32 bit and other such fun.

I think the only way to check is to switch to 64bit OS Image and install everything 64bit from the start.

In other news - the test demo for 3D, glxgears, gives a nice 57fps on the IPS screen.

So I've rebuilt the entire RPI in 64bit (arm64 only). Instead of using the automated Vulkan tools I've had to manually build a 64bit version using this excellent guide: install-vulkan-on-raspberry-pi.html

The RPI in general use flies along and the builds seem faster - both kernel and all the operating system files (including windowing etc) are running 64bit. I'm just now waiting for the bits to compile..

64bit Vulkan and VkFFT - 1Mpt FFT

Code:

pi@raspberrypi:~/VkFFT/build $ ./Vulkan_FFT -benchmark_vkfft -X 1048576 -B 1 -N 100

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

VkFFT System: 1048576x1x1 Batch: 1 Buffer: 8 MB avg_time_per_step: 116.837 ms std_error: 0.027 num_iter: 100 benchmark: 70 scaled bandwidth: 0.3 real bandwidth: 0.8

So no real difference - I'll call that within the error margin.

Code:

pi@raspberrypi:~/VkFFT/build $ ./Vulkan_FFT -benchmark_vkfft -X 32768 -B 1 -N 100

WARNING: v3dv is neither a complete nor a conformant Vulkan implementation. Testing use only.

VkFFT System: 32768x1x1 Batch: 1 Buffer: 0 MB avg_time_per_step: 3.389 ms std_error: 0.001 num_iter: 100 benchmark: 75 scaled bandwidth: 0.3 real bandwidth: 0.6

So that isn't much faster on 32K either - 295 FFT/sec but still slower than the CPU.

I'll try ffts next but I couldn't get that to compile properly. The 1M CPU pipeline code shows 17/sec so close to the 18 it's within the error margin. This pretty much shows it's the memory bus that's slow on the RP series.

VkFFT measures the kernel execution time of a consecutive FFT+iFFT pair in -benchmark_vkfft, so 118.7ms per iteration on 1M length FFT corresponds to ~17s/sec in your notation - similar to CPU results. Almost identical values of real bandwidth between 32K and 1M results (0.6GB/s and 0.8GB/s) show that the code is mostly bandwidth limited on GPU. CPU being able to get higher results on a 32K system indicates that it most likely has a cache size big enough to store the system and avoid more data transfers to RAM.

Good point - that makes sense. Given you wrote it 🙂

I think this confirms that focusing on reducing memory utilisation or increasing density is the only way to increase performance (ignoring overclock). I was hoping the GPU compute kernels would be more efficient during gather/scatter.

So "Dear ImGUI" works with OpenGL ES 3.1 and has a wider support of device types compared to nanogui (that requires 3.3 which the RP4 doesn't have at the moment and may never have). There's also a lot of extension libraries for this too.

I've just tried the Vulkan backend implementation of imgui and that works well on the RP4 with a touch screen - I think we have our OpenGL GUI for our spectrum analyser.

So my intent here is to make a View-Model-Controller style architecture - this means that the Model is the FFT/spectrogram/harmonics etc, the View is the OpenGL rendering of the model information, and the control is the GUI operation of the features for that. It does get a little complex if we start overlaying things - such as multiple traces etc but for now that gives a nice starting point. Apple software uses MVC and so do most desktops. It doesn't have to be complex but the loose coupling makes it easier to extend or adjust without having to hack too much.

I've also been thinking of the sample processing chain given the test results. Given those results it makes sense to have a vkFFT offline based solution which would work for larger machines with discrete GPUs - perhaps an external GPU for the Mac mini. A realtime option (per-sample) is possible too. I think the next exploration is what can be done to break the problem down for each RP4. Some ideas in this space:

- windowing of sample effect - so a sample may only affect X samples either side of the sample position in a much larger N sized FFT. Using a window involves a multiplication against the window across that 2X sample range. So as long as the computation time of 2X is far less than applying to the full N that will save time for the larger FFTs. Hann, Blackman-Harris etc could all be used to reduce the scope of processing for each sample.

- Averaging can be done in a couple of ways - sample averaging and FFT power/bin averaging. The former gives a performance increase at the expense of accuracy over the FFT. An averaged signal is then applied as a single averaged sample across the FFT. The result is simply a lower resolution of averages but we gain from the averaging effect on gaussian noise so the averaging gives a lower noise floor (but not for correlated noise). It's possible to track the upper and lower values of the averaged bin too - that means 3x the work (upper, lower and average FFTs) but that may be interesting. Also you should be able to define if the sampling used is average/highest value/lowest value etc.

- FFTs can be split based on frequency - a number of processors can process a sub-range of the frequency range. The resulting FFT is not complete - it only holds the impact of the samples within that frequency AND each processor must process each sample (or averaged sample) as the frequency could occur anywhere. This means we can't only activate the low frequency if we have enough data - well not for realtime.

Once the FFT slices have been created, to get the final FFT is simply an addition for every bin with their corresponding bin. This can be sped up by simply tracking the FFT start and finish bin where the changes occur. The resulting FFT is then a full FFT.
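The frequency split and the bin-by-bin addition described above can be sketched as follows. This is only an illustration: it uses a slow direct DFT rather than a real FFT, and `partial_dft`, `split_ranges` and `combine` are hypothetical helper names. Each "worker" sees every sample but fills in only its own bin range, and adding the slices gives back the full spectrum.

```python
import cmath


def partial_dft(samples, first_bin, last_bin):
    """Compute only bins first_bin..last_bin-1 of the DFT; other bins stay zero."""
    n = len(samples)
    out = [0j] * n
    for k in range(first_bin, last_bin):
        out[k] = sum(
            x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(samples)
        )
    return out


def split_ranges(n_bins, n_workers):
    """Divide n_bins into n_workers contiguous (start, stop) ranges."""
    step, extra = divmod(n_bins, n_workers)
    ranges, start = [], 0
    for w in range(n_workers):
        stop = start + step + (1 if w < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def combine(slices):
    """Add the slices bin by bin to rebuild the full spectrum."""
    return [sum(values) for values in zip(*slices)]


if __name__ == "__main__":
    samples = [((i * 7) % 5) - 2.0 for i in range(16)]
    # Every worker processes all samples, but only for its own bins.
    slices = [partial_dft(samples, a, b) for a, b in split_ranges(16, 3)]
    full = combine(slices)
    print(len(full), split_ranges(16, 3))
```

Because the slices do not overlap, the addition could be replaced by copying each worker's start-to-finish bin range, which is the speed-up mentioned above.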

I was also thinking about zooming and if there's a way of sub-ranging data processing based on the current zoom.

1. Zoom of frequency makes the span window smaller around a central point.

2. Zoom of amplitude is simply scaling of the FFT bin.

3. Zooming into a frequency resolution increases the processing required for the defined frequency range. All samples still need to be processed, however with a zoom of resolution you're interested in a specific sub-range of processing per sample. Windowing and splitting can still be used to reduce and spread processing but obviously with less averaging the rate of samples processed goes up. That then gives you your resolution increase - the rest of the FFT outside any sample affecting windowing then has to be sacrificed for this processing.

This doesn't stop you (if you have enough oomph) from setting up a separate zoom or resolution for a specific area of interest, for triggering on the same sample stream for example.

4. It is possible to record all samples, regardless of averaging etc then offline process for scroll and zoom through the recorded back data. Like a DSO scope.

Scroll through zoomed frequency is therefore just a change in centre point for the zoom. The issue is that the entire back buffer has to be replayed through the processing (see point 3). Thus having a history buffer is required (point 4).
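For the frequency zoom and scroll in points 1 and the paragraph above, the mapping from a centre frequency and span to FFT bins can be sketched like this. The function name and parameters are hypothetical, and it assumes a real input signal so that only bins up to Nyquist are of interest.

```python
import math


def zoom_bin_range(sample_rate_hz, fft_size, centre_hz, span_hz):
    """Return (first_bin, last_bin), inclusive, covering centre_hz +/- span_hz / 2."""
    bin_width = sample_rate_hz / fft_size      # Hz per bin
    nyquist_bin = fft_size // 2
    lo = centre_hz - span_hz / 2
    hi = centre_hz + span_hz / 2
    # Clamp to the bins that exist for a real input signal.
    first = max(0, math.floor(lo / bin_width))
    last = min(nyquist_bin, math.ceil(hi / bin_width))
    return first, last


if __name__ == "__main__":
    # Zoom: 5 kHz span around 10 kHz, 256 kSPS feed, 1024 point FFT
    print(zoom_bin_range(256_000, 1024, 10_000, 5_000))
    # Scroll: same span, new centre point
    print(zoom_bin_range(256_000, 1024, 20_000, 5_000))
```

Scrolling only changes `centre_hz`; whether the bins in the new range already hold data or need the history replayed is a separate question for the model.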

With that in mind. I'll program a basic OpenGL FFT view that displays the zoom model output. As we're essentially adjusting the processing rather than a view of an existing processing that's really a model. Although the same data in the end. That distinction means we can use the same view, without complicating it. The complexity then sits within the model which given the changes to the processing is going to be complex anyway.

Doing offline processing is then simple - depending on the processing within the model required - the model can do online or offline as required. The view should not need to know about this other than that the rate of updates slows or varies. A 10 second sample ring buffer then gives us plenty of time and space. And our 'normal' view will be a zoom simply set to a specific default. In the end we only need enough buffer space to handle any block processing - ie transfer or processing.
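A sample ring buffer of this kind can be sketched with a bounded deque. `SampleHistory` and its methods are hypothetical names, and a real implementation would more likely be a preallocated array in C++; this only shows the behaviour of keeping the most recent N seconds.

```python
from collections import deque
from itertools import islice


class SampleHistory:
    """Keeps the most recent `seconds` of samples; older samples fall off the end."""

    def __init__(self, sample_rate_hz, seconds):
        self.sample_rate_hz = sample_rate_hz
        self.samples = deque(maxlen=int(sample_rate_hz * seconds))
        self.total_written = 0  # running count, useful for time-stamping triggers

    def write(self, block):
        """Append a block of samples, discarding the oldest when full."""
        block = list(block)
        self.samples.extend(block)
        self.total_written += len(block)

    def latest(self, count):
        """Return the most recent `count` samples, oldest first."""
        count = min(count, len(self.samples))
        start = len(self.samples) - count
        return list(islice(self.samples, start, None))


if __name__ == "__main__":
    history = SampleHistory(sample_rate_hz=10, seconds=1)
    history.write(range(25))
    print(history.latest(3), len(history.samples), history.total_written)
```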

GUI OpenGL 'window' view --gets data from--> Model (zoom parameters) --> back end

Now thinking a little in terms of features so we don't end up implementing something dead end. Although refactoring isn't a problem, a massive architectural change that 5 minutes of thought could have avoided isn't a good idea.

If we have a spectrogram - a history of FFTs, then our view will have a spectrogram view and a view of the FFTs. I would expect a couple of features:

1. I can scroll around the spectrogram and the FFT display shows that FFT with the current processing configuration. For example the peak recognition 'tracks' the peaks correctly.

2. scrolling frequency shifts the spectrogram adding blank space as the current capture shifts (if realtime zoomed) or reprocesses data in the history to update the spectrogram.

This means there's some data processing 'architecture' patterns we can build in from day one. There's a requirement to be able to get data samples over time from the history to recreate data on the front end. Good thing we store all the samples.. So this also means the off-line processing pipeline should be able to be presented with a time, frequency range and resolution.

Next up is the useful stuff like THD and SNR measurements.

If I want a THD/SNR measurement then I could apply the measurement now - in realtime. Or I could opt for a measurement based on higher resolution but longer processing time crunching of the recorded data.
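As a rough sketch of the THD side of that measurement, the ratio can be taken straight from FFT bin magnitudes. This assumes linear (not dB) magnitudes and a test tone that sits exactly on a bin so that leakage can be ignored; `thd_from_bins` is a hypothetical helper, not existing code from this project.

```python
import math


def thd_from_bins(magnitudes, fundamental_bin, harmonics=5):
    """THD as the root-sum-square of harmonic magnitudes over the fundamental.

    `harmonics` counts the harmonics included, starting at the 2nd.
    """
    fundamental = magnitudes[fundamental_bin]
    if fundamental <= 0:
        raise ValueError("no signal in the fundamental bin")
    power = 0.0
    for h in range(2, harmonics + 2):
        k = h * fundamental_bin
        if k >= len(magnitudes):
            break  # harmonic lies beyond the spectrum supplied
        power += magnitudes[k] ** 2
    return math.sqrt(power) / fundamental


if __name__ == "__main__":
    spectrum = [0.0] * 64
    spectrum[4] = 1.0    # fundamental
    spectrum[8] = 0.03   # 2nd harmonic
    spectrum[12] = 0.04  # 3rd harmonic
    print(f"THD = {100 * thd_from_bins(spectrum, 4):.2f}%")
```

The same function works for the realtime and the offline case; only the resolution of the spectrum passed in differs.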

If I want to detect odd signals - given the processing time and the resolution/span - that's likely to be offline.

Personally the first thing I want the scope to do on startup is display a configured default. For me that is likely a fast single bin-to-pixel 1-50KHz FFT in realtime from an averaged 256KSPS/1MSPS feed, with a peak reading for the 10 highest peaks. Persistence display for this would be good too. Dot mode or bars. When a sine wave is put in - the peaks are identified automatically.
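A minimal sketch of the "10 highest peaks" reading, assuming a peak is simply a bin that is larger than its left neighbour and at least as large as its right one. `top_peaks` is a hypothetical name, and a real version would probably add a noise threshold and interpolation between bins.

```python
def top_peaks(magnitudes, count=10):
    """Return up to `count` (bin, magnitude) local maxima, largest first."""
    peaks = [
        (k, magnitudes[k])
        for k in range(1, len(magnitudes) - 1)
        if magnitudes[k] > magnitudes[k - 1] and magnitudes[k] >= magnitudes[k + 1]
    ]
    peaks.sort(key=lambda p: p[1], reverse=True)
    return peaks[:count]


if __name__ == "__main__":
    spectrum = [0, 1, 0, 5, 0, 3, 3, 0]
    print(top_peaks(spectrum, count=2))
```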

Pinch zoom and swipe scrolling is possible on the touch screen. They work very well as long as the user sees the feedback quickly. This also means possibly using the existing data scaled or shifted to show the immediate feedback but then have the system fill in the detail as the processing pipeline provides the data. The user shouldn't be sat waiting for an operation to finish before they can push a button to cancel/move away. In fact the system should automatically handle the cancel of processing requested anyway. So processing should be relatively iterative - for example processing 1 Mpt fft with 1M samples at 17 samples/sec or even 1000 samples/sec means we can cancel without waiting through the entire processing request.
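One way to keep long requests cancellable is to process in small chunks and check a cancel flag between them. The sketch below uses a `threading.Event` as that flag; `process_in_chunks` and `handle_chunk` are hypothetical names standing in for whatever the pipeline actually does per block.

```python
import threading


def process_in_chunks(samples, chunk_size, handle_chunk, cancel):
    """Feed samples to handle_chunk in pieces; stop early if cancel is set.

    Returns the number of samples actually processed.
    """
    done = 0
    while done < len(samples) and not cancel.is_set():
        chunk = samples[done:done + chunk_size]
        handle_chunk(chunk)
        done += len(chunk)
    return done


if __name__ == "__main__":
    cancel = threading.Event()
    seen = []

    def handle_chunk(chunk):
        seen.append(len(chunk))
        if len(seen) == 2:
            cancel.set()  # stands in for the user zooming or scrolling away

    print(process_in_chunks(list(range(10)), 3, handle_chunk, cancel))
```

The worst-case wait after a cancel is then one chunk's processing time rather than the whole request.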

Loading and storing data should be relatively straightforward as the source of the data.

As samples are always appearing, the screen is only going to be as realtime as the last processing update. If we want a form of trigger, then an option here is to allow triggers to occur at the fastest rate that the processing has been configured for (or a separate pipeline has been configured for). Given we record all the samples - we should be able to record a trigger at the point of the samples, passing that point forward then allows slower processing of the data around the trigger to display the trigger event.

As a detect - trigger and alert in realtime through an electrical pin to trigger an oscilloscope - we could do this - as long as the sample rate is high enough in the processing pipeline.

There's not that much difference between per-sample and data blocks. Only the rate of FFT change and keeping the processing time within that time constraint. Essentially most processing will take an input of a complete FFT. Some components we can create on the pipeline can be faster and more specialist and thus can sit on the faster sample-rate FFT, in fact it may be better to make them change orientated for less data processing requirements.

This sounds like a lot - however putting in a little architecture should make it more flexible without losing speed in needless copying or reprocessing of data.

Either way I've got a good starting architecture on paper.

Can I clarify that when you say samples/second you mean FFTs/second - a completely different thing...

Sure.

Samples per second - the rate of samples from the ADC on the output bus (following internal filtering/decimation)

The FFT/sec is the rate of FFTs (sizes vary as above) per second generated.

In the realtime processing each sample received results in a delta multiplied by the coefficients which is then applied across the current FFT. So for each sample a new updated FFT is available. Samples are not batched into a buffer and the buffer FFT'd.
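One reading of that per-sample update, sketched below, is an incremental DFT over a circular buffer: when a new sample replaces an old one, the difference is multiplied by precomputed coefficients and added to every bin. This is an interpretation for illustration, not the actual pipeline code, and `IncrementalDFT` is a hypothetical name; the slow `direct_dft` is there only to check the result.

```python
import cmath


def direct_dft(samples):
    """Reference O(N^2) DFT, used only to check the incremental version."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]


class IncrementalDFT:
    """Keeps an N-point DFT of a circular buffer up to date one sample at a time."""

    def __init__(self, size):
        self.size = size
        self.buffer = [0.0] * size  # circular sample store
        self.bins = [0j] * size     # current spectrum
        self.pos = 0                # next slot to overwrite
        # Precomputed coefficients exp(-2*pi*i*m/N) for m = 0..N-1
        self.twiddle = [cmath.exp(-2j * cmath.pi * m / size) for m in range(size)]

    def push(self, sample):
        """Replace the oldest sample and apply the change to every bin."""
        delta = sample - self.buffer[self.pos]
        self.buffer[self.pos] = sample
        for k in range(self.size):
            self.bins[k] += delta * self.twiddle[(k * self.pos) % self.size]
        self.pos = (self.pos + 1) % self.size


if __name__ == "__main__":
    dft = IncrementalDFT(8)
    for i in range(20):
        dft.push(((i * 37) % 11) / 3.0)
    reference = direct_dft(dft.buffer)
    print(max(abs(a - b) for a, b in zip(dft.bins, reference)))
```

Each `push` costs N multiply-adds, which is why the sample rate capacity falls as the FFT size grows. The spectrum is of the buffer in storage order, so phases rotate relative to a time-ordered FFT while magnitudes are unaffected.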

For offline FFT - the samples are recorded into a larger block (say 32K or 1M) and the current block is used to create a new FFT. So sample rate and FFT rate are independent.

In the CPU results graphs (realtime), the FFT size is fixed, for example 1024 or 32K, and the maximum online sample processing rate is recorded - that's the Y axis.

The X axis shows the number of processes running in parallel, so each point is the total samples/sec (Y axis) achieved by that many processes, each processing that FFT size.

The reason samples/sec and FFTs become related is the realtime coefficient approach: a larger FFT increases the processing time per sample, so the sample rate capacity drops.

Perhaps the graph Y axis should be labelled "Sample rate capacity", indicating the maximum rate achieved with that FFT size and parallelism. Naturally you want the capacity to be higher than the ADC sample rate for realtime. For offline, the capacity indicates how many FFTs of the defined sample set size can be generated per second.

For the GPU graphs, those are offline and FFT/sec.

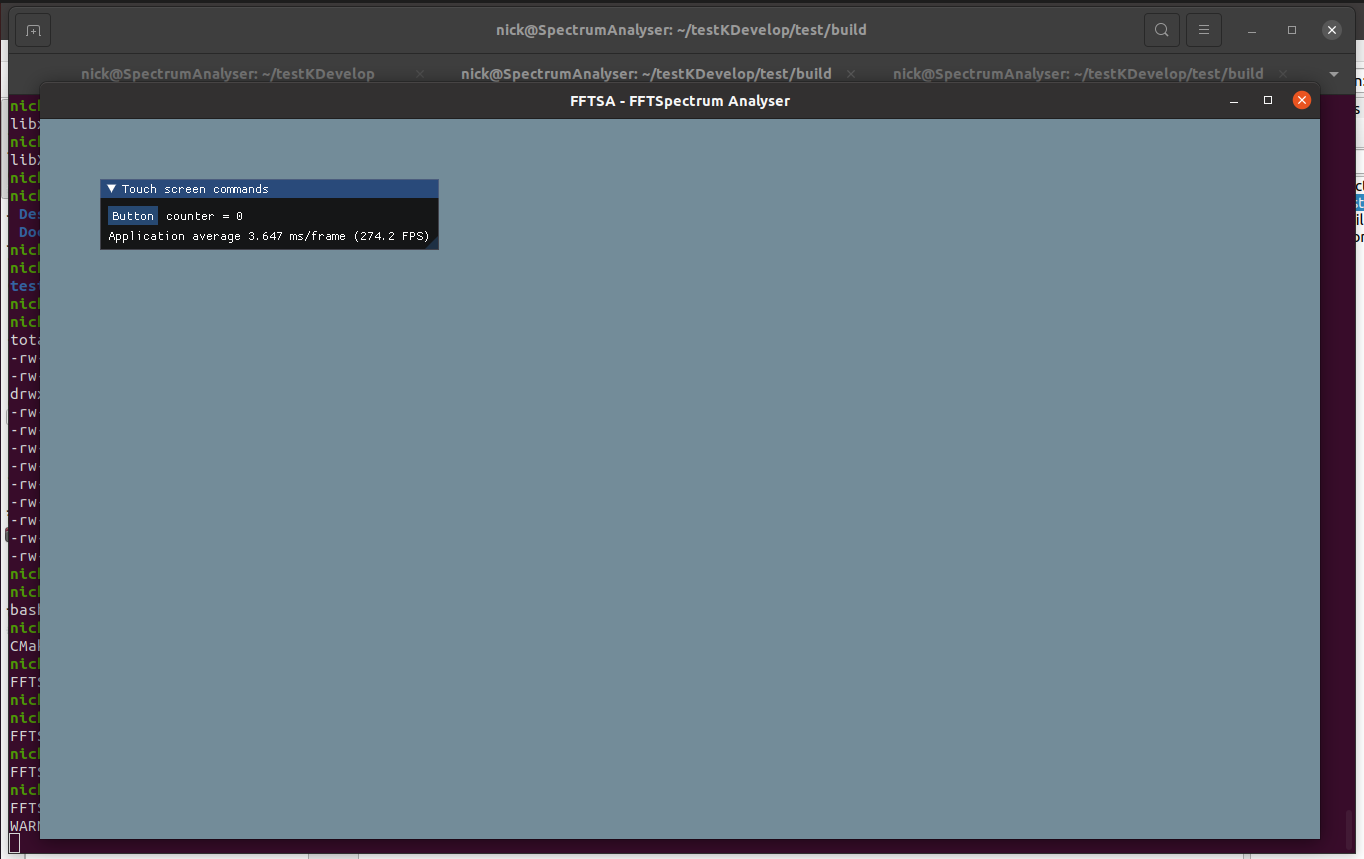

So it's been a while since my professional days of coding (2005 time frame to be exact), however I've recently had to save the company's deliveries by stepping in and coding in Java and C++ with KDevelop. This is my first time using IMGUI, CMake etc. in anger.

The hello world of GUIs.. so part stolen from the example but restructured into C++. I could have done Python but the components would still have been lower languages. In the end the GUI management may still switch to Python to help spongability and adding of features/integration etc should anyone else want to help. I've also created a GitHub "fftsa" repo so once there's enough to make sense I'll upload the sources - probably under GPL licensing.

What is nice is that this works on the VM. So although vkFFT won't work there, at least the GUI/OpenCL development can be done on both the larger system and the Pi. I'll not spam the forum with development progress but stick with the interesting bits around signal processing, features etc. The initial baby steps - I'll stick with these six initially:

1. Simple FFT View rendering

2. "Realtime" CPU fed with a testblock -> 1024pt FFT

3. Develop Pan and Zoom, size button menu

4. Sample sources for sound card input and GPIO input

5. Offline FFT using vkFFT and increasing support for larger FFTs.

6. Cursors and measurement

After that it's incrementally adding features and functionality into the skeleton.

Idea for Spectral Leakage reduction

I've been looking at spectral leakage, specifically if a mode that works offline can be built to reduce spectral leakage. I have an idea thus:

1. For each FFT bin - create a narrow-band FFT from the original sample set for that frequency range, with the FFT size adjusted so it spans a whole number of cycles. This reduces the spectral leakage for the bin.

2. Coalesce the FFT bins into the final FFT.

So this is a reduction rather than a complete removal (low frequency will be difficult until the size of the sample set is large).
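One possible reading of step 1, for a single frequency: trim the block to a length that holds a whole number of cycles, then evaluate one DFT bin over it. This is a sketch only, in Python. `whole_cycle_length` and `single_bin_amplitude` are made-up helpers, and the 50 Hz / 1 kHz / 256-sample figures are just an example.

```python
import cmath
import math


def whole_cycle_length(freq_hz, sample_rate_hz, max_len):
    """Longest block (above half of max_len) closest to a whole number of cycles."""
    best_len, best_err = max_len, float("inf")
    for length in range(max_len, max_len // 2, -1):
        cycles = freq_hz * length / sample_rate_hz
        err = abs(cycles - round(cycles))
        if round(cycles) >= 1 and err < best_err - 1e-12:
            best_len, best_err = length, err
    return best_len


def single_bin_amplitude(samples, freq_hz, sample_rate_hz):
    """Amplitude of one frequency via a single-bin DFT over all given samples."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * i / sample_rate_hz)
              for i, x in enumerate(samples))
    return 2.0 * abs(acc) / len(samples)


# Example: a 50 Hz tone sampled at 1 kHz does not fit a 256-sample block (12.8 cycles).
RATE, TONE, BLOCK = 1000.0, 50.0, 256
samples = [math.sin(2.0 * math.pi * TONE * i / RATE) for i in range(BLOCK)]

fitted = whole_cycle_length(TONE, RATE, BLOCK)              # 240 samples = 12 cycles
exact = single_bin_amplitude(samples[:fitted], TONE, RATE)
nearest_bin_hz = round(TONE * BLOCK / RATE) * RATE / BLOCK  # bin 13 of the 256-point DFT
plain = single_bin_amplitude(samples, nearest_bin_hz, RATE)
```

With the trimmed block the unit-amplitude tone reads back at essentially 1.0, while the nearest bin of the plain 256-point DFT reads noticeably low. The catch is the one already noted: at low frequencies there are few whole-cycle lengths to choose from unless the sample set is large.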

So it's not an FFT, just a DFT that's updated every sample - without windowing?

Correct. I don't think there's a digital analyser capable of a continuous FFT, so they all use the term "FFT" when it is in fact a "DFT", as you've highlighted. It's operating with a window that's shifting (the ring buffer is a window), so it isn't strictly "without a window". It therefore will not be immune to windowing effects (from lower in the spectrum), given that not all frequencies will be present in the buffer/bins for a whole number of cycles. Even the digital binning of high frequencies is a form of windowing.

What is interesting is that taking the realtime approach offline means the buffer size can be adjusted to the wavelength, so that the wave's zero crossing lines up with the buffer interval. For the realtime processing the DFT length doesn't need to be 2^N. The complication, though, is aligning the wave generation to the ADC sample clock (generated by the ADC board clock IC or an external clock).

I mean a window to control spectral leakage - you really need this for spectral analysis or your spectrum may be smothered in artifacts surely?

Or are you synchronously generating the test signals always?

For any measurements of a spectrum when the source isn't synchronous a flat-top window is needed as most windows have several dB of scalloping error.
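To put rough numbers on the scalloping point, here is a small Python sketch that compares a window's response to a tone sitting exactly on a bin with one halfway between two bins. The flat-top coefficients are the five-term set used by SciPy's `flattop` window; `make_window` and `scalloping_loss_db` are illustrative names, and the 1024-point size is arbitrary.

```python
import cmath
import math

# Cosine-sum window coefficients a0, a1, ... (alternating signs are applied below).
WINDOWS = {
    "rectangular": [1.0],
    "hann": [0.5, 0.5],
    # Five-term flat-top coefficients as used by SciPy's flattop window.
    "flattop": [0.21557895, 0.41663158, 0.277263158, 0.083578947, 0.006947368],
}


def make_window(coeffs, size):
    """Periodic cosine-sum window: w[n] = sum_k (-1)^k * a_k * cos(2*pi*k*n/N)."""
    return [sum((-1) ** k * a * math.cos(2.0 * math.pi * k * n / size)
                for k, a in enumerate(coeffs)) for n in range(size)]


def scalloping_loss_db(window):
    """Worst-case amplitude drop (dB) for a tone halfway between two bins."""
    size = len(window)
    on_bin = abs(sum(window))
    half_bin = abs(sum(w * cmath.exp(-1j * math.pi * n / size)
                       for n, w in enumerate(window)))
    return -20.0 * math.log10(half_bin / on_bin)


losses = {name: scalloping_loss_db(make_window(coeffs, 1024))
          for name, coeffs in WINDOWS.items()}
```

No window at all (rectangular) comes out at roughly 3.9 dB and Hann at roughly 1.4 dB, while the flat-top stays within a few hundredths of a dB, which is why it suits amplitude readings of non-synchronous sources.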

The noise smothering would be dependent on the sample source. I get your point (for example here: https://www.ni.com/en-gb/innovation...-of-deep-memory-in-high-speed-digitizers.html).

I'll code up the following sample source software interfaces, which provide the input stream into the software:

- ALSA, which is Linux's sound system, using libasound2. Simply put, that means you can input data from any source - it seems to work with YouTube or with the microphone jack. It would work with any sound card you have too.

- GPIO which is the RPI specific parallel connection from the ADC for a single channel.

- Test sine wave generator sample source so it makes software testing easy. This would be as low noise as you can get.
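As a rough sketch of how the three sources could sit behind one interface, here is the test sine generator in Python. The `SampleSource` base class, its `read` method and the -1.0..1.0 float convention are all assumptions for illustration; the ALSA and GPIO sources would be further subclasses and are not shown.

```python
import math
from abc import ABC, abstractmethod


class SampleSource(ABC):
    """Hypothetical common interface for anything that feeds the pipeline."""

    def __init__(self, sample_rate_hz):
        self.sample_rate_hz = sample_rate_hz

    @abstractmethod
    def read(self, count):
        """Return the next `count` samples as floats in the range -1.0..1.0."""


class SineSource(SampleSource):
    """Test generator: a pure sine wave, phase-continuous across reads."""

    def __init__(self, sample_rate_hz, freq_hz, amplitude=1.0):
        super().__init__(sample_rate_hz)
        self.freq_hz = freq_hz
        self.amplitude = amplitude
        self._index = 0  # running sample counter keeps the phase continuous

    def read(self, count):
        step = 2.0 * math.pi * self.freq_hz / self.sample_rate_hz
        block = [self.amplitude * math.sin(step * (self._index + i))
                 for i in range(count)]
        self._index += count
        return block
```

For example, `SineSource(48000, 1000).read(1024)` gives 1024 samples of a 1 kHz tone, and the next `read` carries on from where the last one stopped, so the rest of the pipeline doesn't need to know which source it is attached to.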

Am I going to use synchronous measurements? Probably not. I would expect phase delays to make it a right royal pain in the behind to accurately align the point of transition.

I'll keep the windowing point in mind. I have noted some interesting texts such as: https://dspguru.com/files/Scalloping Loss Compensation-Lyons.pdf but I'm in the middle of the FFT OpenGL rendering design. Once that's done I should have some basic running FFTs.

Looks similar to the previous photo but under the hood is a complete re-architecture of the GUI as the end of the FFT pipeline. FFT code in place but I didn’t have the time to connect the FFT to the image texture.. tomorrow..

Days of BBC Basic... MOVE x,y DRAW x,y

Vulkan, to quote someone else: "A little over 900 lines of code later, we've finally gotten to the stage of seeing something pop up on the screen! Bootstrapping a Vulkan program is definitely a lot of work, but the take-away message is that Vulkan gives you an immense amount of control through its explicitness." And that's not including any drawing of FFTs. What it does give you is the option for some impressive graphics; however, I'm of the opinion that simple graphics work best. That means rendering things like translucent cursors, grid block backgrounds and axis scales simply, without complicating the rendering, and leaving Vulkan to composite them into the final screen image. I was thinking, for a cheeky screensaver, of wrapping the live screen image around the GLUT teapot moving around the screen - a few lines of work from past experience 😀 Vulkan is also highly portable across Linux desktop, Windows and macOS.

The display design I'm going for is simple solid colours:

- Dark background - probably black or very dark grey.

- Yellow for the FFT points or bars.

- White for the onscreen markups such as scales, peak identification etc.

- White for the text

- Dark blue for the min max range if using averaging and min+max is switched on

- Green for cursors/cursor blocks

- Red for triggered highlights

In terms of persistence or a spectrogram, it's easy to make a gradient later. At a later date I can make the colour scheme configuration-file based. The IPS screen is bright, even with the screen brightness turned to zero, so I'll have a software intensity setting. That also helps people with colour blindness.
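A configurable scheme with a software intensity could be as small as the Python sketch below. The role names and RGB values are placeholders picked to match the list above, not settled choices, and `apply_intensity` is a made-up helper.

```python
# Hypothetical colour scheme: role -> (R, G, B), each channel 0..255.
COLOUR_SCHEME = {
    "background": (0, 0, 0),
    "fft_trace": (255, 255, 0),      # yellow points or bars
    "markup": (255, 255, 255),       # scales, peak identification
    "text": (255, 255, 255),
    "min_max_range": (0, 0, 139),    # dark blue
    "cursor": (0, 255, 0),
    "trigger": (255, 0, 0),
}


def apply_intensity(scheme, intensity):
    """Scale every colour by intensity (clamped to 0.0..1.0) for software dimming."""
    level = min(max(intensity, 0.0), 1.0)
    return {role: tuple(round(channel * level) for channel in rgb)
            for role, rgb in scheme.items()}
```

Keeping the colours as data means the same table could later be loaded from a configuration file, and alternative palettes for colour blindness would just be different tables.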
