Found the STM and Broadcom drivers - they use DMA and the Broadcom supports grey mode.

linux/stm32_i2s.c at v5.10-stm32mp * STMicroelectronics/linux * GitHub

linux/bcm2835-i2s.c at master * torvalds/linux * GitHub

So that should be easy to setup.

source: A clean RTOS for the Raspberry pi 3 and 4? - #10 by richard-damon - Kernel - FreeRTOS Community Forums

For support, the RPi is just an Arm processor, so I suspect that there is already a port that handles most of the details. It might need a driver for the interrupt controller and timer. Some bigger issues though is that the RPi has a multi-core processor. FreeRTOS at its heart only supports a single core per version of FreeRTOS loaded (AMP). There are some third party provided multi-core ports of varying quality, but SMP multi-processor systems have very different issues to solve than single core systems.

So FreeRTOS is more single-core embedded than RT (which doesn’t exclude multi-core).

raspberry-pi-i2s-driver/i2s_driver.c at master * taylorxv/raspberry-pi-i2s-driver * GitHub as an example of software byte transfer. I’ll have a look to find the rpi official driver but that was the link that kept appearing.

Official linux RPi driver supported by the RPi Foundation is bcm2835-i2s.c - sound/soc/bcm/bcm2835-i2s.c - Linux source code (v5.15.6) - Bootlin, designed for the alsa SoC framework (ASoC).

Also the Broadcom manual indicates the three options for software and dma: https://datasheets.raspberrypi.com/bcm2711/bcm2711-peripherals.pdf#page85 chapter 7 - I see there’s a grey mode that could be used and the i2s takes time to flush <32bit transfers with zeros.

I do not understand the advantage of the gray code for this application, but maybe so, any suggestion? The official driver uses the DMA mode, of course.

No the clocking for i2s can be set higher

I have been closely following RPi I2S development for a while and have never seen such info. The official position is 384kHz/32bit max (as stated in the driver), although I have tested higher rates. Any links to that? I am very interested in higher throughput of the RPi's PCM peripheral.

, although the RPi clock sucks,

?? No RPi clock is used in the I2S slave mode.

having an external master clock drive continuous transfers (ie the clock chip attached to the ADC that clocks the data ready - that is not controlled by the cpld or RPi) would have an even sample spread more accurate than RPi initiated reads.

I2S in master mode could be used, if sampling frequencies were selected to avoid the MASH fractional division in the respective broadcom clock dividers. I tested such mode in Avoiding RPi Master I2S Fractional Jitter. The question is whether clock-generating GPIOs for the ADC/CPLD master clock could be linked to the same PLL and the divider still be integer/non-fractional. IMO the master mode is too complicated for this and using an external clock generator with multiple outputs is much more convenient.
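To make the integer versus fractional divider point concrete, here is a minimal sketch in C. It assumes a 12.12 fixed-point divider (12 integer bits, 12 fractional bits) as described for the Broadcom clock manager, and a 19.2 MHz source clock that is only an example figure; the function names are made up for illustration and are not taken from any driver.

```c
#include <stdint.h>

/* Assumption: 12.12 fixed-point clock divider (DIVI.DIVF). */
typedef struct {
    uint32_t divi;   /* integer part of the divider */
    uint32_t divf;   /* fractional part, in 1/4096 steps */
} clk_div_t;

/* Bit clock for stereo I2S: two slots of slot_bits per frame. */
uint64_t i2s_bclk_hz(uint32_t fs_hz, uint32_t slot_bits)
{
    return (uint64_t)fs_hz * slot_bits * 2u;
}

/* Nearest 12.12 divider that turns src_hz into target_hz. */
clk_div_t clk_divider(uint64_t src_hz, uint64_t target_hz)
{
    uint64_t fixed = (src_hz * 4096u + target_hz / 2u) / target_hz;
    clk_div_t d = { (uint32_t)(fixed >> 12), (uint32_t)(fixed & 0xFFFu) };
    return d;
}

/* Non-zero when the source divides exactly, so no fractional
 * (MASH) division would be needed for this bit clock. */
int clk_is_integer(uint64_t src_hz, uint64_t target_hz)
{
    return src_hz % target_hz == 0;
}
```

With these example numbers, 48 kHz with 32-bit slots needs a 3.072 MHz bit clock, which is 6.25 times below 19.2 MHz (fractional), whereas 50 kHz needs 3.2 MHz, which divides by exactly 6.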

Yup. From a concurrency perspective the RPI clocking and servicing scheduling can’t be relied on and a synchronous protocol would be needed. We can reduce the risk by increasing the performance and ensuring it is the highest priority but we cannot rule out any issues. The ADC in continuous mode isn’t a synchronous protocol.

Some of the teachings from years of concurrency and formally mathematically proving it have never left me looking at problems the same ever again.

The ADC clocking of the samples means we can be relatively confident of interval timing but not.

What I was thinking originally is that the cpld would clock a signal out to the RPI once the parallel signals were set ready to read the data. That way the “data ready” going from the cpld would be the transfer clock. It’s certainly possible to clock a parallel GPIO/SMI that way, plus in “grey mode” the i2s read is clocked externally. So the CPLD could clock the i2s - creating a clock (concurrency speaking) that is synchronous from the ADC data ready, through the CPLD to the i2s controller on the RPI.

The question is how do you ensure the data is taken off should the reading driver/app be rescheduled for a higher priority service? This is why I’m considering ChibiOS/RT as we can configure that. Also why running the GUI and the processing on the same RPI may work but would not be best practice.

If we used a synchronous calling to the ADC then we would have all the clocking issues and not be confident of the intervals - we’d then need to ensure the CPLD clock is locked - it would then clock requests for single samples and the data back would be clocked back to the RPI using the same data ready signal approach.

At that point it may be too complex for a CPLD and an FPGA would be needed.

My flippant remark about the RPI clocking comes from the output i2s issues that led to the large number of i2s de-jitter buffers - thus we would not be trusting it, given the problems that a large variation between samples (ie sync calls) would cause for FFTs.

Actually I wonder if you can sample in i2s, buffer, FFT and then use the phase correlation (or frequency shift) to correct the interval, buffer and send to the DAC via i2s.. but that’s a different project. (A synchronous buffer and reclock is the other way..)

“ The ADC clocking of the samples means we can be relatively confident of interval timing but not.”

That should be “when” on the end.

I’m back in the UK and now have to quarantine and do the required testing. New storm tomorrow *joy joy*

However I’ve been looking through the Broadcom 2711 and ARM CortexA57 instruction set - specifically the optimisation guide and the caching configuration. I’ll download the cross compiler and see what the arm code looks like. The ODroid C2 is a similar core so i may see how it goes.

Code:

_Z4sdftv:

.LFB3335:

.cfi_startproc

stp x29, x30, [sp, -80]!

.cfi_def_cfa_offset 80

.cfi_offset 29, -80

.cfi_offset 30, -72

adrp x1, .LANCHOR0

add x0, x1, :lo12:.LANCHOR0

mov x29, sp

stp d8, d9, [sp, 64]

.cfi_offset 72, -16

.cfi_offset 73, -8

ldr s9, [x1, #:lo12:.LANCHOR0]

ldp s8, s1, [x0, 4]

stp x19, x20, [sp, 16]

ldr s0, [x0, 12]

.cfi_offset 19, -64

.cfi_offset 20, -56

adrp x20, freqs

add x20, x20, :lo12:freqs

fsub s9, s9, s1

stp x21, x22, [sp, 32]

fsub s8, s8, s0

.cfi_offset 21, -48

.cfi_offset 22, -40

ldr w22, [x0, 16]

adrp x21, coeffs

add x21, x21, :lo12:coeffs

str x23, [sp, 48]

.cfi_offset 23, -32

add x23, x20, 8192

mov w19, 0

.p2align 3,,7

.L17:

sbfiz x0, x19, 3, 32

add w19, w19, w22

add x1, x0, x21

ldr s2, [x0, x21]

ldr s3, [x1, 4]

fmul s1, s8, s2

fmul s0, s8, s3

fmadd s1, s9, s3, s1

fnmsub s0, s9, s2, s0

fcmp s1, s0

bvs .L20

.L15:

ins v0.s[1], v1.s[0]

ldr d2, [x20]

cmp w19, 1023

sub w0, w19, #1024

csel w19, w0, w19, gt

fadd v0.2s, v2.2s, v0.2s

str d0, [x20], 8

cmp x23, x20

bne .L17

ldp x19, x20, [sp, 16]

ldp x21, x22, [sp, 32]

ldr x23, [sp, 48]

ldp d8, d9, [sp, 64]

ldp x29, x30, [sp], 80

.cfi_remember_state

.cfi_restore 30

.cfi_restore 29

.cfi_restore 72

.cfi_restore 73

.cfi_restore 23

.cfi_restore 21

.cfi_restore 22

.cfi_restore 19

.cfi_restore 20

.cfi_def_cfa_offset 0

ret

.L20:

.cfi_restore_state

fmov s1, s8

fmov s0, s9

bl __mulsc3

b .L15

.cfi_endproc

So that's using the optimisations for -O3, -march=armv8-a.

I've read through the ARM documentation and the cortex-a57 optimisation guide. I used to program assembler for the ARM2 through 710 and strong-arm many years ago. Those were simple 32bit pipelines but the coding is almost identical.

There's a few observations of this code:

* 32bit vs 64bit impact on caches - the little old arm has 48K L1 instruction and data caches per core and they all share a L2 2MB cache. Given our data sets are 1 Million wide as it stands - that cache will look like no cache.

In 64bit mode the pointers, now being 64bit, will destroy the caches. However in 64bit mode you get more registers and the opportunity to do single register operations where 32bit would need more - specifically if we started using FP (as the code above does). The code above is 64bit. In the old days registers were R0 to R14.. now you have X (64bit) and W (32bit), hence the reason you see lots of X.

* Cortex bonuses - the Cortex ARMv8 has a floating point unit for each core which is nice, plus neon (SIMD); gcc made use of neon here: "ins v0.s[1], v1.s[0]". However it doesn't make use of the coprocessing engines (these can perform operations in parallel with the ARM cores).

* pipeline structure - out of order execution. The cortex-a57 has an out of order execution pipeline, which means loads and stores work best when they are not placed close together, so the pipeline doesn't stall whilst it's waiting for them to complete.

The code does a decent job of spacing the LDR and STR operations.

* Code complexity - the code complexity here seems to be overly complex. If the code prepared the ability to perform multiple loads and stores using parallel operations then it could be excused however when you look at the fundamental operation:

Code:

ldr d2, [x20]

cmp w19, 1023

sub w0, w19, #1024

csel w19, w0, w19, gt

fadd v0.2s, v2.2s, v0.2s

str d0, [x20], 8

You can see it loads 64 bits of float data ('D' addresses the register as 64bit FP), then adds and stores 64 bits - this is because currently I'm using 32bit floats, so that we fit both real and img into a single register (the fadd on v0.2s works on the two 32bit lanes). In reality the rest of the code is compiler complexity in accessing data.
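As a rough check of the register packing and cache numbers, here is a minimal sketch in C. It assumes the bins are stored as C99 `float complex` (one 32bit real plus one 32bit imaginary, so 64 bits per bin); the helper names are hypothetical and the cache sizes you pass in would be the figures quoted above.

```c
#include <complex.h>
#include <stddef.h>

/* Bytes needed for `bins` complex bins stored as two 32bit floats each. */
size_t bins_footprint_bytes(size_t bins)
{
    return bins * sizeof(float complex);
}

/* Non-zero when the whole bin array would fit in a cache of cache_bytes. */
int fits_in_cache(size_t bins, size_t cache_bytes)
{
    return bins_footprint_bytes(bins) <= cache_bytes;
}
```

On that basis 1M bins come to 8 MiB, four times the 2MB L2 mentioned earlier, while a 1024-bin set is only 8 KiB and sits comfortably in L1.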

If you look at the example code for copying memory given in the optimisation guide:

The Cortex-A57 processor includes separate load and store pipelines, which allow it to execute one load μop and one store μop every cycle.

To achieve maximum throughput for memory copy (or similar loops), one should do the following.

• Unroll the loop to include multiple load and store operations per iteration, minimizing the overheads of looping.

• Use discrete, non-writeback forms of load and store instructions (such as LDRD and STRD), interleaving them so that one load and one store operation may be performed each cycle. Avoid load-/store-multiple instruction encodings (such as LDM and STM), which lead to separated bursts of load and store μops which may not allow concurrent utilization of both the load and store pipelines.

The following example shows a recommended instruction sequence for a long memory copy in AArch32 state:

Code:

Loop_start:

SUBS r2,r2,#64

LDRD r3,r4,[r1,#0]

STRD r3,r4,[r0,#0]

LDRD r3,r4,[r1,#8]

STRD r3,r4,[r0,#8]

LDRD r3,r4,[r1,#16]

STRD r3,r4,[r0,#16]

LDRD r3,r4,[r1,#24]

STRD r3,r4,[r0,#24]

LDRD r3,r4,[r1,#32]

STRD r3,r4,[r0,#32]

LDRD r3,r4,[r1,#40]

STRD r3,r4,[r0,#40]

LDRD r3,r4,[r1,#48]

STRD r3,r4,[r0,#48]

LDRD r3,r4,[r1,#56]

STRD r3,r4,[r0,#56]

ADD r1,r1,#64

ADD r0,r0,#64

BGT Loop_start

A recommended copy routine for AArch64 would look similar to the sequence above, but would use LDP/STP instructions.

So coding the loop unrolled as per the example should give better memory transfer performance than plain looping. Perhaps there is a pragma that could suggest an unrolling of 4 or 8 per loop.

EDIT: Yes there is..

Code:

#pragma GCC unroll n

You can use this pragma to control how many times a loop should be unrolled. It must be placed immediately before a for, while or do loop or a #pragma GCC ivdep, and applies only to the loop that follows. n is an integer constant expression specifying the unrolling factor. The values of 0 and 1 block any unrolling of the loop.

In short - we have a simple concept for FFT but there's a lot of memory I/O for that simplicity. Where we would obtain speed is in:

* parallel smaller blocks where the blocks do not span L1 cache pages on different CPU cores - for example we break the 1M into 4, one for each core.

* reduce LDR reads, given our coefficients are fetched from a span of 1M stepped by the frequency bin (ie step frequency = rate = Hz). This allows us to reduce the spanning and the amount of data. The issue here is our sample is marching forward through each bin - less of an issue if we're averaging a number of samples but at a full 1M this won't work. If this sounds confusing - simplify the data we're fetching so its fetching is more efficient.

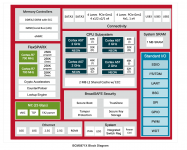

* The interesting question is - could we also set up the Broadcom (RPi) Flexsparx accelerator ARM cores to add more processing bandwidth? These are not the same as the cores/neon etc but can run programs separately. The issue here is the performance is not well understood (look middle left):

There are two Flexsparx cores that could be used - perhaps in data offload with the I/O to clear the constructed FFTs.

These from the initial read are controlled by a set of memory mapped control registers just like the I/O peripheral hardware controllers.
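The "break the 1M into 4, one for each core" idea from the list above could be sketched like this in C. It assumes the bin array itself starts on a cache-line boundary and that `align` is given in elements (8 complex floats for a 64-byte line); `chunk_range` is a hypothetical helper, not part of the existing code.

```c
#include <stddef.h>

/* Half-open range [begin, end) of bins owned by one worker. */
typedef struct {
    size_t begin;
    size_t end;
} range_t;

/* Split n bins into `parts` contiguous chunks whose boundaries fall on
 * multiples of `align` elements, so that two cores never write to the
 * same cache line. idx selects the chunk (0 .. parts-1). */
range_t chunk_range(size_t n, size_t parts, size_t idx, size_t align)
{
    size_t per = (n + parts - 1) / parts;        /* ceil(n / parts) */
    per = (per + align - 1) / align * align;     /* round up to align */

    range_t r;
    r.begin = idx * per < n ? idx * per : n;
    r.end   = r.begin + per < n ? r.begin + per : n;
    return r;
}
```

Each thread (one per core) would then run the update loop over its own range only; whether that actually scales depends on the shared L2 and memory bandwidth, which this sketch says nothing about.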

Code:

.L21:

add w19, w19, w22

ins v0.s[1], v1.s[0]

cmp w19, 1023

sub w0, w19, #1024

csel w19, w0, w19, gt

ldr d4, [x20, 8]

sbfiz x0, x19, 3, 32

add x1, x0, x21

fadd v4.2s, v4.2s, v0.2s

ldr s2, [x0, x21]

ldr s3, [x1, 4]

str d4, [x20, 8]

fmul s1, s8, s2

fmul s0, s8, s3

fmadd s1, s9, s3, s1

fnmsub s0, s9, s2, s0

fcmp s1, s0

bvs .L39

.L23:

add w19, w19, w22

ins v0.s[1], v1.s[0]

cmp w19, 1023

sub w0, w19, #1024

csel w19, w0, w19, gt

ldr d4, [x20, 16]

sbfiz x0, x19, 3, 32

add x1, x0, x21

fadd v4.2s, v4.2s, v0.2s

ldr s2, [x0, x21]

ldr s3, [x1, 4]

str d4, [x20, 16]

fmul s1, s8, s2

fmul s0, s8, s3

fmadd s1, s9, s3, s1

fnmsub s0, s9, s2, s0

fcmp s1, s0

bvs .L40

.L25:

Using the unroll loop pragma we get the above (two of the eight steps of an unroll of 8). It's still quite busy, so we're going to have to code differently at the C/C++ level.
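For anyone following along without the source, this is roughly the shape of loop that would produce assembly like the above. It is a guessed reconstruction from the listing, not the actual code: the function name, the parameters and the use of C99 `float complex` are all assumptions.

```c
#include <complex.h>

/* Hypothetical reconstruction of the inner loop: each bin accumulates
 * delta multiplied by a coefficient taken from a circular table that is
 * walked in strides of `step`. Requires 0 <= step < n. */
void sdft_accumulate(float complex *freqs, const float complex *coeffs,
                     int n, float complex delta, int step)
{
    int idx = 0;

    /* Ask GCC to unroll the loop body 8 times. */
#pragma GCC unroll 8
    for (int i = 0; i < n; ++i) {
        freqs[i] += delta * coeffs[idx];
        idx += step;
        if (idx >= n)
            idx -= n;          /* wrap around the coefficient table */
    }
}
```

The `fcmp`/`bvs`/`bl __mulsc3` sequence in the listing looks like the NaN recovery path the compiler adds for a C99 complex multiply; as far as I know GCC's `-fcx-limited-range` (or `-ffast-math`) drops that check, which should remove a branch per bin, at the cost of not handling infinities and NaNs the standard way.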

I've also spotted this in the SPI part of the Broadcom manual I missed before:

9.3.4. 24-bit read command

A 24-bit read can be achieved by using the command 0x04.

However it's annoying that the LoSSI mode clocking doesn't allow the read operation to be externally clocked (or it's not clear that 'grey mode' and 'LoSSI' can be combined).

On the Flexsparx ARM cores - these look like they're tied into the Broadcom proprietary NIC functionality as network packet handling accelerators. That would be a concern from a security perspective.. fingers crossed that they're secure, otherwise every RP4 could be targeted as a DoS source.

However this means that the RP4 NIC can access the external SRAM without going through the ARM chip.

So I've ordered a 7" capacitive touchscreen, a RPi 4 2GB and a RPi Zero 2. That way I can test all the options.

Just looking at options for GUI etc. My thinking is simply going headless as the RPI will not be needing a heavy windowing environment.

RayLib - an immediate lightweight windowing and graphics system for video games, naturally running in OpenGL but also handles a connection to a gesture library

tslib and ts_uinput - provides touchscreen support with multi-touch gestures.

That provides enough functionality without the large windowing paraphernalia. The display of data through OpenGL should allow for composite into the screen with display of data, numbers and it handles things like drop down lists, text entry with a number pad etc.

Probably best to think of it as an outline window manager which is all this really needs. I'll probably keep the main RP4 board as RaspberryOS but the zeros may even go headless with direct linux frame buffers providing some GPU support (the OpenCL support is a little finicky). It's possible to make an OpenGL render with textures do the same as OpenCL - that was the original way we had to do GPGPU back in 2005 😀

I need to find a way to use ChibiOS RT on the zeros but with the Broadcom ethernet acceleration. A network bus for output transfer would work really nicely and scale easily.

I've found a slightly more interesting library - mainly because it supports WebGL too, which makes a remote control web interface a lot easier. Raylib looks awesome but I think nanogui may be a better option. Only issue at the moment is that there are so many libraries out there.

nanogui is a lightweight interface for C++ and Python that uses OpenGL. In short it looks a little better and you don't have to have a game style centre loop. It also works on Mac and windows. More here: NanoGUI — NanoGUI 0.1.0 documentation

I've also got the port of chibi realtime OS compiling in the development VM and just in time as the bits arrive today.

So whilst the forum software was updated I've received my bits - only thing is I'm now waiting for the wife's PCR test to end quarantine before I can solve it. The RPI 4 is the only device in the house that has a USB-C (other than the Mac lighting ports) hence I have no cables or wall wart. So I should have power for it today 😀

I managed to resurrect the ODroid C2 using the UART port - reinstalled a minimal ubuntu install with kernel 3.16 and did some cross compiling and testing. Annoyingly this has a dead HDMI connector (possibly due to the bend in the board by the factory heatsink clamp). The rest is fine.

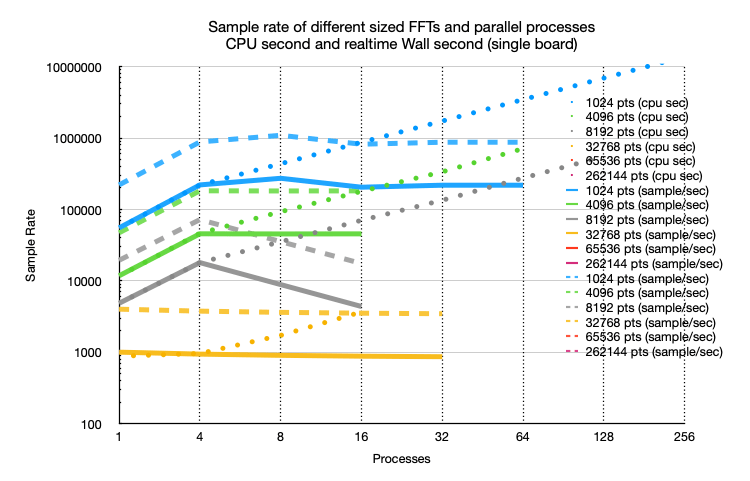

I found I'd made a boo-boo in the original tests - the timing was CPU time and not wall time. The original tests were 4 cores of an i7 3.2GHz in a VM (the i7 has 6 cores with hyper threading enabled, thus 12 "cores") - the test results above are for the ODroid, which is an ARMv8 Cortex-a53 running at 1.5GHz with 2GB ram - in the tests the CPU hit 67degC but according to the current frequency rate did not throttle due to the passive heatsink.

The dotted line is the CPU timed rate. The solid line is the wall time sample rate (real samples per second). The longer dashes are the 4x the wall rate (essentially as if I had 4 boards in parallel - with the 4 busses we get a full multiplication). I only performed up to 32K samples given the rate was dropping below 1000 s/s.
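For anyone repeating the measurement, the difference between the two timings can be sketched in C as below. It assumes a POSIX system (for `clock_gettime`); the helper names are hypothetical and this is not the benchmark code itself.

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* CPU time consumed by the process so far, in seconds. With several
 * busy threads this grows faster than the clock on the wall. */
double cpu_seconds(void)
{
    return (double)clock() / CLOCKS_PER_SEC;
}

/* Elapsed real time from a monotonic clock, in seconds. */
double wall_seconds(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Samples (or FFTs) per second for a run that took `elapsed` seconds. */
double rate_per_second(double count, double elapsed)
{
    return elapsed > 0.0 ? count / elapsed : 0.0;
}
```

Taking both readings before and after the same run and feeding the differences to `rate_per_second` gives the two curves: the CPU-timed rate and the real samples per second.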

SO.. the ODroid C2 isn't a slouch, but as can clearly be seen - when compared to the 4 cores of the i7 it's about 4x slower. Hence I'd need four ODroid C2s running to equal one i7 with 4 CPUs in a VM. So I'd need 16 C2s to push 1+MSPS at 1024 on the CPU!

Next job is to look at a comparison with optimised libraries

- fixed-fit - a fixed point FFT, only issue is that its output is 32bit. In reality it would need to be 64bit working. A 64bit fixed C implementation of the current code loop may be on the books to at least test the memory speed.

- FFTW - "the Fastest Fourier Transform in the West", the granddaddy of fast FFT on your CPU.

- FFTS - "the Fastest Fourier Transform in the South", a response to FFTW but on mobile devices with optimised Neon ARM SIMD.

- vkFFT - Vulkan based GPU FFT - this is a fast library doing what FFTW does, but on GPUs including Raspberry PI 4.

- GPU_FFT - GPU Based FFT on OpenCL, given OpenCL's demise in favour of Vulkan, I will probably not bother with this library.

The issue with FFTW/FFTS is that they need an aligned memory location and the data needs copying in and out - that cost will hurt performance. However it may be that I may have to face facts and make a buffered non-pipelined..

The GPU flavours also have issues - in the same way that the CPU cousins need to copy in/out of aligned buffers, the GPU needs to DMA upload the data from the CPU shadow into the GPU memory area, process the GPU program kernel(s), then retrieve the result by DMA copying the data back from the GPU memory area into the CPU memory area. Thus again a pipeline is not possible and it has to be a buffered approach.

Let me code up some basic tests performing the same test but with FFTW/FFTS and vkFFT. I would expect the vkFFT to be very fast but at a cost of the copy.

Incidentally it would be interesting to see if the vK works on the ZeroW2 (I'd need to run on normal linux for the test). I would expect if it works on the RP4 then it would work on the CM4.

Let's see where the tests take me.

So I have the RPI Zero W 2 up and running in headless (RPI OS Lite) with a 32GB Class 10 SD - not that I would expect any swapping.

Zero W 2 CPU results...

First surprise is that RPI OS Lite for the zero 2 runs 32bit (even though it has a A53 quad core CPU). There is a way to change this by altering the image but for now - let's see what 32 bit gives. I know that it's going to miss some registers and SIMD instructions..

First run at 32K fft size and it's providing 621 s/cpu sec and 618 s/second with the existing code.... so not as bad as you'd expect considering it's 1GHz instead of 1.5GHz also DDR2.

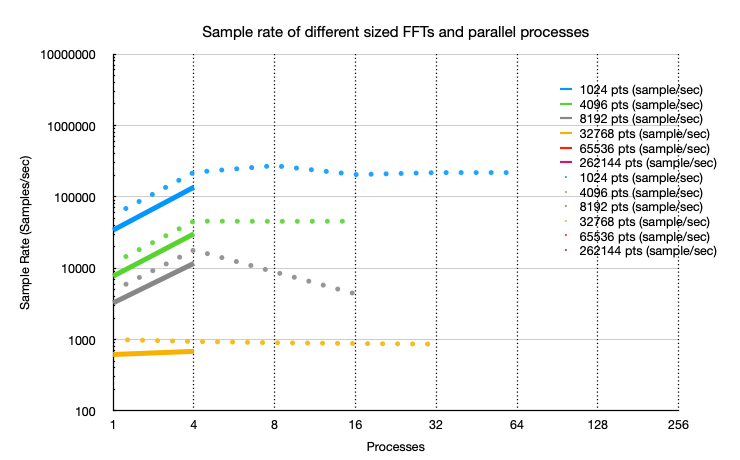

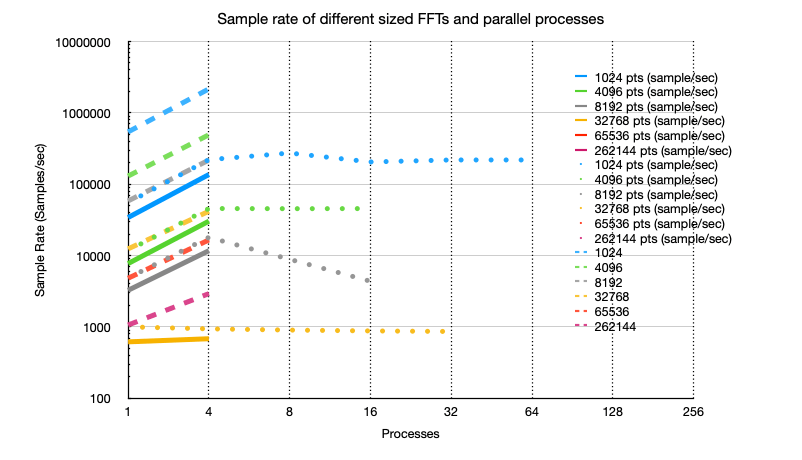

So the dotted line is the C2 real samples/sec, the solid line is the 32bit Zero 2 CPU based code. So in a single core comparison the Zero 2 is 62% the speed of a C2 and 6.66% of an i7 core (comparing 1024point and 1M samples as it's so fast for the timing!)

Below - zero 2 (line), c2 (dot), i7 (dash):

The power supply for the RP4 arrives tomorrow and that, I'm expecting, will be approximately similar performance as the C2. That will also give me the option to compile the vkFFT for the RP as the sources don't like cross compiling from x86 to arm. I'll then start playing with the Zero and see if there's more performance (64 bit and GPU).

So from this it looks like (finger in the air guesstimate) that it may be possible to perform 100 FFT/sec (ie keep up with screen refresh rate) and have a decent FFT size but only if the samples are buffered into large batches and processed 'offline'. It may be possible with the CPU to process in realtime with 1024pt FFT - the 1MSPS could be averaged down to say 100KSPS for that 1024pt FFT.
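The "average 1MSPS down to 100KSPS" step could be sketched as a simple block average in C. This is only an illustration under my own assumptions: a plain boxcar average is a crude low-pass filter, and `decimate_avg` and `ffts_per_second` are hypothetical helper names.

```c
#include <stddef.h>

/* Average each consecutive group of `factor` input samples into one
 * output sample. Returns the number of outputs written; leftover
 * samples that do not fill a whole group are ignored. */
size_t decimate_avg(const float *in, size_t n_in, size_t factor, float *out)
{
    if (factor == 0)
        return 0;

    size_t n_out = n_in / factor;
    for (size_t i = 0; i < n_out; ++i) {
        float sum = 0.0f;
        for (size_t j = 0; j < factor; ++j)
            sum += in[i * factor + j];
        out[i] = sum / (float)factor;
    }
    return n_out;
}

/* Non-overlapping FFT frames per second at a given sample rate. */
double ffts_per_second(double sample_rate_hz, size_t fft_size)
{
    return sample_rate_hz / (double)fft_size;
}
```

With a factor of 10 the 1MSPS stream becomes 100KSPS, and 100000 / 1024 is roughly 97.7 frames per second, which is where the "about 100 FFT/sec" figure lands for non-overlapping 1024pt frames.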

All the testing so far has been using 32bit floats which is not ideal and I'm itching to see how the performance goes with GPUs although those will be also running 32bit floats as a comparison. There's the option for 64 bit double floats with the CPU but that is going to be offline reprocessing of the recorded samples.

Compiling the mesa for vkFFT has been fun. Even without the -j parallel compile option it kept running out of memory and swap with the default 100MB with the 512MB of ram. Thankfully it seems more behaved with 400MB of swap.. almost compiled 🙂

So on the RPI Zero 2 W this is the glx info:

Code:

direct rendering: Yes
Extended renderer info (GLX_MESA_query_renderer):
    Vendor: Broadcom (0x14e4)
    Device: VC4 V3D 2.1 (0xffffffff)
    Version: 21.3.1
    Accelerated: yes
    Video memory: 427MB
    Unified memory: yes
    Preferred profile: compat (0x2)
    Max core profile version: 0.0
    Max compat profile version: 2.1
    Max GLES1 profile version: 1.1
    Max GLES[23] profile version: 2.0
OpenGL vendor string: Broadcom
OpenGL renderer string: VC4 V3D 2.1
OpenGL version string: 2.1 Mesa 21.3.1 (git-9da08702b0)
OpenGL shading language version string: 1.20
OpenGL ES profile version string: OpenGL ES 2.0 Mesa 21.3.1 (git-9da08702b0)
OpenGL ES profile shading language version string: OpenGL ES GLSL ES 1.0.16

So it appears that we get an OpenGL ES 2.0 implementation rather than OpenGL ES 3.1. I will rebuild the Zero using the desktop image and see if that remains the case. The X desktop works too, but compiling vkFFT takes hours due to memory swapping. With the swap increased to 400MB the compilation no longer crashes, but at least 100-200MB of swap is in use at times. Single-threaded compilation is also needed, as the compile will often take 75%+ of the memory.
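The ES level can be pulled out of the glxinfo text automatically rather than read by eye. A minimal Python sketch, assuming the "Max GLES[23] profile version" line is present as in the output above; `max_gles_version` and `REQUIRED_GLES` are names made up here, and the 3.1 threshold is just the version discussed in this thread.

```python
import re

# Assumption: ES 3.1 is the level being checked for, as discussed above.
REQUIRED_GLES = (3, 1)


def max_gles_version(glxinfo_text):
    """Return the 'Max GLES[23] profile version' as a (major, minor) tuple,
    or None if that line is absent."""
    match = re.search(
        r"Max GLES\[23\] profile version:\s*(\d+)\.(\d+)", glxinfo_text
    )
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


# Two lines copied from the Zero 2 W output above.
sample = """\
Max GLES1 profile version: 1.1
Max GLES[23] profile version: 2.0
"""

found = max_gles_version(sample)
meets = found is not None and found >= REQUIRED_GLES
print("found:", found, "meets 3.1:", meets)
```

The same function could be fed the captured output on the RPi 4 to compare the two boards.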

Instead, what I may try is to build the image on the RPi 4, using its faster hardware, then switch the SD card over.

I also want to try running non-X'd GPU too. That way it would run faster without the overhead but still support GPU.

Your code-fu makes me feel guilty for letting my own skills get so rusty

Hehe been a long time since I've done any of this. Lots of creaky braincells.

So vkFFT requires Vulkan (a next-generation OpenGL/OpenCL), which requires a minimum of OpenGL ES 3.1 class support. The Zero 2 has OpenGL ES 2.x, which means it won't support Vulkan (at least in its current form) and therefore won't support vkFFT directly. However, this testing has shown it would still be possible to run an FFT on the GPU on the Zero; its more primitive support just means doing the dirty work of hand-coding the FFT as GPU compute, which vkFFT otherwise does automatically. It's important to note that OpenGL ES 2.0 has no dedicated compute shaders, but its programmable shaders can still be used for compute, so the GPU is capable of supporting it; only the tools and language differ on the front side. Hence vkFFT would need to be adjusted to support ES 2.0 shaders. However, typically each GL/ES version has a limit on the number of shaders, so we may run out of resources if we did that.

It may be possible to take the compute 'assembly language' for a large FFT and, with a few tweaks, have an ES 2.0 hard-coded option. Or there's the longer option of writing an ES 2.0 FFT. Incidentally, I believe the C2 also only supports ES 2.x. The old ATI CTM ("Close-to-the-Metal") GPGPU approach was essentially assembler on the GPU, a far cry from Vulkan, OpenCL and NVIDIA's CUDA.
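For a feel of what a hand-written ES 2.0 FFT might look like structurally, here is a CPU-side Python sketch of a radix-2 Stockham autosort FFT. This is a technique I've picked for illustration, not something confirmed to be what vkFFT generates. Each pass reads one buffer and writes another (ping-pong), and every output element computes its own value from two inputs, which is roughly how a fragment shader rendering into a texture would have to work. The names `fft_pass` and `stockham_fft` are hypothetical.

```python
import cmath


def fft_pass(src, length, stride):
    """One Stockham radix-2 pass written as a gather: each output index
    computes its own value from two inputs, as one fragment would."""
    n = len(src)
    half = length // 2
    dst = [0j] * n
    for j in range(n):                      # one "fragment" per output element
        q = j % stride
        p, odd = divmod(j // stride, 2)
        a = src[q + stride * p]
        b = src[q + stride * (p + half)]
        if odd:
            # Difference branch, multiplied by the twiddle factor.
            dst[j] = (a - b) * cmath.exp(-2j * cmath.pi * p / length)
        else:
            dst[j] = a + b
    return dst


def stockham_fft(samples):
    """Forward FFT of a power-of-two-length sequence, log2(n) passes."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    buf = [complex(v) for v in samples]
    length, stride = n, 1
    while length > 1:
        buf = fft_pass(buf, length, stride)  # read one buffer, write the other
        length //= 2
        stride *= 2
    return buf


# A unit impulse should give a flat spectrum (every bin equal to 1).
spectrum = stockham_fft([1, 0, 0, 0, 0, 0, 0, 0])
print([round(abs(v), 6) for v in spectrum])
```

A 1024-point FFT would be 10 such passes. On the GPU each pass would be one draw call with the two buffers held as textures, so the shader itself stays small, which may help with the resource limits mentioned above.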

This has a good image showing how all the shaders fit together and how SPIR-V supports them: spir. In theory it may be possible for SPIR-V to be converted to OpenGL ES 2.0 shaders rather than Vulkan ones. In fact, https://github.com/KhronosGroup/SPIRV-Cross#converting-a-spir-v-file-to-glsl-es shows that you can specify the ES version on the command line, possibly including 2.x.

As Linux, like every OS, has a graphics stack (a-brief-introduction-to-the-linux-graphics-stack), you can see we don't need the windowing system to utilise the GPU. However, where tools and libraries such as vkFFT have dependencies, there's a need to bring in the bloat, which is not good for a Zero.

Reading through this: https://github.com/DTolm/VkFFT/blob/master/documentation/VkFFT_API_guide.pdf it looks like the vkFFT library could be built to use an OpenCL backend, and might therefore run on the OpenGL ES 2.0 GPU. The question is what version of OpenCL/ES the vkFFT code needs.

more info on the linux compute/graphics stack: https://commons.wikimedia.org/wiki/File:The_Linux_Graphics_Stack_and_glamor.svg

So for a cut-down GPU-capable system there are a few options:

1. GPU > Linux Kernel > Direct Rendering Manager (DRM) > LibDRM > Mesa 3D -- OpenGL / EGL --> App

2. GPU > Linux Kernel > Direct Rendering Manager (DRM) > LibDRM > Mesa 3D -- OpenGL ES 3.x / Vulkan --> App (vkFFT)

3. GPU > Linux Kernel > Direct Rendering Manager (DRM) > LibGL -- OpenGL ES 3.x / Vulkan --> App (vkFFT)

Now we have two questions:

Q1. Does the Broadcom driver support the Direct Rendering Manager, and/or does it act as a LibGL that is reachable only through its own OpenGL/ES/Vulkan interface?

Q2. Will the OpenGL ES requirements work with an OpenCL backend? ES 3.1's main addition is compute; prior to that it was programmable shaders and graphics. This is why 3.1 is the starting point for most GPU-based FFTs.

3.1 has ARB support and starts adding compute shaders. I see that 2 has EXT extensions, which give single-precision floats and half-sized floats, and OES shaders, versus the ARB ones for later versions.

Code:

pi@raspberrypi:~/VkFFT/build $ cmake ..
-- The C compiler identification is GNU 10.2.1
-- The CXX compiler identification is GNU 10.2.1
-- Detecting C compiler ABI info
-- Detecting C compiler ABI info - done
-- Check for working C compiler: /usr/bin/cc - skipped
-- Detecting C compile features
-- Detecting C compile features - done
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Check for working CXX compiler: /usr/bin/c++ - skipped
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Looking for CL_VERSION_2_2
-- Looking for CL_VERSION_2_2 - found
-- Found OpenCL: /usr/lib/arm-linux-gnueabihf/libOpenCL.so (found version "2.2")
-- Configuring done
-- Generating done
-- Build files have been written to: /home/pi/VkFFT/build

So I'll leave this building whilst I go and get the RP4 PS and do the food shop... 3 hours later it finished.

Just fighting the RP4 setup: it recognised the touch screen but at the wrong resolution, and the RPi desktop doesn't have an on-screen keyboard as part of the standard configuration! The Add/Remove program is dire; every time, it locks up trying to search the large list, when apt list piped through grep works faster.

I think the RP4 CPU gets far hotter (and it's configured slower I think).

I'll make a metal construction inside the box to secure the screen and bits with screws.

So the 30W Belkin USB-C power adaptor has enough power, but trying to run the Zero from the RP4 USB ends up resetting the screen. So I think I need a USB power hub in there too. A little annoying, with two mains plugs instead of one.

The sneaky thing is I can play with this whilst watching TV with the mrs 🙂 Next up is to try the same test program as before on the RP4. First, to check the speed settings. I have a sneaking suspicion it's running at a low clock speed (MHz) from the boot setup file..
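One way to check that suspicion is to look for an `arm_freq` override in the boot config and compare it with what the kernel reports. A minimal Python sketch, assuming the usual /boot/config.txt syntax and the standard Linux cpufreq sysfs file; the helper names are made up, the sample text is illustrative rather than taken from this board, and conditional sections such as [pi4] are ignored for simplicity.

```python
import re


def configured_arm_freq(config_text):
    """Return the last uncommented arm_freq value (MHz) found in config.txt
    text, or None if the file leaves it at the firmware default."""
    value = None
    for line in config_text.splitlines():
        match = re.match(r"\s*arm_freq\s*=\s*(\d+)", line)
        if match:
            value = int(match.group(1))
    return value


def current_cpu_mhz(path="/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq"):
    """Read the current cpu0 clock in MHz from sysfs (the file holds kHz)."""
    with open(path) as handle:
        return int(handle.read().strip()) / 1000


# Illustrative config text only, not the contents of this board's file.
sample = """\
#arm_freq=800
arm_freq=1200
"""
print("configured arm_freq:", configured_arm_freq(sample))
```

On the Pi itself, passing in the contents of /boot/config.txt and calling `current_cpu_mhz()` under load would show whether the clock is being held down by the config or by something else such as thermal throttling.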
