Code:

SDFT: 552380 fts per second.

SDFT: 548542 fts per second.

SDFT: 543291 fts per second...

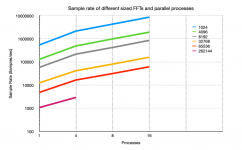

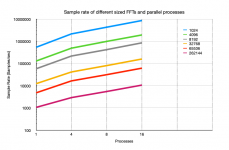

SDFT: 526558 fts per second.

It occurred to me to run 4 single-threaded processes in parallel at the command line (facepalm).. for a 1024 bin, the result is over 500Ksps each so 2Msps in total. Running 8 processes returns the same.. about 520K each - or 4Msps.

So I think there's something fishy going on in the bowels of GNU and ubuntu..

16 processes (I use a bash script to trigger) .. and the same. 535K each or 8Msps. 😀

So 30 processes ... 530K each... or 16Msps.. into 1024 bin.. so obviously we're probably running in the caches..

Ok let's try.. 32K bin.. and see our max rate.. let's go straight to 30 processes in parallel..

Code:

.... pending...POOR THING..

I forgot that I multiplied the number of iterations by 1000 for the small 1024 sample size to get a decent time measurement.. 30 kill commands later..

32K bin

1 process = 12.5Ksps

4 processes = 30Ksps

Code:

SDFT: 10642.7 fts per second.

SDFT: 10327.3 fts per second.

SDFT: 10198 fts per second.

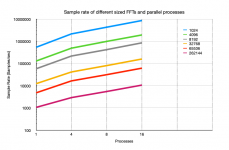

SDFT: 10286.9 fts per second.

So it looks like we could find a sweet spot for the RPis based on their performance, but running multiple processes on multiple devices may return even better performance depending on the rate needed.

I'll do a graph of the following 1024, 4096, 32K, 128K, 256K and 1M... the reason the 4096 didn't differ is that it wasn't compiled..


So I think this is worth pursuing with multiple processes (and multiple boards).

I think it would be possible to pan and zoom and still maintain the sample digestion rate from the ADC at quite a resolution.

If we think about it like this - the 7" touchscreen is 1240 pixels across. So our 1024 bins works quite nicely allowing for some touch menu area or reading.

The sample rate depends on the ADC configuration but let's consider 256KS/S and 1MS/S.

The rate of 256KS/S is equally spread over the 1 second interval based on the ADC eval board clock chip (better than a multi tasking operating system would do). We therefore should get 256K interrupts/sec and DMA.

We can average 256 incoming samples for each sample for a bin to provide a 256KS/S view in wide field view. In fact we can also process max and min of the same and create separate FFTs - the screen output can then show the min, max and average/mode etc.
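A minimal sketch of that block reduction, assuming plain Python lists stand in for the ADC buffer. `reduce_blocks` and its `block` parameter are illustrative names, not taken from the actual code. Each block of 256 incoming samples collapses into one (min, max, mean) triple that could feed the separate min/max/average traces.

```python
from statistics import fmean


def reduce_blocks(samples, block=256):
    """Collapse each full block of `block` samples into (min, max, mean).

    A trailing partial block is ignored so every output point
    summarises the same number of input samples.
    """
    out = []
    for start in range(0, len(samples) - block + 1, block):
        chunk = samples[start:start + block]
        out.append((min(chunk), max(chunk), fmean(chunk)))
    return out


# Hypothetical test data: a sawtooth covering -128..127, repeated 4 times.
raw = [(i % 256) - 128 for i in range(1024)]
points = reduce_blocks(raw)
for lo, hi, avg in points:
    print(lo, hi, avg)
```

Keeping min and max alongside the mean means a short spike inside a block still shows up in the wide view even though the average smooths it away.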

Any FFT readings would then be an average across the entire bin - however this would allow us to identify major areas of interest - and even start tracking those areas.

We can live zoom or history zoom (we record the samples in our buffer). In fact we can process <1Hz at an out of band rate (ie process low sub 1Hz frequency at once every second for example).

When we zoom we have the centre frequency and we can then either immediately start on the live data, or recreate the FFT zoomed in using the historic data until we catch up with the live data.

Zoomed into 1Hz per pixel, we would be averaging 10 samples for that individual pixel FFT bin and should we want we could zoom in more to 1:1 with 0.1Hz.

The issue is that zooming in naturally changes the data sampled when making the calculations for the SNR, THD etc. Also, the signal may move so that we cannot track it within the current FFT attention window. If we wanted a system to detect and track a frequency and its harmonics that go outside of the current window without messing the THD value up, we'd need a way to capture those peaks. It could be possible to make a split system, where the processes know the current fundamental and additional processes then track the offsets outside the main FFT. A window, if you like. The resulting THD would then be as accurate as the zoom. That could be a visual or simply a process that keeps the calculations sane.

Panning could be done through the same 'shift' through the history with different parameters, or simply by taking the sample stream in realtime (the data would then appear to rise as the live data starts filling the FFT bins), with a corresponding effect on the calculations.

Due to the sample rate capacity it means multiple tasks can be processing and displaying or triggering at the same time.

The 1104 will do 200MHz so I'm not that fussed in chasing down a problem, although we could make widget FFT that looks for mirror spikes from the ADC process from higher frequencies.

I would like to keep the resolution and the SNR higher than the 8bit 1104 could handle.

Let's get a first step - I think an RPi with a simple microphone running a touchscreen pushing samples into processes would be good. In fact I could program on a touchscreen as if it was a tablet 🙂 It also allows the performance figures to be validated. I'm guessing performance per CPU being 1/4 of an i7 CPU..


http://liu.diva-portal.org/smash/get/diva2:1367440/FULLTEXT01.pdf

The paper above has some very interesting ideas. The same methods could be used to reduce the size of FFTs and the size of the coefficient table (making the CPU cache more efficient). It was the paper where I noted the use of Virtex-4s and Virtex-7s. Both extremely costly, but it shows the use of multiple FPGAs that act as control and processing pipelines.

The same radix selection and breakdown into steps could be used for specialised processes. Something to think about tomorrow whilst I’m busy building more of the pond..

For completeness

What is interesting in the last paper is the design of the rotators - specifically the calculation of the MSB without error based on the algorithm. Something that your scope doesn't tell you is the number of bits in the FFT implementation that are error free..

I suspect that a 24bit FFT stuffing 24bits into a 32bit float will not give a result that is error free in all 24 MSBs for this reason - adding to the quantisation noise. So even the LSBs of a 32bit float have an impact, and so too does the arc mismatch of the rotator.
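To make the float point concrete, here is a small sketch using only the Python standard library. It emulates a 32bit float by round-tripping through `struct` (the helper name `to_float32` is mine). It only shows the representation limit, not the actual error of any particular FFT: a single 24bit sample fits exactly, but as soon as a few are accumulated the sum needs more than 24 bits and the LSB is rounded away.

```python
import struct


def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


full_scale = 2**23 - 1          # largest positive signed 24-bit sample

# A single 24-bit sample fits in the 24-bit significand of a float32.
single_exact = to_float32(float(full_scale)) == full_scale

# Accumulating three full-scale samples needs 25 bits, so float32
# can no longer hold the exact value.
acc = 3 * full_scale
acc32 = to_float32(float(acc))
lost = acc - acc32

print(single_exact, acc, int(acc32), lost)
```

An FFT butterfly does many such additions (plus multiplications by the rotator), so the error-free bit count of the output has to be lower than the 24 bits going in unless the intermediate precision is wider.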

What is interesting with previous papers is that they show little difference between a properly analysed fixed point and floating point solution in terms of error - the fixed point normally offers speed with a little more error, although expanding the fixed point from 32bit to 64bit may surpass the float/double implementation for the requirements of the rotator and FFT overall, given we know them (we may not need a full exponent in a double, for example).

So my thinking here is to explore a similar rotator mechanism. The current one uses a full 2PI/N table (N being the size of the FFT in bins), which costs a lot of CPU cycles and so slows the rate. Given the symmetry of sin/cos we can break this down further.
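One way the sin/cos symmetry can shrink the table, sketched in Python rather than the project's own code: store only the first quarter of the sine wave (N/4 + 1 entries) and fold every other angle back into it. All function names here are illustrative, and N is assumed to be a multiple of 4.

```python
import cmath
import math


def make_quarter_table(n):
    """sin(2*pi*k/n) for k = 0..n/4 inclusive (n must be a multiple of 4)."""
    if n % 4:
        raise ValueError("n must be a multiple of 4")
    return [math.sin(2.0 * math.pi * k / n) for k in range(n // 4 + 1)]


def sin_lookup(table, n, k):
    """sin(2*pi*k/n) using only the quarter-wave table."""
    q = n // 4
    quadrant, r = divmod(k % n, q)
    if quadrant == 0:
        return table[r]
    if quadrant == 1:
        return table[q - r]       # mirror about pi/2
    if quadrant == 2:
        return -table[r]          # sin(pi + x) = -sin(x)
    return -table[q - r]          # mirror and negate


def cos_lookup(table, n, k):
    """cos is sin advanced by a quarter turn."""
    return sin_lookup(table, n, k + n // 4)


def twiddle(table, n, k):
    """Rotator exp(-2j*pi*k/n) built from the quarter-wave table."""
    return complex(cos_lookup(table, n, k), -sin_lookup(table, n, k))


N = 1024
table = make_quarter_table(N)
worst = max(abs(twiddle(table, N, k) - cmath.exp(-2j * cmath.pi * k / N))
            for k in range(N))
print(len(table), worst)
```

For N = 1024 that is 257 stored values instead of 1024 sin plus 1024 cos, at the price of a few extra integer operations per lookup. Whether that trade wins depends on whether the full table was actually spilling out of cache.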

Then understanding the implementation we then know if we can fix/float/double the resulting implementation and still maintain an acceptable error given the ADC limits. At least we can specify the limitations and error.

Food for thought.


Amazing stuff. Don't have a lot to say about your programming, that's clearly out of my league 🙂

Regarding your comment "30 kill commands later"... There's killall: killall(1): kill processes by name - Linux man page

I’ve been comparing options for RPi.

CM3 is 1.3GHz and 40 GPIO pins in a normal Pi header

CM4 is 2x the speed of a CM3 but they have lost their way for dense compute - they’ve made it an RPi4, as it now has two 100pin connectors and needs an external board for everything that the RPi4 has.. TuringPI is making a CM4 stack and I hope that it offers all 40 GPIO pins out if the kernel is configured right. Fast IO is good but why have 99 badminton and other connectors? The good point of the CM4 is large memory and a better CPU to make more of it.

An alternative, slower, is the Zero 2. 1GHz with 40 pins but 512MB RAM, so perhaps a fully cut-down install (ie runs an SD card pre-prepped with no desktop, just the basics). For £20 it makes a good test subject. The code/install would need to be built off board given the lack of resources.

Just need to see the performance we’re dealing with.

Incidentally there is quite a bit of RPi GPU FFT work that has been done, although the algorithms are FFT of a large set of samples not a high speed stream of samples.

There is a relationship between sample time and frequency resolution. No matter how fast your FFT is, 1 Hz resolution needs at least 1 second's worth of samples. Superfast processing is less important. For my application a delay is not a big issue.
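The relationship is just bin width = sample rate / number of samples = 1 / capture time. A quick sketch of the arithmetic, assuming "256KS/s" means 256000 samples per second (the function names are illustrative):

```python
import math


def bin_width_hz(sample_rate_hz, n_samples):
    """Frequency spacing between adjacent FFT bins."""
    return sample_rate_hz / n_samples


def capture_time_s(sample_rate_hz, n_samples):
    """How long it takes the ADC to deliver n_samples."""
    return n_samples / sample_rate_hz


def samples_for_resolution(sample_rate_hz, resolution_hz):
    """Smallest sample count that gives at least the requested bin width."""
    return math.ceil(sample_rate_hz / resolution_hz)


fs = 256_000                      # assumed value for "256KS/s"
print(bin_width_hz(fs, 1024))     # bin spacing of a 1024-point FFT
n = samples_for_resolution(fs, 1.0)
print(n, capture_time_s(fs, n))   # samples and seconds needed for 1 Hz bins
```

So a 1024-point transform at this rate has bins 250 Hz apart and fills in 4 ms, while 1 Hz bins need the full second of data regardless of how quickly the transform itself runs.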

Sample time: clock

Frequency time: per cycle of the frequency (confidence value 100% at full cycle)

FFT process time: rate of FFTs on a batch of data, this may be faster than screen refresh rate but not realtime.

Screen refresh time: 50-100 Hz

User time: N seconds

'Realtime' is an interesting term within the frequency domain, given that the time per cycle varies across the spectrum. The samples need processing at a minimum of 2x the rate of the frequency (Nyquist) for the minimum resolution. The benefit is signal detection at the frequency (ie confidence in a detected frequency grows as the sample clock moves through the first sine wave). It gives you the chance, for example, to detect a frequency and trigger at a confidence level. So there is a phase delay between the start of the signal and the detection.
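A per-sample update like this is what a sliding DFT gives you. The benchmark output earlier in the thread is labelled SDFT; assuming that stands for sliding DFT, the textbook recurrence looks like the sketch below. This is not the project's code, just the standard update X_k <- (X_k + x_new - x_old) * exp(2j*pi*k/N), checked against a direct DFT of the same window.

```python
import cmath
import math
from collections import deque


class SlidingDFT:
    """Textbook sliding DFT: every bin is updated once per incoming sample."""

    def __init__(self, n):
        self.n = n
        self.window = deque([0.0] * n, maxlen=n)   # last n samples
        self.bins = [0j] * n
        # One rotator per bin: exp(+2j*pi*k/n)
        self.rot = [cmath.exp(2j * cmath.pi * k / n) for k in range(n)]

    def push(self, x):
        oldest = self.window[0]
        self.window.append(x)          # maxlen drops the oldest sample
        delta = x - oldest
        self.bins = [(b + delta) * r for b, r in zip(self.bins, self.rot)]


def direct_dft(window):
    """Plain O(n^2) DFT used only as a reference."""
    n = len(window)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * m / n)
                for m, x in enumerate(window))
            for k in range(n)]


n = 16
sdft = SlidingDFT(n)
for i in range(40):
    sdft.push(math.sin(2.0 * math.pi * 3 * i / n))   # tone centred on bin 3

reference = direct_dft(list(sdft.window))
max_err = max(abs(a - b) for a, b in zip(sdft.bins, reference))
peak_bin = max(range(n // 2), key=lambda k: abs(sdft.bins[k]))
print(peak_bin, max_err)
```

Because each update reuses the previous result, rounding error accumulates over time, which ties back to the earlier worry about how many bits of the output are actually error free.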

Reading up a little, for example a 0.01Hz signal would need a sample rate of at least 2x, ie 0.02Hz (one sample every 50 seconds), and therefore a 100 second history buffer to hold one full cycle. For a number of cycles multiply that by at least 4x (ie 4 cycles minimum) to classify it as an intermittent signal. This is a moot point given an FFT in realtime is likely to be binned at a lower resolution. It can be detected in realtime but given a DC block I suspect that it's not going to be very useful. There's nothing stopping a realtime averaged low resolution FFT placing identifying markers for later, more costly reprocessing.

All the data is still being logged at sample rate, so both realtime and offline processing can be performed. And in fact, as you've alluded to, the information is there to post-event FFT, detect and trigger. So it's very possible to process FFTs up to the screen refresh rate for them to appear realtime from a display point of view. Which is good enough for 99.9% of users.

It's also possible to apply FFTs over time and FFT the corresponding result to detect cycling spikes for oscillations for example - I used Z axis FFT of the 2D FFTs as part of the CCD astro work. The human is a great computer but it can't cope with a massive stream of information.

In FFT you have the luxury of sample history to zoom into the RBW (resolution bandwidth) without new data. The limitation compared to an analogue sweep is that the sample rate is fixed by the ADC, so unless you downconvert (or slow the sweep voltage controlled oscillator) in the analogue domain to slow the signal down for more samples/sec on the slower signal, you're limited in the final resolution. The FFT "becomes" the bandpass filter, if you will, from what I can see.

Also as you've pointed out the CM4 with the larger memory options allows for more memory-costly non-realtime processing. This would include phase correlation and history based FFT transforms. The faster processing power would mean a more reactive analyser.

Accuracy and precision then also follow, given the extra time for processing.


GPU FFT in RPi using the normal methods: GPU_FFT

So for offline processing (ie large blocks of samples) this will work well for any rate our ADC can muster in terms of resolution. We can extend the sizes to larger FFTs for low frequency but as discussed how useful that would be, personally under 1Hz I would simply default that to DC. It would be possible to process for <1Hz and subtract from the FFT to correct.

More useful would be multiple FFTs in terms of bins over time for repeating signal analysis - but the resolution then becomes dependent on how many FFTs you have over the second. Or offline 2x FFT per highest frequency..

Also it's not far then to GPU -> OpenGL -> screen for a rendering without messing with the CPU (as much as shared busses allow), replicating what I've done in terms of astro work.

In terms of accuracy, the current GPU_FFT uses float, hence the point about 1 order of magnitude of error between GPU_FFT and FFTW. This would need quantifying so we know the impact on our calculations. If we have 24bits, it doesn't make sense if we find the resulting calculations are error free to 10bits. A float being 24bits of mantissa is all well and good but no use if the GPU processing destroys it. (again if the error is below our noise floor then perhaps it's not a problem)

Incidentally, there's even research into FFT power consumption on RPi, including FFTW (CPU), GPU_FFT and scipy: https://digitalcommons.library.umaine.edu/cgi/viewcontent.cgi?article=3898&context=etd


Nick, I always find your posts exceedingly interesting and informative. Your recording of thought processes and thoroughness are laudable.

Allows me to remember where I was and what needs doing!

I was reading the RPi programmers manual (with the register bit values etc) plus a couple of other sites around DMA.

So it's possible to set up an interrupt on the rising edge of a GPIO pin, then execute a DMA control block. That control block would copy the GPIO states for the pins 1 through 24 from the mapped memory location to the buffer, et voilà, a DMA buffer we can really drive processing from. We could even trigger an interrupt for each DMA transfer that the code could use to start processing.

Now the interesting thing would be - could you DMA into GPU memory as it's simply a logical separation with the shared memory architecture? Also given the interrupt and CPU core caches, we may want to look at the memory cache invalidation strategy used for the area containing the circular buffer. In theory the sample buffer (if each sample is processed immediately) is a write only and so cache invalidation for a 4K page will not harm it but if the performance relies not on write through but write caching of the memory page that may cause a problem. Too detailed but worth a check.

Looking at some sources for drivers that do something similar, it appears the GPU halts the interrupts every 500ms for 16us too, plus we're getting dangerously into the undocumented with respect to video pipeline caching etc. Given we have 1M samples/sec -> 1us between interrupts, that sort of thing will put a hole in our samples, as in continuous mode the ADC will not wait. The alternative is to run a system that simply pulls data continuously, which is not good for performance.

Breaking the circular buffer into sections helps - it allows us to maintain a current circular buffer always but then selectively use buffers (either as a large block for FFT or writing to disk).
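A rough sketch of the sectioned circular buffer idea in Python. This is purely illustrative: the real thing would be a DMA target in C, and the class and method names here are made up. The writer fills one segment at a time and reports when a segment completes, so a consumer (FFT or disk writer) can take whole segments while capture carries on into the next one.

```python
class SegmentedRing:
    """Ring buffer split into equal segments, handed over as they fill."""

    def __init__(self, n_segments, segment_len):
        self.segments = [[0] * segment_len for _ in range(n_segments)]
        self.segment_len = segment_len
        self.write_seg = 0
        self.write_pos = 0
        self.completed = []            # full segment indices, oldest first

    def push(self, sample):
        """Store one sample; return the segment index if it just filled."""
        self.segments[self.write_seg][self.write_pos] = sample
        self.write_pos += 1
        if self.write_pos < self.segment_len:
            return None
        full = self.write_seg
        self.completed.append(full)
        self.write_seg = (self.write_seg + 1) % len(self.segments)
        self.write_pos = 0
        # The segment about to be overwritten is no longer valid history.
        if self.write_seg in self.completed:
            self.completed.remove(self.write_seg)
        return full

    def read(self, seg):
        """Copy a completed segment out for FFT or disk."""
        return list(self.segments[seg])


ring = SegmentedRing(n_segments=4, segment_len=8)
filled = [seg for seg in (ring.push(i) for i in range(20)) if seg is not None]
print(filled, ring.read(0))
```

The "segment full" return value plays the role of the per-transfer interrupt mentioned above: processing is driven by completed blocks rather than by polling individual samples.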

What we don't want is one or more missing samples - that would be bad for online or offline processing. Hence the focus on the possible oops moments we could have. Ubuntu and Debian are not realtime operating systems (RTOS) so latency could very easily cause a problem in capture, so I think the next step is a deeper look at the RTOS.

Nice summary of OSes: Raspberry Pi Real-Time OS (RTOS): Which to Choose | All3DP

Although freertos has AWS in the background, ChibiOS/RT looks better in terms of documentation and support on RPI: Getting Started With ChibiOS/RT on the Raspberry Pi


Hmm.. ChibiOS/RT looks good but the last update from Pete on the RPi version was 2012 which means essentially porting it again for the newer models. Although it's not going to be too different it is missing some functionality (LAN and USB). The main OS master line seems good with good support.

James, who ported FreeRTOS, has really changed his focus to his own bitthunder RTOS so the last FreeRTOS update was 2017.

Secondary Memory Interface | Hackaday

Another option is the SMI - Secondary Memory Interface - with 500Mbps to play with. The software defined radio is running at 4Msps, so our simple 1Msps or less is child’s play. We can switch from 24bit parallel to 16bit by doubling the parallel throughput - the SPI code simply clocks a 16bit shift, then another 16bit shift, for each ADC SPI sample. That allows us to fit in the SMI 16bit width limitation but at a far higher rate, whilst perhaps reducing the number of GPIO pins in use (it also allows an 8bit parallel bus for FFT output transfer or results transfer to the main RPi, for example). This uses the same GPIO pins in parallel, but instead of using the GPIO peripheral hardware+interrupt+DMA it uses the SMI, which has its own interrupts+DMA.
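A sketch of what carrying one 24bit sample as two 16bit transfers could look like on the receiving side. The word layout is my assumption (high word first, upper 8 bits of the sample in its low byte, zero padded), as are the function names. The real layout would be whatever the CPLD is programmed to emit.

```python
def split_24(sample):
    """Split a signed 24-bit sample into (high, low) 16-bit words.

    Assumed layout: high word carries bits 23..16 in its low byte,
    low word carries bits 15..0.
    """
    if not -(1 << 23) <= sample < (1 << 23):
        raise ValueError("sample does not fit in 24 bits")
    raw = sample & 0xFFFFFF                 # two's complement, 24 bits
    return (raw >> 16) & 0xFFFF, raw & 0xFFFF


def join_24(high, low):
    """Rebuild the signed 24-bit sample from the two 16-bit words."""
    raw = ((high & 0xFF) << 16) | (low & 0xFFFF)
    if raw & 0x800000:                      # sign bit set: extend the sign
        raw -= 1 << 24
    return raw


for value in (0, 1, -1, 0x123456, (1 << 23) - 1, -(1 << 23)):
    high, low = split_24(value)
    print(value, hex(high), hex(low), join_24(high, low))
```

With this layout a quarter of the transferred bits are padding, so 1Msps of 24bit samples becomes 2M 16bit words per second, which is still well inside the figure quoted for the SMI.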

Only concern is the lack of documentation and the fatal crash due to bus conflict. Without the former, the latter is a bit difficult to target.

Thinking about this more - our CPLD helps because we can control the output. This is important as the SMI is “always on”, hence if you push data at it, it will attempt to process it. For an ADC pushing data continuously that’s good, but the CPLD provides a switch to control it.

Additionally it’s confirmed that you can define a 16pin bus but configure the other pins for the other modes. Neat feature for packing I/O into the small number of pins.


SMI is doubtlessly interesting. But slaved I2S easily handles 512kHz/24bit/2ch, yielding the required 1Msps 24bit single channel, the driver is available and well tested. Some small high-speed FPGA can convert SPI to I2S master. All the user-space ecosystem is available for alsa audio streams.

I had a look at the i2s driver and it’s all cpu byte copies - one from user area into a ring buffer and the second from the ring buffer to the FIFO buffer. So it would work but not the most elegant (ie cpu load).

I’ll look into the i2s latency.

The old RPi 3 had an I2S speed error.

The RPi4 has a “400khz max” however people have put their scopes on and it looks like 1MHz or even 3MHz is possible. I’d need to be confident there are no dropped frames under load. The CPLD would not be buffering for the continuous conversion. An FPGA to convert a non-standard 24bit read for I2S seems overkill.

I had a look at the i2s driver and it’s all cpu byte copies - one from user area into a ring buffer and the second from the ring buffer to the FIFO buffer. So it would work but not the most elegant (ie cpu load).

Any code and link pointers to your I2S infos?

RAM -> FIFO (and back for capture) is via DMA, shown in the datasheet. Where did you find the memcpy from user area to the RAM DMA buffer in the driver? My knowledge of the alsa SoC framework is quite rudimentary and I do not know how to find it. Nevertheless typically RAM-based audio devices (e.g. all PCI-based ones) give the userspace direct access to the DMA'd RAM via the alsa hw:XX device. My 2 cents the same holds for the I2S peripheral which also transfers data from the FIFO to RAM via DMA, just like PCI devices do.

USB devices use dual buffering as the final frames (accessed by the USB controller via DMA) must be composed of packets generated by the various USB-device drivers.

I’ll look into the i2s latency.

The I2S peripheral has 64 slot FIFO, fill level (=DMA trigger) configurable. The rest is about alsa driver latency, period length. Significantly longer than 64 frames.

Why is latency important when high-resolution FFT will take seconds to collect enough samples (as Demain mentioned before)?

The rp4 has a “400khz max” however people have put their scopes on and it looks like 1MHz or even 3Mhz is possible.

Honestly I have no idea what you mean. Do you talk about I2S, or perhaps I2C? Any links? I tested RPi4 I2S slaved at 768/1024/1536kHz samplerate in loopback (STICKY: The I2S sound thread. [I2S works] - Page 41 - Raspberry Pi Forums) and with ES9038Q2M at 768kHz (worked for 24bit TDM slot, not for 32bit slot).

raspberry-pi-i2s-driver/i2s_driver.c at master · taylorxv/raspberry-pi-i2s-driver · GitHub is an example of software byte transfer. I’ll have a look to find the official RPi driver but that was the link that kept appearing.

Also the Broadcom manual indicates the three options for software and dma: https://datasheets.raspberrypi.com/bcm2711/bcm2711-peripherals.pdf#page85 chapter 7 - I see there’s a grey mode that could be used and the i2s takes time to flush <32bit transfers with zeros.

No, the clocking for I2S can be set higher. Although the RPi clock sucks, having an external master clock drive continuous transfers (ie the clock chip attached to the ADC that clocks the data ready - that is not controlled by the CPLD or RPi) would give an even sample spread, more accurate than RPi initiated reads.

The reason for being concerned with latency, clocking and transfers is twofold:

A) it’s too easy to miss a sample.

B) it’s too easy to throw away performance using software and not use the hardware that does it more efficiently. The CPU time is then available for the FFT and other features.

You are right the Google result returned i2c and I didn’t notice. For i2c thread: pi 4 - What is Rpi's I2C Maximum Speed? - Raspberry Pi Stack Exchange


Also confirmed the screen I want to use is an IPS capacitive touch screen - 7”, which matches the 1104X-E, so it’ll be a good size without being too large. Tempted to add a 4x4 push button matrix and a rotary encoder dial that can be configured. The IPS has a 100Hz response time too.

Touch screen is good but trying to pinch on a virtual screen on a bench may be annoyingly RSI inducing.

The form I’m trying to aim for is about the size of the 1104. Not too big but large enough to fit everything in with a common power supply.
