> [...] all. The smallest one was 10' x 17' x 8'; subtract a foot for foam. They all had to satisfy ITU-T P.341.

Not sure a chamber with 1 ft wedges is sufficiently anechoic for our "Absolute Listening Test" 🙂
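To put rough numbers on the 1 ft wedge remark, here is a back-of-envelope sketch. It assumes c = 343 m/s, rigid-wall axial modes f = n·c/(2L) for the 10' x 17' x 8' shell, and the common rule of thumb that a wedge lining absorbs well down to roughly the frequency where its depth is a quarter wavelength. Real wedge cutoffs depend on the design, so treat these as ballpark figures only.

```python
# Back-of-envelope chamber numbers (illustrative sketch, not a design tool).
# Assumptions: c = 343 m/s (air at ~20 degC), rigid-wall axial modes,
# and the rule of thumb "wedge depth ~ lambda/4 at the lower cutoff".
C = 343.0      # speed of sound, m/s
FT = 0.3048    # metres per foot


def axial_mode_hz(length_ft: float, n: int = 1) -> float:
    """n-th axial mode along one rigid-walled dimension: n * c / (2 * L)."""
    return n * C / (2.0 * length_ft * FT)


def wedge_cutoff_hz(depth_ft: float) -> float:
    """Rule-of-thumb lower cutoff of a wedge lining: c / (4 * depth)."""
    return C / (4.0 * depth_ft * FT)


for dim in (17.0, 10.0, 8.0):
    print(f"{dim:4.0f} ft: first axial mode ~ {axial_mode_hz(dim):5.1f} Hz")
print(f"1 ft wedges: useful down to roughly {wedge_cutoff_hz(1.0):.0f} Hz")
```

On these assumptions the 1 ft wedges run out of steam somewhere around 280 Hz, while the shell's first axial modes sit near 33, 56 and 70 Hz, so the bottom end of such a room is far from anechoic.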

Here's an example of distortion in the vocal track, especially noticeable as things warm up, from the late 30s (seconds) in.

Pages and pages, and I still concur.

Angelo's method measures response & THD to a given accuracy in the theoretically shortest possible time. That's why I developed the theory & code about a decade before him. I wanted it for factory test, but at the time the computing power (and, more significantly, good A/Ds) were too $$$, as I wanted loads.

> "[...] Although much, much slower than a log sweep the stepped sine measurement can measure low distortion levels much more accurately than a sweep [...]"

[https://www.roomeqwizard.com/help/help_en-GB/html/graph_distortion.html]

I wasted months of my life on log sine.

Stepped sine was worth every minute.
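For readers who haven't seen it done, one way to get THD at each step of a stepped-sine measurement is to play one pure tone, then fit DC, the fundamental and its harmonics to the captured record by least squares. This is a generic sketch, not REW's, Clio's or Angelo's implementation; `device` is a hypothetical stand-in for the driver under test (a mild square-law nonlinearity), and the step frequencies, record length and harmonic count are arbitrary.

```python
import numpy as np


def harmonic_amplitudes(y, fs, f0, n_harm=5):
    """Least-squares fit of DC plus harmonics 1..n_harm of f0 to record y.

    Returns the peak amplitude of each harmonic (index 0 = fundamental).
    """
    t = np.arange(len(y)) / fs
    cols = [np.ones_like(t)]
    for k in range(1, n_harm + 1):
        cols.append(np.cos(2 * np.pi * k * f0 * t))
        cols.append(np.sin(2 * np.pi * k * f0 * t))
    A = np.column_stack(cols)
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return np.hypot(coef[1::2], coef[2::2])  # (cos, sin) pairs -> amplitude


def thd(amps):
    """THD as a ratio: RMS sum of harmonics 2.. over the fundamental."""
    return np.sqrt(np.sum(amps[1:] ** 2)) / amps[0]


def device(x):
    """Hypothetical DUT: a mild second-order nonlinearity."""
    return x + 0.02 * x ** 2


def stepped_sine_thd(freqs, fs=48000, dur=0.1, n_harm=5):
    """One pure tone per step; fit harmonics; collect THD per frequency."""
    t = np.arange(int(fs * dur)) / fs
    result = {}
    for f0 in freqs:
        y = device(np.sin(2 * np.pi * f0 * t))
        result[f0] = thd(harmonic_amplitudes(y, fs, f0, n_harm))
    return result


print(stepped_sine_thd([100.0, 1000.0, 3000.0]))  # each ~0.01 (1 %)
```

The fit only needs the known test frequency, not an integer number of cycles, and noise outside the fitted sinusoids largely averages out as the record gets longer; that is the trade of time for accuracy the REW quote describes.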

We ended up getting into bed with Clio. They did ClioQC for us and it has a lot of our ideas. I thoroughly recommend Clio for factory test and also as an inexpensive tool for R&D. Their measurement mike is one of the very few whose calibration I trust.

Does REW use Angelo's method?

Hah! It's Arie Kaizer. I met him in Hamburg at AES 1981 (?) over a couple of pints. It took me an hour before I realised his "wretched pistons" were actually "rigid pistons" 😆 He was the first to attempt FEA of cone behaviour (p38), while we were the first to do laser scans of diaphragms to confirm these simulations, hence our mutual interest.

This is the best explanation of Wigner stuff I've seen but also illustrates its shortcomings.

Anyone wanna explain how his Fig 67 (p37) Wigner tells you more useful stuff than his Fig 66 (p36) CSD aka 'waterfall' aka KEFplot?

His brother used (?) to be editor of Elektor and did several good articles on Ambisonics

Arie's papers touch on some less well known (completely ignored?) but really important stuff which I hope to pontificate on when hifijim starts a clean thread 🙂


> @kgrlee: much better than the Stereophile measurements..

Thanks for this, Boden. Da explanation of Spinorama is excellent, but I dunno that it is any better than the traditional Julian Wright '3D' directivity plots that JA uses in Stereophile. I worked with Julian at Celestion on loadsa stuff.

Erin's Audio Corner is also a good place to see the same kind of evaluation.

Look here to start:

A big (huge) positive of the Stereophile speaker measurements is that JA has been doing them and presenting them the same way since ~1988 or so, so there is a huge database of measurements on all kinds of speakers.

The downside, of course, is that the measurement technology is fixed at what was then state of the art. Now it is a rather simplistic set of measurements. If they switched to CEA-2034A today, it might be hard to compare future speakers with past ones. There are pluses and minuses to every decision.


> I believe that the "spinorama" (not sure why, but I hate lingo like that) originated with Olive and Toole's research at Harman (and Toole's prior research at the NRC in Canada before being recruited to Harman) as they tried to characterize speakers and determine which parameters were preferred in blind testing. Starting in the era of the LSR monitors (LSR 6332, 6328 I think), Harman began to engineer their speakers with these goals in mind and shared testing data in that format (attached). This approach is now seen throughout JBL's professional speakers and Revel's (part of Harman) home speakers.

Supa dupa spruik of da Harman empire 🙂

There's no doubt Toole & Olive have popularized much good stuff, and speakers in general (especially cheapo ones) are much better cos of their efforts 🙂

They quote our work from da 70s & 80s so they can't be bad 😎 But I don't think they have come up with anything valuable to add to what we did then.

The reason they are false prophets is the way they conduct DBLTs. After all, to answer the original question, we need to measure 'sound'.

The measuring instrument is your DBLT panel. It has an accuracy that needs to be checked every now and then. They claim only 'trained' listeners can give reliable results and (like some people here) that different listeners will like different speakers. This tells me their test is flawed. My DBLTs show that da teenage girl in from da street 'likes' the same speakers as da recording engineer who designs his own microphones.

Deleted: 100 pages on DBLTs.

One HUGE factor in my tests that da false prophets ignore is that the subjects must CHOOSE THE MUSIC.

I've spoken to people involved in setting up their supa dupa DBLT facilities at Harman ... Shut up Lee! Save your ranting for hifijim's thread!

> The Klippel apparatus used at ASR and by @bikinpunk (Erin's Audio Corner, YT link shared above) is an (expensive) system that uses near-field, non-anechoic techniques to generate the spin data and other measurements (including CSDs, distortion, etc.). Several manufacturers have acquired the Klippel system and are using it in design (Magico would be a "high-end" example).

Klippel came on the scene after I disappeared into the bush to become a beach bum, so anything I say about it is purely based on da www. My very casual look suggests it can do most of the stuff I would want, but his real forte is production testing, though it's a bit $$$ for loadsa units. I think he even has a Scanned Doppler Interferometry system, which was unique to us in da old days, to look at diaphragm behaviour 🙂

At last! A 'waterfall'. Deleted: 10 pages of why 'waterfalls' aka KEF CSDs aka KEFplots are rubbish.

OK! I'm biased. 'Waterfalls' are probably the most important measurement done these days that relates to how things sound. But the correct interpretation of 'waterfalls' is sadly lacking, let alone of Wigners, cepstrums and other high-falutin buzzwords.

JA at Stereophile has probably looked at more 'waterfalls' than anyone alive. But I once spent an hour or two over several pints explaining to him the difference between our PAFplots & da KEFplots, and also how to interpret them, eg the CSD of a good-sounding paper cone is very different from that of a good-sounding plastic cone.
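Since the thread keeps coming back to 'waterfalls', here is a bare-bones sketch of how a CSD (cumulative spectral decay) is commonly built from an impulse response: each slice starts a little later in the IR but ends at the same gate, so later slices show only the energy still ringing. The gate length, step, fade and FFT size are arbitrary assumptions, the test IR is a made-up 2 kHz resonance, and this is not KEF's code or the PAFplot mentioned above.

```python
import numpy as np


def cumulative_spectral_decay(ir, fs, gate_s=0.005, step_s=0.0002,
                              n_slices=20, nfft=4096):
    """Return (freqs, slices_db), one magnitude spectrum per start delay.

    Every slice runs from its own start time to the same gate end, with a
    short half-Hann fade-out at the gate to limit truncation ripple.
    Levels are in dB relative to the peak of the first slice.
    """
    ir = np.asarray(ir, dtype=float)
    gate_end = min(len(ir), int(round(gate_s * fs)))
    step = max(1, int(round(step_s * fs)))
    freqs = np.fft.rfftfreq(nfft, 1.0 / fs)
    slices = []
    for i in range(n_slices):
        start = i * step
        if start >= gate_end:
            break
        seg = ir[start:gate_end].copy()
        n_fade = max(1, len(seg) // 10)
        seg[-n_fade:] *= np.hanning(2 * n_fade)[n_fade:]  # falling half only
        spec = np.abs(np.fft.rfft(seg, nfft))
        slices.append(20.0 * np.log10(spec + 1e-12))
    slices = np.array(slices)
    return freqs, slices - slices[0].max()


fs = 48000
t = np.arange(int(0.02 * fs)) / fs
# Hypothetical driver IR: a 2 kHz resonance ringing with tau = 1 ms
ir = np.exp(-t / 0.001) * np.sin(2 * np.pi * 2000 * t)
freqs, csd = cumulative_spectral_decay(ir, fs)
k = int(np.argmin(np.abs(freqs - 2000)))
print(np.round(csd[:, k], 1))  # level at 2 kHz falls slice by slice
```

Plotting each row of `csd` offset in time gives the familiar waterfall; the interpretation problems discussed above start exactly here, since gate length and fade shape change what the slices look like.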


> If they switched to CEA-2034A today, it might be hard to compare future speakers with past.

Wanna tell us which part of CEA-2034A tells us more than da simplistic Stereophile stuff?
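As one concrete piece of CEA-2034A, the 'listening window' curve is a spatial average of nine responses: on-axis, ±10° vertical, and ±10°, ±20°, ±30° horizontal. The sketch below assumes an energy (RMS-pressure) average in dB and a hypothetical dictionary of SPL curves on a common frequency grid; the example levels are invented, and the standard itself should be checked before relying on the details.

```python
import numpy as np

# (plane, angle in degrees) pairs for the listening window, as commonly
# described for CEA-2034A: on-axis, +/-10 deg vertical, +/-10/20/30 deg horizontal.
LISTENING_WINDOW = [("h", 0), ("v", 10), ("v", -10),
                    ("h", 10), ("h", -10), ("h", 20), ("h", -20),
                    ("h", 30), ("h", -30)]


def power_average_db(curves_db):
    """Energy (RMS-pressure) average of SPL curves given in dB."""
    p2 = 10.0 ** (np.asarray(curves_db, dtype=float) / 10.0)
    return 10.0 * np.log10(p2.mean(axis=0))


def listening_window(spl):
    """spl: hypothetical dict {(plane, angle): array of dB vs frequency}."""
    return power_average_db([spl[key] for key in LISTENING_WINDOW])


# Made-up example: 85 dB on-axis, drooping 1 dB per 10 deg off-axis.
spl = {(p, a): np.full(3, 85.0 - abs(a) / 10.0) for p, a in LISTENING_WINDOW}
print(listening_window(spl))
```

The other spinorama curves (early reflections, sound power) are built the same way from different angle sets and weights, which is why they need the full 360° data that a Stereophile-style set does not provide.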

> They claim only 'trained' listeners can give reliable results and (like some people here) that different listeners will like different speakers. This tells me their test is flawed. My DBLTs show that da teenage girl in from da street 'likes' the same speakers as da recording engineer who designs his own microphones.

From my own reading of Olive and Toole's work, they agree with you that all listeners do eventually converge to the same preferences. The claim about trained vs untrained listeners is that the trained listeners come to the same conclusion much more quickly and more reliably when describing their preferences.

https://pearl-hifi.com/06_Lit_Archi...blications/Trained_vs_Untrained_Listeners.pdf

"Significant differences in performance, expressed in terms of the magnitude of the loudspeaker F statistic FL, were found among the different categories of listeners. The trained listeners were the most discriminating and reliable listeners, with mean FL values 3–27 times higher than the other four listener categories. Performance differences aside, loudspeaker preferences were generally consistent across all categories of listeners, providing evidence that the preferences of trained listeners can be safely extrapolated to a larger population." Emphasis added.
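The loudspeaker F statistic F_L in that quote is an ANOVA ratio: how much a listener's ratings vary between loudspeakers compared with how much they vary between repeated presentations of the same loudspeaker. A simplified one-way version for a single listener might look like this; the paper's actual model may include more factors, and the ratings below are invented.

```python
import numpy as np


def loudspeaker_f(ratings):
    """One-way ANOVA F for one listener.

    ratings: array of shape (n_speakers, n_repeats). Large F means the
    listener separates the speakers consistently across repeats.
    """
    r = np.asarray(ratings, dtype=float)
    k, n = r.shape
    speaker_means = r.mean(axis=1)
    grand_mean = r.mean()
    ss_between = n * np.sum((speaker_means - grand_mean) ** 2)
    ss_within = np.sum((r - speaker_means[:, None]) ** 2)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (k * (n - 1))
    return ms_between / ms_within


# Invented ratings for three speakers, two repeats each.
consistent = [[7.0, 7.1], [5.0, 5.1], [3.0, 3.1]]
erratic = [[7.0, 3.0], [5.0, 6.0], [3.0, 7.0]]
print(loudspeaker_f(consistent), loudspeaker_f(erratic))  # ~1600 vs ~0.03
```

Both listeners could have the same underlying preference order; the F value only says how reliably it shows up, which is the distinction the quoted abstract draws between discrimination and preference.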

I'll toss a question in here - earlier in the thread I saw mention of Siegfried Linkwitz and his shaped tonebursts:

https://www.linkwitzlab.com/sys_test.htm

It appears Linkwitz was doing this before the word wavelet was coined (which has a perhaps more refined definition than "shaped toneburst"), and there's been a good bit of DSP development with wavelets, at least outside audio. Like a Fourier spectrum, a wavelet transform analyses a signal by frequency, but it also localizes it in time.

I just found this 1996 Linkwitz interview, from around when I recall the word wavelet showing up in the electronic design trade magazines. The whole thing is interesting for this thread, but page 3 is relevant to my interests here:

https://www.stereophile.com/interviews/503/index.html

The question: Have wavelets been used much in speaker/driver analysis and design? Why or why not? It certainly seems that wavelets would be relevant.
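To make the 'shaped toneburst' idea concrete: a few cycles of sine under a smooth window, with its duration scaled as 1/f, has a bandwidth proportional to its centre frequency (constant Q), the same scaling property a wavelet family has. The sketch builds a Hann-shaped burst and measures how much output is left after the burst has ended, as a crude 'energy storage' figure. The Hann window, 4 cycles and both test IRs are assumptions for illustration, not Linkwitz's documented recipe.

```python
import numpy as np


def shaped_toneburst(f0, fs, n_cycles=4.0):
    """n_cycles of a sine at f0 under a Hann envelope (illustrative shape)."""
    n = int(round(n_cycles * fs / f0))
    t = np.arange(n) / fs
    return np.hanning(n) * np.sin(2 * np.pi * f0 * t)


def stored_energy_db(ir, f0, fs, n_cycles=4.0):
    """Peak level remaining after the burst ends, relative to the overall
    peak of the response, in dB (a crude 'energy storage' indicator)."""
    burst = shaped_toneburst(f0, fs, n_cycles)
    y = np.convolve(burst, ir)
    tail = y[len(burst):]
    return 20.0 * np.log10(np.max(np.abs(tail)) / np.max(np.abs(y)) + 1e-12)


fs, f0 = 48000, 1000.0
t = np.arange(2048) / fs
ideal = np.zeros(2048)
ideal[0] = 1.0                                              # no storage at all
ringing = np.exp(-t / 0.005) * np.sin(2 * np.pi * f0 * t)  # hypothetical resonance
print(stored_energy_db(ideal, f0, fs), stored_energy_db(ringing, f0, fs))
```

Sweeping `f0` and plotting the result gives a one-number-per-frequency view of ringing; a proper wavelet transform would instead keep the whole output envelope over time at each frequency.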

> Another type of test that's not normally published is the shaped tone burst/energy storage one that Linkwitz liked:

It seems Mr. Linkwitz was on to something with his "shaped tonebursts." He discussed it more on this page:

https://www.linkwitzlab.com/mid_dist.htm

https://www.linkwitzlab.com/sys_test.htm



Deleted member 375592

> Not sure a chamber with 1 ft wedges is sufficiently anechoic for our "Absolute Listening Test" 🙂

Absolutely not an "Absolute Listening Test", not even close. The main target was to get repeatable, controllable measurements during work hours, and for that you need good sound isolation, like 40+ dB, from the noise of heavy trucks and planes. This also means that, to start with, you have a cage that is 0.99 reflective at low frequencies, with a native RT60 > 700 ms. It is relatively easy to bring it below 100 ms for f > 200 Hz... Good luck bringing it below 200 ms for f < 100 Hz.
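For anyone wanting to check RT60 figures like these on their own room or chamber, a common approach is Schroeder backward integration of a measured impulse response followed by a straight-line fit to part of the decay. This sketch fits the -5 to -25 dB range (a 'T20'-style estimate) on a broadband IR; the band filtering needed for statements like 'f < 100 Hz' is left out, and the synthetic test IR is invented.

```python
import numpy as np


def schroeder_rt60(ir, fs, upper_db=-5.0, lower_db=-25.0):
    """Estimate RT60 from an impulse response by Schroeder integration.

    Fits a line to the energy decay curve between upper_db and lower_db
    and extrapolates the slope to a 60 dB decay.
    """
    energy = np.asarray(ir, dtype=float) ** 2
    edc = np.cumsum(energy[::-1])[::-1]             # backward integral
    edc_db = 10.0 * np.log10(edc / edc[0] + 1e-300)
    idx = np.nonzero((edc_db <= upper_db) & (edc_db >= lower_db))[0]
    slope_db_per_s, _ = np.polyfit(idx / fs, edc_db[idx], 1)
    return -60.0 / slope_db_per_s


# Synthetic check: noise whose envelope falls 60 dB in 0.7 s.
fs, rt = 16000, 0.7
t = np.arange(int(1.5 * fs)) / fs
rng = np.random.default_rng(0)
ir = rng.standard_normal(len(t)) * 10.0 ** (-3.0 * t / rt)
print(round(schroeder_rt60(ir, fs), 2))  # close to 0.7
```

In a real low-frequency measurement the background noise floor bends the tail of the decay curve, so the fit range has to stay well above it.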

Could anyone explain to me why a waterfall (integrated RIR Gabor transform) is better than a simple spectrogram of the RIR? I prefer wavelets, though, and yes, I use them as a variable-length windowing scheme.

BTW, Farina aka good old chirp does not provide you with error-bound estimates and is overly sensitive to noise bursts.

> From my own reading of Olive and Toole's work they agree with you that all listeners do eventually converge to the same preferences. The claims of trained vs untrained listeners is that the trained listeners come to the same conclusion much quicker and more reliably when describing their preferences.

Their tests could be better arranged to get statistical significance more quickly, especially with 'untrained listeners'.

Some results from 2 decades of DBLTs.

- da man in da street is more perceptive (gives more reliable results) than da HiFi Reviewer

- da woman in da street is more perceptive than da man in da street. So pay attention if your wife, girlfriend, mistress, mother says, "I don't like this one as much as your old one" 😳

- Da wannabe Golden Pinnae, dem who can hear differences between mains plugs etc, are deaf and give completely random results in DBLTs

- Even da best true golden pinnae are opinionated & biased. There are some people whose opinions I value in a DBLT, but I still take anything they say sighted with a BIG pinch of salt.

Only one HiFi reviewer rises above the man in da street and may be in the same group as da true golden pinnae.

This is a serious issue. These days, you can hardly ever hear a speaker before buying, so everyone relies on 'reviewers' who are probably deaf. You really have to say very LOUDLY & CLEARLY, "My stuff is hand-carved from Unobtainium & Solid BS by Virgins."


> Could anyone explain to me why a waterfall (Integrated RIR Gabor transform) is better than a simple spectrogram of RIR?

Waz iz 'simple spectrogram of RIR'? Can you post an example? You must excuse my ignorance of Gabor & other buzzwords.

AFAIK, all da 'waterfalls', wavelets, cepstrums and Wigner stuff are operations on an 'anechoic' or quasi-anechoic (https://www.aes.org/e-lib/browse.cfm?elib=12875) IR, so dunno about Room Impulse Response.

> BTW, Farina aka old good Chirp does not provide you with error bounds estimates and is overly sensitive to noise bursts.

Not sure how you deduce that. Angelo's method has theoretically the best rejection of other stuff, including noise bursts.

I've made good measurements with background noise so loud I couldn't hear someone speaking nearby. One such occasion was when Angelo visited Cooktown, the Centre of the Universe, and brought presents. 🙂



Deleted member 375592

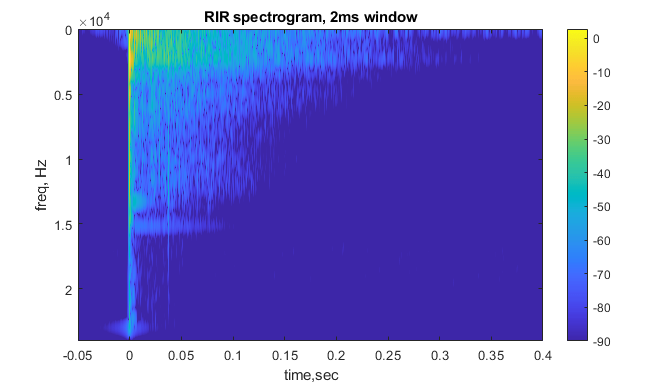

Here is a 'typical' spectrogram of RIR (dB scaled) which is a convolution of anechoic IR of spk and the room (here, my living room with half of foam off):

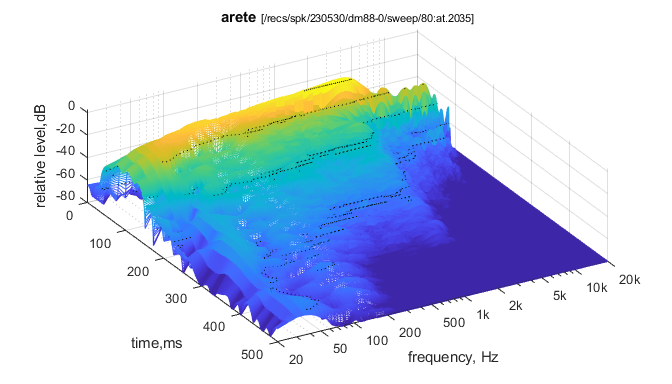

It is kind of hard to get anechoic measurements for DIY. I know what it is but not sure why mention them if you can't get them? Instead, I use observable data and pick the top of the ridge on wavelet sgram as spk:

Dennis Gabor was the first to introduce short-time Fourier analysis, back in the 1940s (he used only the Gaussian window then). https://en.wikipedia.org/wiki/Dennis_Gabor
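A minimal Gabor-style spectrogram, i.e. a short-time Fourier transform with a Gaussian window, for comparison with the cumulative 'waterfall': here the window slides along the IR, whereas a CSD keeps the gate end fixed and moves only the start. Window width, hop and FFT size are arbitrary assumptions, and the test signal is just a tone.

```python
import numpy as np


def gabor_spectrogram(x, fs, sigma_s=0.002, hop_s=0.0005, nfft=1024):
    """STFT with a Gaussian window of standard deviation sigma_s seconds.

    Returns (times_s, freqs_hz, magnitude_db) with one row per frame.
    """
    x = np.asarray(x, dtype=float)
    sigma = sigma_s * fs
    half = int(np.ceil(4 * sigma))          # truncate the Gaussian at +/-4 sigma
    if 2 * half + 1 > nfft:
        raise ValueError("window longer than nfft; raise nfft or lower sigma_s")
    n = np.arange(-half, half + 1)
    win = np.exp(-0.5 * (n / sigma) ** 2)
    hop = max(1, int(round(hop_s * fs)))
    padded = np.pad(x, (half, half))        # zero-pad so frames can centre on x[0]
    centres = np.arange(0, len(x), hop)
    frames = np.stack([padded[c:c + 2 * half + 1] * win for c in centres])
    spec = np.fft.rfft(frames, n=nfft, axis=1)
    mag_db = 20.0 * np.log10(np.abs(spec) + 1e-12)
    return centres / fs, np.fft.rfftfreq(nfft, 1.0 / fs), mag_db


fs = 48000
t = np.arange(int(0.05 * fs)) / fs
times, freqs, s = gabor_spectrogram(np.sin(2 * np.pi * 3000 * t), fs)
print(freqs[np.argmax(s[len(times) // 2])])  # near 3000 Hz
```

A fixed `sigma_s` gives the same time and frequency resolution everywhere; wavelet-style analysis instead shrinks the window as frequency rises, which is the variable-length windowing mentioned above.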

I deduced my conclusions from the theory of system identification, as in L. Ljung https://www.oreilly.com/library/view/system-identification-theory/9780132441933/ It is generally accepted that least squares is the Best Linear Unbiased Estimator (BLUE) in the p = 2 sense (under the Gauss-Markov assumptions: zero-mean, uncorrelated, equal-variance noise). The convolution methods, like chirp, MLS and perfect sequences, are much less computationally intensive, but pronouncing them 'The Best' could be a bit contradictory to the current state of science as we know it.

Particularly, chirp errs at low frequencies, where both noise and nonlinear distortions are at their worst.
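To illustrate the least-squares point: if the loudspeaker is modelled as an FIR filter h excited by a known signal x, ordinary least squares gives an estimate of h and, under the Gauss-Markov assumptions, also a standard error for every tap from σ²(XᵀX)⁻¹. Those are the kind of error bounds the post above says chirp deconvolution does not provide. The tap count, white-noise excitation and noise level are assumptions for illustration; real measurements need longer models and more careful noise modelling.

```python
import numpy as np


def ls_fir_identify(x, y, n_taps):
    """Least-squares FIR estimate with per-tap standard errors.

    Model: y[n] = sum_k h[k] x[n-k] + e[n], e white and zero-mean.
    Returns (h_hat, std_err).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    N = len(y)
    X = np.zeros((N, n_taps))
    for k in range(n_taps):                 # column k = x delayed by k samples
        X[k:, k] = x[:N - k]
    h_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ h_hat
    sigma2 = resid @ resid / (N - n_taps)   # unbiased noise-variance estimate
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return h_hat, np.sqrt(np.diag(cov))


rng = np.random.default_rng(1)
x = rng.standard_normal(4000)               # hypothetical white-noise excitation
h_true = np.array([0.5, -0.3, 0.2, 0.1, -0.05])
y = np.convolve(x, h_true)[:len(x)] + 0.01 * rng.standard_normal(len(x))
h_hat, se = ls_fir_identify(x, y, n_taps=8)
for k in range(8):
    print(f"h[{k}] = {h_hat[k]:+.4f} +/- {2 * se[k]:.4f}")
```

The price is the cost of solving an N x n_taps system instead of one FFT convolution, which is the computational trade-off mentioned above.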


^^^^An audio science that doesn't obey physics. Sure.

The 'like/don't like' factor...

This has got to be accepted

Physics has to stop at a certain point

Now it's all mixed up.

It's the confusion between the sum and the superposition of waves

The AES paper about anechoic and in-room bla bla that I accidentally opened contains an error in its premise: it attributes the error of in-room measurements to the reflections. But the reflections are what cause the perception of a room!

Etc. Etc

( Keeping all for Hifijim new incoming thread 🙂 )


Deleted member 375592

With chirp aka the Farina method, you don't measure the loudspeaker but how the loudspeaker fits your model of it. Anything that is not in the model is treated as observation noise and rejected. Whenever you err, you have no indication of how badly you erred. This way you completely reject Barkhausen noise, because you cannot distinguish it from the observation noise.

Thus we have this discussion "Do measurements matter?"
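For reference, these are the bare bones of the exponential sine sweep (Farina) method being argued about: sweep, inverse filter, and deconvolution by convolving the response with the inverse. Harmonic distortion products land ahead of the linear IR by L·ln(k), which is how the method separates them. The sweep range, length, and the square-law 'device' are assumptions for illustration; no fades, averaging or noise handling are included.

```python
import numpy as np


def exp_sweep(f1, f2, duration, fs):
    """Exponential sine sweep and its amplitude-compensated inverse filter."""
    t = np.arange(int(round(duration * fs))) / fs
    L = duration / np.log(f2 / f1)          # sweep rate constant
    sweep = np.sin(2 * np.pi * f1 * L * (np.exp(t / L) - 1.0))
    # Time-reversed sweep, attenuated 6 dB/octave so that sweep * inverse
    # has an approximately flat magnitude response over f1..f2.
    inverse = sweep[::-1] * np.exp(-t / L)
    return sweep, inverse, L


def deconvolve(y, inverse):
    """Linear convolution of the recorded response with the inverse, via FFT."""
    n = len(y) + len(inverse) - 1
    return np.fft.irfft(np.fft.rfft(y, n) * np.fft.rfft(inverse, n), n)


def harmonic_advance_s(L, k):
    """Time by which the k-th harmonic IR precedes the linear IR."""
    return L * np.log(k)


fs = 8000
sweep, inverse, L = exp_sweep(50.0, 1500.0, 2.0, fs)
y = sweep + 0.1 * sweep ** 2                # hypothetical 2nd-order nonlinearity
h = np.abs(deconvolve(y, inverse))
linear_idx = int(np.argmax(h))              # expected near len(sweep) - 1
second_idx = linear_idx - int(round(harmonic_advance_s(L, 2) * fs))
print(linear_idx, second_idx)
```

Anything that does not line up with the sweep (a noise burst, say) is smeared across the whole output rather than flagged, which is the behaviour both sides of this exchange are describing from different angles.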


> A big positive (huge) of the stereophile speaker measurements is that JA has been doing them the same way and presenting them the same way since ~ 1988 or so. So there is this huge database of measurements on all kinds of speakers.
>
> The downside of course is that the measurement technology is fixed in what was then state of the art. Now it is a rather simplistic set of measurements. If they switched to CEA-2034A today, it might be hard to compare future speakers with past. There are pluses and minuses to every decision.

Could take both, the old and the new! 🙂

> Here is a 'typical' spectrogram of RIR (dB scaled) which is a convolution of anechoic IR of spk and the room (here, my living room with half of foam off):

Do I understand you correctly: you can measure low frequencies in a normal listening environment (that is, without the usual gating) and derive nonlinear distortions in the low-frequency range?

