I would like to thank @Turbowatch2 for making me aware of the Thomann line of DSP units. The t.racks 4x4 mini https://www.thomann.de/de/the_t.racks_dsp_4x4_mini.htm is a nice alternative to the MiniDSP 2x4HD. It is nice to have options.

j.

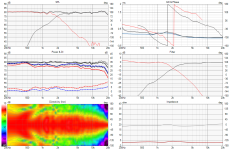

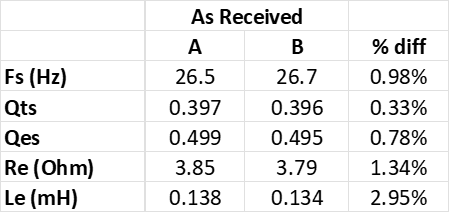

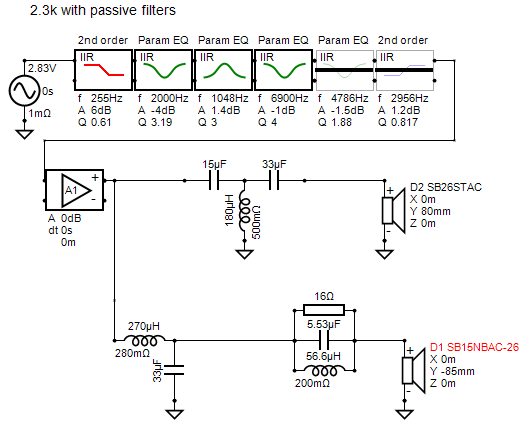

The woofers arrived, and I unpacked them and did some preliminary measurements… impedance sweeps and basic TSP stuff.

This is my first experience with Dayton woofers, and the Dayton RS270-4 woofers look nice. Using DATSv3 I measured them before and after break-in.

And after 20 minutes of 25 Hz sine wave at +/- 5mm excursion (about 3 Vrms)

Nice job Dayton. This is very good matching between the two woofers.

j.

In other developments, I have been looking at the possibility of using the SB26CDC tweeter as an alternate. I have a lot of experience with this driver, and I also happen to have a spare set of these. My original decision to use a soft dome tweeter came from a discussion with the person most likely to build this design, and his preference for soft domes. But other people have a preference for hard domes, and I would like to be able to offer this design with a hard dome option.

The impedance curve of the SB26CDC-C000-4 is very close to the SB26STAC-C000-4. The SB26CDC is slightly less sensitive, and the response curve is not quite the same, but both are reasonably flat. There are some directivity differences above 4k... but overall, the differences are minor.

I have a full set of polar responses for the SB26CDC from three earlier projects. I selected the project that had the baffle shape most similar to this one. To use this response, I had to adjust the driver gain to reconcile the different test conditions and drive voltages. I also had to ensure that the phase / delay of the two tweeters were compatible.

When I substitute the SB26CDC into the simulation in place of the SB26STAC, I get a response which is quite reasonable. This is with no changes in either the analog passive filters or the DSP filters. If I leave the passive filters unchanged, but optimize the DSP filters, I get a very nice response.

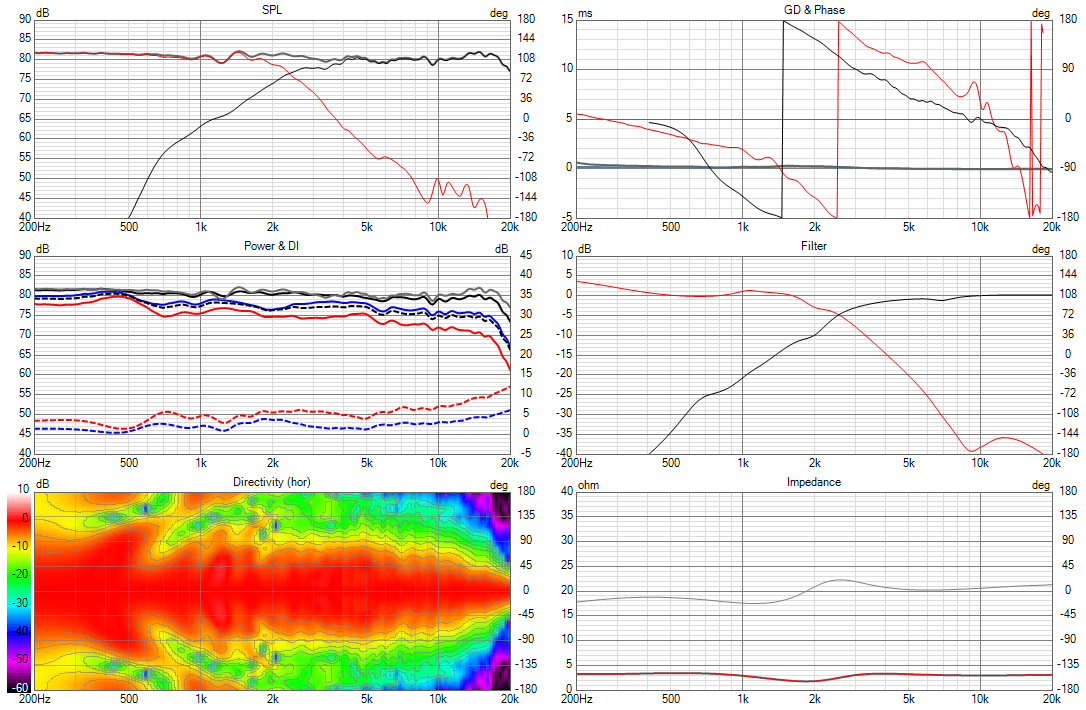

First the baseline with SB26STAC soft dome

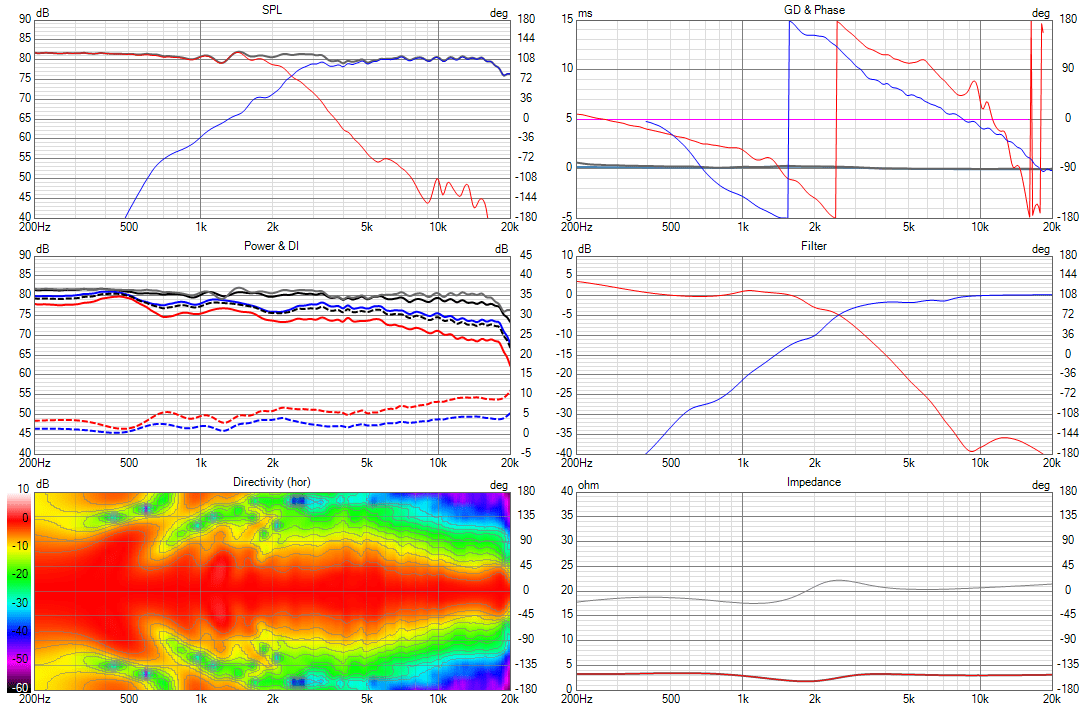

Now with the SB26CDC aluminum/ceramic dome

Once again, this is prototype data. The actual driver responses will be measured in the finished cabinets, and that will determine the filter configuration of the finished design.

This demonstrates to me that this speaker can probably be built with either tweeter, and the only change that would be needed is a slightly different set of DSP filters.

j.

I would personally cross the tweeter even lower.

I also noticed that you boost the shelving filter.

A rule of thumb is that it's better to attenuate instead to prevent clipping in either the DSP or preamp before the power amp.

Those Dayton RS are also still some really good-looking drivers! 🙂

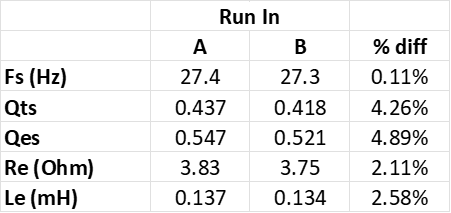

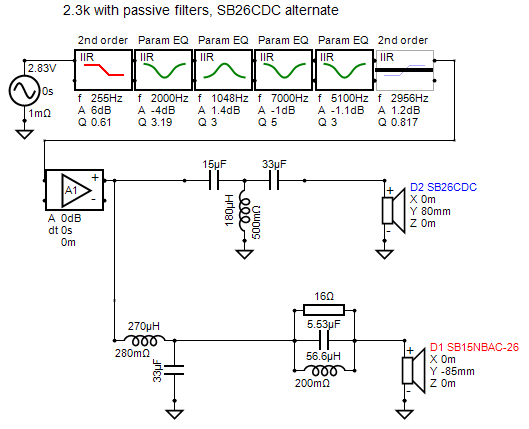

That 270 µH and 33 µF combo sure looks bold to me... Did you consider flattening the impedance of the SB26?

Yep, that will most definitely create a dip in impedance.

> I don't know if it's in error or not, but the impedance dips to 2 ohms at 2k.

Yeah, that has been on my mind. I knew I would have to address it at some point.

I had tried a few things, such as adding some resistance to the parallel 33 µF cap in the mid circuit and to the parallel coil in the tweeter circuit, but that altered the response and phase too much. @markbakk nudged me along a good path with his comment... If I increase the mid inductor from 0.27 mH to 0.47 mH, I maintain a nice response across all the curves (on-axis and power response curves are what I mostly focus on at this point)... AND the dip in impedance is taken care of... Nice.

Thanks for the insights and advice... I was worried that fixing the impedance dip would be more complicated... j.

> Did DATS calculate it?

No, I loaded the values into Excel. I also rounded everything to 3 significant digits. I doubt my measurements are better than that.

My biggest concern was that I might present the amp with too low of a load, even at 2k it may be too low. The amps I am planning to use are inexpensive class D units based on the TDA7498E chip ... These are not high quality robust amps.

Hypex Fusion amps are stable down to a 1 Ohm load... but these are not Hypex.

Rule of thumb is not to go below Re.

Even with very good amplifiers I wouldn't go near 2 ohm. It's just bad design practice. Potentially asking for trouble.

> I also noticed that you boost the shelving filter.
>
> A rule of thumb is that it's better to attenuate instead to prevent clipping in either the DSP or preamp before the power amp.

Could you expand on this thought a little bit more? My only experience with DSP active systems is using Hypex Fusion amps. These have a soft-clipping feature which can be adjusted to match the drivers.

If I understand you correctly, there is a difference in how the DSP and preamp will respond to these two filters:

Above: First order shelf, Boost of +6 dB at low frequencies

Below: First order shelf, Cut of -6 dB at high frequencies

To me, these two filters do exactly the same thing, with the relative gain being 6 dB higher in the first filter. I have never run into clipping in the way I use my equipment, but I suspect I am pretty conservative when it comes to headroom.
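To make that comparison concrete, here is a minimal sketch using textbook first-order analog shelf prototypes. The 300 Hz corner frequency and the function names are my own assumptions for illustration, not the actual filters in this design. With both shelves sharing the same pole, the two responses differ by a constant 6 dB at every frequency, so the shape is identical and only the absolute level changes.

```python
import math

def low_shelf_boost(f_hz, fc_hz, gain_db):
    """First-order shelf: +gain_db at low frequencies, 0 dB at high frequencies."""
    g = 10 ** (gain_db / 20)
    s = 1j * 2 * math.pi * f_hz
    wc = 2 * math.pi * fc_hz
    return (s + g * wc) / (s + wc)

def high_shelf_cut(f_hz, fc_hz, gain_db):
    """First-order shelf: 0 dB at low frequencies, -gain_db at high frequencies."""
    g = 10 ** (gain_db / 20)
    s = 1j * 2 * math.pi * f_hz
    wc = 2 * math.pi * fc_hz
    return (s / g + wc) / (s + wc)

def to_db(h):
    """Magnitude of a complex transfer function value in dB."""
    return 20 * math.log10(abs(h))

# Hypothetical corner frequency; the real design values are not given here.
FC_HZ = 300.0
freqs = [20, 100, 500, 2000, 20000]

# Level difference between the two shelves at each frequency.
offsets_db = [
    to_db(low_shelf_boost(f, FC_HZ, 6)) - to_db(high_shelf_cut(f, FC_HZ, 6))
    for f in freqs
]

for f, off in zip(freqs, offsets_db):
    print(f"{f:>6} Hz: boost shelf is {off:.2f} dB above cut shelf")
```

So the two filters sound the same once the overall gain is matched; the difference is only in how much level the signal chain has to pass.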

I have limited experience in how professional sound people use equipment. How exactly would the first filter result in clipping? What scenarios or use cases would the first filter create risk of clipping?

Thanks for your thoughts!

J.

So they are not the same: the first one contains a +6 dB boost.

Or, put another way, the first one is the second one with a 6 dB gain block added to it.

So even in the digital domain (= at DSP level), we can't just boost infinitely high.

How much that is depends on various factors. I just quickly looked up some information, and I think it is described well on the Analog Devices forum:

https://ez.analog.com/dsp/sigmadsp/...what-db-level-at-the-output-produces-clipping

I have forgotten the full underlying theory of what causes clipping in the digital domain.

(It has been a while; the brain gets rusty on theory after a while, lol.)

But I can look it up again, if you really want to know! 🙂

Also, we only have so much headroom after the DAC, as well as in our power amplifier.

6 dB is equal to 10^(6/20) ≈ 2, i.e. twice the output voltage!

So it goes up VERY rapidly at some point!

Especially when a DAC output only has something like ±2.5 V available (with a 5 V rail; that is the theoretical max, in practice it's often lower).

The danger here is mostly that you will just clip against the voltage rails.
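As a rough illustration of that rail limit, here is a small sketch. The ±2.5 V swing is the figure from above; the 2.0 V starting peak is a made-up example value, and the function names are hypothetical.

```python
def db_to_voltage_ratio(db):
    """Convert a gain in dB to a voltage ratio."""
    return 10 ** (db / 20)

def peak_after_boost(peak_v, boost_db):
    """Peak voltage after applying a boost of boost_db."""
    return peak_v * db_to_voltage_ratio(boost_db)

RAIL_LIMIT_V = 2.5  # theoretical maximum swing with a 5 V rail

ratio = db_to_voltage_ratio(6)        # roughly a factor of two
boosted = peak_after_boost(2.0, 6)    # assumed 2.0 V peak before the boost
clips = boosted > RAIL_LIMIT_V        # True means the peak would hit the rail

print(f"6 dB = x{ratio:.3f}, boosted peak = {boosted:.2f} V, clips: {clips}")
```

A signal that was comfortably inside the rails before the boost ends up well beyond them afterwards.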

How bad that is, depends on the context. How dynamic the music is for example.

Like you already mentioned yourself, in some cases it will (probably) just work fine.

My previous comment was more coming from good design practices.

Where and how you exactly approach that, depends again a bit on context as well as personal preference.

But I think it's a better approach to leave the highest point of "boost" (which can also be the peak of a parametric EQ) at the 0 dB level.

That way you know that your DSP+DAC always has maximum headroom available.

As if we just didn't apply any filtering at all (seen from a headroom perspective).
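A minimal sketch of that idea, assuming you already know the gain of your filter chain in dB at a handful of frequencies (the list below is invented for illustration, and the function name is hypothetical): shift everything down so the highest boost lands at 0 dB, and note the trim you applied so it can be made up later in the chain.

```python
def normalize_to_unity_peak(gains_db):
    """Shift filter gains so the largest boost sits at 0 dB.

    Returns the shifted gains and the trim (in dB) that was applied.
    Responses that never exceed 0 dB are left unchanged.
    """
    offset = max(max(gains_db), 0.0)
    shifted = [g - offset for g in gains_db]
    trim_db = 0.0 - offset
    return shifted, trim_db

# Hypothetical response: +6 dB shelf at the bottom, small cut at the top.
example_gains_db = [6.0, 3.0, 0.0, -2.0]
shifted, trim_db = normalize_to_unity_peak(example_gains_db)
print(shifted, trim_db)
```

The trim value is the gain you would then recover after the DAC, for example in the power amplifier.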

This assumes that the output amplifier has enough gain to deliver the full maximum power allowed for the loudspeaker.

If not, adding more gain in, say, the DAC filter stage often works better (since it has much more headroom than the DAC itself).

This is always a bit of a chicken-and-egg story.

Because at the same time we want to have the highest amount of output from our DAC to make sure our SNR is as high as possible!!

Otherwise we have to boost more after our DAC = more noise (since you boost the noise with it as well)

For any active analog stage it's basically the same story.

One of the reasons why pro audio systems like to use ±18V or even higher for the active filter stages!

So it's mostly a question of where we have the most headroom available.

In most cases that is the power amplifier.

What does this all mean in practice?

Well, the issue that can arise is that you think the mid frequencies are at a nice level (below clipping), but then all of a sudden a bass note comes along and hits the clipping point.

All of this is seen from a signal point of view, not a loudspeaker point of view.

Hopefully this made more sense; if not, please ask! 🙂

btw, I forgot to mention: if your volume control is BEFORE your DSP, this is obviously already less of an issue.

But since we want to maximize SNR again, it's better to have the volume control after the DSP.

If I remember correctly, the FusionAmp DSP has 48-bit internal processing depth, 8 bits of which are used for boosting headroom. Not sure about the latter part, but I've used them for >10 dB boosting without problems. My units are the older IIR-only type.

> btw, I forgot to mention: if your volume control is BEFORE your DSP, this is obviously already less of an issue.
>
> But since we want to maximize SNR again, it's better to have the volume control after the DSP.

And while this is mostly true, it will become irrelevant when using a digital feed and 24 or more bits of depth, which is the standard now.

This is a slightly different discussion.

Even in a system with just a digital feed and just a DAC, this order might be relevant.

How relevant depends on context.

But let's do some simple calculations.

Let's say we have a DAC with 102dB SNR at full scale.

Which is at 2Vrms.

This means that our fixed voltage noise level is at 10^(-102/20) * 2V = 0.016 mVrms.

This is the self noise of the DAC itself at an absolute (fixed) level.

Instead of 2Vrms, we now put out 0.2Vrms

So the SNR at this point is now 20 * log(0.2 V / 0.016 mV) = 82 dB.

Or a faster way of doing this: 102 + 20log(0.2/2) = 82 dB.
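For anyone who wants to check the arithmetic, here is a short sketch using the same example numbers (102 dB SNR at 2 Vrms full scale, then 0.2 Vrms output). The function names are my own.

```python
import math

def noise_floor_vrms(full_scale_vrms, snr_db):
    """Absolute noise voltage implied by an SNR spec at full scale."""
    return full_scale_vrms * 10 ** (-snr_db / 20)

def snr_at_level_db(signal_vrms, noise_vrms):
    """SNR in dB for a given signal level over a fixed noise floor."""
    return 20 * math.log10(signal_vrms / noise_vrms)

noise = noise_floor_vrms(2.0, 102)       # about 0.016 mVrms
snr_low = snr_at_level_db(0.2, noise)    # SNR when only 0.2 Vrms is output

print(f"noise floor: {noise * 1e3:.4f} mVrms, SNR at 0.2 V: {snr_low:.1f} dB")
```

Dropping the output by 20 dB against a fixed noise floor costs exactly 20 dB of SNR.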

You see that the lower this output level, the smaller this (relative) signal-to-noise ratio becomes.

How "bad" that is, depends on context and the rest of the chain.

So say we have at least a DAC output stage that does 6dB of gain and a power amplifier that has 30dB of gain.

This absolute level of noise is all of a sudden 10^((30+6)/20) * 0.016mVrms = 1mVrms

Say we have something like 24 high-sensitivity compression drivers of 110 dB per 2.83 V at 1 meter in an array.

At a short distance (1 meter), these just add up, so we get an extra gain of 20log(24) = 27.6 dB.

So a total of 110 + 27.6 = 137.6dB per 2.83V.

At 1mVrms (noise) this will do 20log(1mV/2.83V) + 137.6 = 68.6dB of noise!

In a quiet environment, that is very audible!

Even with just one single compression driver, that would have been 41dB of noise.
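The whole chain can be sketched in a few lines, using only the example numbers from this post (6 dB output stage, 30 dB power amp, 110 dB per 2.83 V drivers, coherent 20log summation for the array). The function name and parameter names are hypothetical.

```python
import math

def noise_spl_db(dac_snr_db, dac_full_scale_v=2.0, chain_gain_db=36.0,
                 sensitivity_db=110.0, ref_v=2.83, n_drivers=1):
    """Estimated acoustic noise level at 1 m from the DAC noise floor."""
    dac_noise_v = dac_full_scale_v * 10 ** (-dac_snr_db / 20)
    amp_noise_v = dac_noise_v * 10 ** (chain_gain_db / 20)
    array_gain_db = 20 * math.log10(n_drivers)  # simplified coherent sum
    return sensitivity_db + array_gain_db + 20 * math.log10(amp_noise_v / ref_v)

single = noise_spl_db(102)                  # one compression driver
array = noise_spl_db(102, n_drivers=24)     # 24 drivers at short distance
better_dac = noise_spl_db(112, n_drivers=24)

print(f"single: {single:.1f} dB, array: {array:.1f} dB, 112 dB DAC: {better_dac:.1f} dB")
```

The same simplifications apply as in the text: short distance and perfectly coherent summation, so real-world figures will be lower.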

Which can also be just about audible in a quiet room.

This is one of the reasons why in some cases you want a DAC with high dynamic range/SNR.

Not because of the dynamic range itself, but because it has an absolute noise level that will simply get amplified.

In the previous example, a DAC with an SNR of, say, 112 dB already knocks that total noise level down by 10 dB.

Obviously, this calculation is simplified.

In reality there is often more distance, so the noise levels go down, and in the case of an array the outputs of the drivers don't add up with a 20log anymore.

So in practice it won't be as extreme as that 68 dB of noise, but it's also still a long way to get below, say, 40-50 dB.

Keep in mind that some concert stages also host very light music with a very quiet audience.

I have been involved in some cases where the musicians were complaining about the noise, mostly because it was quite distracting from the (quiet) moment and performance.

When you have a volume control after the DAC, it attenuates the self noise of the DAC as well.

Therefore maximizing the SNR at every volume setting.

Because if the signal is being attenuated by 20dB, this noise is also attenuated by 20dB.

(minus the self noise of the volume control itself obviously).
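Here is a rough comparison of the two placements, again with the example DAC (2 Vrms full scale, about 0.016 mVrms of noise). The `control_noise_v` parameter is a hypothetical stand-in for the self noise of the volume control, combined with the attenuated DAC noise as an uncorrelated (root-sum-square) source.

```python
import math

def snr_db(signal_v, noise_v):
    return 20 * math.log10(signal_v / noise_v)

def attenuate_before_dac(full_scale_v, dac_noise_v, atten_db):
    """Digital attenuation: the signal drops, the DAC noise floor stays put."""
    signal = full_scale_v * 10 ** (-atten_db / 20)
    return snr_db(signal, dac_noise_v)

def attenuate_after_dac(full_scale_v, dac_noise_v, atten_db, control_noise_v=0.0):
    """Analog attenuation after the DAC: signal and DAC noise drop together."""
    k = 10 ** (-atten_db / 20)
    signal = full_scale_v * k
    noise = math.hypot(dac_noise_v * k, control_noise_v)
    return snr_db(signal, noise)

dac_noise = 2.0 * 10 ** (-102 / 20)
before = attenuate_before_dac(2.0, dac_noise, 20)   # volume control ahead of the DAC
after = attenuate_after_dac(2.0, dac_noise, 20)     # ideal volume control after the DAC

print(f"before DAC: {before:.1f} dB, after DAC: {after:.1f} dB")
```

With an ideal control the SNR stays at the full-scale figure; any non-zero `control_noise_v` pulls it back down.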

Unfortunately, it is not always very practical to implement.

To make a long story short, we don't care about "the bits"; we care about the fact that certain DACs with higher bit depth have lower noise levels.

> but I've used them for >10 dB boosting without problems

I have done that as well, and it will work in some cases.

Point is, to make it a deliberate choice, instead of trying to figure out why on earth things sound bad all of a sudden.

Just keeping everything at 0 dB will save you from potential problems, and depending on the situation you can make the decision to ignore that.

Like I said before, I think it's just better design practice that way.

I would rather have 8 or 10 dB too much headroom than finish a design and then realize it's close to clipping when somebody turns everything up.

If you're short on sensitivity, you can also always just put in a gain block instead.

I think that is just a much nicer design choice.

There are some flaws in this reasoning. The noise floor of a delta-sigma DAC doesn't usually vary significantly with output level, so the noise is constant. Even if it did, the hiss from the highs is not a problem, because you usually attenuate the top end and boost only the low end, which is a lot less sensitive. If you add resistors after the amp in series with a sensitive CD, you also attenuate hiss. 24 horns at 1 meter, oh wow. As it happens, my FusionAmp is hooked up to a 110 dB/W CD+horn (NSD1095N with XT1086). I do hear the hiss when I'm standing right in front of the horn. But it's not really audible 2 meters away during daytime or when sound is playing. Also, if you're wondering about power on/off thump, it's not a problem even if you had direct wiring to the speaker elements. The sound is a faint click, not a thump.

I promote digital interconnects, because that way you don't lose SNR into the noise floor with attenuated signals. 24 bits is 144 dB of dynamic range, and the processing precision of 48 bits is 288 dB. I think the 24-bit input is padded on both sides to allow higher precision with attenuated signals and to provide headroom for boosting. I think Hypex has these things covered in the design of the DSP software and the analog gain structure.

I'm using a digital preamp (miniDSP SHD) to attenuate the signal before it gets to the FAs, and even with that, the performance is very good, because the precision extends so far. Even if I attenuate to the limit of audible output of my system in my space, -60 dB, the sound is as good as when it's playing at -30 or -20 dB, the latter of which is painfully loud with low-DR material given how my system is tuned. A 16-bit source with -3 dB peaks basically uses all of the 16 bits, and when it is attenuated by 60 dB (10 bits) and put onto a 24-bit bus (SPDIF), the 2 least significant bits get truncated. Again, not a problem, because human hearing cannot perceive differences that large all at once, and it would be the faintest bits that get truncated anyway. You're never going to be listening for 1 dB scale nuances when peak SPL goes to 144 dB. At -30 dB (16+5 bits, which fits within 24), it won't lose any information when transmitted digitally, but it probably would if using analog. I guess this is what you were thinking about, too.
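The bit counting above can be sketched like this, using the usual rule of about 6.02 dB per bit. The function names are my own, and rounding the attenuation up to whole bits is an assumption that matches the "60 dB is 10 bits" shorthand.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB per bit

def dynamic_range_db(bits):
    """Theoretical dynamic range of a fixed-point word of the given width."""
    return bits * DB_PER_BIT

def truncated_bits(source_bits, atten_db, bus_bits):
    """LSBs lost when an attenuated source is sent over a narrower bus."""
    shift_bits = math.ceil(atten_db / DB_PER_BIT)  # attenuation in whole bits
    return max(0, source_bits + shift_bits - bus_bits)

print(f"24 bits: {dynamic_range_db(24):.1f} dB, 48 bits: {dynamic_range_db(48):.1f} dB")
print("lost at -60 dB:", truncated_bits(16, 60, 24))
print("lost at -30 dB:", truncated_bits(16, 30, 24))
```

So a 16-bit source attenuated by 60 dB overflows a 24-bit bus by two bits, while at -30 dB everything still fits.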

Yes, it's a good idea to attenuate rather than boost if you don't have automatic unity gain or otherwise have an inferior product. But, within reason, that is not necessary with FusionAmps.
