Earl,

Since you keep insisting "all the tests that I have done say that they (higher harmonics) are not audible", I feel compelled to point out again that your test used only three drive levels, 14, 20, and 28 volts (12.25 to 50 watts into 4" diaphragm drivers mounted to plane wave tubes). That is only a 6 dB range of drive level, and it produced only about a 6 dB range of distortion, from approximately 10 to 18% at 1 kHz and 18 to 31% at 6 kHz.
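As a quick check of the drive-level arithmetic above, here is a minimal sketch. The 16-ohm load is an assumption, inferred only from the fact that 14 V corresponds to 12.25 W in the post; the function names are illustrative.

```python
import math

# Assumption: a 16-ohm nominal load, inferred from 14 V -> 12.25 W in the post.
ASSUMED_IMPEDANCE_OHMS = 16.0

def level_range_db(v_low, v_high):
    """Span between two drive voltages, in dB."""
    return 20 * math.log10(v_high / v_low)

def power_watts(v_rms, impedance_ohms=ASSUMED_IMPEDANCE_OHMS):
    """Electrical power into a purely resistive nominal load."""
    return v_rms ** 2 / impedance_ohms

for volts in (14.0, 20.0, 28.0):
    print(f"{volts:4.0f} V -> {power_watts(volts):5.2f} W")
print(f"drive range: {level_range_db(14.0, 28.0):.2f} dB")
```

Under that assumption 28 V works out to 49 W, which the post rounds to 50 W, and the 14 V to 28 V span is a doubling of voltage, i.e. about 6 dB.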

Since those levels of distortion were not compared to a low distortion level (like 2.83 volts) or to the reference recording, there would be little chance of anyone telling them apart. The source recording (a live recording of Talking Heads' "Burning Down the House") has plenty of distortion to start with. You have still presented no explanation of how subjective distortion perception results could be derived from that protocol.

I too have always been puzzled by the choice to perform this test at such high drive levels.

12.25 to 50 W into a ~110 dB/W(m) driver produces 121 - 127 dB average SPL at 1 m, or approx. 111 - 117 dB average at a typical 3 m home listening distance.

This is WAY louder than desirable (arguably, even bearable) for extended music listening at home.

A more typical ~85 dB average listening level (still allowing ~105 dB peaks when listening to non-compressed music with, say, a 20 dB crest factor) would require an average electrical drive level of only 0.03 W (or ~0.5 V into 8 Ohm).
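The figures above can be reproduced with a short sketch. It assumes free-field inverse-square spreading (6 dB per doubling of distance) and a resistive 8-ohm load; real rooms and plane wave tubes behave differently, and the function names are illustrative.

```python
import math

def spl_at_distance(sensitivity_db, power_w, distance_m):
    """SPL from sensitivity (dB at 1 W / 1 m), power, and distance.

    Assumes free-field inverse-square spreading.
    """
    return sensitivity_db + 10 * math.log10(power_w) - 20 * math.log10(distance_m)

def power_for_spl(target_spl_db, sensitivity_db, distance_m):
    """Electrical power needed to reach a target SPL at a distance."""
    gain_db = target_spl_db - sensitivity_db + 20 * math.log10(distance_m)
    return 10 ** (gain_db / 10)

def voltage_for_power(power_w, impedance_ohms):
    """RMS voltage delivering power_w into a resistive load."""
    return math.sqrt(power_w * impedance_ohms)

for watts in (12.25, 50.0):
    print(f"{watts:5.2f} W: {spl_at_distance(110, watts, 1):.1f} dB at 1 m, "
          f"{spl_at_distance(110, watts, 3):.1f} dB at 3 m")

needed_w = power_for_spl(85, 110, 3)
print(f"85 dB at 3 m needs {needed_w:.3f} W, "
      f"about {voltage_for_power(needed_w, 8):.2f} V into 8 ohms")
```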

If the test was meant to determine audibility of distortion in real-world (meaningful) conditions, why not perform the test at this sort of level?

And why not use audiophile-grade acoustic and minimally processed source material?

Marco

Your example tweeter was unique; I have never seen this level of distortion in any driver that I use. I would be tempted to call it broken, but without first-hand knowledge of the conditions, I will refrain from that conclusion.

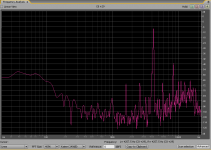

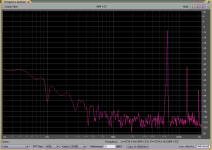

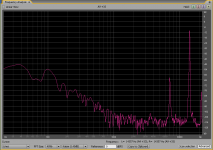

Regarding that tweeter, I have uploaded its sweep response (400Hz - 20kHz) to my page:

http://pmacura.cz/t2030_recorded_sweep.wav

The log sweep is about 10s long and speaker voltage is 1.5Vrms in that test. One can hear the subharmonic components and one can analyze the spectrum.

Some notable time points:

- 6.402s at 4257.5 Hz we can see subharmonic components

- 7.117s at 5646.9 Hz

- 7.451s at 6443.9 Hz

- 7.564s at 6743.6 Hz the record is "clean" with THD <1%

- 9.477s at 14357 Hz pure subharmonic spectral line
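The time points above can be cross-checked against each other. A minimal sketch, assuming the sweep is a pure exponential (log) sweep with a constant rate: it estimates that rate from two of the listed points and predicts the frequency at the other times. The function names are illustrative and not part of any analysis tool.

```python
import math

def sweep_rate(t1, f1, t2, f2):
    """Exponential sweep rate (1/s) from two (time, frequency) points."""
    return math.log(f2 / f1) / (t2 - t1)

def sweep_frequency(t, t_ref, f_ref, rate):
    """Instantaneous frequency at time t, given one reference point."""
    return f_ref * math.exp(rate * (t - t_ref))

# Two points taken from the list above.
T_REF, F_REF = 6.402, 4257.5
rate = sweep_rate(T_REF, F_REF, 7.451, 6443.9)

# Time to cover 400 Hz to 20 kHz at this rate (the post says about 10 s).
duration_s = math.log(20000 / 400) / rate
print(f"rate {rate:.4f} 1/s, full sweep about {duration_s:.2f} s")

for t in (7.117, 7.564, 9.477):
    print(f"{t:.3f} s -> about {sweep_frequency(t, T_REF, F_REF, rate):.0f} Hz")
```

Any listed frequency that falls far from the predicted value, or outside the 400 Hz to 20 kHz sweep range, is worth rechecking against the recording.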

I will post other drivers with a notable 5th harmonic later, but this one is the only type with subharmonics.

turk, I have numbered places in your post that need discussing and are open to empirical observation. An SPL meter or an SPL meter app for a cell phone is handy.

[1] If it's a badly designed or badly made horn this may be so.

well if JBL and EV are considered badly designed horns and compression drivers i really do have to find something better to work with.

not sure what you're on about here i don't think increasing spl makes it worse

[2] But if that is the case, it will sound even worse the more you turn up the SPL and will eventually drive you out of the room.

again not sure what you're on about here never said anything about "too loud"

Why do I say that? Because a well designed and made speaker won't sound "too loud" until you have it playing way over 100 dB at listening position. The sensation of "too loud" is caused by hearing distortion - no sensation of "too loud", no audible distortion.

this may be more of a fletcher/munson hearing response phenomenon but i have noticed that at low levels some horns exhibit "non linear" behavior and that keeping them between minimum (so as to not behave non linear) and maximum (acceptable level long before the onset of driver or amp distortion) appears to make them behave better, more "linear".

[3] This statement is difficult to understand; perhaps you could express it in another way.

if i can get better efficiency/more spl without spending my hard earned money on high priced utility costs why wouldn't i. as a sound contractor if i can save "watt dollars" for the venue they're more likely to continue working with me.

[4] Historically, horns were used to amplify sound by making the connection between the driver diaphragm and the air more efficient, because electrical amplification was difficult and expensive. Electrical amplification is now cheap for homes and other smallish venues, and horns are now also used in them for wave guiding or directivity control. Standards have risen and folk don't like the distorted sound of old fashioned horns.

here is the part of where i have a problem coming to terms with what is being said.

The distorted sound characteristic of horns is caused by refraction of sounds from the sides, from the edge at the mouth/baffle boundary, and reflection from the impedance of the mouth back to the driver diaphragm.

These refractions/reflections are delayed parts of the signal which comes directly out of the horn to the listener. And, although there may be reinforcement and cancellation of some frequencies, no new harmonics are produced; this is an acoustic phenomenon only. This is a linear process.

Acoustical problem requires an acoustical solution.

Earl Geddes has provided some solutions. He showed from first principles that there is a horn wall profile that produces the least amount of refraction/reflection, that rounding over the mouth/baffle boundary produces lower-level refraction, that the driver/horn connection should be as smooth as possible, and that it is possible to diminish the level of the remaining refraction/reflection produced inside the horn by filtering it with open-celled foam.

He wanted to call horns developed through his mathematical formula "waveguides", to distinguish them from the traditional horn developed out of a different equation, but that really didn't happen.

So to answer your question, how much distortion is produced depends on the profile of the waveguide for the coverage angle. It's certainly true that if you want a narrower coverage, the waveguide will be longer.

I'm running out of time. There is a very lengthy thread entitled 'Geddes on Waveguides', in the stickies I think, at the Diyaudio Multiway Forum. You should look at it.

Why do I say that? Because a well designed and made speaker won't sound "too loud" until you have it playing way over 100 dB at listening position. The sensation of "too loud" is caused by hearing distortion - no sensation of "too loud", no audible distortion.

I think that is simplifying the issue a bit, even though I sort of agree with what I think is your message. It's also about program material. A clean speaker playing "distorted music" will of course sound equally loud at a relatively low level.

Also, sustained signals focused in the midrange will definitely sound loud below 100 dB SPL, I'd say.

These refractions/reflections are delayed parts of the signal which comes directly out of the horn to the listener. And, although there may be reinforcement and cancellation of some frequencies, no new harmonics are produced; this is an acoustic phenomenon only. This is a linear process.

Acoustical problem requires an acoustical solution.

Although acoustical phenomena of course can produce harmonic and non-harmonic distortion products.

with respect to horn refraction/reflection no new harmonics are generated but doesn't the level of existing harmonics with respect to the fundamental change? or is that something else i have wrong?

Q: do the 'HOMs and internal reflections' have an analogue in cone speakers? - breakup modes?

No, not really. Cone resonances are not delayed in time; they happen at the same time as the signal. The diffractions (FrankWW, it is diffraction not refraction) and reflections are delayed in time, sometimes by very large amounts. Consider how long a reflection from the mouth would take to travel back to the driver and back out the horn to the mouth again. This is way longer in time than any cone resonance would ever exhibit.
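To put a rough number on the mouth reflection delay described above, here is a minimal sketch. The horn lengths are hypothetical examples, not taken from the thread, and the path is idealized as two straight traversals of the horn axis at 343 m/s.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C

def mouth_reflection_delay_ms(horn_length_m):
    """Extra delay of a mouth reflection relative to the direct sound.

    The reflected wave travels mouth -> driver -> mouth, i.e. two
    additional traversals of the horn (idealized straight path).
    """
    return 2 * horn_length_m / SPEED_OF_SOUND_M_S * 1000

# Hypothetical horn lengths in metres, for illustration only.
for length_m in (0.1, 0.3, 0.6):
    print(f"{length_m:.1f} m horn: about "
          f"{mouth_reflection_delay_ms(length_m):.2f} ms")
```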

If the test was meant to determine audibility of distortion in real-world (meaningful) conditions, why not perform the test at this sort of level?

And why not use audiophile-grade acoustic and minimally processed source material?

Marco

We chose the level on the high side in order to determine the performance at the edge, where we expected NLD to be most pronounced. From the THD levels, this was the case.

In hindsight I would change the source material, but at that time we wanted something akin to the kind of music that would be played over the device - B&C's largest customers are in sound reinforcement. Distortion in the source was never an issue to us because we didn't detect anything unpleasant. I still contend that the distortion-in-the-source claim is inaccurate. No one here knows how much processing, if any, was done on the source material. It is not obvious that any was done.

with respect to horn refraction/reflection no new harmonics are generated but doesn't the level of existing harmonics with respect to the fundamental change? or is that something else i have wrong?

No, this is correct, but it is also linear. The whole discussion here is about what it is in a horn that we hear that we don't like.

I am simply telling you what my 40+ years of doing this has determined. And it is not like I am still looking, I have found the problem and solved it - this is the answer that I found.

Although acoustical phenomena of course can produce harmonic and non-harmonic distortion products.

Acoustical nonlinearity is so rare that I think that it is unlikely that you have ever heard it. The only example that I can think of that I have heard is a space shuttle landing. As it lands there is a sonic boom created well out in space. This boom is a plus, then minus impulsive peak. But the peak moves faster than the dip. Many miles away you will hear two booms separated by a short time delay. To create this kind of nonlinearity requires an enormous sound level, closing in on 160 dB. At 120 dB (where you would lose your hearing if you stayed very long) only a very small % 2nd order is created. Below 120 dB, nothing of any significance is created.
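For a sense of scale, the sketch below converts the SPL figures mentioned above into RMS sound pressure and compares it with static atmospheric pressure. This ratio is only a rough indicator of how hard the air is being driven, not a distortion figure; it uses the standard 20 µPa reference and sea-level pressure of 101325 Pa, and the function names are illustrative.

```python
P_REF_PA = 20e-6             # standard SPL reference pressure (RMS)
P_ATMOSPHERE_PA = 101325.0   # standard sea-level static pressure

def spl_to_pressure_pa(spl_db):
    """RMS sound pressure in pascals for a given SPL in dB."""
    return P_REF_PA * 10 ** (spl_db / 20)

def fraction_of_atmosphere(spl_db):
    """RMS sound pressure as a fraction of static atmospheric pressure."""
    return spl_to_pressure_pa(spl_db) / P_ATMOSPHERE_PA

for spl in (100, 120, 160):
    print(f"{spl} dB SPL: {spl_to_pressure_pa(spl):8.1f} Pa RMS, "
          f"{100 * fraction_of_atmosphere(spl):.4f}% of atmospheric")
```

At 120 dB the pressure swing is about 20 Pa RMS, roughly 0.02% of atmospheric pressure; at 160 dB it is about 2000 Pa, roughly 2%.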

No, not really. Cone resonances are not delayed in time; they happen at the same time as the signal. The diffractions (FrankWW, it is diffraction not refraction) and reflections are delayed in time, sometimes by very large amounts. Consider how long a reflection from the mouth would take to travel back to the driver and back out the horn to the mouth again. This is way longer in time than any cone resonance would ever exhibit.

Hmm.... I think to most people resonance is a phenomenon that stores and releases energy.. "store" and "release" give a hint about the time domain being involved here.. ;-)

That you can find other phenomena with a "longer extension" in time is not a good argument for claiming that a resonance with a shorter life span is without delay.

So, cone resonances are delayed in time and they do not "happen at the same time as the signal"... if "happen at the same time" should be read as "at the same time as force is applied via the voice coil".

Also, at least one significant diffraction path runs directly from the point where the acoustic impedance changes to the point of observation (mic/listener); travel time back to the driver is not a part of that.

Acoustical nonlinearity is so rare that I think that it is unlikely that you have ever heard it. The only example that I can think of that I have heard is a space shuttle landing. As it lands there is a sonic boom created well out in space. This boom is a plus, then minus impulsive peak. But the peak moves faster than the dip. Many miles away you will hear two booms separated by a short time delay. To create this kind of nonlinearity requires an enormous sound level, closing in on 160 dB. At 120 dB (where you would lose your hearing if you stayed very long) only a very small % 2nd order is created. Below 120 dB, nothing of any significance is created.

Rare in your speakers possibly, but not in speakers in general on a global scale.

There are many of them (both speakers and acoustic sources of distortion) and I'm a bit surprised you don't acknowledge that.

Acoustical nonlinearity is so rare that I think that it is unlikely that you have ever heard it.

every time i hear a driver in too small a box suffering from amplitude modulation i can't help but think of it as acoustic non linearity. but i could be wrong.

A resonance starts to respond instantly with the application of the driving force. It builds over time, but this time frame is exceedingly short when compared to the travel time of a wave diffracted or reflected within the horn body, which does nothing at the listening point until this secondary wave arrives. These are dramatically different time scales.

Rare in your speakers possibly, but not in speakers in general on a global scale.

There are many of them (both speakers and acoustic sources of distortion) and I'm a bit surprised you don't acknowledge that.

Because it is simply not true. The only source of acoustic nonlinearity of air is right at the throat of a horn and even this is quite small when compared to other sources of nonlinearity. It takes, as I said, an enormous SPL level to yield an acoustic nonlinearity. I'm surprised that you don't get that.

every time i hear a driver in too small a box suffering from amplitude modulation i can't help but think of it as acoustic non linearity. but i could be wrong.

The nonlinear compliance of a box is minuscule when compared to the nonlinearity of the suspension. For this reason, as the box gets smaller the total system gets more linear, not less.

Because it is simply not true. The only source of acoustic nonlinearity of air is right at the throat of a horn and even this is quite small when compared to other sources of nonlinearity. It takes, as I said, an enormous SPL level to yield an acoustic nonlinearity. I'm surprised that you don't get that.

The nonlinear compliance of a box is minuscule when compared to the nonlinearity of the suspension. For this reason, as the box gets smaller the total system gets more linear, not less.

So which is it, nonexistent or small? You can't have it both ways. You are contradicting yourself.

Nonlinear compliance of air in any closed or semi-closed air volume communicating with the driver... nonlinear air flow in the drivers, in the boxes etc... nonlinearities of Helmholtz designs (nonlinear function of the port and port/driver combo).

Want more?

A resonance starts to respond instantly with the application of the driving force. It builds over time, but this time frame is exceedingly short when compared to the travel time of a wave diffracted or reflected within the horn body, which does nothing at the listening point until this secondary wave arrives. These are dramatically different time scales.

Not at all, there are resonances in acoustic and mechanical systems that have a link between driving force and resonant mechanism. So not necessarily "instantly".

For example.. coupling to a room mode in an adjacent space in a listening set up. This is a non min phase thingy which you can not use a simple EQ to compensate.

Resonance in room walls. The walls cannot possibly start to resonate before the air pressure wave hits them. Time of travel from speaker to wall, you know.

The nonlinear compliance of a box is minuscule when compared to the nonlinearity of the suspension. For this reason, as the box gets smaller the total system gets more linear, not less.

By the way, last week I found a subwoofer having much higher (audibly and measurably) distortion than what I remembered it to have.. I found a very small leak in the box (approx 30 square mm). Sealing this leak reduced 2nd and 3rd harmonic by more than 10 dB and the distortion was not audible anymore.

This leak was nonlinear in itself and, as a consequence, put a nonlinear load on the box compliance. A very real acoustic nonlinearity with effects of significant magnitude.

In the real world there are lots of problems and sources of errors, even if you pretend that's not the case. Throwing a bunch of money at the problem and/or building a bigger system (to reduce or eliminate problems) is seldom a possible solution.

Nonlinear distortion IS a very big factor in speakers, perhaps the absolute biggest.. then comes the room and then linear parameters of the speaker.

Earl,

The Klippel tests are contrived and not real tests of real speakers in situ.

I know our test was rigorous and short of your complaint that the original signal is too distorted to tell any distortion (which you have no proof of), the test was well controlled. If you are trying to get me to agree that my test was all wrong because of the source material, well, that's not going to happen. It is what it is and I'll stand by it.

Weltersys asked:

Have you conducted any comparative tests with multiple individuals that prove linear distortions (such as HOMs) that have a nonlinear perception are perceived as "more horrible" sounding than nonlinear distortion?

I am not sure what this means, I don't think that I ever said it, and of course no tests like that have ever been done on a scientific basis - no one cares.

My question was asked because you have often stated that linear distortions (such as HOMs) are what make horns "sound horrible" (and progressively worse as SPL increases), but I have not seen any data that back up that claim. That said, my experience does concur that distortion sounds worse through "pinched throat" horns than conical or OS expansions, so I agree that HOMs, horn reflections and diffraction are additional problems.

As far as your test goes, the source material is not my primary "complaint". My complaint is that your subjects couldn't compare the three levels of distorted driver output to the source itself. I don't think I could tell the difference between only a 6 dB range of distortion listening to the track you chose, so I'm not questioning the college kids' results. The test protocol simply did not make it possible to make distinctions regarding distortion, other than over a very limited range.

As far as the Klippel test being "contrived and not real tests of real speakers in situ", your test also did not use real speakers in situ; it used recordings of HF drivers on plane wave tubes with no comparison to the source. The fact that the distortion used in the Klippel tests is an emulation of speaker distortion rather than a recording of speaker distortion does not affect perception of distortion, and it is obvious that, given a proper A/B test, it is easy to tell a distorted recording from a clean one.

Your claims that "no one cares" about harmonic distortion ignore the fact that it can be heard and has a negative impact on sound quality which increases with the distortion level. If harmonics could not be heard, one could not tell the difference between an upright bass, a cello or a violin.

Art
