Which raises the question: Over what average period do we hear tonal balance?

I think that my comment led you to this question, which I think really isn't very testable. I will say that we don't hear beats much past a beat frequency of about 10 Hz. The ear is more (or less) than a spectrum analyser, but the signals Klaus provided don't have the same spectrum, so they don't prove that the ear isn't a spectrum analyser.
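
As a minimal illustration of what "beat frequency" means here: by the sum-to-product identity, two sine tones add to a carrier at their mean frequency inside an envelope |2cos(π(f1 - f2)t)|, so the level swells and fades |f1 - f2| times per second. The ~10 Hz audibility limit is the poster's figure and is not tested by this sketch; the function names are illustrative.

```python
import math

def two_tone(t: float, f1: float, f2: float) -> float:
    """Sum of two unit-amplitude sine tones at time t (seconds)."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)

def envelope(t: float, f1: float, f2: float) -> float:
    """Envelope from sin a + sin b = 2 sin((a+b)/2) cos((a-b)/2)."""
    return abs(2 * math.cos(math.pi * (f1 - f2) * t))

def beat_frequency(f1: float, f2: float) -> float:
    """The envelope repeats |f1 - f2| times per second."""
    return abs(f1 - f2)

if __name__ == "__main__":
    f1, f2 = 440.0, 446.0
    print(beat_frequency(f1, f2))  # 6.0 beats per second
    # Check over one second at 48 kHz that the waveform never exceeds its envelope.
    fs = 48_000
    assert all(abs(two_tone(n / fs, f1, f2)) <= envelope(n / fs, f1, f2) + 1e-9
               for n in range(fs))
```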

Describing the human perceptual process as a "pure" FFT sort of procedure doesn't carry much merit: an FFT will identify signals regardless of level and proximity, whereas in the ear there are masking effects going on. That said, the FFT/cosine transform procedures used in perceptual coding seem to work fairly well.
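
To make the contrast concrete, here is a hedged sketch: a plain DFT (no window, no psychoacoustic model) reports a tone 60 dB below a loud neighbour only two bins away just as cleanly as the loud one, with no equivalent of the ear's masking. N = 256 and bins 20 and 22 are arbitrary choices that put both tones exactly on bin centres, so there is no leakage.

```python
import cmath
import math

def dft_magnitudes(x):
    """Naive DFT; returns single-sided sine-amplitude estimates for bins 0..N/2-1."""
    n = len(x)
    return [
        2 * abs(sum(x[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n))) / n
        for m in range(n // 2)
    ]

N = 256
# Loud tone (amplitude 1) in bin 20, quiet tone (amplitude 0.001, i.e. -60 dB) in bin 22.
x = [math.sin(2 * math.pi * 20 * k / N) + 1e-3 * math.sin(2 * math.pi * 22 * k / N)
     for k in range(N)]
mags = dft_magnitudes(x)
level_db = 20 * math.log10(mags[22] / mags[20])
print(f"{level_db:.1f} dB")  # -60.0 dB
```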

Hi guys

I have been pretty busy and have not had much chance to post here lately.

I couldn’t help but toss in a few thoughts and observations on this subject, though, particularly about spatial hearing and a microphone. Keep in mind, this is my personal view as of today, 8-1-2010, and does not reflect the view of anyone except those who agree, haha.

In a way, one needs a wider view to see what phase shows. It is much like being overly concerned with the presence of cancellation notches when the reflected / delayed signals that cause them are actually the greater concern acoustically.

Also, to a very large degree, one needs to interpret what measurements say to understand them.

Consider that one’s eye can turn to look in any direction, X or Y, within its window, but it takes two eyes to judge depth.

In recording, one can capture pressure at one point in space with a single microphone, but it takes two to even begin to capture left / right information. With 4 microphones, one can capture L and R plus height, plus depth.

It takes one more frame of reference than the domains you wish to describe.

You hear in 3d but only have “two data ports”, so how is that and why does that matter?

You “hear” much more than you are aware of. If one placed a teeny tiny microphone inside your ears and did a comprehensive spherical frequency response measurement, one would find that the shape of your ears and head continuously modifies the response and phase depending on angle.

At every point, the response is a little different from the adjacent position and frequency. Growing up, not having any other frame of reference, you have learned to associate all of those changes NOT as changes in response BUT in the way something sounds coming from a given location.

I have been building speakers for commercial sound for a good while now which are an attempt to combine multiple ranges of drivers into something which radiates as a single source.

Regular high output speakers have multiple sources and usually have a great deal of frequency dependent self interference, usually visible on a plot. It was clear to me that, starting from a giant concert array down to a single driver, the size of the speaker system was often inversely correlated with sound quality. The goal was to make a single source that was powerful enough to use commercially.

Phase enters into this because one way to think of it (as Heyser did) is that the acoustic phase of a speaker is like a modulation of its physical position, front to back, in time. Ideally, if one were to combine multiple sources into one, they would all have to be at the same position in time. My solution involved using a constant directivity horn, both for pattern control and for the efficiency advantage, as the products tend to be used in larger spaces.
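
Heyser's picture can be sketched numerically: a pure delay τ has phase φ(f) = -360°·f·τ, so the slope of an unwrapped phase curve gives a group delay, and multiplying by the speed of sound turns that into an equivalent front-to-back offset. This is a minimal finite-difference sketch assuming c = 343 m/s and smooth, unwrapped phase data; the helper names are hypothetical, not from any measurement package.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 °C)

def group_delay_s(f1_hz, phase1_deg, f2_hz, phase2_deg):
    """Finite-difference group delay: tau = -(d phi / d f) / 360, with phi in degrees."""
    return -(phase2_deg - phase1_deg) / (360.0 * (f2_hz - f1_hz))

def equivalent_offset_m(tau_s, c=SPEED_OF_SOUND):
    """Distance sound travels in tau seconds, i.e. the apparent position shift."""
    return c * tau_s

if __name__ == "__main__":
    tau = 1e-4  # a driver sitting 0.1 ms "behind" another
    p1, p2 = -360 * 1000 * tau, -360 * 2000 * tau  # phase of a pure delay at 1 kHz and 2 kHz
    gd = group_delay_s(1000, p1, 2000, p2)
    print(f"{gd * 1e3:.3f} ms -> {equivalent_offset_m(gd) * 100:.2f} cm")  # 0.100 ms -> 3.43 cm
```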

For that approach to work, all the interacting drivers had to be within about a quarter wavelength of each other; in other words, like close-coupled subwoofers, except higher up in frequency and within the horn.
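
The quarter-wavelength rule of thumb is easy to put in numbers: λ = c/f, so the spacing limit is c/(4f), and conversely a given spacing stays within λ/4 only up to c/(4d). A minimal sketch, assuming c = 343 m/s; the helper names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed

def quarter_wavelength_m(freq_hz, c=SPEED_OF_SOUND):
    """Largest driver spacing that stays within lambda/4 at freq_hz."""
    return c / (4.0 * freq_hz)

def max_coherent_freq_hz(spacing_m, c=SPEED_OF_SOUND):
    """Highest frequency at which sources spacing_m apart are within lambda/4."""
    return c / (4.0 * spacing_m)

if __name__ == "__main__":
    for f in (100, 500, 1000, 3000):
        print(f"{f:>5} Hz: drivers within about {quarter_wavelength_m(f) * 100:.1f} cm")
```

At 1 kHz this gives roughly 8.6 cm, which is why the drivers have to share one horn rather than sit side by side on a baffle.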

I will link an explanation of how they work later but the key point was that as they became closer to a single source (over many steps and years) a weird observation became a “flashing light”.

As these got better, if one played a single speaker reproducing voices etc., it became progressively harder to hear how far away it was with your eyes closed. Sure, its direction was clear as day, but gradually the ability to localize its physical depth got smaller and smaller.

Now, with most of our stuff going into large installations, the importance of that effect is not grasped BUT when used in a stereo system, the effect was stunning. Stereo depends on creating a realistic mono phantom image and then “leaning it” to one side or the other. When the loudspeaker is “radiating” strong clues as to where it is, it is competing with the stereo image.

When producing a phantom or stereo image, how much does it “help” to have each speaker broadcast its location? Not at all.

Those clues certainly include edge diffraction but also include the “self interference” between drivers which are too far apart.
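
The "self interference" between spaced drivers can be sketched with two equal, in-phase point sources: off axis, the path difference Δ = d·sin θ puts cancellation notches wherever Δ is an odd number of half wavelengths, f = (2k + 1)·c/(2Δ). This assumes a far-field listener, equal levels and both drivers reproducing the same band (as in an overlap region); the spacing, angle and function names are illustrative.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def path_difference_m(spacing_m, angle_deg):
    """Extra distance to the farther driver for a distant listener angle_deg off axis."""
    return spacing_m * math.sin(math.radians(angle_deg))

def pair_gain(freq_hz, delta_m, c=SPEED_OF_SOUND):
    """|sum| of two equal in-phase sources whose arrivals differ by delta_m (1.0 when aligned)."""
    phase = 2 * math.pi * freq_hz * delta_m / c
    return abs(1 + cmath.exp(-1j * phase)) / 2

def null_frequencies_hz(delta_m, count=3, c=SPEED_OF_SOUND):
    """Cancellation where delta_m is an odd number of half wavelengths."""
    return [(2 * k + 1) * c / (2 * delta_m) for k in range(count)]

if __name__ == "__main__":
    delta = path_difference_m(0.20, 30.0)  # drivers 20 cm apart, listener 30 degrees off axis
    for f in null_frequencies_hz(delta):
        print(f"null near {f:.0f} Hz, gain {pair_gain(f, delta):.1e}")
```

Moving the listener changes Δ and therefore moves every notch, which is one way a spaced pair "radiates" its geometry.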

While one cannot see these things well in an on-axis measurement, one’s 3D hearing ability allows one to clearly identify the speaker’s physical location in addition to the signal’s intended image. THAT, I am sure, is part of the sound of a speaker: how strongly it shouts its own location. This would certainly also have to include other things like Earl Geddes’ “higher order modes”, which would radiate localization clues as well.

Anyway, I had thought what I was hearing was the reduction of time errors; I was using a TEF machine for measurement and let it steer me toward a single source. Being able to reproduce a square wave broadband was a good goal, but it was not what caused what I heard. If you create that kind of phase shift and listen with headphones, you would usually conclude that preserving the input waveshape is a subtle effect.

What I was hearing was not subtle; it was something related to the spatial radiation from the source, a sort of spatial distortion or lack of it (no lobes or nulls). Maybe “source identity” would be a good name. That is in time, or Z, but also in X and Y.

I think this is what one hears with little Fostex full-range drivers on a baffle: a minimum of spatial distortion, a wide-dispersion point source that makes a large image with little clue as to its physical depth.

Understand, I am not saying magnitude and phase and so on are not important, just that I am very sure that there is another part related to how we hear.

Developing these horns over the years I have heard it over and over.

I found a couple of links from a couple of hi-fi people online who heard some of the speakers with this solution somewhere and posted about what they heard:

The bottom of the page on the first link shows a front view of a new smaller speaker, the picture shows the high, mid and low entry points into the horn for the three ranges, then a couple reviews of a larger one.

PSW Sound Reinforcement Forums: Product Reviews: Sound Reinforcement => Danley Review

When used in a hi-fi system:

http://www.diyaudio.com/forums/multi-way/156988-gedlee-summa-vs-lambda-unity-horn-3.html

High Efficiency Speaker Asylum - RE: Danley Sound Labs - endust4237 - June 05, 2008 at 16:06:13

A real review:

Danley Sound Labs SH-50 Loudspeaker

My point is that phase only looks at time/frequency at one location, while you automatically hear from two points and can triangulate spatial differences as well. Phase, or any single-point measurement, may or may not show the feature you seek, depending on the complexity of the radiation pattern. Speakers radiate a 3D balloon whose shape changes with frequency, and it is probable that the things I speak of are present in it, if we knew what to look for.
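
For a sense of scale of that two-ear "triangulation", the classic Woodworth spherical-head approximation gives the interaural time difference for a distant source at azimuth θ as ITD ≈ (a/c)(θ + sin θ). The head radius a = 8.75 cm and c = 343 m/s below are assumed typical values, and a real head with pinnae departs from a sphere; this is a sketch of the timing cue only, not an HRTF model.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s, assumed

def woodworth_itd_s(azimuth_deg, a=HEAD_RADIUS_M, c=SPEED_OF_SOUND):
    """Spherical-head ITD for a distant source; valid for 0..90 degrees azimuth."""
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (a / c) * (theta + math.sin(theta))

if __name__ == "__main__":
    for az in (0, 10, 30, 60, 90):
        print(f"{az:>2} deg: {woodworth_itd_s(az) * 1e6:.0f} us")
```

The full range is well under a millisecond, so spatial cues live in timing differences far smaller than most people expect.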

Anyway, about halfway down in the link below is an explanation of the Synergy horn’s acoustic operation:

http://www.danleysoundlabs.com/pdf/danley_tapped.pdf

Best,

Tom Danley

The problem with the word phase is that it can be interpreted a few different ways depending on context. Here are three off the top of my head:

Intrinsic amplitude/phase of a transducer or system.

Polarity of a signal

Interaural time delay

Now Mr. Danley has added the head-related transfer function (HRTF) to the mix, perhaps since we are beginning to get into perceptual arguments.

In reading the original poster's question, I would interpret it as having the first meaning; others have not.

A quick search resulted in this:

http://www.diyaudio.com/forums/multi-way/166411-measurements-when-what-how-why-14.html#post2184142

http://www.diyaudio.com/forums/multi-way/5699-cant-reproduce-square-wave-14.html#post1973245

Mr. Geddes has other comments on phase, but I can't find them at the moment.

The second link is perhaps an interesting thread for the waveshape camp.

I will say that I agree with Mr. Geddes that phase is essentially irrelevant, except in how it affects amplitude (in the summing of crossovers, for example). Danley's example of being concerned about notches more than the reflections that cause them, and of understanding what the measurements are telling you, is apropos.

Hi Ron

“The problem with the word phase is that it can be interpreted a few different ways depending on context”

Absolutely! That was part of what I was trying to say: one needs to see the context of even the measurement.

I don’t think people need to know “head acoustics”; they have lived with it and nothing else. Rather, they need to think of sound as a 3D thing: we hear in 3D, but we can only measure in 1D.

I scanned some pages of the measurement thread. It had some interesting parts but was a bit of a can of worms; I guess I am glad I was busy then, or I would have gotten sucked in.

I also scanned the “Can’t reproduce a square wave” thread a bit too.

Among many things, it was said there that at best, one could only produce a square wave at one position due to the differing driver distances.

While that is normally true (speakers produce lobes and nulls at the crossover), it is not always true. All one has to do is place the drivers close enough together (about a quarter wavelength or less) that they combine and radiate coherently, equally in all directions, and then (with an acoustic magic wand) have the individual phase responses sum to act like one source in time.

That is where phase might enter too: if you model X amount of phase shift electronically and listen with headphones, that may well reflect the exact measured response at one point in space, but it might not emulate the radiated effect of the spatially separated sources.

I found a hi-fi speaker company that has discovered this same 3D thing (I think).

It’s not constant directivity and wouldn’t preserve waveshape, but it does come closer to radiating as a broadband simple point source.

http://www.kef.com/resources/conceptblade/_brochures/Concept_Blade_Brochure_en.pdf

Anyway, you can produce a square wave and do it over a relatively broad band and essentially anywhere in the pattern.

http://www.diyaudio.com/forums/multi-way/71824-making-square-waves.html

Best,

Tom Danley

Ron, were you on the old bass list?

Hello David

I am having a bit of trouble understanding what transient information is being discarded. Could you please clarify?

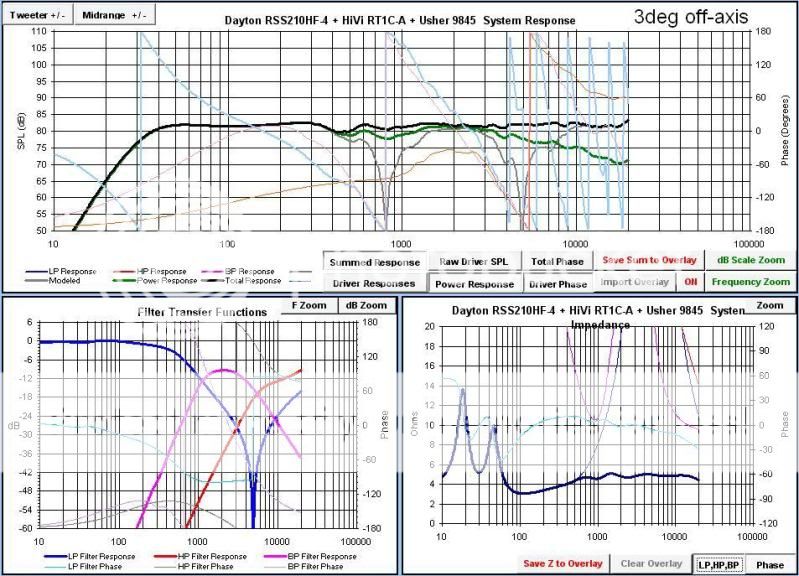

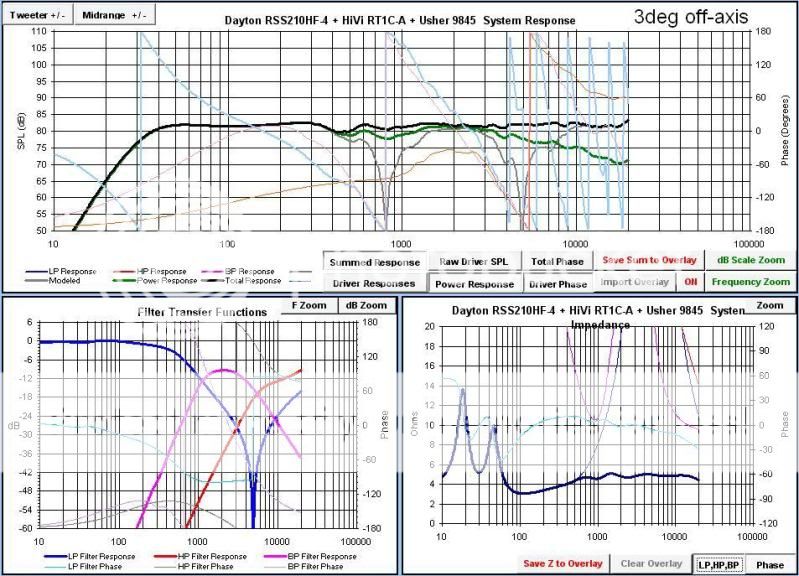

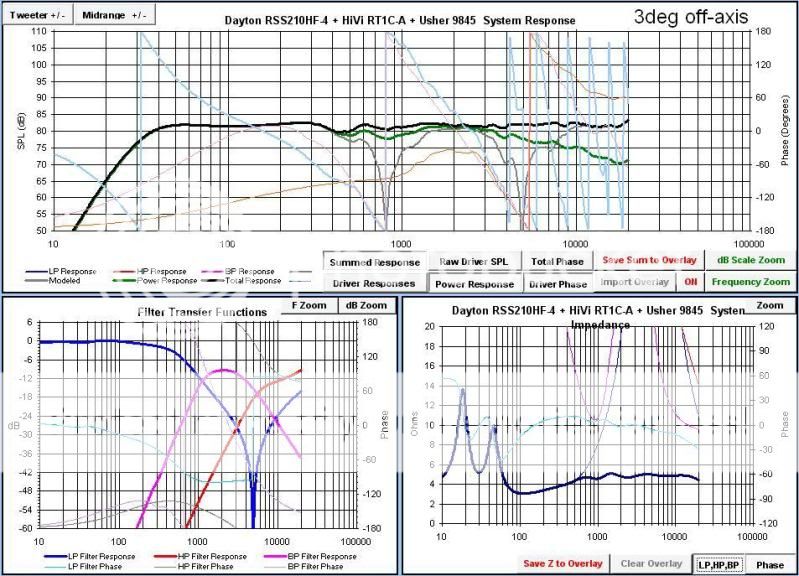

Here is a measurement of a clone system I built using 24 dB acoustic slopes (the speaker in my avatar). Looking at the measured response, it meets the +/-25 deg from 80 Hz to 18 kHz requirement. Would you classify this as a linear phase design?

Rob

Rob, the impedance phase graph you provide has no relevance to the frequency response and acoustic phase.

Regarding transient performance, I refer you to Thiel's white paper on Coherent Source Loudspeakers

http://www.thielaudio.com/THIEL_Site05/PDF_files/PDF_tech_papers/techpaper_general2.pdf

A couple of excerpts:

TIME RESPONSE

In most loudspeakers the sound from each driver reaches the listener at different times. This causes the loss of much spatial information. Different arrival times from each driver cause the only locational clues to be the relative loudness of each speaker. Relying only on loudness information causes the sound stage to exist only between the speakers. In contrast to this loudness type of imaging information, the ear–brain interprets real life sounds by using timing information to locate the position of a sound. The ear perceives a natural sound as coming from the left mainly because the left ear hears it first. That it may also sound louder to the left ear is of secondary importance. Therefore, preserving accurate arrival times allows your ear–brain to interpret location of sounds in its normal, natural way and provides realistic imaging.

Another problem caused by separate arrival times from each driver is that the attack, or start, of every sound is no longer clearly focused in time as it should be. Because more than one driver is involved in the reproduction of the several harmonics of any single sound, the drivers must be heard in unison to preserve the structure of the sound. Since, in most speakers, the tweeter is closer to the listener’s ear, the initial attack of the upper harmonics can arrive up to a millisecond before the body of the sound. This delay results in a noticeable reduction in the realism of the reproduced sound.

To eliminate both these problems THIEL speakers use one of, or a combination of, two techniques. In some cases drivers are mounted coincidently (not merely coaxially) and/or drivers are mounted on a sloped baffle. In either case, the positioning ensures that the sound from each driver reaches the listener at the same time. The sloping baffle arrangement can work perfectly for only one listening position. However, because the drivers are positioned in a vertical line the error introduced by a listener to the side of the speaker is very small. Also, because the driver spacing is not more than the approximate wavelength of the crossover frequency, the error introduced by changes in listener height are small within the range of normal seated listening heights provided the listener is 8 feet or more from the speakers.

Coincident mounting ensures that the sound from the two drivers reaches the listener at exactly the same time, regardless of listener (or speaker) position.

PHASE RESPONSE

We use the trade mark Coherent Source to describe the unusual technical performance of time and phase coherence which gives THIEL products the unusual ability to accurately reproduce musical waveforms.

Usually, phase shifts are introduced by the crossover slopes, which change the musical waveform and result in the loss of spatial and transient information. The fourth order Linkwitz-Riley crossover is sometimes promoted as being phase correct. What is actually meant is that the two drivers are in phase with each other through the crossover region. However, in the crossover region neither driver is in phase with the input signal nor with the drivers’ output at other frequencies; there is a complete 360° phase rotation at each crossover point.
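
A minimal sketch (not from the Thiel paper) that checks the Linkwitz-Riley statement with the textbook normalized transfer functions, crossover at ω = 1 rad/s: LR4 lowpass 1/(s² + √2·s + 1)² and highpass s⁴/(s² + √2·s + 1)². Their sum works out to the all-pass (s² - √2·s + 1)/(s² + √2·s + 1): flat magnitude, but a phase that rotates through 360° overall. At the crossover each section sits at -6 dB, in phase with the other but 180° from the input. Only Python's built-in complex arithmetic is used.

```python
import cmath
import math

def lr4_lowpass(w):
    """Normalized 4th-order Linkwitz-Riley lowpass (Butterworth-2 squared) at s = jw."""
    s = 1j * w
    return 1 / (s * s + math.sqrt(2) * s + 1) ** 2

def lr4_highpass(w):
    """Normalized 4th-order Linkwitz-Riley highpass at s = jw."""
    s = 1j * w
    return s ** 4 / (s * s + math.sqrt(2) * s + 1) ** 2

if __name__ == "__main__":
    for w in (0.1, 0.5, 1.0, 2.0, 10.0):
        lp, hp = lr4_lowpass(w), lr4_highpass(w)
        total = lp + hp
        print(f"w={w:<4} |sum|={abs(total):.6f}  sum phase={math.degrees(cmath.phase(total)):7.1f} deg"
              f"  LP vs HP={math.degrees(cmath.phase(lp / hp)):6.1f} deg")
```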

Since 1978 THIEL has employed first order (6dB/octave) crossover systems in all our Coherent Source speaker systems. A first order system is the only type that can achieve perfect phase coherence, no time smear, uniform frequency response, and uniform power response.

A first order system achieves its perfect (in principle) results by keeping the phase shift of each roll-off less than 90° so that it can be canceled by the roll-off of the other driver that has an identical phase shift in the opposite direction. (Phase shifts greater than 90° cannot be canceled.) The phase shift is kept low by using very gradual (6dB/octave) roll-off slopes which produce a phase lag of 45° for the low frequency driver and a phase lead of 45° for the high frequency driver at the crossover point. Because the phase shift of each driver is much less than 90° and is equal and opposite, their outputs combine to produce a system output with no phase shift and perfect transient response.
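
The first-order argument can be checked the same way (again an editor's sketch, not Thiel's code). With normalized sections 1/(1 + s) and s/(1 + s), the sum is exactly 1 at every frequency, and at the crossover the two outputs sit at -3 dB with -45° and +45° of phase, as the excerpt says. In the time domain their step responses, 1 - e^(-t) and e^(-t), add back to the input step. This idealization assumes the acoustic outputs themselves follow these first-order curves, which the excerpt goes on to note is hard to achieve in practice.

```python
import cmath
import math

def first_order_lowpass(w):
    s = 1j * w
    return 1 / (1 + s)

def first_order_highpass(w):
    s = 1j * w
    return s / (1 + s)

def step_lowpass(t):
    """Step response of 1/(1+s): rises as 1 - exp(-t)."""
    return 1 - math.exp(-t)

def step_highpass(t):
    """Step response of s/(1+s): jumps to 1 and decays as exp(-t)."""
    return math.exp(-t)

if __name__ == "__main__":
    lp, hp = first_order_lowpass(1.0), first_order_highpass(1.0)
    print(f"LP {20 * math.log10(abs(lp)):.2f} dB, {math.degrees(cmath.phase(lp)):.1f} deg")
    print(f"HP {20 * math.log10(abs(hp)):.2f} dB, {math.degrees(cmath.phase(hp)):.1f} deg")
    for t in (0.0, 0.5, 1.0, 3.0):
        print(f"t={t}: woofer {step_lowpass(t):.3f} + tweeter {step_highpass(t):.3f} = "
              f"{step_lowpass(t) + step_highpass(t):.3f}")
```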

Figure 4 graphically demonstrates how the outputs of each driver in a two-way speaker system combine to produce the system’s output to a step input. The first graph shows the ideal output. The second shows the operation of a time-corrected, fourth order crossover system. The two drivers produce their output in the same polarity and both drivers start responding at the same time. However, since the high-slope network produces a large amount of phase shift, the tweeter’s output falls too quickly and the woofer’s output increases too gradually. Therefore, the two outputs do not combine to produce the input step signal well but instead greatly alter the waveform. The third graph shows how, in a first order crossover system, the outputs of the two drivers combine to reproduce the input waveform without alteration.

In practice, the proper execution of a first order system requires very high quality, wide bandwidth drivers and that the impedance and response variations of the drivers be compensated across a wide range of frequencies. This task is complex since what is necessary is that the acoustic driver outputs roll off at 6 dB/octave and not simply for the networks themselves to roll off at 6 dB/octave. For example, if a typical tweeter with a low frequency roll-off of 12 dB/octave is combined with a 6 dB/octave network, the resulting acoustical output will roll off at 18 dB/octave. Therefore, in practice, the required network circuits are much more complex than might be thought.
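
The 12 + 6 = 18 dB/octave arithmetic follows because cascaded responses multiply, so their dB values add. A hedged sketch: the tweeter's own low-frequency rolloff is modelled as a second-order Butterworth highpass and the network as a first-order highpass, both normalized to the same corner (real tweeters and corner frequencies will differ). Far below the corner the slopes come out as 20·log10 of 4, 2 and 8, i.e. about 12.04, 6.02 and 18.06 dB per octave.

```python
import math

def tweeter_rolloff(w):
    """Assumed 2nd-order (12 dB/octave) acoustic highpass of the bare tweeter."""
    s = 1j * w
    return s * s / (s * s + math.sqrt(2) * s + 1)

def network_highpass(w):
    """1st-order (6 dB/octave) electrical highpass."""
    s = 1j * w
    return s / (s + 1)

def acoustic(w):
    """Acoustic output = driver response times network response."""
    return tweeter_rolloff(w) * network_highpass(w)

def slope_db_per_octave(h, w):
    """Level change going from w/2 up to w."""
    return 20 * math.log10(abs(h(w)) / abs(h(w / 2)))

if __name__ == "__main__":
    w = 0.01  # two decades below the corner
    for name, h in (("tweeter", tweeter_rolloff), ("network", network_highpass), ("acoustic", acoustic)):
        print(f"{name:>8}: {slope_db_per_octave(h, w):5.1f} dB/octave")
```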

The result of phase coherence (in conjunction with time coherence) is that all waveforms will be reproduced without major alterations. The speaker’s reproduction of a step waveform demonstrates this fact since, like musical waveforms, a step is made up of many frequencies which have precise amplitude and phase relationships. For a step signal to be accurately reproduced, phase, time and amplitude response must all be accurate. Because this waveform is so valuable, it is commonly used to evaluate the performance of electronic components. It is not typically used for speaker evaluation because most speakers are not able to reproduce it recognizably.

Yep, I was there ~10-12 years ago. After it left the Utexas "mcfeeley" server it somehow became less. There were supposedly some 7000 members, but that collapsed to a few hundred after the switch. I don't think the membership dropped so much as the people who couldn't figure out how to get off the list were finally off.

Regards,

Ron

One factor that gets lost in the shuffle, partly because it doesn't have a sexy name like "Time Align", is the inter-driver phase relationship through the one- or two-octave crossover region. This isn't phase relative to the electrical source, but the phase angle between the high- and lowpass drivers. This type of phase has direct consequences for non-coincident drivers, since it steers the polar pattern. I also believe it is directly audible as non-coherence, as a single source splitting into two, and it is a major problem in the high-end speaker business.

I try to aim for phase divergence between the drivers of no more than 20 degrees, and ideally 5 degrees or less. This can be confirmed by temporarily reverse-phasing one of the drivers and seeing how deep the null is: I aim for 25 to 30 dB nulls, which implies (through vector math) a phase match of roughly 5 degrees or better. If reverse-phasing one of the drivers makes no difference at all, then your drivers have a 90 degree phase angle between them. If the crossover region actually goes up, then the drivers can be 120 degrees or more apart, a very undesirable condition.
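
The "vector math" behind the reverse-polarity test can be sketched as follows, assuming both drivers arrive at equal level in the crossover region. With a phase difference φ, the normal sum has magnitude 2·cos(φ/2) and the reversed sum 2·sin(φ/2), so the null depth relative to normal polarity is 20·log10(tan(φ/2)). That gives about -27 dB at 5°, 0 dB (no change) at 90° and about +4.8 dB (the region goes up) at 120°; a 25 dB null corresponds to about 6.4° and a 30 dB null to about 3.6°. Unequal driver levels make the null shallower for the same phase error; the function names are illustrative.

```python
import cmath
import math

def null_depth_db(phase_deg):
    """Reversed-polarity level relative to normal polarity, for equal-level drivers."""
    d = cmath.exp(1j * math.radians(phase_deg))
    return 20 * math.log10(abs(1 - d) / abs(1 + d))

def phase_from_null_db(depth_db):
    """Inverse: phase mismatch implied by a measured null depth (negative dB)."""
    return math.degrees(2 * math.atan(10 ** (depth_db / 20)))

if __name__ == "__main__":
    for phi in (5, 10, 20, 90, 120):
        print(f"{phi:>3} deg mismatch -> {null_depth_db(phi):+6.1f} dB when one driver is reversed")
    for depth in (-25, -30):
        print(f"{depth} dB null -> about {phase_from_null_db(depth):.1f} deg")
```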

The best subjective way to audition for phase coherence (in the inter-driver sense) during development is to walk up close to the speaker, place the cabinet on its side if necessary, and drive the speaker system with a pink-noise stimulus at a moderate level. As phase coherence improves, the apparent source changes from two drivers, to a blur between the two, to a single virtual source centered between them. The single-source illusion requires 10 degrees or better phase coherence over at least an octave of the crossover region.

Where many, if not most, crossovers fail is in sloppy control of phase angles over the entire crossover region, not just at the exact crossover frequency itself. For example, many dome tweeters have a small 1~2 dB bump in their response a half-octave below the crossover, and this causes the inter-driver phase angle to spread apart in the "bump" region. Even though this has only a very minor impact on the overall frequency response, and is unlikely to be noticed by a magazine reviewer, the rapid phase deviation (between drivers) in the one-octave region is audible as incoherence, as a disjointed, disintegrated quality to the sound. And it's all caused by not paying attention to that little bump in the tweeter's response in the band-reject region.
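
A rough way to see why a 1~2 dB bump matters for the inter-driver phase: if the bump is minimum-phase, it can be modelled as a peaking filter. Using the analog peaking prototype from Robert Bristow-Johnson's "Audio EQ Cookbook", H(s) = (s² + (A/Q)·s + 1)/(s² + s/(A·Q) + 1) with A = 10^(gain/40), the largest phase excursion works out to 2·arctan(A) - 90°, independent of Q: roughly 3.3° for a 1 dB bump and 6.6° for 2 dB, comparable to the 5 degree target mentioned earlier in this post. This is a sketch under those modelling assumptions; a real tweeter's deviation will differ in detail.

```python
import cmath
import math

def peaking(w, gain_db, q):
    """Analog peaking prototype (cookbook form), center frequency normalized to w = 1."""
    a = 10 ** (gain_db / 40)
    s = 1j * w
    return (s * s + s * a / q + 1) / (s * s + s / (a * q) + 1)

def max_phase_deviation_deg(gain_db, q, points=20001):
    """Sweep w log-spaced from 0.01 to 100 and return the largest |phase| in degrees."""
    best = 0.0
    for i in range(points):
        w = 10 ** (-2 + 4 * i / (points - 1))
        best = max(best, abs(math.degrees(cmath.phase(peaking(w, gain_db, q)))))
    return best

def closed_form_deg(gain_db):
    """Peak phase of the prototype: 2*atan(A) - 90 degrees, independent of Q."""
    return 2 * math.degrees(math.atan(10 ** (gain_db / 40))) - 90

if __name__ == "__main__":
    for gain in (1.0, 2.0):
        for q in (1.0, 3.0):
            print(f"{gain} dB bump, Q={q}: peak phase {max_phase_deviation_deg(gain, q):.2f} deg "
                  f"(closed form {closed_form_deg(gain):.2f} deg)")
```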

Correct that bump, using a small notch filter or mechanical modification of the tweeter, and the speaker sounds far better, while the overall response curve shows little change. When the inter-driver phase problem is corrected, the sensation of a single driver becomes apparent. There's also a significant improvement in the timbre of speaking or singing voices.

But this care in design is surprisingly rare in the high-end world, resulting in $50,000 to $100,000 speaker systems that sound incoherent and disjointed, with peculiar tonal colorations and spatial problems.

The same applies to compensating for driver breakups from the midrange or midbass driver. If these are not attended to, the upper portion of the crossover region can sound harsh, despite no apparent indication on the FR curve. If the rolloff region is smooth and phase-controlled, then the system has a "single-driver" sound. But with the current fad for drivers with rough breakup regions (Kevlar, ceramic, metal, etc.), and low-slope crossovers, this is rarely met in contemporary loudspeakers.

Incoherent crossovers in coincident drivers is an interesting separate topic. Certainly, a coincident driver allows more freedom in the crossover design, since the polar-pattern steering problem goes away, and phase angle deviations between drivers will be masked by physical proximity. But I still suspect phase-matching drivers, and carefully controlling the rolloff regions, will have benefits, just not as obviously as a non-coincident system. The test protocol of temporarily reverse-phasing one of the drivers and looking for the depth of the null would still work, and provide an indication of inter-driver coherence.

I try to aim for a phase divergence between the drivers of no more than 20 degrees, and ideally 5 degrees or less. This can be confirmed by temporarily reverse-phasing one of the drivers and seeing how deep the null is: I aim for 25 to 30 dB nulls, which implies (through vector math) a phase match of about 5 degrees or better. If reverse-phasing one of the drivers makes no difference at all, then your drivers have a 90 degree phase angle between them. If the level in the crossover region actually goes up, then the drivers are more than 90 degrees apart, possibly 120 degrees or more, a very undesirable condition.
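The vector math behind the null test can be sketched as below. It assumes two drivers of equal amplitude at the frequency being checked (roughly true near the crossover point, less so away from it) that differ only in phase; the function names are illustrative and not from any measurement package.

```python
import math


def null_depth_db(phase_deg: float) -> float:
    """Normal-polarity sum relative to reversed-polarity sum, in dB.

    Assumes two equal-amplitude drivers whose outputs differ in phase by
    phase_deg. Positive: reversing one driver produces a null this deep.
    About 0 dB: reversing makes no difference (drivers 90 degrees apart).
    Negative: the reversed sum is louder (drivers more than 90 degrees apart).
    """
    half = math.radians(phase_deg) / 2.0
    normal_sum = abs(2.0 * math.cos(half))    # |1 + e^(j*phi)|
    reversed_sum = abs(2.0 * math.sin(half))  # |1 - e^(j*phi)|
    if reversed_sum == 0.0:
        return math.inf  # perfect phase match: infinitely deep null
    return 20.0 * math.log10(normal_sum / reversed_sum)


def phase_from_null_db(depth_db: float) -> float:
    """Inverse of null_depth_db: phase difference (degrees) implied by a null depth."""
    return math.degrees(2.0 * math.atan(10.0 ** (-depth_db / 20.0)))


if __name__ == "__main__":
    for phase in (5, 10, 20, 90, 120):
        print(f"{phase:>4} deg apart -> null depth {null_depth_db(phase):6.1f} dB")
    for depth in (25, 30):
        print(f"{depth} dB null -> about {phase_from_null_db(depth):.1f} deg")
```

Under these assumptions a 25 dB null corresponds to about 6.4 degrees and a 30 dB null to about 3.6 degrees, while 10 degrees gives roughly a 21 dB null and 20 degrees roughly 15 dB.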

The best subjective way to audition for phase coherence (in the inter-driver sense) during development is to walk up close to the speaker, place the cabinet on its side if necessary, and drive the speaker system with pink noise at a moderate level. As phase coherence improves, the apparent source changes from two drivers, to a blur between the two, to a single virtual source centered between them. The single-source illusion requires phase coherence of 10 degrees or better over at least an octave of the crossover region.

Where many, if not most, crossovers fail is in sloppy control of phase angles over the entire crossover region, not just at the exact crossover frequency itself. For example, many dome tweeters have a small 1 to 2 dB bump in their response a half-octave below the crossover, and this causes the inter-driver phase angle to spread apart in the "bump" region. Even though this has only a very minor impact on overall frequency response, and is unlikely to be noticed by a magazine reviewer, the rapid phase deviation between the drivers in this one-octave region is audible as incoherence, a disjointed, disintegrated quality to the sound. And it's all caused by not paying attention to that little bump in the tweeter's response in the band-reject region.

Correct that bump, using a small notch filter or a mechanical modification of the tweeter, and the speaker sounds far better, while the overall response curve shows little change. When the inter-driver phase problem is corrected, the sensation of a single driver becomes apparent. There's also a significant improvement in the timbre of speaking and singing voices.
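A minimal sketch of why a small bump matters for phase, assuming the bump is minimum-phase and can be modeled as a peaking filter (here the Audio EQ Cookbook peaking biquad at an assumed 48 kHz sample rate). The 2.1 kHz center, +1.5 dB gain and Q of 2 are hypothetical values standing in for "a 1 to 2 dB bump a half-octave below" a 3 kHz crossover. The sketch shows that the bump carries a phase wiggle around it, and that a matching notch (the same filter with its gain negated) cancels both magnitude and phase.

```python
import cmath
import math

FS = 48000.0  # sample rate in Hz (assumed for the digital model)


def peaking_biquad(f0, gain_db, q, fs=FS):
    """Audio EQ Cookbook peaking filter; returns (b, a) normalized so a[0] == 1."""
    big_a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b = [1.0 + alpha * big_a, -2.0 * cos_w0, 1.0 - alpha * big_a]
    a = [1.0 + alpha / big_a, -2.0 * cos_w0, 1.0 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def response(filters, f, fs=FS):
    """Complex response of a cascade of biquads at frequency f (Hz)."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    h = 1.0 + 0.0j
    for b, a in filters:
        num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
        den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
        h *= num / den
    return h


def level_db(h):
    return 20.0 * math.log10(abs(h))


def phase_deg(h):
    return math.degrees(cmath.phase(h))


if __name__ == "__main__":
    bump = peaking_biquad(2100.0, +1.5, 2.0)   # hypothetical tweeter bump
    notch = peaking_biquad(2100.0, -1.5, 2.0)  # hypothetical matching correction
    for f in (1200.0, 1700.0, 2100.0, 2600.0, 3000.0):
        h = response([bump], f)
        fixed = response([bump, notch], f)
        print(f"{f:6.0f} Hz  bump {level_db(h):+.2f} dB {phase_deg(h):+.2f} deg"
              f"  corrected {level_db(fixed):+.2f} dB {phase_deg(fixed):+.2f} deg")
```

For a +1.5 dB bump modeled this way, the peak phase deviation works out to roughly 5 degrees on either side of the center, the same order as the 5 degree target discussed above, while the level change is small enough to be easy to overlook on a response plot.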

But this care in design is surprisingly rare in the high-end world, resulting in $50,000 to $100,000 speaker systems that sound incoherent and disjointed, with peculiar tonal colorations and spatial problems.

The same applies to compensating for breakups in the midrange or midbass driver. If these are not attended to, the upper portion of the crossover region can sound harsh, despite no apparent indication on the FR curve. If the rolloff region is smooth and phase-controlled, then the system has a "single-driver" sound. But with the current fad for drivers with rough breakup regions (Kevlar, ceramic, metal, etc.) and low-slope crossovers, this condition is rarely met in contemporary loudspeakers.

Incoherent crossovers in coincident drivers are an interesting separate topic. Certainly, a coincident driver allows more freedom in crossover design, since the polar-pattern steering problem goes away, and phase-angle deviations between the drivers will be masked by their physical proximity. But I still suspect that phase-matching the drivers, and carefully controlling the rolloff regions, will have benefits, just less obvious ones than in a non-coincident system. The test protocol of temporarily reverse-phasing one of the drivers and looking at the depth of the null would still work, and would provide an indication of inter-driver coherence.

Lynn and Danley, thanks for the posts!!

Lynn, I will have to try that speaker listening test on my next build, thanks!

Wouldn't all this discussion point to the fact that something like the DEQX is worth more than its price tag in terms of removing any phase issues that exist?

To further the subject a bit...

You have other methods of designing a xover that have different phase characteristics. There are flat-power-response and transient-perfect designs, neither of which is linear (coherent) phase.

It depends on whether you're willing to give up linear phase to achieve something else.

Oh, and you can't actually hear phase itself. You can hear time alignment, or other things that relate to phase, but not phase itself. You can hear absolute phase, though, and that is definitely different. I heard an example of that on a set of BESL Systm 5's at Bamberg's place.

Then there are the different kinds of phase:

- Acoustic phase
- Impedance phase
- (and probably even a time-domain phase)

Acoustic phase is what we look at when aligning drivers for linear phase through a xover. Impedance phase is mainly just the capacitive/inductive swings of the design's impedance.

To have a linear-phase design, the acoustic phase must be a virtual overlay from driver to driver, almost like a relay race. A stepped baffle lends itself to any slope and still lets the drivers be phase-aligned easily. When you have a Z-offset, with the drivers radiating from different vertical planes, you have to additionally shift the acoustic phase of one driver to align it with the next. This is typically done by using an additional xover order on one of the drivers (usually the tweeter, but either works), in effect giving asymmetrical xover slopes.
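How much phase a Z-offset costs can be worked out from the path-length difference alone. This sketch assumes a speed of sound of 343 m/s and a purely geometric offset between acoustic centers; the 25 mm figure is hypothetical, not taken from any design in this thread.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate, air at about 20 C (assumed)


def z_offset_delay_s(offset_m):
    """Arrival-time difference caused by a front-to-back acoustic-center offset."""
    return offset_m / SPEED_OF_SOUND_M_S


def z_offset_phase_deg(offset_m, freq_hz):
    """Extra phase lag (degrees) the offset adds at freq_hz: 360 * f * delay."""
    return 360.0 * freq_hz * z_offset_delay_s(offset_m)


if __name__ == "__main__":
    offset = 0.025  # hypothetical 25 mm difference between acoustic centers
    print(f"delay: {z_offset_delay_s(offset) * 1e6:.1f} us")
    for fc in (300.0, 3000.0):
        print(f"at {fc:.0f} Hz: {z_offset_phase_deg(offset, fc):.1f} deg")
```

The same 25 mm offset costs under 8 degrees at a 300 Hz crossover but almost 79 degrees at 3 kHz, which is one way to see why the offset has to be compensated with extra phase shift on one driver when the crossover is high.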

This is the best example of a linear-phase system that I have modeled, namely my "Attitudes" design. (You heard this one, Panomaniac!)

Essentially: 3rd-order HP on the tweeter, 2nd-order LP and 3rd-order HP on the mid, and 2nd-order LP on the woofer. I did use an LCR at the input to flatten the impedance magnitude around 3 kHz, but otherwise, this is how it went.
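To see why mixing orders shifts the relative phase, here is a sketch that evaluates textbook analog Butterworth low-pass and high-pass responses at a shared corner frequency. The post doesn't state the filter alignments, component values or crossover points of the "Attitudes" design, and the real acoustic result also includes the drivers' own roll-offs and the Z-offset, so the Butterworth alignment and the 3 kHz corner here are assumptions; this only illustrates the electrical phase offset that asymmetric orders create.

```python
import cmath
import math


def butterworth(order, f, fc, highpass=False):
    """Complex response of an analog Butterworth filter (assumed alignment)."""
    s = 1j * f / fc  # frequency normalized to the corner
    den = 1.0 + 0.0j
    for k in range(1, order + 1):
        # Left-half-plane Butterworth poles on the unit circle
        pole = cmath.exp(1j * math.pi * (2 * k + order - 1) / (2 * order))
        den *= s - pole
    num = s ** order if highpass else 1.0
    return num / den


def phase_deg(h):
    return math.degrees(cmath.phase(h))


def wrap_deg(angle):
    """Wrap an angle into the range (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped


if __name__ == "__main__":
    fc = 3000.0  # hypothetical mid/tweeter crossover frequency
    tweeter_hp3 = butterworth(3, fc, fc, highpass=True)
    mid_lp2 = butterworth(2, fc, fc)
    print(f"tweeter 3rd-order HP: {phase_deg(tweeter_hp3):+.1f} deg")
    print(f"mid 2nd-order LP:     {phase_deg(mid_lp2):+.1f} deg")
    diff = wrap_deg(phase_deg(tweeter_hp3) - phase_deg(mid_lp2))
    print(f"difference:           {diff:+.1f} deg")
```

With these assumed alignments the tweeter branch sits at +135 degrees and the mid branch at -90 degrees at the corner, a 225 degree (equivalently -135 degree) offset, whereas symmetric orders would give a fixed 180 degree (even orders) or 90 degree (odd orders) offset. That extra freedom is what a designer can use to absorb an acoustic delay.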

It seems like a lot of this thread has been spent arguing over what is preferable instead of laying out the details of the issue and how to achieve it.

Yes, full-range (FR) drivers are inherently minimum phase, as they typically have no xover phase shifts.

Oh, and a linear acoustic phase is more important from mid to tweeter, since the wavelengths are shorter and easier to discern. The lower the xover frequency, the less of a concern time of arrival at the listener becomes.

Later,

Wolf

I suspect, from first principles about neurons, that phase is more audible the _lower_ one goes, against received audiophile wisdom (which holds that phase is critical between mid and tweeter). Nerve activity is pulse-density modulation, with denser pulses corresponding to stronger sensation, and a maximum pulse repetition rate of about 1 kHz. That's not fast enough to encode the energy at various points of a 3 kHz waveform, but it is fast enough to sample 200 Hz; hence my supposition that mid-to-high phase might not be audible, whereas woofer-to-mid phase could be.

Ian McNeill was kind enough to demo a bunch of different filters at BAF2009: linear phase, 8th-order phase rotation at about 300 Hz, and 8th-order phase rotation at about 3 kHz, which we then single-blind auditioned with music. I couldn't hear much difference with the 3 kHz rotation, but the 300 Hz rotation was unmistakable on percussion: snare drums in particular sounded polite, whereas the linear-phase system showed them to be much ruder (for lack of a better term) and closer to the drum as I recalled my friends playing them. Apparently the low-mid phase rotation robbed the drum of its impact, the jump factor if you will. Voices didn't seem to change much, nor did instruments less dependent on transients.

As to why, whether the ear read less attack on the initial waveform or sampled further down the signal, I'm not willing to speculate. You may wish to try the experiment yourself, preferably on a linear-phase speaker, since a high-order crossover will already have enough phase shift to confuse the issue. Perhaps full-range drivers or headphones might fill the bill.
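For anyone wanting to try the experiment, here is a sketch of one way to build such a phase rotation. The demo filters were not described beyond order and frequency, so an "8th-order phase rotation" is assumed here to mean four cascaded second-order all-pass sections (Audio EQ Cookbook all-pass, Q = 0.7071, 48 kHz sample rate); the helper names are hypothetical. It shows that magnitude is untouched while the rotation is spread over roughly ten times more time at 300 Hz than at 3 kHz. It says nothing about why that would be audible.

```python
import cmath
import math

FS = 48000.0  # sample rate in Hz (assumed)


def allpass_biquad(f0, q=0.7071, fs=FS):
    """Audio EQ Cookbook second-order all-pass; (b, a) normalized so a[0] == 1."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b = [1.0 - alpha, -2.0 * cos_w0, 1.0 + alpha]
    a = [1.0 + alpha, -2.0 * cos_w0, 1.0 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def phase_rotation(f0, sections=4, q=0.7071, fs=FS):
    """Hypothetical '8th-order' rotation: `sections` identical biquad all-passes."""
    return [allpass_biquad(f0, q, fs)] * sections


def response(filters, f, fs=FS):
    """Complex response of the cascade at frequency f (Hz)."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    h = 1.0 + 0.0j
    for b, a in filters:
        h *= (b[0] + b[1] * z_inv + b[2] * z_inv ** 2) / (a[0] + a[1] * z_inv + a[2] * z_inv ** 2)
    return h


def group_delay_s(filters, f, fs=FS, df=0.01):
    """Group delay -dphi/domega at f, from a central difference of the phase."""
    ratio = response(filters, f + df, fs) / response(filters, f - df, fs)
    return -cmath.phase(ratio) / (2.0 * math.pi * 2.0 * df)


def apply(filters, samples):
    """Filter a list of samples through the cascade (direct form I), for listening tests."""
    out = list(samples)
    for b, a in filters:
        x1 = x2 = y1 = y2 = 0.0
        stage = []
        for x0 in out:
            y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
            x2, x1 = x1, x0
            y2, y1 = y1, y0
            stage.append(y0)
        out = stage
    return out


if __name__ == "__main__":
    for f0 in (300.0, 3000.0):
        gd = group_delay_s(phase_rotation(f0), f0)
        print(f"rotation at {f0:.0f} Hz: group delay at f0 = {gd * 1e3:.2f} ms")
```

With these assumptions the group delay at the rotation frequency is about 6 ms for the 300 Hz version and a little over 0.6 ms for the 3 kHz version. `apply` can be fed a mono snare recording (as a list of floats) to produce the rotated version for a blind comparison.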

In-phase at crossover is a good attribute in that it puts off-axis nulls further from the listening axis, but it doesn't ensure linear phase.

Are you sure your own design is linear phase? Note that linear phase means linear when plotted on a linear frequency axis, not log. With the higher order slopes you describe it is unlikely that linear phase is achieved, even with time offset.

Constant slope on a linear F axis means constant group delay. Constant slope on a log axis is not the same.
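David's distinction can be checked numerically. The sketch estimates group delay as the negative slope of phase against angular frequency (a central difference, chosen for convenience rather than any particular analyzer's method) for three illustrative responses: a pure delay, a hypothetical phase curve that is a straight line on a log axis, and a first-order low-pass. Only the pure delay, whose phase is a straight line on a linear frequency axis, has the same group delay at every frequency.

```python
import cmath
import math


def group_delay_s(h, f, df=0.01):
    """Group delay -dphi/domega (seconds) of response function h at f Hz."""
    ratio = h(f + df) / h(f - df)
    return -cmath.phase(ratio) / (2.0 * math.pi * 2.0 * df)


def pure_delay(tau_s):
    """Linear phase: phi(f) = -2*pi*f*tau, a straight line on a linear f axis."""
    return lambda f: cmath.exp(-2j * math.pi * f * tau_s)


def log_linear_phase(k_rad):
    """Hypothetical phase that is a straight line on a log f axis: phi(f) = -k*ln(f)."""
    return lambda f: cmath.exp(-1j * k_rad * math.log(f))


def first_order_lowpass(fc):
    """A minimum-phase example: 1 / (1 + j f/fc)."""
    return lambda f: 1.0 / (1.0 + 1j * f / fc)


if __name__ == "__main__":
    examples = {
        "pure 1 ms delay": pure_delay(1e-3),
        "phase linear in log f": log_linear_phase(math.pi / 2),
        "1st-order LP, fc = 1 kHz": first_order_lowpass(1000.0),
    }
    for name, h in examples.items():
        delays = ", ".join(f"{group_delay_s(h, f) * 1e6:8.1f}" for f in (100.0, 1000.0, 10000.0))
        print(f"{name:26s} group delay (us) at 100 Hz, 1 kHz, 10 kHz: {delays}")
```

The log-axis example has group delay proportional to 1/f, ten times larger at 100 Hz than at 1 kHz, even though its phase would plot as a neat straight line on the usual log-frequency chart.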

David

Linear-phase does not have to be low-order. And I don't know why you think a higher order would be less aligned.

Perhaps I should be clearer: I mean high-order minimum-phase crossovers, such as any passive crossover beyond first order; in other words, what you'd find in a typical multiway loudspeaker (save for Thiel, Dunlavy, Spica, and such creatures).

Because that's their gimmick, their product differentiator.

The audio world is full of more gimmicks than science.

The order of the crossover depends on the specific drivers and design chosen. You have to pick your poison: there are incredible-sounding speakers with high-order XOs and there are incredible-sounding speakers with low-order XOs.

I still want to hear brickwall 300dB sloped XOs with the DEQX!!!

If you use brickwall slopes then you will not have even radiation of sound from the drive units, which will be noticeable. So most designers use the lower-order types.

I'd like to see some evidence. You can say it's a gimmick and they can refute it, and there's no conclusion without evidence.
