Mr Danley

You are at the peak of the pyramid when results are primary. Therefore you are also the target of lesser beings, notwithstanding mathematical competence. Please continue contributing to the education of less blessed persons who somehow get that humility counts. We cannot quantify our gratitude that you participate here.

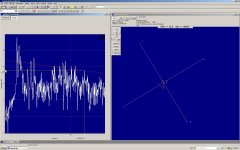

This is my favorite room measuring platform. On the left is a shortened view of an ETC measurement of a small room (shortened to fit it in one screenshot). What is not readily seen is that it is six measurements, one in each direction, with the speaker in front and close to center. On the right is a 3-D spherical view. The little red dots are reflections, brought into view by pulling the red line down into the peaks of the ETC; the lower you go, the more the sphere is populated. The magic is that each of the peaks and dots has a precise location in time and space, as noted at the top right. You just click on a peak or dot, or scroll the cursor through the ETC, and look at whatever you want to.
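Turning an ETC peak into a position starts with simple arithmetic: the peak's delay behind the direct sound, times the speed of sound, is the extra path the reflection travelled. The sketch below assumes c = 343 m/s (about 20 °C). Its direction estimate, which differences the energy seen by opposite-facing measurements among the six, is a hypothetical illustration and not how the TEF software actually builds its sphere.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (dry air at roughly 20 °C)

def extra_path_m(delay_ms: float) -> float:
    """Extra path length (m) of a reflection arriving delay_ms after the direct sound."""
    return SPEED_OF_SOUND * delay_ms / 1000.0

def direction_from_axes(levels: dict) -> tuple:
    """Hypothetical direction estimate from six axis-aligned energy readings.

    levels maps '+x', '-x', '+y', '-y', '+z', '-z' to the linear energy each
    measurement shows at the reflection's arrival time. Opposite faces are
    differenced and the result is normalised to a unit vector.
    """
    v = (levels['+x'] - levels['-x'],
         levels['+y'] - levels['-y'],
         levels['+z'] - levels['-z'])
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("no net direction: opposing readings are equal")
    return tuple(c / norm for c in v)

# A reflection 5 ms behind the direct sound travelled about 1.7 m further.
print(extra_path_m(5.0))
print(direction_from_axes({'+x': 4.0, '-x': 1.0, '+y': 1.0,
                           '-y': 1.0, '+z': 1.0, '-z': 1.0}))
```

Combined with the direct-path distance, the extra path length fixes how far away the reflecting surface can be; the direction then picks out which surface.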

I know that this thread is not about measurement platforms, but multi-mic routines for reflection location were brought up a couple of posts back. I won't bore you with details but will answer any questions I can.

Tom D. has most likely seen this before (Doug J. has one), but last I checked there were only 114 of them worldwide.

Very cool. Goldline, eh? The RTA folks?

About 20 years ago THX used to have a multi-mic portable system for cinema alignment. It just did levels, as far as I remember. Wonder what they have now?

That's them, the GoldLine RTA folks. GoldLine bought TEF from Crown Audio after Harman bought Crown and decided it didn't want Crown to be in the broadcast, industrial/medical power supply (Techron MRI) or any other business except manufacturing audio amps.

Too bad. Polar ETC is an acoustic-space-mapping jewel, a slightly rough jewel that sits on a shelf with zero marketing or development effort.

The one that makes you happy.

Don't go looking for simple one-line answers. There aren't any. If there was, everyone would do it.

Hi Tom

You have to understand that Heyser's work was discredited sometime after he died, when the AES would not publish his collected works. The work was sent out for review to several renowned mathematicians (not the AES's normal reviewers), and they all concluded that it was not mathematically correct, so the AES declined to publish it.

Since the velocity is a conjugate variable to the pressure (it's the spatial derivative, or gradient, of the pressure: the derivative of the impulse), Heyser's "imaginary" part is a rough approximation of the velocity. Thus the product of the real and imaginary parts is a rough estimate of the intensity, and hence of the power per unit area. This has to be integrated over time to get the energy.
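In the Heyser/TEF framework the measured impulse response is the real part and its Hilbert transform is the "imaginary" (quadrature) part; the magnitude of the resulting analytic signal is the energy-time curve (ETC). A minimal NumPy sketch, assuming a sampled impulse response; the FFT-based Hilbert transform here is the standard one-sided-spectrum construction, not TEF's analog signal path, and the decaying 1 kHz test signal is an assumption.

```python
import numpy as np

def analytic_signal(h):
    """Return h + j*H{h}, where H{} is the Hilbert transform (FFT method)."""
    h = np.asarray(h, dtype=float)
    n = len(h)
    weights = np.zeros(n)
    weights[0] = 1.0                       # keep DC
    if n % 2 == 0:
        weights[1:n // 2] = 2.0            # double positive frequencies
        weights[n // 2] = 1.0              # keep Nyquist
    else:
        weights[1:(n + 1) // 2] = 2.0
    # Negative frequencies are zeroed, leaving a one-sided spectrum.
    return np.fft.ifft(np.fft.fft(h) * weights)

def energy_time_curve_db(h):
    """ETC in dB relative to its own peak: 10*log10(h^2 + H{h}^2)."""
    energy = np.abs(analytic_signal(h)) ** 2
    # Floor at 1e-30 so silent samples do not produce log10(0).
    return 10.0 * np.log10(np.maximum(energy / energy.max(), 1e-30))

# A decaying 1 kHz "ring": its ETC follows the smooth envelope, not the wiggles.
fs = 48000
t = np.arange(2048) / fs
ring = np.exp(-t / 0.005) * np.cos(2 * np.pi * 1000 * t)
etc = energy_time_curve_db(ring)
```

Because the envelope ignores the carrier's zero crossings, a reflection shows up as one clean peak on the ETC instead of a cluster of lobes, which is what makes the ETC convenient for reading arrival times.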

What a lot of acousticians looked at was using two microphones to directly measure the pressure (the sum of the two) and the velocity (the difference), and multiplying them to get the power impulse, or intensity. But this measure is directional. To get a non-directional signal we need four microphones, and now things are getting tough to handle. However, some architectural firms do use four mics to show the direction of individual reflections.
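The two-mic idea can be sketched as the textbook p-p intensity estimate: half the sum of the two mic signals estimates the pressure midway between them, the difference divided by the spacing estimates the pressure gradient, and the particle velocity follows by integrating that gradient over time (Euler's equation, ρ ∂u/∂t = −∂p/∂x). A minimal NumPy sketch; the air density, the 12 mm spacing, the 250 Hz plane-wave test case and the running-sum integration are all assumptions for illustration.

```python
import numpy as np

RHO = 1.204  # kg/m^3, air density at about 20 °C (assumed)

def pp_intensity(p1, p2, spacing_m, fs):
    """Time-averaged intensity (W/m^2) along the axis from mic 1 toward mic 2.

    p1, p2: pressure signals (Pa) from two closely spaced mics.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    pressure = 0.5 * (p1 + p2)             # pressure at the midpoint
    gradient = (p2 - p1) / spacing_m       # finite-difference dp/dx
    # Euler's equation rho * du/dt = -dp/dx, integrated by a running sum.
    velocity = -np.cumsum(gradient) / (RHO * fs)
    velocity -= velocity.mean()            # drop the integration constant
    return float(np.mean(pressure * velocity))

# Plane wave travelling from mic 1 toward mic 2 (assumed test case).
fs = 48000
c = 343.0
spacing = 0.012                            # 12 mm spacer, assumed
t = np.arange(fs) / fs                     # 1 s = 250 whole periods at 250 Hz
p1 = np.cos(2 * np.pi * 250 * t)
p2 = np.cos(2 * np.pi * 250 * (t - spacing / c))
intensity = pp_intensity(p1, p2, spacing, fs)
expected = 1.0 / (2 * RHO * c)             # plane wave: p_rms^2 / (rho * c)
```

Swapping the two mics flips the sign of the result, which is the directionality mentioned above: one pair only sees the intensity component along its own axis.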

Heyser came to Penn State and gave a talk while I was there. It was not well received.

I wasn't aware that it was "discredited" but would be interested in reading of any technical shortcomings. Certainly, TEF has stood the test of time as one of the big three measurement approaches (TEF, MLSSA and straight impulse), with no more practical pluses and minuses than the others. Impulses have weak peak-to-average energy content, MLSSA converts clipping into response errors, etc. You become aware of the pitfalls of each system and work around them.

I will admit that I was never a fan of the SynAudCon group. It was very clubby and I never drank that particular Kool-Aid. When Toole and others started to debunk some of the RFZ generalizations ("Haas kickers" etc.) I secretly applauded.

I never felt that Heyser was entrenched in that school so much as that they found his measurement capabilities gelled with their needs and adopted him wholeheartedly. That doesn't diminish what he achieved.

Heyser, Doug Rife, Fincham and Berman all brought us into the modern era of loudspeaker measurement. I don't think any of them would claim great invention so much as developing a practical methodology for principles demonstrated before them.

David S.

Why was the Haas trigger/kicker questioned? It has some important psychoacoustic cues and it adds liveliness, which makes the listening experience more comfortable.

I would have to read through the paper again, but there was a Toole/Olive paper on the subject and Toole covers it well in his book. I think the notion was that a particular later reflection (the "kicker") gave the ear a new time span that would allow well-fused subsequent reflections. This gave the kicker disproportionate importance. I believe what Toole found was that the first subsequent reflection wasn't so good at hiding later ones, and the whole notion was shaky. This is not to say that the later reflections and their strength, timing and direction aren't all important, but that the "kicker" reflection wasn't doing what the SynAudCon crowd had thought.

This is an approximate recollection and you should read the papers to get the straight stuff.

David S.

Hi guys

Sorry for the delay, we have an illness in the family that has taken up my focus these days.

I hope I didn’t give the wrong impression about how things were back then; I will try to explain what I saw.

In the olden days (for me), one took loudspeaker measurements outdoors with a sound level meter and oscillator or, if you could afford one, a GenRad chart recorder setup which could make a real response curve.

Neither of these told you anything about phase, and neither could reject reflected sound, but it was better than nothing.

Dick Heyser was the first person I had heard of who considered the time of arrival and the actual acoustic phase, as well as developing the first technique that allowed anechoic-like measurements indoors.

In the day, the AES thought enough of his work on the determination of loudspeaker arrival times and the TDS process to include it in the Loudspeaker anthologies and to publish a separate AES anthology of his work called “Time Delay Spectrometry”.

The TEF machine, when introduced, was both expensive and very advanced. I was lucky enough to work for a NASA contractor on acoustic levitation transducers, so besides my love (loudspeakers) there was a legitimate justification for it, and I tried to use it to measure as many things as I could to show the value of the purchase.

Eventually we were asked to present at a TEF seminar, where I talked about using it on levitation sources and servomotor-driven woofers, to measure the resonance of pecan shells (for a customer we developed a vibration/sound way to separate the shell from the nutmeat before cracking the shell), to find flaws hidden within a concrete block for Portland Cement, and to try to measure the thickness of the bottom carbon layer at what was the largest working blast furnace in the States (a scary project I am glad didn’t pan out), and a bunch of other weirdo acoustic things, eventually measuring the inside of the Great Pyramid.

Anyway, around the same era (or at least it appears that way now), other mechanical acoustic measurement techniques were entering loudspeaker audio.

Dave, I remember attending a paper Laurie gave about the possibility of using an impulse as the measurement signal. Before there were systems that compute the impulse response from another stimulus, they tried actually using an impulse as the drive signal. As I was working with the servomotor subs then (which had a small amount of hysteresis from static friction), I remember that in his example, just the hysteresis in the batting moving was enough to cause an error. I don’t know what he was like to work for, but he seemed like a real nice guy.

Nowadays, there are many ways to measure which have superseded the TEF machine in most ways. Programs like ARTA are cheap and powerful and allow you to look at the data in many useful ways. The TDS system, on the other hand, suffered a little, first when it went from a hardware implementation to software. The TEF 10, 12 and 12+ all had quadrature phase detectors (something like a Costas loop), which cannot be “fooled” as there are always two phase measurements, 90 degrees apart relative to the reference.

The TEF20 and 25 use one-sided detectors and can occasionally be fooled traversing 0/360, but that is offset by their being so much faster and nicer to use (I still use a 20).
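The difference between the two detector styles can be illustrated with a tone of known phase. Multiplying by both a cosine and a sine reference (I and Q) and taking atan2 recovers the phase over the full circle; a single product only yields cos φ, so -30° (that is, 330°) and +30° read the same. This is a generic sketch and not a model of the actual TEF10 or TEF20 detector circuits; the 1 kHz tone, the 48 kHz rate and the known unit amplitude are assumptions.

```python
import math

def quadrature_phase_deg(signal, freq_hz, fs):
    """I/Q detection: phase of signal relative to cos(2*pi*f*t), in [-180, 180]."""
    i_sum = 0.0
    q_sum = 0.0
    for n, x in enumerate(signal):
        w = 2 * math.pi * freq_hz * n / fs
        i_sum += x * math.cos(w)           # in-phase product
        q_sum -= x * math.sin(w)           # quadrature product (90 degrees shifted)
    return math.degrees(math.atan2(q_sum, i_sum))

def single_detector_phase_deg(signal, freq_hz, fs):
    """One product only: recovers cos(phase), so +phi and -phi look identical.

    Assumes a unit-amplitude signal spanning a whole number of cycles.
    """
    i_sum = sum(x * math.cos(2 * math.pi * freq_hz * n / fs)
                for n, x in enumerate(signal))
    c = max(-1.0, min(1.0, 2.0 * i_sum / len(signal)))   # clamp for acos
    return math.degrees(math.acos(c))

fs, f = 48000, 1000.0
tone = [math.cos(2 * math.pi * f * n / fs + math.radians(-30.0))
        for n in range(4800)]              # 100 whole cycles, phase -30 degrees
print(quadrature_phase_deg(tone, f, fs), single_detector_phase_deg(tone, f, fs))
```

The quadrature version reports about -30° while the single product reports about +30°: the one-sided reading cannot tell which side of 0/360 the true phase is on.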

The first problem TDS faced (as I remember) was when a very outspoken originator of a “smart” system took on the TEF as its main competition. That FFT-based system at the time was fast, had cool color displays and a phase display that looked real but was only the Hilbert view of the amplitude, NOT the actual acoustic phase.
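If "the Hilbert view of the amplitude" is read as minimum phase (the phase that the Hilbert-transform relation derives from the log magnitude alone), its blind spot is easy to show: a response and the same response arriving later have identical magnitude, so they get identical derived phase. A minimal NumPy sketch using the real-cepstrum folding method; the two-tap test response [1, 0.5] is an assumption, chosen because it is minimum phase.

```python
import numpy as np

def minimum_phase_spectrum(magnitude):
    """Minimum-phase spectrum with the given |H| (full FFT grid, even length).

    Uses the real-cepstrum folding method, one standard way of evaluating
    the Hilbert-transform relation between log magnitude and phase.
    """
    n = len(magnitude)
    cepstrum = np.fft.ifft(np.log(np.maximum(magnitude, 1e-12))).real
    fold = np.zeros(n)
    fold[0] = 1.0
    fold[1:n // 2] = 2.0                   # fold negative quefrencies onto positive
    fold[n // 2] = 1.0
    return np.exp(np.fft.fft(cepstrum * fold))

n = 1024
h = np.zeros(n)
h[0], h[1] = 1.0, 0.5                      # assumed minimum-phase test response
h_late = np.roll(h, 3)                     # same response arriving 3 samples later
H, H_late = np.fft.fft(h), np.fft.fft(h_late)
H_min = minimum_phase_spectrum(np.abs(H_late))   # magnitude-only view
```

`H_min` matches the phase of the undelayed `H`, not the measured `H_late`: the 3-sample arrival time (and any other excess phase) never shows up in a phase curve derived from magnitude.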

I remember arguing at PSW that, technically speaking, knowing the actual acoustic phase could be more useful than what it showed. Anyway, that company was also gobbled up by Harman, eventually bought out Sam, became very successful and evolved some very useful software in its own right.

The TDS process, on the other hand, was patented and narrowly licensed to Crown, Brüel & Kjær and some other technical users (maybe like Beta tape). While Crown was a top-notch company, they too were eventually “Harmonized”, and since what the TEF did was a narrow use too, it was sold to Goldline, who I have had little contact with.

What I can say is that, side by side with ARTA or the MLS part of the TEF software, it does what it does differently. The biggest difference is in dealing with noise; especially at low frequencies, the TDS is better.

How this shows up most obviously is if you examine the LF slope on a speaker. There is a point, say 20, 30 or 40 dB down from the “flat” level, where the curve does not continue to roll off.

Instead there is a notch and then a bunch of “free low end” as you continue down.

That is all noise leaking in, and getting real data 40 dB and more down takes more time and averages. A major criticism of the TEF by the impulse-based systems was that it required a time measurement first and then the response measurement, and so “took longer”, which doesn’t appear to be the case at all if you need real data.

It is no problem with the TEF to start with a measurement noise floor 40 or 50 dB down or more if you take the time to adjust the mic gains. For me, its strength is that for crossover use, it delivers magnitude and acoustic phase unambiguously in a multi-way system.

I can’t make all the drivers add into one in the computer or the real thing if I don’t have the real data referenced to the tweeter.

I am not up on the technique enough to “know”, but I wonder: since the systems that compute the magnitude and phase from the impulse response are the ones that tend to have this noise/dynamic range limitation in the data, how does that affect using that same impulse for convolution, etc.?

1Audiohack brings up another of the weird things that the TEF can do, a polar ETC. This is a way to locate where reflections are coming from, and so where absorption is best placed, etc. This was also something Dick imagined and implemented more than 25 years ago.

As you apparently know, one of Doug’s things is stereo imaging and cool acoustics; it has been a lot of fun having him listen to the speakers and recordings from the mic project. This was an imaging test recording he made a zillion years ago:

Online LEDR - Listening Environment Diagnostic Recording Sound Test

Here is one direction “identifying the source” has gone too, this is an amazing piece of hardware and a lot of fun to play with.

Columbia College Chicago - AA+A Acoustics program featured in WGN Channel 9 News

I wish I could find the video from this;

DDT presentation shot - ProAudioSpace

What isn’t widely known yet (but I think I can say it, too late if I can't) is that as of this Thursday, Doug has retired from teaching at Columbia and come to work for us full time. I remember him talking about the Haas kickers, although to be honest my world was pretty much below 100 Hz back then. What I can do is ask him if he has time to pop in and comment.

Have to run.

Best,

Tom Danley

Dang, did that happen already? I thought he was out about the end of the month. Oh well, you said it, not me. 🙂 I hope you keep his fire lit; he's way too sharp to let him get bored.

IMTS again this year?

All the best,

Barry.

Back to the BBC control rooms and the reflectors:

I finally had the time to sit down and figure out how to draw the CID control room from the BBC paper - using CARA software.

The simulation is quite remarkable. Using the same dimensions and layout they have in figure #12, reflections die very fast. By ~5 ms after the first arrival, there is nothing higher than -20 dB. All gone. There are a couple of distinct reflections about 10-12 dB down at 3 and 4 ms, but that's all.

I'll try to stitch together the graphics into a web friendly format later this week, and show you. It really does seem to work well.

I wasn't aware that it was "discredited" but would be interested in reading of any technical shortcomings.

David S.

It wasn't TEF that was questioned, but Heyser's later concepts of imaginary impulses and his Heyser Transform (neither of which is talked about at all these days, having no support in the field). The techniques used in TEF are quite valid, but I have always taken exception to Heyser being the "inventor". These techniques were all well known in sonar and radar, and Heyser would have known that. He applied them to audio, which is a really "cool" thing to do, but in no way did he "discover" them or "invent" them. So the only problem that I have with Heyser is the tendency of his proponents to overplay his contributions to audio; they were significant but not groundbreaking. As Tom Danley said, I understand that he was a major contributor at JPL and was sorely missed.

How this shows up most obviously is if you examine the LF slope on a speaker. There is a point, say 20, 30 or 40 dB down from the “flat” level, where the curve does not continue to roll off.

Instead there is a notch and then a bunch of “free low end” as you continue down.

That is all noise leaking in and to get real data

Tom Danley

Hi Tom

That is not entirely correct. It's not the noise that causes that effect but the window. The window creates a situation where there is an error in frequencies that correspond to the time of the window. In other words this will happen even if the data is noise free. The very low frequencies tail on for a much longer time than the window.

Consider the "free" LF sound that you talk of. As the signal progresses it must average to zero in the real world; we cannot magically change the atmospheric pressure with our devices. If I truncate the waveform prior to its demise, then the data will not usually average to zero, and there will be an error. This error will show up as a very low-level data point at zero hertz. Now at some higher frequency there will be a point where the data does precisely cancel, and this forces the response to zero, as you noted. But none of this is due to noise.

In my own software - all of my analysis software is custom since there isn't anything out there that I like - I avoid this by tapering the window instead of just truncating it. If the window is tapered and the time is varied slightly then this effect can be removed completely. Of course the LF data is still no good at these frequencies, for TEF or any other method, because of the uncertainty principle. They all have to obey that.

TEF does not show this because its "window" is derived in an analog fashion and is by its very nature tapered.
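Earl's zero-hertz argument can be checked numerically. The stand-in response below (an assumption, not a measured speaker) is the difference of a fast and a slow exponential decay of equal area, so the complete response sums to zero; cut off while its slow tail is still large, the kept part no longer does, and that residue is exactly the 0 Hz bin. The half-Hann taper shows what "tapering the window instead of just truncating it" does to the window edge; on its own it does not restore a zero sum here, and the slight variation of the window time Earl also mentions is not modelled.

```python
import numpy as np

def zero_dc_response(n_samples, fast=0.9, slow=0.999):
    """Assumed stand-in response: two decays of equal area and opposite sign,
    so the complete response sums (integrates) to zero."""
    n = np.arange(n_samples)
    return fast ** n * (1 - fast) - slow ** n * (1 - slow)

def half_hann_taper(n_samples, taper_len):
    """Window that is flat, then rolls off to exactly zero over taper_len samples."""
    window = np.ones(n_samples)
    k = np.arange(1, taper_len + 1)
    window[-taper_len:] = 0.5 * (1 + np.cos(np.pi * k / taper_len))
    return window

complete = zero_dc_response(40000)     # long enough for the slow tail to die
truncated = zero_dc_response(500)      # cut off while the tail is still large
tapered = truncated * half_hann_taper(500, 100)

dc_complete = np.fft.rfft(complete)[0].real     # 0 Hz bin = sum of samples
dc_truncated = np.fft.rfft(truncated)[0].real   # equals 0.999**500 - 0.9**500
```

The truncated version ends on an abrupt step and carries a large spurious 0 Hz value, even though no noise was added anywhere; the tapered version at least ends smoothly at zero.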

As to precise phase, I do multiple systems all the time where the data is taken on separate drivers and then combined analytically in a computer. Getting this right along a single axis is almost trivial, but getting it right over the entire polar field is not. Not only do you need precise phase, but you need a way to orient the phase references relative to the entire system and not just one speaker. My techniques are quite unique in this regard and no one else that I know of does this.
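The single-axis case that Earl calls almost trivial is a complex sum: each driver's measured response, referenced to a common point, is added with its magnitude and phase intact, and any remaining time-of-flight difference enters as a phase factor e^(-j2πfτ). A minimal sketch under that assumption; the flat, zero-phase driver and the 0.5 ms offset are hypothetical, and nothing here captures the polar-field orientation Earl describes.

```python
import cmath
import math

def combined_response(freq_hz, drivers):
    """Complex sum of separately measured drivers at one frequency.

    drivers: list of (response, delay_s) pairs, where response(f) returns the
    driver's complex response referenced to a common point and delay_s is any
    extra time of flight still to be applied (hypothetical structure).
    """
    total = 0j
    for response, delay_s in drivers:
        total += response(freq_hz) * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return total

def flat(f):
    """Idealised flat, zero-phase driver (assumed)."""
    return 1.0 + 0j

# Two identical drivers, one arriving 0.5 ms late: full cancellation at 1 kHz
# (half a period) and full addition at 2 kHz (a whole period).
pair = [(flat, 0.0), (flat, 0.0005)]
print(abs(combined_response(1000.0, pair)), abs(combined_response(2000.0, pair)))
```

A half-millisecond error in the reference is enough to turn a predicted +6 dB sum into a null, which is why the phase data has to be referenced to the same point for every driver.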

Being able to accurately simulate complex crossovers over the entire sound field is key to my designs. Yours too I assume since your crossovers appear quite complex as well.

The techniques used in TEF are quite valid, but I have always taken exception to Heyser being the "inventor". These techniques were all well known in sonar and radar, and Heyser would have known that. He applied them to audio, which is a really "cool" thing to do, but in no way did he "discover" them or "invent" them.

That's basically what I was saying in my last paragraph. We can find precedents in so many areas outside our fields (antenna arrays for line sources, for example), but credit is due to those who have the insight to adapt them to good use in loudspeakers.

Sometimes these "inventors" or "geniuses" are more grounded and humble than the fans that try to elevate them to a status well beyond what they would be comfortable with.

David S.

Hi Earl

That makes sense about the “noise”. I used that word because it was random in shape from measurement to measurement and (like random noise) averaging a number of sequence measurements in ARTA, MLS, EASERA etc. improved the valid data at low frequencies.
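The improvement from averaging can be put in numbers: for noise that is uncorrelated from capture to capture, averaging N captures leaves the repeatable signal alone and divides the noise power by N, i.e. 10·log10(N) dB, about 3 dB per doubling. A minimal NumPy sketch with simulated Gaussian noise (an assumption; real room noise is not always uncorrelated between captures). It covers random noise only and does nothing about a window-truncation error.

```python
import numpy as np

def residual_noise_rms(n_averages, n_samples=20000, seed=0):
    """RMS of the average of n_averages independent unit-variance noise captures."""
    rng = np.random.default_rng(seed)
    captures = rng.standard_normal((n_averages, n_samples))
    return float(np.sqrt(np.mean(captures.mean(axis=0) ** 2)))

def expected_gain_db(n_averages):
    """Theory for uncorrelated noise: power falls as 1/N, i.e. 10*log10(N) dB."""
    return 10.0 * np.log10(n_averages)

# 16 averages should lower the noise floor by roughly 12 dB.
measured_gain_db = 20.0 * np.log10(residual_noise_rms(1) / residual_noise_rms(16))
```

Going from 16 to 64 averages buys only another 6 dB, which is why pushing valid data further below the flat level gets expensive in measurement time.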

With a decent number of averages, one could get as far down (from the flat level) as the TEF.

Programs like ARTA include a marker so you can see where the valid measurement ends due to the sequence length, but averages are still needed to accurately show the roll-off slope far down from "flat".

An area I have not investigated either is that when you produce an impulse response from a measured magnitude and phase response, it does not always overlay identically on the impulse response measured with a sequence. That may well be another aspect of how the data is parsed or used in the math stage.

Having had a TEF of one kind or another since the early ’80s, I have gotten used to trusting it for driver magnitude and phase. ARTA is my favorite “other” measurement system; the number of ways it can show the measurement leaves the TEF in the dust.

In a way, this might be a futile effort, like defending the late inventor of Beta recording tape, but rather than try to turn Heyser into a Tesla (perhaps giving him more “new age” credit than is actually due), I would suggest that to me, having been there for part of it back then, it appears he is no longer recognized for what he did.

I have read a bunch of his stuff (and boy, he wrote a lot) and have looked at some of his unpublished stuff and notes, and to be honest a great deal of it is well beyond my understanding; I don’t “see” math working in my head the way I see the operation of physical things or electronic circuitry.

I had thought about asking a physics professor I worked with to look some of it over but never got around to it. Many times while reading it, it would have been nice to ask “what does this mean?”.

Earl, sometime if you’re interested and don’t have it, get a copy of the AES “Time Delay Spectrometry”; it is a better snapshot of his audio work than I could hope to give.

It would be really interesting to see what strikes you, being a math-intensive person. Keep an eye on the dates too, relative to the state of the art then.

One thing he seemed to be big on was thinking of alternative frames of reference.

I have done some work on a few related things back in that era, and from a James Burke-like view of invention and progress, I would offer that saying he simply adapted existing things to a new purpose doesn’t give him as much credit as he is due, I think, or as much as the AES and his contemporaries gave him back then, at least before his death.

Funny too: at Intersonics, JPL was our arch rival/competitor for the acoustic levitation flight hardware. Dick was part of the team working on the Acoustic Containerless Positioning Module for the space station, and while I didn’t know who he was or what he did then, I met him at a meeting at JPL.

In that case, our interference levitator was much more stable than their resonant cavity levitator, especially as the temperature climbed, which was nice as we were a small company, but that didn't save us when the Shuttle exploded.

At Intersonics, we had an early side consulting job with the Portland Cement Association, who wanted to be able to find small flaws in concrete.

At that time (early ’80s), the best results they had came from a fancy ultrasonic shock hammer (used in metallurgy) with a built-in accelerometer, using an expensive Nicolet FFT analyzer to examine the results.

In that day, the norm for acoustic flaw detection was using an impulse or shock as the source, but with the ETC part of the TEF10 (the first production version from Crown) and PZT elements, using a broader-bandwidth stimulus one got more signal back from the defect and in some cases could even make a guess at how large the defect was. Most of the imaging techniques back then used either a broadband impulse (as in geology, hitting a steel plate on the ground with a sledgehammer) or a narrow band: in sonar, a chirp from a source with a Q of say 20-50, and in radar, likewise a narrow-bandwidth system dictated by high-Q tunings.

In that old paper I saw Laurie Fincham present at AES (I think Gene Czerwinski did one too), an actual impulse was still the excitation signal.

In those cases, as with loudspeakers, using an impulse as the stimulus limits the energy more than a longer swept sine does.
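The energy argument is easy to quantify. With the peak amplitude fixed (say by what the driver or amplifier can take), a single-sample impulse delivers one sample's worth of energy, while a sweep of T seconds at sample rate fs delivers about fs·T/2. A minimal NumPy sketch; the 20 Hz to 20 kHz, 1 s, 48 kHz linear sweep is an assumption.

```python
import numpy as np

def linear_sweep(f0, f1, duration_s, fs):
    """Linear sine sweep from f0 to f1 Hz (instantaneous frequency f0 + rate*t)."""
    t = np.arange(int(duration_s * fs)) / fs
    rate = (f1 - f0) / duration_s          # Hz per second
    return np.sin(2 * np.pi * (f0 * t + 0.5 * rate * t ** 2))

def energy_advantage_db(stimulus):
    """Energy of a stimulus relative to a unit-peak single-sample impulse,
    with both scaled to the same peak amplitude."""
    peak = np.max(np.abs(stimulus))
    return 10 * np.log10(np.sum((stimulus / peak) ** 2))

sweep = linear_sweep(20.0, 20000.0, 1.0, 48000)
advantage_db = energy_advantage_db(sweep)  # close to 10*log10(48000 / 2)
```

Roughly 44 dB more energy at the same peak level is the sort of margin behind both the noise advantage of swept or sequence stimuli and the weak peak-to-average content of impulses mentioned earlier in the thread.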

It was this advantage that made HP, B&K, Tektronix and others use/license the approach back then.

The use of a more robust “sequence” for this happened well after the TDS process, with which it competed. The sequence has a small theoretical edge over the swept sine so far as noise goes, but using them side by side, due to the issue I mentioned and/or others, the TDS process is often better in a noisy environment.

Remember too that when he made the first TDS in the mid ’60s, a typical state-of-the-art loudspeaker driver company would normally have a tube-filled General Radio or other chain-driven chart recorder/oscillator for a response measurement.

If you wanted an “accurate” measurement, you might send the speaker to Riverbank Labs for a reverberant measurement, in our case also delivered as a chart recorder plot.

I think the TDS was really clever, a first, and to me it was unlike anything of its day. It logically came from someone who was based in radio, loved audio and had a job that allowed him to “play” with expensive test equipment.

For those not aware, here is how TEF rejects reflected sound; it is like how a radio rejects adjacent signals. In an AM or shortwave radio, the broadband antenna signal, which contains all the stations, is multiplied by an oscillator signal, and the output of that is the sum and difference spectrum.

The sum is discarded, and the difference spectrum passes through a narrow-band filter (an intermediate frequency, or IF, bandpass filter). As a result, only a narrow slice of energy (in an AM radio, often at 455 kHz) is passed and then detected.
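The sum-and-difference step can be checked numerically. In this minimal sketch, the “station” is a 1500 Hz tone and the local oscillator is 1000 Hz. Both values are arbitrary and scaled down from radio frequencies so that plain Python can handle them. Multiplying the two leaves energy only at the 500 Hz difference and the 2500 Hz sum, each at half amplitude, with nothing left at the original 1500 Hz.

```python
import cmath
import math


def tone_amplitude(x, freq, fs):
    """Amplitude of the component at `freq` Hz, from a single DFT bin."""
    acc = sum(v * cmath.exp(-2j * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * abs(acc) / len(x)


fs, n = 8000, 800                 # 0.1 s of signal, so 10 Hz bin spacing
station = [math.cos(2 * math.pi * 1500 * i / fs) for i in range(n)]
local_osc = [math.cos(2 * math.pi * 1000 * i / fs) for i in range(n)]
mixed = [s * lo for s, lo in zip(station, local_osc)]

for f in (500, 1500, 2500):      # difference, original, sum
    print(f, round(tone_amplitude(mixed, f, fs), 3))
```

In a real receiver, the 500 Hz difference would then go through the narrow IF filter. Here, the single-bin DFT in `tone_amplitude` stands in for that filter.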

By leaving the IF at a fixed frequency and making the oscillator adjustable, one can tune up and down the dial, rejecting everything on either side of the passband.

His idea was to "add" time discrimination to the radio. He used a linearly swept sine wave as the test signal, knowing that the mic signal would be X Hz behind the source and that all reflections would lag even further behind that.
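With a linear sweep f(t) = f0 + k*t, a copy delayed by tau seconds is at f(t - tau) = f(t) - k*tau, so every arrival trails the source by a constant k*tau Hz. The arithmetic is small enough to sketch. The 343 m/s speed of sound, the 10 kHz/s sweep rate and the path lengths below are assumed example values, and `tds_offset_hz` is a hypothetical helper name.

```python
SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 °C


def tds_offset_hz(path_m, sweep_rate_hz_per_s, c=SPEED_OF_SOUND):
    """Frequency lag (Hz) of an arrival that travelled `path_m` metres.

    With a linear sweep f(t) = f0 + k*t, a copy delayed by tau is at
    f(t - tau) = f(t) - k*tau, i.e. it trails the source by k*tau Hz.
    """
    return sweep_rate_hz_per_s * (path_m / c)


rate = 20_000.0 / 2.0                  # hypothetical: 20 kHz swept in 2 s
direct = tds_offset_hz(1.0, rate)      # mic 1 m from the speaker
reflection = tds_offset_hz(2.5, rate)  # a bounce path 2.5 m long
print(f"direct {direct:.1f} Hz, reflection {reflection:.1f} Hz, "
      f"gap {reflection - direct:.1f} Hz")
```

With those assumed numbers, the direct sound sits about 29 Hz behind the sweep and the 2.5 m reflection about 73 Hz behind it. An IF filter well narrower than the roughly 44 Hz gap, centered on the direct arrival, therefore passes one and rejects the other. A slower sweep shrinks the gap and calls for a narrower filter.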

By using the same multiplication and IF bandpass approach a radio uses, he could tune the receiver to the direct arrival and reject any sound that fell outside the direct path’s signal and the passband of the IF filter. This allowed anechoic-like measurements in a room, limited only by the reflection-free zone.
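Here is a simplified numerical version of that receiver. It sweeps 500 Hz to 3.5 kHz in 1 s and lets the mic pick up the sweep 10 ms late (direct) plus a half-amplitude copy 25 ms late (reflection). It then multiplies by the outgoing sweep and looks at the low-frequency products. This is a dechirp-style sketch under those assumed numbers, not Heyser's circuit, which offsets the receiver sweep so that the direct arrival lands in a fixed IF. Each arrival shows up as a steady tone at k*tau Hz. A narrow filter at 30 Hz keeps the direct sound, while the reflection sits at 75 Hz.

```python
import cmath
import math

fs = 8000                     # assumed sample rate
duration = 1.0
f0, f1 = 500.0, 3500.0
k = (f1 - f0) / duration      # sweep rate: 3000 Hz/s


def sweep(t):
    """Outgoing linear sweep; silent before t = 0."""
    if t < 0:
        return 0.0
    return math.cos(2 * math.pi * (f0 * t + 0.5 * k * t * t))


def tone_amplitude(x, freq):
    """Amplitude at `freq` Hz from a single DFT bin (a very narrow filter)."""
    acc = sum(v * cmath.exp(-2j * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * abs(acc) / len(x)


tau_direct, tau_reflect = 0.010, 0.025   # hypothetical arrival times in s
times = [i / fs for i in range(int(duration * fs))]
mic = [sweep(t - tau_direct) + 0.5 * sweep(t - tau_reflect) for t in times]

# Multiply by the outgoing sweep: each arrival leaves a steady beat at k * tau.
mixed = [sweep(t) * m for t, m in zip(times, mic)]

for f in (30.0, 52.0, 75.0):             # direct, in between, reflection
    print(f"{f:4.0f} Hz: {tone_amplitude(mixed, f):.3f}")
```

The sum-frequency products land in the kHz range, far from the beat tones, so in this sketch they play the role of the discarded sum in the radio analogy.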

A decent write-up on it, from Rice, is here: Sound System Measurements

As for TDS:

To my knowledge, his was the first system that could perform quasi-anechoic measurements in a room.

As far as I know, his system was also the first to actually measure the fixed time delay and then measure the acoustic phase of a distant source.

Many systems still don’t do that directly; one has to assign “time” based on how the phase looks.
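Going the other way, from phase to time, usually means reading the slope of the unwrapped phase. For a pure delay tau, the phase is -2*pi*f*tau, so tau = -(dphi/df) / (2*pi). The minimal sketch below fits that slope by least squares to a synthetic 2.9 ms delay (about 1 m at an assumed 343 m/s). Real driver data is not a pure delay, so on measurements this gives only an average over whatever band you fit.

```python
import math


def unwrap(phases):
    """Remove 2*pi jumps so consecutive phase values differ by less than pi."""
    out = [phases[0]]
    for p in phases[1:]:
        step = p - out[-1]
        step -= 2 * math.pi * round(step / (2 * math.pi))
        out.append(out[-1] + step)
    return out


def delay_from_phase(freqs_hz, phases_rad):
    """Least-squares fit of phase = -2*pi*f*tau + const; returns tau in s."""
    ph = unwrap(phases_rad)
    n = len(freqs_hz)
    f_mean = sum(freqs_hz) / n
    p_mean = sum(ph) / n
    slope = (sum((f - f_mean) * (p - p_mean) for f, p in zip(freqs_hz, ph))
             / sum((f - f_mean) ** 2 for f in freqs_hz))
    return -slope / (2 * math.pi)


tau_true = 0.0029                               # 2.9 ms, about 1 m at 343 m/s
freqs = [100.0 + 10.0 * i for i in range(200)]  # 100 Hz to 2090 Hz
wrapped = [math.remainder(-2 * math.pi * f * tau_true, 2 * math.pi) for f in freqs]
print(f"estimated delay: {delay_from_phase(freqs, wrapped) * 1e3:.3f} ms")
```

The frequency step has to be small enough that the true phase changes by less than pi between points; otherwise, unwrapping picks the wrong branch and the delay estimate is wrong.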

How it detected phase also looked somewhat like a Costas loop, though not exactly, and time-wise I would bet TDS came well before QPSK and probably before the Costas loop itself as well.
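For comparison, the simplest way to pull phase out of a signal of known frequency is quadrature (I/Q) detection: multiply by a cosine reference and by a sine reference, then low-pass each product (here, just average it). The sketch below is plain I/Q detection with an assumed 1 kHz test tone at a known amplitude and phase. It is not a Costas loop, which adds feedback to recover an unknown carrier, and it does not claim to show how the TDS hardware was wired.

```python
import math


def iq_detect(x, freq, fs):
    """Return (amplitude, phase) of the `freq` component of x."""
    n = len(x)
    i_avg = sum(v * math.cos(2 * math.pi * freq * k / fs) for k, v in enumerate(x)) / n
    q_avg = sum(v * math.sin(2 * math.pi * freq * k / fs) for k, v in enumerate(x)) / n
    # For x = A*cos(w*t + theta): I = A/2*cos(theta), Q = -A/2*sin(theta).
    amplitude = 2 * math.hypot(i_avg, q_avg)
    phase = math.atan2(-q_avg, i_avg)
    return amplitude, phase


fs, f, n = 8000, 1000, 800            # 100 whole cycles, so averaging is exact
x = [0.8 * math.cos(2 * math.pi * f * k / fs + 0.7) for k in range(n)]
amp, ph = iq_detect(x, f, fs)
print(f"amplitude {amp:.3f}, phase {ph:.3f} rad")
```

Averaging over a whole number of cycles cancels the double-frequency products exactly. With arbitrary record lengths, a proper low-pass filter would be needed instead.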

Later features such as the polar ETC were also firsts; in this case, they could identify “where the reflected sound came from”.
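The ETC behind those displays is commonly defined as the squared magnitude of the analytic signal of the impulse response, that is, the response plus j times its Hilbert transform. The sketch below computes this with a plain DFT (slow, but dependency-free) on a made-up impulse response: a tone burst at sample 30 and a half-amplitude “reflection” at sample 80. It covers only the time axis. The direction finding of the polar ETC needs several measurements and is not modeled here.

```python
import cmath
import math


def dft(x, inverse=False):
    """Plain O(n^2) DFT; slow, but keeps the example dependency-free."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out


def etc(ir):
    """Energy-time curve: squared magnitude of the analytic signal of `ir`."""
    n = len(ir)
    assert n % 2 == 0, "this sketch assumes an even length"
    # Keep DC and Nyquist, double positive frequencies, drop negative ones.
    weights = [1.0] + [2.0] * (n // 2 - 1) + [1.0] + [0.0] * (n // 2 - 1)
    spectrum = dft(ir)
    analytic = dft([s * w for s, w in zip(spectrum, weights)], inverse=True)
    return [abs(a) ** 2 for a in analytic]


def burst(n, center, amp):
    """Hypothetical arrival: Gaussian-windowed tone burst centred at `center`."""
    return [amp * math.exp(-((i - center) / 4) ** 2)
            * math.cos(2 * math.pi * 0.2 * (i - center)) for i in range(n)]


N = 128
ir = [d + r for d, r in zip(burst(N, 30, 1.0), burst(N, 80, 0.5))]
curve = etc(ir)
direct = max(range(N), key=curve.__getitem__)
reflection = max(range(50, N), key=curve.__getitem__)
print(direct, reflection, round(curve[direct], 2), round(curve[reflection], 2))
```

The raw impulse response swings through zero many times within each burst, while the ETC gives one smooth hump per arrival. Reading peak times off a hump is much easier than reading them off the oscillating waveform.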

It was unfortunate that it was narrowly licensed; as with the Beta tape format, that spelled doom for widespread use.

Even if everything else he ever wrote about and did didn’t work, I believe he deserves more of a place in loudspeaker history than he seems to have nowadays at the AES.

Acknowledging that I am wearing something of a minor Fanboi hat now: his work and writing on loudspeaker arrival times and those “time” considerations, along with his measuring tool, have enabled me to take many measurements and make a few discoveries / inventions outside audio. They have also driven the direction of the full-range speakers we make now.

That he was concerned about loudspeaker phase and time when I was trying to get through high school still strikes home for me.

To my recollection, that image-dismantling effort began while he was still alive. It was led by the outspoken head of a company that came up with a semi-competing system, which appeared to have evolved out of the later DSP software Hypersignal / Hyperception (a program that seemed terribly promising but always had niggling little bugs).

While it has turned into strong software in its own right, back then they were able to paint a picture of TEF in the commercial sound area largely out of techno-fecal matter and bravado.

To a degree, history is written by the winners.

Hi Barry, ahhh, now I know who that is, haha.

That was a cool show; if you’re going to be there, let me know and maybe we can attend again. I will never forget the EDM demos, amazing stuff.

Got to run.

Best,

Tom

That makes sense about the “noise”. I used that word because it was random in shape from measurement to measurement, and (as with random noise) averaging a number of sequence measurements in ARTA, MLS, EASERA, etc. improved the valid data at low frequencies.

With a decent number of averages, one could get as far down (from the flat level) as with the TEF.
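The averaging point can be illustrated with synthetic data. If each run is the same response plus noise that is independent from run to run, the leftover noise in the average falls roughly as 1/sqrt(N), about 3 dB per doubling of the number of averages. That independence is the key assumption: anything that repeats identically in every run (distortion, a timing error) does not average away. The sine “response”, noise level and seed below are arbitrary choices for the sketch.

```python
import math
import random


def averaged_noise_rms(num_averages, length=2000, sigma=1.0, seed=1):
    """RMS of what is left after averaging `num_averages` noisy runs."""
    rng = random.Random(seed)
    response = [math.sin(2 * math.pi * i / 50) for i in range(length)]
    acc = [0.0] * length
    for _ in range(num_averages):
        for i in range(length):
            acc[i] += response[i] + rng.gauss(0.0, sigma)  # fresh noise each run
    residual = [a / num_averages - r for a, r in zip(acc, response)]
    return math.sqrt(sum(v * v for v in residual) / length)


for n in (1, 4, 16, 64):
    rms = averaged_noise_rms(n)
    print(f"{n:3d} averages: residual noise {20 * math.log10(rms):6.1f} dB")
```

Each factor of 4 in averages buys about 6 dB, so reaching far down a low-frequency roll-off gets expensive quickly.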

Programs like ARTA include a marker so you can see where the valid measurement ends due to the sequence length, but averages are still needed to accurately show the roll-off slope far down from "flat".

Another area I have not investigated is that when you produce an impulse response from a measured magnitude and phase response, it does not always overlay identically on the impulse response displayed using a sequence. That may well be another aspect of how the data is parsed or used in the math stage.
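The basic step of producing an impulse response from magnitude and phase is an inverse DFT of magnitude * e^(j*phase), and on its own it round-trips exactly. The sketch below shows that first. Then, as one hypothetical example of a “math stage” difference, it zeroes the lowest few bins (as if that data were missing or discarded) and shows that the result no longer overlays the original. The sketch does not know what processing any given program actually applies.

```python
import cmath
import math


def dft(x, inverse=False):
    """Plain O(n^2) DFT; slow, but keeps the example dependency-free."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out


def ir_from_mag_phase(mag, phase):
    """Inverse DFT of mag * exp(j*phase); the imaginary residue is discarded."""
    spec = [m * cmath.exp(1j * p) for m, p in zip(mag, phase)]
    return [v.real for v in dft(spec, inverse=True)]


N = 64
h = [0.0] * N
h[3], h[4], h[10] = 1.0, -0.6, 0.25     # hypothetical toy impulse response
H = dft(h)
mag = [abs(v) for v in H]
phase = [cmath.phase(v) for v in H]

h_back = ir_from_mag_phase(mag, phase)
round_trip_err = max(abs(a - b) for a, b in zip(h, h_back))

# Hypothetical processing step: drop the lowest three bins (and their mirrors).
mag_cut = [0.0 if min(k, N - k) < 3 else m for k, m in enumerate(mag)]
h_cut = ir_from_mag_phase(mag_cut, phase)
overlay_diff = max(abs(a - b) for a, b in zip(h, h_cut))
print(f"round trip: {round_trip_err:.1e}, after dropping low bins: {overlay_diff:.3f}")
```

Smoothing, windowing, a different frequency grid or a minimum-phase reconstruction would each shift the overlay in a similar way. The round trip itself is not where the mismatch comes from.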

Having had a TEF of one kind or another since the early ’80s, I have gotten used to trusting it for driver magnitude and phase. ARTA is my favorite “other” measurement system; the number of ways it can show the measurement leaves the TEF in the dust.

In a way, this might be a futile effort, like defending the late inventor of Beta recording tape. Still, rather than trying to turn Heyser into a Tesla (perhaps getting more “new age” credit than is actually due), I would suggest that, having been there for part of it back then, it appears to me he is no longer recognized for what he did.

I have read a bunch of his stuff (and boy, did he write a lot) and have looked at some of his unpublished material and notes. To be honest, a great deal of it is well beyond my understanding; I don’t “see” math working in my head the way I see the operation of physical things or electronic circuitry.

I had thought about asking a physics professor I worked with to look some of it over but never got around to it. Many times while reading it, it would have been nice to be able to ask, “what does this mean?”

Earl, sometime, if you’re interested and don’t have it, get a copy of the AES “Time Delay Spectrometry” anthology; it is a better snapshot of his audio work than I could hope to give.

It would be really interesting to see what strikes you, being a math-intensive person. Keep an eye on the dates, too, relative to the state of the art then.

One thing he seemed to be big on was thinking of alternative frames of reference.

I did some work on a few related things back in that era. From a James Burke-like view of invention and progress, I would offer that saying he simply adapted existing things to a new purpose doesn’t give him as much credit as I think he is due. Nor does it give him as much credit as the AES and his contemporaries gave him back then, at least before his death.

Funny, too: at Intersonics, JPL was our arch rival / competitor for the acoustic levitation flight hardware. Dick was part of the team working on the Acoustic Containerless Positioning Module for the space station, and while I didn’t know who he was or what he did then, I met him at a meeting at JPL.

In that case, our interference levitator was much more stable than their resonant-cavity levitator, especially as the temperature climbed. That was nice, since we were a small company, but it didn’t save us when the Shuttle exploded.

At Intersonics, we had an early side consulting job with the Portland Cement Association, which wanted to be able to find small flaws in concrete.

At that time (the early ’80s), their best results came from a fancy ultrasonic shock hammer (used in metallurgy) with a built-in accelerometer, with the results examined on an expensive Nicolet FFT analyzer.

Hi Earl

Earl, given the man months I put into Hypersignal in the day building a TDS system and past frustration with the limitations of TDS, you have to know I am envious as heck you can write your own measurement software. How powerful it would be to be able to "ask any question you can think to ask" so to speak.

Best,

Tom

The field of signal processing and measurements has come a long way in the last few decades. What was once state-of-the-art is now everyday or obsolete.

Yes, I find it a significant advantage to be able to "ask any question I can think to ask", program that question and find the answers. All I want is a clean impulse response, and I do the rest from there. I used to write my own code to do this, but when Holm came along there was no reason not to just adapt what Ask had done. In theory, there is no better way to make a measurement than what he does. (That was proven several years ago in a very good AES article comparing all the known methods.)
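For a sense of what getting “a clean impulse response” from a sweep involves, the core idea is deconvolution: divide the spectrum of the recorded signal by the spectrum of the sweep. The sketch below designs a linear sweep directly in the frequency domain with flat magnitude, so the division is safe. It passes that sweep through a made-up three-tap response by circular convolution and then recovers the response. This is an illustration under those assumptions, not necessarily what Holm's software does.

```python
import cmath
import math


def dft(x, inverse=False):
    """Plain O(n^2) DFT; slow, but keeps the example dependency-free."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out


N = 256  # a multiple of 8, so the Nyquist bin below comes out real
half = N // 2

# Linear sweep designed in the frequency domain: unit magnitude in every bin,
# quadratic phase so the group delay grows linearly (k samples at bin k).
spec = [cmath.exp(-1j * math.pi * k * k / N) for k in range(half + 1)]
spec += [spec[N - k].conjugate() for k in range(half + 1, N)]
sweep = [v.real for v in dft(spec, inverse=True)]

# Hypothetical "room": direct sound, a small overshoot, one later reflection.
h_true = [0.0] * N
h_true[5], h_true[6], h_true[40] = 1.0, -0.4, 0.3

# Circular convolution stands in for playing the sweep through the system.
recorded = [sum(h_true[m] * sweep[(n - m) % N] for m in range(N)) for n in range(N)]

# Deconvolve: divide the recorded spectrum by the sweep spectrum.
S = dft(sweep)
R = dft(recorded)
h_est = [v.real for v in dft([r / s for r, s in zip(R, S)], inverse=True)]

max_err = max(abs(a - b) for a, b in zip(h_true, h_est))
print(f"largest reconstruction error: {max_err:.2e}")
```

With a real sweep played once into a room, the convolution is linear rather than circular, and the sweep spectrum is not perfectly flat. In that case, practical tools zero-pad and regularize the division instead of dividing blindly.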
