If there is no good hardware and software architecture, it is very difficult for two stateless endpoints to stay synchronized. I would recommend sending the two-channel music stream to the right channel for processing and then splitting the left channel off to the left speaker; that should make the synchronization state easier to control.

Comments on the tutorial discuss the stabilization delay, IIUC caused by mixing other network NTP servers and the GPS source in the NTP server config.

I have no experience with the technology; I just found it worth exploring. Maybe the technology has advanced a bit since then. Maybe it could help solve the moving stereo image in combination with the existing network NTP, if it were set up to adjust the time several minutes after the chain starts. The module could also be kept powered to avoid cold starts. Many maybes 🙂

Well, I don't think a full-blown GPS-based NTP server is a good idea. But there is another way to synchronize machines: the "fake" NTP time source.

NTP is designed to try and get the "wall clock" time on the computer to be as close to some standard reference time (e.g. from NIST, etc.) as possible. But this is not really the problem that we need to solve for our audio playback chain. We only need to distribute some clock to all machines so that they agree on it closely. What time that clock says, exactly, is not important. I'm glossing over some details here, but in essence that is a different problem than trying to propagate a central standard over a wide area (the entire USA for example).

NTP can be made to work in a special way, using the undisciplined local clock. This makes NTP treat the local clock on one machine as if it were a reference clock, and you can then distribute that clock over your network using NTP. This is something I might try if I find that the system without NTP synchronization is not working well. Remember, there is an NTP-like playout time synchronization mechanism built into Gstreamer, so NTP is not strictly necessary. The Gstreamer-only mechanism is the one I am using now.

There is another NTP group synchronization method called "Orphan Mode". This mode of operation exchanges time data within a group to keep all the machines synchronized. I tried it a couple of years ago and it didn't work well. After some time, on the order of days, the clocks were very different (by minutes) from real time, although they were closely synchronized with each other. They were all accelerating away from real time, and at some point that would fail. So I gave up on that approach.

One thing that is undesirable about NTP is how slow it is to settle, and how it changes the local clock. NTP can take an hour to a day to really settle down and achieve good, stable control with accurate time sync. This was also a problem in Orphan Mode. Remember, NTP was not designed for audio work, but to coordinate dedicated time server computers. Also, during the settling process NTP adjusts (slews) the clock, sometimes by milliseconds. Imagine you are playing audio and all of a sudden the time reference used for playback changes by that much. But that is just how NTP works. In fact, I am happy to get away from it if I can.

NTP also involves some tradeoffs. Do you want accurate time? Then make sure your computer stays at exactly the same temperature! Heating changes its clock rate (this is easily verified if you have a Pi or two around). Since NTP is typically slow to adapt to these changes, when the sun heats up your room the synchronization becomes much worse and frequently rises above 1 msec. This is based on years of experience trying to get NTP to work for me. You can "speed up" the time constants in NTP, but this just causes more frequent clock slewing. It's really a no-win situation unless you have a very precise local reference like the GPS-based one I set up, and use very frequent polling (e.g. every 32 seconds).
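As a rough illustration of the temperature argument, a clock whose rate is off by a fixed number of parts per million (ppm) accumulates a time error proportional to how long it runs without correction. The sketch below assumes a constant rate error between polls, which is a simplification (real thermal drift changes over time, and NTP also steers the frequency); the 1 ppm figure is a hypothetical example, not a measured value.

```python
def accumulated_error_ms(freq_error_ppm: float, interval_s: float) -> float:
    """Time error, in milliseconds, that a clock running with a constant
    rate error of freq_error_ppm (parts per million) accumulates over
    interval_s seconds with no correction applied."""
    return freq_error_ppm * 1e-6 * interval_s * 1000.0


if __name__ == "__main__":
    # Hypothetical 1 ppm rate change (e.g. from the room warming up),
    # left uncorrected over a short and a long NTP poll interval.
    for poll_s in (32, 1024):
        print(f"{poll_s:5d} s -> {accumulated_error_ms(1.0, poll_s):.3f} ms")
```

At 1 ppm, a 32-second poll interval lets only about 0.03 ms of error build up between corrections, while a 1024-second interval allows about 1 ms, which is the same scale as the left-right synchronization target discussed in this thread.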

What I like about Gstreamer's NTP-like mechanism is that it starts up immediately and stays well synchronized, well enough to do what I need it to do. It's so much better (in many practical ways) than the NTP-based time synchronization I was using before that I do not want to go back if at all possible. I still need more experience with it, for example how well it copes with the heating issue. But for now it seems to be working well for me.

What actually is the NTP-like Gstreamer approach? I thought it was just adding timestamps to the stream on the transmitter and fitting the timestamps to the chosen clock on the receiver.

I.e. the two receivers must have exactly the same system clocks to time-align the streams, plus adaptive resampling for the free-wheeling ALSA clocks. That's where I see a role for GPS clocks: controlling the two RPis with the precise PPS signal (some specs say 1 us) and time-aligning the two receivers. They would not be used for any NTP time distribution, just locally. BTW a similar method was used by the EHT telescopes observing the black hole: a GPS 1 Hz (PPS) signal was ADC-sampled alongside the telescope signal in the second channel, with the 2GHz sampling frequency generated by local pre-synchronized atomic clocks at each telescope https://www.researchgate.net/profil...s-sample-two-channels-of-2048-GHz-Nyquist.png
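The "timestamps fitted to a clock" idea in the question above can be sketched as a straight-line fit: pairs of (sender timestamp, receiver arrival time) are fitted with least squares, the slope estimates the relative clock rate and the intercept the offset (which then also absorbs the average network delay). This is a generic illustration that assumes a constant rate over the fitting window; it is not a description of how Gstreamer implements its synchronization, and the function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_clock(sender_ts: Sequence[float],
              receiver_ts: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of receiver_ts = rate * sender_ts + offset.

    Returns (rate, offset). rate - 1 is the relative clock rate error and
    offset is the receiver time corresponding to sender time zero."""
    n = len(sender_ts)
    if n != len(receiver_ts) or n < 2:
        raise ValueError("need two equal-length sequences with >= 2 points")
    mean_x = sum(sender_ts) / n
    mean_y = sum(receiver_ts) / n
    sxx = sum((x - mean_x) ** 2 for x in sender_ts)
    if sxx == 0:
        raise ValueError("sender timestamps must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(sender_ts, receiver_ts))
    rate = sxy / sxx
    offset = mean_y - rate * mean_x
    return rate, offset


def sender_to_receiver(t_sender: float, rate: float, offset: float) -> float:
    """Map a sender timestamp onto the receiver's clock using the fit."""
    return rate * t_sender + offset
```

With noisy real-world pairs the fit only averages out jitter to the extent that it is random; a practical implementation would also need outlier rejection and a sliding window so the estimate can follow slow (e.g. thermal) rate changes.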

UPDATE:

After working through a few snafus, I am starting to collect timing data for various types of synchronization under Gstreamer. I hope to get enough data in the next few days to post plots of the performance data.

I finally have some data to post on the synchronicity of the left and right speakers in the system.

Honestly, it has been a bit of a struggle to get everything up and running. Initially, the measurements were more than a bit disappointing. The synchronization would start out around the 1msec target and then would drift away, sometimes by a lot! So I began a long journey of trying to understand what was going on, and how the various Gstreamer parameters were influencing the performance. I seem to be getting a better understanding of these now, and about various contributions to the timing across the system.

Currently I am using NTP to synchronize the sender and the two clients. The sender is synchronized to internet time servers and maintains around 0.5-1 msec accuracy at all times with a polling interval of around 15 minutes. The clients poll the sender every 32 seconds and stay within 100 microseconds of the sender's time. This is pretty good performance for NTP without a local GPS-based time reference (which can be more than 10 times more stable and accurate). For the purposes of what I am doing here, this is enough accuracy.

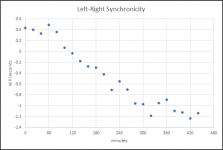

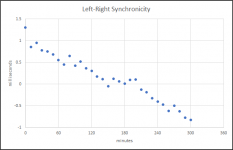

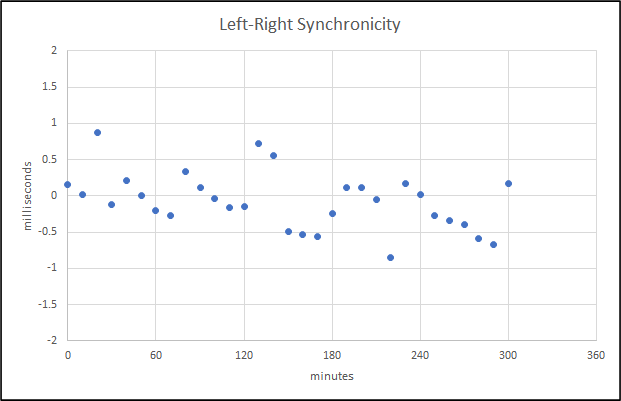

So, with that being said, I am attaching a plot of what I would consider to be "good" performance. The goal was to keep the left and right playback timing synchronized to within about +/- 1 millisecond, and this is more or less achieving that goal.

There is a slow drift in the synchronicity, and I am still trying to track down the source of that.

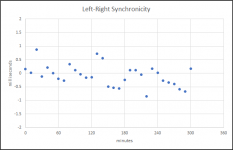

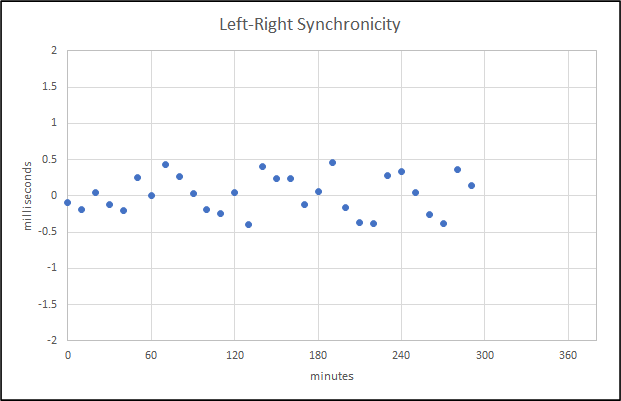

After a small tweak to Gstreamer's alsasink properties, I have been able to compensate for the slow drift shown in the previous two datasets.

The synchronicity between left and right speakers now remains within 1 millisecond, as shown in the measurement below. Most of the time there is less than a +/- 0.5 msec difference.

That looks very good. 0.5 ms means the head is roughly 17 cm closer to one of the speakers, IIUC.
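The delay-to-distance conversion in the comment above is just the speed of sound multiplied by the delay. A quick sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C; the real value shifts slightly with temperature):

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at roughly 20 °C


def delay_to_path_difference_cm(delay_ms: float) -> float:
    """Extra acoustic path length (cm) equivalent to a playback delay (ms)."""
    return SPEED_OF_SOUND_M_PER_S * (delay_ms / 1000.0) * 100.0


if __name__ == "__main__":
    for d in (0.5, 1.0, 3.0):
        print(f"{d} ms ~ {delay_to_path_difference_cm(d):.1f} cm")
```

On this basis 0.5 ms is roughly 17 cm and 1 ms roughly 34 cm of path difference.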

It means that the listener's perception is that the stereo image stays centered.

If you prefer to think about it in terms of "distance" that is fine, but I prefer to think about it in terms of what has been studied and reported for the precedence effect. I can support that with my own personal listening experiences as well. When there is a timing difference that is large enough (e.g. several milliseconds) it seems as if one of the speakers has been turned off. When you walk up to that speaker you find it is still playing, but at the listening chair the stereo image is shifted all the way away from it, over to the other side. It's an interesting effect. Once you stay below about 1 msec difference in playback timing, this effect is absent.

I think that many "bit perfect" audiophiles will look at the data above and scoff, but it comes down to whether or not you can hear any difference. Who cares if bits are perfect or not, if you cannot tell one way or another? To get the system synchronized in this way, samples are added or removed or the playback pointer moved as needed. Just back away from the dual channel scope and take a listen! It's otherwise all 24 bit PCM audio.

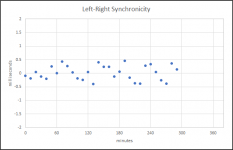

For this series of data, I performed a slightly different experiment. I previously had NTP on the "sender" synchronized to internet NTP time servers, and the two clients synchronized to the sender. For this experiment I wanted to see what happened if there was no internet connection available. The same NTP arrangement can be used, except the time reference can only be the local clock on the sender. That clock is running freely and is not necessarily keeping accurate time. But NTP can still be used to set the other clocks in the system to have the same time, and therefore the same rate, as this "reference". The funny thing is that NTP actually performs BETTER under these conditions. There is less time offset on the clients compared to when internet time servers are used. This is probably because the local clock on the sender Pi is not being modified/slewed to keep it synchronized to anything. By letting it run freely it is only changing smoothly and slowly from external disturbances such as temperature changes, etc. Since the system is set up in my basement where temperature changes are minimal, and the system is constantly running, the clock is probably quite stable even if it is not accurate. Under these conditions the offset on the clients is about 2 or 3 times lower than when using internet time servers as the reference. This amounts to under 50 microseconds of offset, often much less.

You can see how the lower offset improves the left-right synchronicity in the data below, compared to the last dataset I posted. This was collected over about 5 hours. I would have more data except my laptop decided to reboot to install a stupid Windoze update before the run was complete. Thanks Microsoft! But I have every reason to believe it will just keep going like this. Synchronicity is now within about +/- 500 microseconds. In terms of the goal I was hoping to achieve, and with the precedence effect in mind, this is excellent.

I will collect some additional data under the same conditions, and then will probably try to do some listening for a change. After that I might explore what happens when there is a larger drift in the reference clock. I think I can force NTP on the sender to have a fixed drift rate of my choosing, but I need to read up on NTP in a little more detail to confirm that. Even with a larger drift rate the system should still work properly; however, it would be good to get some confirmatory data. The other experiment I could try is to shut down one of the client Pis for several hours until it cools down to ambient temperature. I can then start it up and immediately begin streaming while it heats back up, performing regular left-right synchronicity measurements. This will test whether drift in a client's temperature disturbs the system synchronicity. On paper I expect NTP to be able to compensate for this, but it would be good to measure it.

The latest measurement data is attached, and shown below.

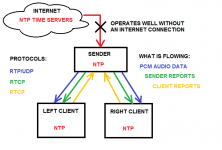

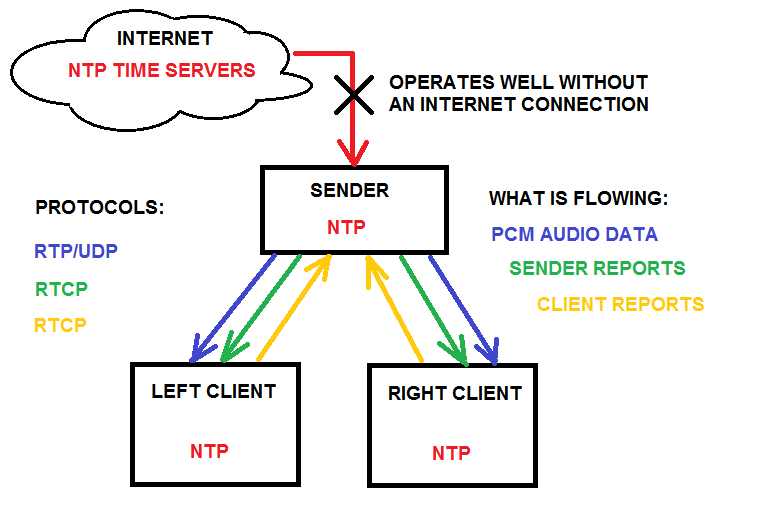

I have attached a schematic of the setup I am using to implement synchronized streaming and measure the synchrony, shown here:

The system hardware consists of three Raspberry Pi 4B configured as:

- A SENDER (or server)

- A LEFT CLIENT (or receiver)

- A RIGHT CLIENT (or receiver)

The sender Pi implements a WiFi hotspot on an unused 5.8GHz band, using hostapd and dnsmasq. Hostapd is configured to use WMM (Wi-Fi Multimedia, a quality-of-service prioritization scheme) for traffic. This results in a low-latency link between the three Pi computers.

Gstreamer is used to implement the streaming, which consists of RTP streaming plus bidirectional RTCP "reports". RTCP reports include timing information, and the combination of sender and receiver reports makes up a feedback loop. Using this feedback, the system can adjust the Gstreamer pipeline's current time and clock rate as necessary to synchronize the rendering of audio from the left and right clients.
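As background for the RTCP feedback loop: per RFC 3550, each RTCP sender report pairs a 64-bit NTP wall-clock timestamp with the RTP timestamp of the same instant, so a receiver can map any RTP timestamp in the stream onto the sender's clock. The sketch below shows only that mapping, including the 32-bit RTP timestamp wraparound; the function names are hypothetical, the 48 kHz clock rate in the usage is an assumption, and it does not attempt to reproduce what Gstreamer's RTP elements do internally.

```python
NTP_FRACTION_SCALE = 2 ** 32  # NTP timestamp: 32-bit seconds + 32-bit fraction
RTP_TS_MODULUS = 2 ** 32      # RTP timestamps are 32-bit and wrap around


def ntp64_to_seconds(msw: int, lsw: int) -> float:
    """Convert the two 32-bit words of an RTCP SR NTP timestamp to seconds."""
    return msw + lsw / NTP_FRACTION_SCALE


def rtp_delta(rtp_ts: int, rtp_ref: int) -> int:
    """Signed distance from rtp_ref to rtp_ts, allowing for 32-bit wraparound."""
    d = (rtp_ts - rtp_ref) % RTP_TS_MODULUS
    return d - RTP_TS_MODULUS if d >= RTP_TS_MODULUS // 2 else d


def rtp_to_sender_time(rtp_ts: int, sr_ntp_s: float, sr_rtp_ts: int,
                       clock_rate_hz: int) -> float:
    """Sender wall-clock time (NTP seconds) corresponding to rtp_ts, based
    on the (NTP, RTP) timestamp pair from the latest sender report."""
    return sr_ntp_s + rtp_delta(rtp_ts, sr_rtp_ts) / clock_rate_hz


if __name__ == "__main__":
    sr_ntp = ntp64_to_seconds(3_900_000_000, 2 ** 31)  # ...000.5 s
    sr_rtp = 4_294_967_000                             # just before wraparound
    pkt = (sr_rtp + 48_000) % RTP_TS_MODULUS           # one second later, wrapped
    print(rtp_to_sender_time(pkt, sr_ntp, sr_rtp, 48_000) - sr_ntp)
```

Once both clients can express "when this sample should play" in the sender's time base, each one can compare that with its own clock and correct its pipeline time and rate, which is the kind of feedback described in the paragraph above.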

The system also uses NTP to synchronize the OS system clocks on each Pi. The reference time used by NTP can be obtained from internet-based NTP time servers, OR the sender's local clock may be used as the reference for the clients.

To measure the synchronicity of the system, the following additional components and software are used in the manner described:

- An analog input (ADC) HAT is used on the "sender" Pi

- A 2-channel AMP HAT on each client Pi powers the tweeter and woofer of a DIY 2-way speaker

- A Windows laptop running ARTA ver 1.9.3 and a Focusrite Clarett 2Pre audio interface is used to perform measurements

- A microphone is placed close to the tweeter of each speaker system. Each mic is connected to an input channel of the 2Pre

- ARTA is operated in the "Record > Signal Time Record" mode

- Delay (implemented as part of Gstreamer playback chain on the clients) is used to introduce a playback timing difference between left and right speakers

- One speaker is delayed by X msec. The mic channel signal from this speaker will be used to trigger the ARTA measurement

- The other speaker is delayed by Y>X msec. The output from this speaker will be captured by the measurement

- The ARTA software sends an impulse out of its analog output, which is connected to the input channels of the sender analog ADC HAT.

- The impulse travels over WiFi to the clients, through the Gstreamer DSP chain, and emerges as audio from the speakers.

- The impulse is first reproduced by the speaker configured to have less delay. ARTA sees this signal and starts recording

- While ARTA is recording, the impulse is reproduced by the speaker configured to have more delay and is captured by the recording.

- The recording ends and the data is saved to file on the Windows machine.

The above measurement process was automated using the software AutoIt. With the automation, a series of measurement data files was created at a regular interval (e.g. every 10 min). This series makes up a data set that must be post-processed to determine the synchronicity.

Because there is a fixed, programmed delay difference between the two speakers and each recording is triggered by the earlier impulse, the time at which the later impulse appears in the recording, minus the delay difference programmed into the DSP, gives the timing difference between the two speakers. For example, if the earlier speaker is delayed by 10 msec and the later one by 20 msec, and the recording captures the impulse at 10 msec (the difference between 20 msec and 10 msec), we can say that the two speakers are in perfect synchrony. When the impulse arrival deviates from 10 msec, the deviation is the timing difference between the two speakers.
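The arithmetic in the example above reduces to error = t_impulse - (Y - X). A minimal sketch, with hypothetical names, assuming both microphones sit at the same distance from their tweeters so the acoustic path lengths cancel:

```python
def sync_error_ms(impulse_time_ms: float, delay_x_ms: float,
                  delay_y_ms: float) -> float:
    """Left-right timing error from one triggered recording.

    impulse_time_ms: time (from the trigger) at which the more-delayed
    speaker's impulse appears in the recording.
    delay_x_ms / delay_y_ms: delays programmed on the earlier (trigger) and
    later speakers, with delay_y_ms > delay_x_ms.
    Returns 0 for perfect synchrony; a positive value means the later
    speaker arrived later than the programmed delay difference."""
    if delay_y_ms <= delay_x_ms:
        raise ValueError("the later speaker must have the larger delay")
    return impulse_time_ms - (delay_y_ms - delay_x_ms)


if __name__ == "__main__":
    # The worked example from the text: X = 10 ms, Y = 20 ms, impulse at 10 ms.
    print(sync_error_ms(10.0, 10.0, 20.0))  # 0.0 -> perfect synchrony
```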

To process the measurement data, an Excel spreadsheet was used. Each audio record (consisting of time, amplitude pairs) is imported, and the time at which the impulse reaches its maximum amplitude is saved into a table. Each measurement in the data set is processed manually in this way, and then the data from the completed table is plotted. These are the plots that have been presented in this thread. The typical synchronicity is obtained by inspecting the scatter in the plotted data.
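The manual spreadsheet step (find the time of the maximum amplitude in each record, then compare it with the programmed delay difference) could also be scripted. This is a hypothetical sketch that assumes each record has been exported as plain comma-separated time, amplitude lines in milliseconds; the actual ARTA export format may differ and would need its own parser. It also picks the largest absolute amplitude so an inverted impulse is still found, which is a choice made here rather than necessarily what the spreadsheet did, and it reports the min/max error as a numeric stand-in for inspecting the scatter.

```python
import csv
import io
from typing import Iterable, List, Tuple


def read_record(text: str) -> List[Tuple[float, float]]:
    """Parse 'time,amplitude' lines into (time, amplitude) tuples.
    Lines that don't parse as two numbers (e.g. headers) are skipped."""
    points = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 2:
            continue
        try:
            points.append((float(row[0]), float(row[1])))
        except ValueError:
            continue
    return points


def peak_time(points: Iterable[Tuple[float, float]]) -> float:
    """Time of the largest absolute amplitude (taken as the impulse peak)."""
    points = list(points)
    if not points:
        raise ValueError("empty record")
    t, _ = max(points, key=lambda p: abs(p[1]))
    return t


def summarize(peak_times_ms: List[float],
              programmed_diff_ms: float) -> Tuple[float, float]:
    """Smallest and largest timing error across a data set."""
    errors = [t - programmed_diff_ms for t in peak_times_ms]
    return min(errors), max(errors)
```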

I have this working now. It uses the ALSA library in C to get accurate timestamps from the soundcard; the rest is written in JavaScript. Each client has local librespot and shairport instances for local listening. There's also an 'audioserv' RPI which hosts an instance of shairport and librespot for each combination of multiroom audio I want. This means the native Spotify and AirPlay interfaces on my phone are all I need to control my system. The clients that have TVs also have SPDIF capture for sound and an IR receiver, so I can use the volume buttons on the standard TV remote. All of the possible sources work on a priority system: if multiple sources want to send audio to a client, the one with the highest priority wins. The priority system works well when, for example, I'm listening to whole-house audio and the kids turn on the TV in the familyroom; the familyroom sink automatically switches to the TV. It's designed as modular blocks for lots of flexibility. The audioserv RPI is plugged directly into the router. All clients run on WiFi. The current client list is below:

- Sunroom
- Livingroom
- Kitchen
- Bedroom
- Laundry
- Familyroom

Combinations look like KitSun, KitLiv, KitSunLiv, KitSunLivFam, BedFam, ALL, etc. It's a bit cumbersome to write out all the combinations I want, but the benefit is I can select any single playback device or any pre-configured combination via the native spotify and airplay selection menus in my phone. They're abbreviated so they're readable in the airplay and librespot lists on my phone.

Most clients are Raspberry PI 4s with a Hifiberry DACPlus or DACPlusDSP and either one or two JBL LSR305 speakers. The Livingroom and Familyroom clients each run on two different PIs, partly so I didn't have to run a cable down the wall for TV audio, but mostly as an excuse to do it. Livingroom is a Denon 7.1 HDMI receiver driving old JBL L100s as an active 3-way, using the guide on Richard Taylor's website. The playback application / crossover part was running on an RPI, but the multichannel part broke after an update. I don't know whether the Raspbian version I used then had multichannel support, whether the real-time kernel I was messing with allowed it, or whether I actually recompiled the kernel back then to change the max channels. I decided I didn't want to mess with it anymore and moved the playback and crossover portion of that one client to an old laptop running Ubuntu Server, which was much less fussy for me.

There are individual applications written in JavaScript for source_spdif, source_librespot, source_shairport, controller, and playback_sink. Source_spdif and playback_sink also have parts written in C for access to the ALSA library. These 5 basic pieces are the building blocks of the system. They communicate via UDP sockets, so it makes little difference whether the applications all run on one system or on several. The logic is the same whether it's multiroom or not: local client, distributed client, or multiroom server. Any minor setting changes required happen in the systemd service file.
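To illustrate why UDP makes local versus remote placement nearly irrelevant: the sender only needs a host name and port, and 'localhost' versus another machine's name is the only difference. This Python sketch is a hypothetical stand-in (the applications described are JavaScript and C, and their message format isn't given in the post); the JSON message fields and the default port are invented for the example.

```python
import json
import socket
from typing import Any, Dict, Tuple

MAX_DATAGRAM = 65507  # largest UDP payload over IPv4
DEFAULT_PORT = 50000  # hypothetical controller port


def send_message(msg: Dict[str, Any], host: str = "localhost",
                 port: int = DEFAULT_PORT) -> None:
    """Send one JSON-encoded message as a single UDP datagram."""
    payload = json.dumps(msg).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


def receive_message(sock: socket.socket) -> Tuple[Dict[str, Any], Tuple[str, int]]:
    """Block until one datagram arrives and decode it as JSON."""
    data, addr = sock.recvfrom(MAX_DATAGRAM)
    return json.loads(data.decode("utf-8")), addr


if __name__ == "__main__":
    # Stand-in "controller" listening on loopback; port 0 = any free port.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as controller:
        controller.bind(("127.0.0.1", 0))
        controller.settimeout(2.0)
        port = controller.getsockname()[1]
        # Hypothetical message a source might send when it becomes active.
        send_message({"type": "source_active", "sinks": ["Kitchen"],
                      "priority": 5}, "127.0.0.1", port)
        msg, _ = receive_message(controller)
        print(msg)
```

Moving a component to another machine would then only mean passing that machine's host name instead of "localhost", which matches the "only the systemd service file changes" approach described above.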

Each client gets its own controller, audio source application(s), and playback application. Each audio source application is hardcoded with a list of desired playback sink names and a playback priority. By default the audio source only plays to sink 'localhost'. When active, the source application sends the desired playback device name(s) and priority data to the controller. If there is a playback client connected to the controller with a name equal to one of the sinks specified by the source, the controller tells that playback app what hostname and port to use to connect to the selected source. The playback app then connects directly to the source app specified by the controller to negotiate the audio settings and receive audio data. If there are multiple active sources for a playback sink, the controller picks the source with the highest priority. The source apps, playback app, and controller all default to localhost. This way I can copy a working RPI image, change only the hostname, and have a new client up and running fairly easily. The new client's hostname shows up in AirPlay and Spotify, and it also works for the multiroom audio source 'All' (more on that below). You can run any of the source or playback applications on another computer; you just have to override 'localhost' with the name of the computer where the controller is.
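The controller's selection rule as described (for each connected playback sink, take the highest-priority active source that names it) can be sketched as follows. The data layout, priorities, ports and names are hypothetical, chosen to mirror the whole-house vs TV example from earlier in the post; the real controller is written in JavaScript and its internals aren't shown in the thread.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class SourceAnnouncement:
    """What an active source tells the controller (hypothetical fields)."""
    name: str
    host: str
    port: int
    sinks: Tuple[str, ...]
    priority: int


def assign_sources(active: Iterable[SourceAnnouncement],
                   connected_sinks: Iterable[str]
                   ) -> Dict[str, Optional[SourceAnnouncement]]:
    """For each connected playback sink, pick the highest-priority active
    source that lists it; None if no active source wants that sink.
    On equal priority, the first source in `active` wins."""
    active = list(active)
    assignment: Dict[str, Optional[SourceAnnouncement]] = {}
    for sink in connected_sinks:
        candidates = [s for s in active if sink in s.sinks]
        assignment[sink] = max(candidates, key=lambda s: s.priority,
                               default=None)
    return assignment


if __name__ == "__main__":
    whole_house = SourceAnnouncement("ALL", "audioserv", 5001,
                                     ("Kitchen", "Familyroom", "Sunroom"), 1)
    tv = SourceAnnouncement("Familyroom_spdif", "localhost", 5002,
                            ("Familyroom",), 10)
    for sink, src in assign_sources([whole_house, tv],
                                    ["Kitchen", "Familyroom", "Bedroom"]).items():
        # The controller would now tell each playback app src.host/src.port.
        print(sink, "->", src.name if src else "idle")
```

Recomputing the assignment whenever a source starts or stops gives the automatic TV takeover described earlier: the higher-priority SPDIF source claims the Familyroom sink while the other rooms keep playing the whole-house stream.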

The multiroom part basically works the same way. The audioserv RPI runs the same controller and audio source applications as the clients. An instance of an audio source application is run on audioserv for each desired multiroom combination. These are named things like KitSun, KitLiv, KitSunLiv, KitSunLivFam, BedFam, ALL, etc. Each is hardcoded to play to the playback sinks in its name. It's a bit cumbersome to have an instance for each desired combination, but the benefit is that they can be selected from the native Spotify and AirPlay menus on my phone. All of the audio source applications on audioserv connect to the controller on audioserv. The controller app has an extra piece of code, run only on the client controllers, that connects each client controller to the audioserv controller as if it were a playback application. This allows the multiroom sources to cascade down through the clients and keeps the playback selection priority system one cohesive thing, whether the source is local, remote, or multiroom.

Again, there's nothing special or different about the code on the audioserv RPI. I could turn off audioserv and hardcode all the playback clients to connect to the Livingroom controller, for example, and the whole house would play whatever is happening in the Livingroom. It's just that then I couldn't easily opt out of multiroom. Another downside is that the source-to-router hop would then also be on WiFi, whereas audioserv sits next to my router and is plugged in with copper ethernet.

The audio time synchronization code runs even if I'm not doing multiroom. I thought about disabling it when there's only one audio sink, but I typically only use a single sink when the source and sink are on the same machine. In that case the synchronization logic is stable enough that it isn't adding or removing samples after the initial synchronization anyway, so I never bothered. It also keeps the code variations down and greatly reduces the configuration complexity. The one catch is fighting with the HDMI clock, but I'm still trying to figure out whether I can tweak the HDMI clock via software instead.

The audio synchronization itself starts by timestamping each audio period at the source and assigning it an index number. For SPDIF the timestamp is pulled from ALSA. For Librespot and Shairport the timestamp is created by adding on the period_time, scaled by the NTP scaler, for each period of audio sent. On the playback side the source timestamp is compared to the timestamp of the playback soundcard, and samples are added or removed as necessary to bring the playback sink into synchronization with the source. Using multiples of the same soundcard, each with its own onboard clock, means not much adjustment is needed to maintain sync. When testing this part I'd run the left speaker on one RPI and the right on another. From memory, I could get up to a 7 kHz sine wave before I could hear the adjustments. With music the adjustments were inaudible to me. To use the ALSA library that part had to be written in C; everything else is written in JavaScript. All clients use NTP to synchronize time to the audioserv PI on my local network as fast as I could get it to go, every 8 seconds. Audioserv in turn syncs to a non-local time server using standard settings.
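The timestamp-and-correct scheme described above can be sketched in a few lines. This is a hypothetical Python illustration, not the C/JavaScript code from the post: rate_scale is an assumed stand-in for the "NTP scaler" (e.g. 1.0 plus the clock's ppm correction times 1e-6), target_latency_s is an assumed fixed end-to-end delay, and the dead band is an added assumption to avoid reacting to timestamp jitter. How frames are actually inserted or removed (duplicating, dropping, or interpolating samples) is left out.

```python
from typing import List


def period_timestamps(start_s: float, period_frames: int, sample_rate_hz: int,
                      rate_scale: float, count: int) -> List[float]:
    """Timestamps for consecutive audio periods: the start time plus the
    period index times the nominal period duration, scaled by rate_scale
    (hypothetical stand-in for the 'NTP scaler')."""
    period_s = period_frames / sample_rate_hz
    return [start_s + i * period_s * rate_scale for i in range(count)]


def correction_frames(source_ts_s: float, playout_ts_s: float,
                      target_latency_s: float, sample_rate_hz: int,
                      deadband_frames: int = 2) -> int:
    """Frames to drop (positive) or insert (negative) so that a period
    stamped source_ts_s plays at source_ts_s + target_latency_s.

    playout_ts_s is when the sink will actually play that period, e.g.
    derived from the soundcard's ALSA timestamp plus the queued delay.
    Errors within deadband_frames are ignored."""
    error_s = (playout_ts_s - source_ts_s) - target_latency_s
    frames = round(error_s * sample_rate_hz)
    return 0 if abs(frames) <= deadband_frames else frames


if __name__ == "__main__":
    print(period_timestamps(0.0, 480, 48_000, 1.0, 3))
    # Sink would play this period 10 frames late -> drop 10 frames.
    print(correction_frames(0.0, 0.5 + 10 / 48_000, 0.5, 48_000))
```

Because every client compares against the same source timestamps in an NTP-aligned time base, each one converges on the same playout instant independently, which is what keeps the left and right speakers in step.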

I actually run ecasound via stdio before the audio synchronization stage, which takes ecasound out of the synchronization calculation. I don't have to do any fudge-factor tweaks between playback devices; the ALSA timestamp seems to work well. I didn't even have to do anything special for the Denon receiver over HDMI. The Denon receiver's delay must be part of the HDMI EDID information or something, because I didn't do anything special with the timestamps there and it's in perfect sync with the other, more standard setups.

For volume I modified both shairport and librespot to always output audio at 100% and print the current volume to stderr. The volume is then scaled to a range of -60 dB to 0 dB and passed along to the playback application. The idea is to control volume as late in the chain as possible. I've read plenty saying this is unnecessary, but I just couldn't get past my old analog thinking, I guess. I must say it was fascinating to see a dynamic range of something like +/-15 in the PCM data at low volume and have it still sound good. The playback application calls volume.js in the home directory, which is set up to control whatever volume control that client has. The HiFiBerry DACs have a hardware volume control addressable from within ALSA, so I use amixer to set the volume there. The Denon has ethernet and can be controlled over telnet, so I use that to control the volume there. The Denon goes to +15 dB or something like that, but I'm happy to leave that last bit of reserve to local control only. Safer that way. It's relatively simple, but still kind of cool to adjust the volume in Spotify on my phone and see it change on the front of the Denon receiver when you think of all the hops and APIs involved.
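The -60 dB to 0 dB scaling could look like the sketch below. It assumes the incoming volume has already been normalized to 0-100 % (shairport and librespot report volume in their own units, and the post doesn't say how that mapping was done) and maps it linearly in dB, which is one common choice. db_to_gain shows the equivalent linear amplitude factor, relevant if the scaling were applied to PCM samples rather than handed to a hardware or receiver volume control.

```python
MIN_DB = -60.0  # bottom of the range described in the post
MAX_DB = 0.0    # top of the range


def percent_to_db(percent: float) -> float:
    """Map a 0-100 % volume linearly onto MIN_DB..MAX_DB, clamping
    out-of-range input."""
    p = min(max(percent, 0.0), 100.0)
    return MIN_DB + (MAX_DB - MIN_DB) * p / 100.0


def db_to_gain(db: float) -> float:
    """Convert a level in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    for pct in (0, 50, 100):
        db = percent_to_db(pct)
        print(f"{pct:3d} % -> {db:6.1f} dB -> gain {db_to_gain(db):.4f}")
```

At the bottom of the range the linear gain is 0.001, i.e. samples are scaled down by a factor of 1000, which is why the PCM values become so small at low volume.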

I'd be happy to share whatever of this you want. I could help get it going on your equipment if you want. I suppose I could do a write-up on how to install it if you really wanted.

If you're interested in exchanging efforts, I'm just getting into understanding RTA measurements and time/phase alignment of my active crossover setup and could use some pointers. It's how I stumbled on your post here. Also, the more I increase the buffer on ecasound, the more my drivers seem to be out of time alignment, by a lot, or there's some kind of feedback echo recycled circular buffer weirdness going on. Or something.

Mike

Sunroom

Livingroom

Kitchen

Bedroom

Laundry

Familyroom

Combinations look like KitSun, KitLiv, KitSunLiv, KitSunLivFam, BedFam, ALL, etc. It's a bit cumbersome to write out all the combinations I want, but the benefit is that I can select any single playback device or any preconfigured combination via the native Spotify and AirPlay selection menus on my phone. They're abbreviated so they're readable in the AirPlay and Librespot lists on my phone.

Most clients are Raspberry Pi 4s with a HiFiBerry DACPlus or DACPlusDSP and either one or two JBL LSR305 speakers. The Livingroom and Familyroom clients each run on two different Pis, partly so I didn't have to run a cable down the wall for TV audio, but mostly as an excuse to do it. Livingroom is a Denon 7.1 HDMI receiver running old JBL L100s as an active 3-way using the guide on Richard Taylor's website. The playback application / crossover part was running on an RPI, but the multichannel part broke after an update. I don't know whether the Raspbian version I used then had multichannel, whether it was the real-time kernel I was messing with that allowed it, or whether I actually recompiled the kernel back then to change max channels. I decided I didn't want to mess with it anymore and moved the playback and crossover portion of that one client to an old laptop running Ubuntu Server, which was much less fussy for me.

There are individual applications written in JavaScript for source_spdif, source_librespot, source_shairport, controller, and playback_sink. Source_spdif and playback_sink also have parts written in C for access to the ALSA library. These 5 basic pieces are the building blocks of the system. They communicate via UDP sockets, so there's not much difference whether the applications are all on one system or on multiple. It's the same logic regardless of whether it's multiroom or not: local client, distributed client, or multiroom server. Any minor setting changes required happen in the systemd service file.
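To illustrate why it doesn't matter whether the pieces share a machine, here is a minimal Python sketch of passing JSON messages over UDP. The JSON encoding, the message fields, and the function names are assumptions for illustration, not the project's actual wire format.

```python
import json
import socket


def send_message(sock: socket.socket, address: tuple, message: dict) -> None:
    """Serialize a message as JSON and send it as a single UDP datagram."""
    sock.sendto(json.dumps(message).encode("utf-8"), address)


def receive_message(sock: socket.socket, bufsize: int = 65535) -> tuple:
    """Block until one datagram arrives and decode it back into a dict."""
    data, sender = sock.recvfrom(bufsize)
    return json.loads(data.decode("utf-8")), sender


def roundtrip(message: dict, host: str = "127.0.0.1") -> dict:
    """Send a message to a receiver bound on `host` and return what arrived.

    Both ends live in one process here; on separate machines the code is
    the same and only the destination address differs.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind((host, 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2.0)
        send_message(sender, receiver.getsockname(), message)
        received, _ = receive_message(receiver)
        return received
```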

Each client gets its own controller, audio source application(s), and playback application. Each audio source application is hardcoded with a list of desired playback sink names and a playback priority. By default the audio source only plays to sink 'localhost'. When active, the source application sends the desired playback device name(s) and priority data to the controller. If a playback client is connected to the controller with a name equal to one of the sinks specified by the source, the controller tells that playback app what hostname and port to use to connect to the selected source. The playback app then connects directly to the source app specified by the controller to negotiate the audio settings and receive audio data. If there are multiple active sources for a playback sink, the controller picks the source with the highest priority. The source apps, playback app, and controller all default to localhost. This way I can copy a working RPI image, change only the hostname, and have a new client up and running fairly easily. I'll see this client's hostname show up in AirPlay and Spotify, and it'll also work for the multiroom audio source 'All'. More on that below. You can run any of the source or playback applications on another computer; you just have to override 'localhost' with the name of the computer where the controller is.
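The controller's selection rule can be sketched as follows. `SourceAnnouncement`, its fields, the port numbers in the usage, and the "higher number wins" priority convention are hypothetical; only the rule itself (pick the highest-priority active source that lists this sink, with sources defaulting to 'localhost') comes from the description above.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SourceAnnouncement:
    name: str      # e.g. "KitSun" or a local Spotify source
    host: str      # where the playback app should connect
    port: int
    priority: int  # assumed: higher number wins
    sinks: list = field(default_factory=lambda: ["localhost"])  # default target


def choose_source(sink_name: str,
                  active_sources: list) -> Optional[SourceAnnouncement]:
    """Pick the highest-priority active source that targets this sink."""
    candidates = [s for s in active_sources if sink_name in s.sinks]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.priority)
```

For example, if a local source and a whole-house source both list "Kitchen", the controller would hand the Kitchen playback app the host and port of whichever has the larger priority.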

The multiroom part basically works the same way. The audioserver RPI runs the same controller and audio source applications as the clients. An instance of an audio source application is run on audioserv for each desired multiroom combination. These are named things like KitSun, KitLiv, KitSunLiv, KitSunLivFam, BedFam, ALL, etc. Each is hardcoded to play to the playback sinks in its name. It's a bit cumbersome to have an instance for each of the desired combinations, but the benefit is that they can be selected with the native menus for Spotify and AirPlay on my phone. All of the audio source applications on audioserv connect to the controller on audioserv. The controller app has an extra piece of code that only runs on the client controllers; it connects the client controller to the audioserv controller as if it were a playback application. This allows the multiroom sources to cascade down through the clients and keeps the playback selection priority system one cohesive thing, regardless of whether it's a local, remote, or multiroom source.

Again, there's nothing special or different about the code on the audioserv RPI. I could turn off audioserv and hardcode all the playback clients to connect to the Livingroom controller, for example, and the whole house would play whatever is happening in the Livingroom. It's just that then I couldn't easily not do multiroom. Another negative is that the source-to-router hop would then also be on Wi-Fi, whereas audioserv sits next to my router and is plugged in with copper ethernet.

The audio time synchronization code runs even if I'm not doing multiroom. I thought about disabling it if there's only one audio sink, but I typically only ever use a single sink when the source and sink are on the same machine. In that case the synchronization logic is stable enough that it's not adding or removing samples after the initial synchronization anyway, so I never bothered. It also keeps the code variations down and greatly reduces the configuration complexity. The one catch with this is fighting with the HDMI clock, but I'm still trying to figure out whether I can tweak the HDMI clock via software instead.

For this series of data, I performed a slightly different experiment. I previously had NTP on the "sender" synchronized to internet NTP time servers, and the two clients synchronized to the sender. For this experiment I wanted to see what happened if there was no internet connection available. The same NTP arrangement can be used, except the time reference can only be the local clock on the sender. That clock is running freely and is not necessarily keeping accurate time. But NTP can still be used to set the other clocks in the system to have the same time, and therefore the same rate, as this "reference". The funny thing is that NTP actually performs BETTER under these conditions. There is less time offset on the clients compared to when internet time servers are used. This is probably because the local clock on the sender Pi is not being modified/slewed to keep it synchronized to anything. By letting it run freely it is only changing smoothly and slowly from external disturbances such as temperature changes, etc. Since the system is set up in my basement where temperature changes are minimal, and the system is constantly running, the clock is probably quite stable even if it is not accurate. Under these conditions the offset on the clients is about 2 or 3 times lower than when using internet time servers as the reference. This amounts to under 50 microseconds of offset, often much less.

You can see how the lower offset improves the left-right synchronicity in the data below, compared to the last dataset I posted. This was collected over about 5 hours. I would have more data except my laptop decided to reboot to install a stupid Windoze update before the run was complete. Thanks Microsoft! But I have every reason to believe it will just keep going like this. Synchronicity is now within about +/- 500 microseconds. In terms of the goal I was hoping to achieve, and with the precedence effect in mind, this is excellent.
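To put +/- 500 microseconds in perspective, it is about 22 samples at 44.1 kHz, or roughly 17 cm of extra path length to one speaker. A small sketch of that arithmetic, assuming a speed of sound of 343 m/s (the function names are illustrative):

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at roughly 20 degrees C


def offset_in_samples(offset_s: float, sample_rate: int = 44100) -> float:
    """Express a left-right timing offset as a number of sample periods."""
    return offset_s * sample_rate


def offset_as_path_difference_m(offset_s: float) -> float:
    """Distance sound travels during the offset, i.e. the equivalent
    change in listener-to-speaker path length."""
    return offset_s * SPEED_OF_SOUND_M_PER_S
```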

I will collect some additional data under the same conditions, and then will probably try to do some listening for a change. After that I might explore what happens when there is a larger drift in the reference clock. I think I can force NTP on the sender to have a fixed drift rate of my choosing, but I need to read up on NTP in a little more detail to confirm that. Even with a larger drift rate the system should still work properly; however, it would be good to get some confirmatory data. The other experiment I could try is to shut down one of the client Pis for several hours until it cools down to ambient temperature. I can then start it up and immediately begin streaming, performing regular left-right synchronicity measurements while it heats back up. This will help test whether drift in a client's temperature disturbs the system synchronicity. On paper I expect NTP to be able to compensate for this, but it would be good to measure it.

The latest measurement data is attached, and shown below.

I had my system set up using an isolated master NTP clock on a local server that all my clients connect to. It seemed to work fine. In my observations NTP wouldn't make a step change of the system time unless things got really out of whack. Instead it tries to make small tweaks to its time scaler to bring things back in line. The biggest issues seemed to come when NTP itself adjusted its own time scaler, but I didn't know that's what was happening yet. I had previously tried to compensate for accumulating error by taking a running average to determine the rate at which that error accumulated, then using that measured drift rate to correct for drift. This seemed to work well until something happened to upset my calculations. It would stabilize and work again on its own, but not without a big interruption in the audio. Once I figured out that the NTP scaler change was what was causing my issue, I figured out how to read that scaler instead of guessing at it. It's basically 1 + (1 / (1000000 / the value in /var/lib/ntp/ntp.drift)), i.e. 1 + drift / 1,000,000, since the drift file holds the frequency correction in parts per million. If you're doing something like creating an audio timestamp by constantly adding in the period_time, multiply the period_time by that scaler first and it won't drift away from the system time anymore.
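Reading the scaler instead of estimating it might look like the sketch below. It assumes the drift file contains a single ppm value, as ntpd writes it; the path varies between distributions and NTP implementations, and the function names are hypothetical. The multiply-by-scaler step follows the description above rather than any documented rule.

```python
from pathlib import Path

DRIFT_FILE = "/var/lib/ntp/ntp.drift"  # location varies by distro / NTP implementation


def ntp_scaler(drift_ppm: float) -> float:
    """1 + (1 / (1000000 / drift)) rewritten as 1 + drift / 1e6.

    The rewritten form also avoids a division by zero when the drift is 0.
    """
    return 1.0 + drift_ppm / 1_000_000.0


def read_ntp_scaler(path: str = DRIFT_FILE) -> float:
    """Read the frequency correction (ppm) saved by ntpd and turn it into a scaler."""
    drift_ppm = float(Path(path).read_text().split()[0])
    return ntp_scaler(drift_ppm)


def next_timestamp(previous: float, period_time: float, scaler: float) -> float:
    """Advance the audio timestamp by one period, scaled by the NTP scaler."""
    return previous + period_time * scaler
```

Note that ntpd only rewrites the drift file periodically, so a value read at startup may lag the frequency correction currently in effect.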

Once I moved to the ALSA API library to get an accurate timestamp each time I send a period of audio, I didn't need NTP correction anymore as ALSA takes care of that for you.

The only time I use the NTP correction scaler now is generating timestamps and metering data out of librespot and shairport to be a multiroom audio source.

Eventually I got annoyed at how far off the timestamp would drift from the correct time. It made my head hurt just a little more when troubleshooting issues, so I turned on NTP correction of the audioserver with whatever the default settings are. All my clients sync to the audioserver every 8 seconds. Jitter on the clients I care about is typically less than 0.02 milliseconds. Jitter on the worst client is 1.5 milliseconds, but that's the kitchen, so I'm not too worried about it. I'm not exactly sure why the kitchen is so bad; it's fairly close to an access point. I typically look at what NTP itself reports for offset and jitter on each client using this command:

watch -n1 ntpq -pn
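For logging rather than watching, the `ntpq -pn` peer table can be parsed. This is a minimal sketch that assumes the classic ten-column layout (remote, refid, st, t, when, poll, reach, delay, offset, jitter, with times in milliseconds); other ntpq versions may format lines differently. `parse_ntpq_peers` and `query_ntpq` are hypothetical helper names.

```python
import subprocess


def parse_ntpq_peers(output: str) -> list:
    """Parse `ntpq -pn` peer lines into dicts (delay/offset/jitter in ms).

    Assumes the classic ten-column layout; wrapped or unusual lines are skipped.
    """
    peers = []
    for line in output.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("remote") or stripped.startswith("="):
            continue  # blank line, header, or separator
        tally, fields = line[0], line[1:].split()
        if len(fields) < 10:
            continue
        peers.append({
            "tally": tally,  # '*' marks the peer currently selected for sync
            "remote": fields[0],
            "refid": fields[1],
            "delay_ms": float(fields[7]),
            "offset_ms": float(fields[8]),
            "jitter_ms": float(fields[9]),
        })
    return peers


def query_ntpq() -> list:
    """Run `ntpq -pn` (it must be installed) and parse its peer table."""
    result = subprocess.run(["ntpq", "-pn"], capture_output=True, text=True,
                            check=True)
    return parse_ntpq_peers(result.stdout)
```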

Mike

I have thought about this problem some. GPS came to mind, but in reality it is overkill. GPS gives you absolute time; all we need is relative time to sync.

Some day I want to build master and satellite DAC boards with RF sync. They would use a signal similar to your garage door opener, in the MHz region. This would give a fraction of a degree of phase stability at 1 kHz! The master would send out a ping, and the satellites would sync. You could sync up to NTP on some Nth iteration of the RF ping. One could theoretically do this with Wi-Fi alone, if you could have some kind of hardware interrupt, but it seems like a separate RF MHz signal would be easier to implement. I actually think this is what Sonos does.

To my mind this is the cheapest and best way to do this. However, it does require some legwork. Nevertheless, it is better than trying to do this over network Wi-Fi alone, which is bound to cause jitter in a clock.
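To put "a fraction of a degree at 1 kHz" in numbers: one degree at 1 kHz is 1/360,000 s, about 2.8 microseconds. For comparison, 0.02 ms of timing jitter is about 7.2 degrees at 1 kHz. A small sketch of the conversion (function names are illustrative):

```python
def phase_error_deg(time_error_s: float, freq_hz: float) -> float:
    """Phase shift produced by a timing error at a given frequency."""
    return 360.0 * freq_hz * time_error_s


def time_error_for_phase(phase_deg: float, freq_hz: float) -> float:
    """Largest timing error that stays within a given phase error."""
    return phase_deg / (360.0 * freq_hz)
```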

Here is my working project on GitHub: mikeszila/smartsoundsync

It can be installed and running pretty quickly with the command below. I'd only run this on a fresh Linux install dedicated to this purpose, certainly not your everyday computer, mostly because of the NTP changes it makes.

cd && wget https://raw.githubusercontent.com/mikeszila/smartsoundsync/main/install.sh -O - | sudo /bin/bash

You'll have to modify the config file at /usr/local/etc/smartsoundsyncconf.js to give it the name of your soundcard and volume mixer, then run the above script again; beyond that it should just run. The same script is used to set up the multiroom server. A multiroom server config file example is at /usr/local/lib/smartsoundsync/config_examples/multiroomserverconf.js

I'll post updates as I get more working. I should have a config example up for an active 3-way using a 7.1 HDMI receiver in a few days.

Hi Mike,

Glad you persisted with your project. That looks like an awesome system, and the level of synchrony is very good! Thanks for sharing it here. I hope some people will give it a try.

Thanks for taking a look! I've been working on it pretty consistently since my previous posts, mostly inspired by the fact that I was wrong about some of the things I said. NTP is not sufficient to keep source and sink close enough to avoid having to mess with samples to keep them in sync. To fix this I basically passed the error at the sink back to the source, to pull data faster or slower out of the source buffer there. With a single sink this avoids cutting or stuffing samples completely. With multiple sinks the error of all the sinks is averaged and applied to the source, so the closer the clocks of your currently playing sinks, the less cutting and stuffing of samples has to happen across them all. This only works when you have control of the upstream data flow. I've only implemented it with Spotify so far. Spotify/Librespot will let you pull data out a little faster/slower to allow for stuff like this. AirPlay might allow for this; I'm not sure. With SPDIF I think we're pretty much out of luck.
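The averaging step can be sketched as follows. It assumes each sink reports a signed error in samples and that the source simply shifts its read rate by the mean; the names are hypothetical. With one sink the residual is always zero, which is the bit-perfect case, and with several sinks each one only corrects its distance from the average.

```python
def source_adjustment(sink_errors: list) -> float:
    """Average of the per-sink errors; the source buffer is drained faster or
    slower by this amount. Units (samples of accumulated error) are assumed."""
    if not sink_errors:
        return 0.0
    return sum(sink_errors) / len(sink_errors)


def remaining_sink_errors(sink_errors: list) -> list:
    """Error each sink still has to correct locally after the source adjusts."""
    shift = source_adjustment(sink_errors)
    return [error - shift for error in sink_errors]
```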

If you run 'journalctl -u smartsoundsyncsink.service -f' you'll see a line of output for each sample adjustment. AdjustSource is simulated at the source by pulling the data out of the source buffer 1 sample faster/slower and does not affect audio quality. AdjustSink is an actual adjustment cutting or stuffing an audio sample. Effort is made to do that as little as physically possible, and I think it's pretty close.

The sync logic currently lets the error reach +/- 2 samples before adjusting by 1 sample to correct. The +/- 2 window was to get rid of an overcorrection/jitter issue I was having, but I think I can get it down to +/- 1 sample. Even so, 4 samples of error is 0.09 ms at 44.1 kHz, which I'm assuming is less than the clock error NTP can maintain, but I'm not sure. On the list of things to do is to get an analog capture card and figure out how to actually measure the error. If you've done that I'd be very interested in the results. Based on what I see the sample adjust logic doing, I'd expect to see minimal jitter, but I'm not sure whether NTP is allowing any sort of constant offset in the system clocks to linger. NTP's own output says no, which you can see by running 'watch -n1 ntpq -pn'.
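The +/- 2 sample deadband can be sketched like this. The sign convention and function names are assumptions; the threshold value and the 1-sample step come from the description above. The second function reproduces the 0.09 ms figure for a 4-sample window at 44.1 kHz.

```python
def sample_correction(lag_samples: float, threshold: float = 2.0) -> int:
    """Return -1 (drop a sample), +1 (insert a sample) or 0.

    Assumed sign convention: lag_samples > 0 means the sink is playing late,
    lag_samples < 0 means it is playing early (its clock is fast).
    """
    if lag_samples >= threshold:
        return -1  # late: drop one sample to catch up
    if lag_samples <= -threshold:
        return +1  # early: insert one sample to wait for the source
    return 0       # inside the deadband: leave the audio untouched


def deadband_width_ms(threshold: float, sample_rate: int = 44100) -> float:
    """Total width of the +/- threshold window in milliseconds."""
    return 2.0 * threshold / sample_rate * 1000.0
```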

One thing that may offend your ears is when a positive correction is needed I'm simply inserting a single silent sample right now. If that happens at the right part of the audio you'll hear a small click. I plan to make the new sample the average of the sample before and after the new one, just have to do it. You'll only get this if your sink is faster than your source, and only if you're playing audio to more than one sink. I'll fix it I promise! If you're aware of a better sample adjustment method I'd be very interested in hearing it.
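The planned fix, averaging the neighboring samples instead of inserting silence, amounts to a one-sample linear interpolation. A minimal sketch, assuming integer PCM frames held as tuples (one value per channel); the function name is hypothetical.

```python
def insert_interpolated_frame(frames: list, pos: int) -> list:
    """Insert a frame at `pos` whose samples average the neighboring frames,
    instead of inserting a silent (all-zero) frame.

    Each frame is a tuple with one integer PCM sample per channel.
    """
    if not 0 < pos < len(frames):
        raise ValueError("pos must have a frame on both sides")
    before, after = frames[pos - 1], frames[pos]
    middle = tuple((a + b) // 2 for a, b in zip(before, after))
    return frames[:pos] + [middle] + frames[pos:]
```

Because the inserted value lies between its neighbors, the waveform has no sudden jump to zero, which is what makes a silent sample audible as a click on sustained tones.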

Thanks,

Michael Szilagyi

@mikesz

I talked about how I measured the synchronicity between a sender and two sinks, each running on independent hardware, in post 41. I use ARTA. The latest version has a mode called "Signal Time Record" that records N samples from one channel based on a trigger from another channel. This mode can use a variety of signals to trigger the recording of the time record, and I used an impulse for this.

It's a bit of a manual process because you have to account for the fact that the channel being recorded is running slower or faster than the trigger channel. If it is running faster, the impulse reaches it before the recording is triggered, and you end up recording only the tail of it and not the peak. To get around this, if I expected the delay to be no more than 10 msec, for example, I would delay the channel that I am recording by 10 msec, which has the effect of moving the center of the recorded impulse to 10 msec after recording starts. To get the offset/error between systems I imported the data into Excel and used a worksheet function to find at what time the peak maximum in the dataset occurred, and then I subtracted whatever delay I had used to get the inter-channel offset.
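The Excel step (find when the peak occurs, then subtract the deliberate delay) can be sketched as below. It assumes the recorded channel is available as a list of samples at a known sample rate; taking the absolute value so that an inverted impulse is still found is a choice made here, not something described above. The result's resolution is one sample period.

```python
def inter_channel_offset_s(recorded: list, sample_rate: int,
                           applied_delay_s: float) -> float:
    """Offset between channels from a triggered impulse recording.

    Finds the sample with the largest magnitude (the impulse peak), converts
    its position to seconds, and subtracts the delay that was deliberately
    added to keep the peak inside the recording window.
    """
    peak_index = max(range(len(recorded)), key=lambda i: abs(recorded[i]))
    return peak_index / sample_rate - applied_delay_s
```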

The hardware you need is a Windows computer running the latest ARTA version, and a card that can operate in duplex mode (simultaneously playback and record) with at least two capture channels.

By the way, Gstreamer (the engine for my synchronized streaming app) also inserts zero-valued samples, so sometimes the playback sounds like you are listening to a vinyl record that has dust on it. There can be long stretches when this does not happen, and it is only obvious on certain types of music with long extended tones where a pop sticks out.

The above mostly speaks to where I'm at with the design goals of the playback section of this. Those goals are:

1- Bit perfect audio when playing to a single sink. Adjust the source rate to match the sink when possible so sample adjustment is not required.

2- Using the same code for playing to a single sink as when playing multiroom for ease of code maintenance as well as simplicity in configuration while maintaining modularity and flexibility.

3- Multiroom synchronization as tight as is possible while affecting the audio as little as is possible. Adjust the source rate to fall in the middle of the currently playing sinks to minimize corrections required at each sink, all using the same exact code that provides bit perfect audio with a single sink.
