Hello,

I'm starting a new project, inspired by many ideas collected here and there. Basic design:

- LxMini speakers,

- two similar FX-Audio D802 FDA USB amps (no analog conversion in the chain)

- RPI (or other similar) as source + Crossover/DSP, exporting 4 channels (one USB for Left tweeter + Left Woofer driven by the first D802, the other USB for Right tweeter + Right Woofer driven by the second D802).

I have read a lot, including the video of Charlie Laub's speech at Burning Amp 2015, but it is not clear to me whether I must expect time sync issues between the R and L speakers because of the use of 2 distinct USB links and 2 amps, or whether it should work.

I imagine that using the same USB and amp for the tweeter and woofer of the same speaker should provide a "perfect" sync between those two.

What would be your idea on that topic?

Best regards,

JMF

The time sync issue arises from different clocks feeding the DACs.

IF your USB soundcards use adaptive mode (i.e. clock derived from the incoming USB stream) AND all USB soundcards are hooked to the same USB controller, they will all share a single clock (that of the USB controller) and will not drift apart.

I could not find out by googling which usb-audio mode the D802 uses. The output of the linux lsusb -v command would tell all the details.
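As a rough sketch of how that check could be scripted: `lsusb -v` prints a `Transfer Type` and a `Synch Type` line for each endpoint, and the audio streaming endpoints are the isochronous ones. The helper name `usb_sync_types` is made up for this example, and the exact column layout of the output is an assumption that may differ between usbutils versions.

```shell
# usb_sync_types: read `lsusb -v` output on stdin and print the distinct
# "Synch Type" values of isochronous endpoints only (hypothetical helper).
usb_sync_types() {
    awk '
        # Remember whether the endpoint being described is isochronous.
        /Transfer Type/ { iso = ($3 == "Isochronous") }
        # Print the synch type (Adaptive, Asynchronous, ...) for those only.
        /Synch Type/ && iso { print $3 }
    ' | sort -u
}

# Possible usage once the amp is plugged in (may need root for full output):
#   lsusb -v 2>/dev/null | usb_sync_types
```

If this prints `Adaptive` for the D802, the shared-clock reasoning above would apply; `Asynchronous` would mean each amp runs on its own clock.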

The amp should arrive this week (I found one second hand, and need to find a second one).

The USB chip seems to be a VT1630A (VT1630A - VIA ). I don't see on the web the type of USB mode. I may need to wait for the amp to try the command you propose.

I'm dubious about the complexity thrown in those cheap amps: DSP at the VT1630 level, DSP also in the STA326 ST audio chip. All this looks like an unsed power house. I would be happy, either to be able to use all those DSP for DIY applications, or find a "lean product", with just USB=>Digital power stage...

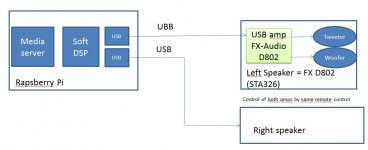

Picture of the architecture:

The chip offers USB audio v.1, most likely running in adaptive mode. Very few v.1 devices implemented async mode.

Thanks for the feedback. I will check this as soon as I receive the amp.

I would be happy to understand a bit more about those clock questions: clock of the R Pi + clock of the amp + adaptive USB link. How is the amp expected to reconcile its USB timing, which, if I understand well, is hooked to the R Pi (in adaptive mode), with the amp's own clock (which I imagine is necessary when working, for example, with analog inputs)?

If you can point me to some digest or good website to understand the concepts, I would be very interested. I have the feeling that it is an area where you can either build a good design from the ground up, or mess everything up from the beginning.

JMF

These may be useful......?

The Well-Tempered Computer

The Well-Tempered Computer

USB in a NutShell - Chapter 4 - Endpoint Types

> - two similar FX-Audio D802 FDA USB amps (no analog conversion in the chain)

If the USB chip inside is VT1630A, it outputs analog signals generated by its integrated DAC and the "digital" amplifiers convert the analog input signal to the amplified PWM.

Which leads to your question of clocks: the "digital" amplifier clocks are independent, as the input is analog. And the clock for the digital signal is generated from USB, just like in any other adaptive USB device.

Hi Phofman and Drone7,

Thanks for your replies,

I only have partial info. The amp chip (STA326) has only an I2S input. The French web site says (translated):

A complete STA326 Class D amplifier controllable by remote, the FX-AUDIO D802 includes as standard a 24-bit 192 kHz digital-to-analog converter combined with an AKM AK4113 S/PDIF interface and a VIA VT1630A Audio/DSP interface.

This amplifier's distinguishing feature is that it is "Full Digital", meaning that the S/PDIF signal is converted to I2S by the AK4113, then directly amplified by the STA326.

Basically: S/PDIF => AK4113 => I2S => STA326 (full digital).

How the USB signal gets to the amp is not so clear. I just hope that they don't do USB > DAC > ADC > SPDIF > I2S > Amp...

Thanks for the links about the USB. I'll read all that.

Best regards,

JMF

Ok, the STA326 has an I2S input only, so the I2S output from the VT1630A must be used (I did not find any VIA datasheet depicting the chip pinout, and the block diagram available does not show any digital outputs, only analog ones).

Then two sources outputting clocked I2S are used - usb VIA chip or the AKM SPDIF receiver.

There is no reason for an intermediate SPDIF conversion.

I have spent part of the evening learning about USB, adaptive and async modes.

I understand that the majority of devices implement adaptive mode. This means that the controller (in my schema the R Pi) drives the process without being really concerned by what happens on the receiver side. I imagine that the transfer of data from the USB converter to the amp won't add much more timing asynchronism.

So the clock of the R Pi, in my config, will drive the music. Is this understanding roughly OK?

Async transfer to the DAC seems to be the preferred solution (or an easier way to get things implemented well). It is not clear to me what the effect would be, in my config, of having 2 async USB DAC/amps:

- async USB DAC/amp to left speaker (one USB for left tweeter + left woofer)

- async USB DAC/amp to right speaker (one USB for right tweeter + right woofer)

Sure to fail? Sure to work? Don't know?

JMF

In async you will have two independent clocks, each running at a slightly different pace. Gradually your buffers will under/overrun and a correction will have to occur: an audible click, depending on the software used.

How long it will take for the under/overrun to happen depends on the clock difference. When I was comparing a CD player 44.1kHz clock to a soundcard 44.1kHz clock, I measured a difference of one sample per second.

Using two clock domains without any adaptive resampling (aligning the clock domains) is just a bad design. Both jackd and pulseaudio can work with two clock domains but use adaptive resampling on the boundary.
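To put numbers on the drift figure quoted above, here is a small back-of-the-envelope sketch. The function names and the 4096-frame buffer margin are hypothetical values chosen for illustration; only the one-sample-per-second drift at 44.1 kHz comes from the measurement described in this post.

```shell
# drift_ppm DRIFT_SAMPLES_PER_SECOND SAMPLE_RATE
# Clock mismatch expressed in parts per million.
drift_ppm() {
    awk -v drift="$1" -v rate="$2" \
        'BEGIN { printf "%.1f\n", drift / rate * 1000000 }'
}

# seconds_until_xrun BUFFER_MARGIN_FRAMES DRIFT_SAMPLES_PER_SECOND
# Time until an uncorrected buffer with the given margin over/underruns.
seconds_until_xrun() {
    awk -v buf="$1" -v drift="$2" \
        'BEGIN { printf "%.0f\n", buf / drift }'
}

drift_ppm 1 44100          # mismatch of the two 44.1 kHz clocks, in ppm
seconds_until_xrun 4096 1  # assumed 4096-frame margin, 1 sample/s drift
```

With these assumptions the mismatch is roughly 23 ppm, and a 4096-frame margin would last a little over an hour before a correction is needed, which is why such a setup can appear to work at first.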

I spent some time learning about audio over USB, SPDIF, jitter, adaptive and asynchronous modes. I am starting to better understand the different concepts.

The websites of XCOM and Benchmark Media provide interesting white papers (sure, there is some marketing inside, but also real info).

In my understanding, from a pure engineering view (not sure that I would hear a difference), in order of timing reliability we have:

- SPDIF (as the clock is coded in the signal, the receiver does not have to guess it),

- USB in adaptive mode. Maybe slightly lower timing accuracy, but still proven in use.

A paper from Benchmark explains that with their DACs, thanks to very precise DAC clocks plus an Asynchronous Sample Rate Converter (ASRC), they can achieve nearly perfect synchronization of 2 DACs and use them in phase-coherent systems.

It seems that asynchronous USB, with ASRC + a very precise DAC clock, can do the job of resynchronizing less-than-perfect upstream systems.

So, to complete my previous list, in order of timing reliability we have:

- SPDIF (as the clock is coded in the signal, the receiver does not have to guess it) / equivalent alternative: asynchronous USB, with ASRC + very precise DAC clock

- USB in adaptive mode. Maybe slightly lower timing accuracy, but still proven in use.

Does this look correct?

JMF

SPDIF requires a PLL just like USB adaptive. From this POV they are similar.

USB async does not require a subsequent ASRC. ASRC is used to convert between two clock domains; there is only one clock domain in USB async (the pace at which the PC sends the audio data is controlled by the DAC clock, so buffer over/underrun should not happen).

Could the ASRC in the Benchmark DAC design be intended to create a second time domain, with the highest-quality clock, to play the samples at the exact pace when the source clock (first time domain, computer or soundcard) cannot reach the expected accuracy?

JMF

Async usb audio does not need two clocks. The DAC clock is the primary (and only needed) clock, while the computer usb driver is instructed to speed up/slow down the data rate by feedback control messages generated by the USB device. This way the USB device input buffer is kept optimally filled and reading from the input buffer can be clocked by the fixed, precise DAC clock.

ASRC in principle cannot fix everything - jitter in time domain is traded for imperfections in recalculated sample values.

Ok understood.

I like the idea of an async USB last stage in the design, which would be the only stage with a high-precision clock in the whole digital chain. Upstream, conventional off-the-shelf IT components could be used, with the only audiophile-quality clock sitting just before the stage that drives the speaker driver.

JMF

Hello,

An update on the concept. I have it now working. An Orange Pi (instead of a RPi), and 2 FX-Audio D802 driven by the USB input.

MPD as music player, ecasound + ACDf LADSPA plugins to perform the software DSP crossover. One amp drives the woofer/tweeter of the left channel, the other amp drives the woofer/tweeter of the right channel.

This delivers beautiful sound, but I have an odd behaviour about the stereo image. It is not centered, it is pulled to a side... I did a lot of testing to identify the culprit: not the room, not the speakers, measurement of the amps with the same speaker and setup gave same sensitivity, not the track.

Then I found a way to change the side to which the image is pulled. It changes when I replace:

Code:

```
ecasound -d -z:mixmode,sum -B:rt -tl \
-a:pre -i:"HeCanOnlyHoldHer.flac" -eadb:-2 -pf:/home/jmf/ecasound/ACDf2$
-a:woofer,tweeter -i:loop,1 \
-a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 \
-a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 \
-a:woofer,tweeter -f:32,4,44100 -o:loop,2 \
-a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 \
-a:DAC1,DAC2 -i:loop,3 \
-a:DAC1 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802 \
-a:DAC2 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802_1
```

with:

Code:

```
ecasound -d -z:mixmode,sum -B:rt -tl \
-a:pre -i:"HeCanOnlyHoldHer.flac" -eadb:-2 -pf:/home/jmf/ecasound/ACDf2$
-a:woofer,tweeter -i:loop,1 \
-a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 \
-a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 \
-a:woofer,tweeter -f:32,4,44100 -o:loop,2 \
-a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 \
-a:DAC1,DAC2 -i:loop,3 \
-a:DAC2 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802_1 \
-a:DAC1 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802
```

Changing the order in which I feed the amps pulls the image to one side or the other. There is a timing issue that can be clearly detected.

I would not have imagined that it would be so sensitive... I have to see if I can improve the situation by using a virtual device.

JMF

I just tried the multi device as defined in http://www.diyaudio.com/forums/pc-b...virtual-multi-channel-device.html#post4729740

It doesn't work properly: I get some strange digital distortion in the woofer when using my "multi", "ttable" and "plug:ttable" devices. It also gives a strange feeling of acceleration.

The command I use is:

Code:

```
ecasound -d -z:mixmode,sum -B:rt -tl \
-a:pre -i:"HeCanOnlyHoldHer.flac" -f:32,2,44100 -pf:/home/jmf/ecasound/$
-a:woofer,tweeter -i:loop,1 \
-a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 \
-a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 \
-a:woofer,tweeter -f:32,4,44100 -o:loop,2 \
-a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 \
-a:DAC -i:loop,3 -f:16,4,44100 -o:alsa,plug:ttable
```

The last alternative is to change the amp configuration, so that one amp handles the 2 woofers and the other the 2 tweeters. But I will then have some unexpected delay between the tweeters and woofers. I don't know which config is worse.

JMF

IMO the same-time samples for each card are not placed into the same 1ms USB frame: the soundcards were not started together at the same time/within a single USB frame. It really depends on how ecasound starts the two devices, very likely in series, with some delay.
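For a sense of scale, the sketch below converts a start offset into samples and into an equivalent difference in listening distance. The 1 ms value is only an assumption taken from the USB frame length mentioned above (the actual offset was not measured in this thread), the speed of sound is taken as 343 m/s, and the function names are hypothetical.

```shell
# offset_in_samples OFFSET_MS SAMPLE_RATE
# Number of samples one channel lags behind the other.
offset_in_samples() {
    awk -v ms="$1" -v rate="$2" \
        'BEGIN { printf "%.1f\n", ms / 1000 * rate }'
}

# offset_as_distance_cm OFFSET_MS
# Equivalent extra path length in cm, assuming sound travels at 343 m/s.
offset_as_distance_cm() {
    awk -v ms="$1" \
        'BEGIN { printf "%.1f\n", ms / 1000 * 343 * 100 }'
}

offset_in_samples 1 44100   # one USB frame late at 44.1 kHz
offset_as_distance_cm 1     # same offset expressed as a distance
```

Under these assumptions, a single-frame offset is about 44 samples, comparable to moving one speaker roughly 34 cm further away, which would be consistent with the image being pulled clearly to one side.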

I would definitely try using the multichannel virtual device, hopefully alsa will start the two devices closer together.
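A minimal sketch of what such a virtual device could look like, using the ALSA `multi` and `route` plugins. This is not the configuration from the linked post, which is not reproduced here: the card IDs are guessed from the `front:FXAUDIOD802` and `front:FXAUDIOD802_1` names in the ecasound commands, the device names `multi` and `ttable` follow the ones mentioned in the thread, and the output file name is made up so that an existing `~/.asoundrc` is not overwritten.

```shell
# Write the sketch to a scratch file; copy it into ~/.asoundrc by hand
# only after checking the card IDs with `aplay -l`.
cat > asoundrc.multi-sketch <<'EOF'
# Two stereo cards joined into one 4-channel device (card IDs assumed).
pcm.multi {
    type multi
    slaves.a.pcm "hw:CARD=FXAUDIOD802,DEV=0"
    slaves.a.channels 2
    slaves.b.pcm "hw:CARD=FXAUDIOD802_1,DEV=0"
    slaves.b.channels 2
    bindings.0.slave a
    bindings.0.channel 0
    bindings.1.slave a
    bindings.1.channel 1
    bindings.2.slave b
    bindings.2.channel 0
    bindings.3.slave b
    bindings.3.channel 1
}

# Straight 1:1 routing of the four channels onto the multi device.
pcm.ttable {
    type route
    slave.pcm "multi"
    slave.channels 4
    ttable.0.0 1
    ttable.1.1 1
    ttable.2.2 1
    ttable.3.3 1
}
EOF
```

ecasound would then open it as `-o:alsa,plug:ttable` with a 4-channel format. Whether ALSA actually starts both amps within the same USB frame this way is not confirmed anywhere in this thread.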
