Anatech, I can't believe we are still discussing this. Even an independently made test report will not convince you of the improved playback capabilities of CD-ROM drives.

The problem with that is that it is an internal thing. The memory is used only for the music data, along with status information such as the C flags. You can only read these if you have access to the data stream at that point (you don't). The only flag you might have access to is the C2 flag (all is lost).

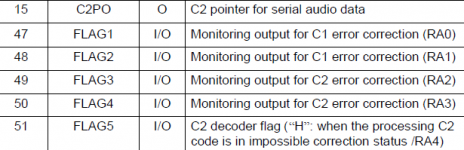

Attached are some of the terminals/pins of the Samsung DSP I talked about earlier that relate to C1/C2 handling. Why do you think the C2 pointer is not sufficient?

There really is no excuse for any interpretation problems between software or hardware. Everything is clearly defined. Can a chip maker mess this up? Absolutely.

I believe it is more about the programmer's responsibility. As long as the programmer knows what he is doing, including how each chip vendor "does" its thing, it should be okay: when a chip vendor messes things up, the programmer should know and can report it to users. That's why software such as that posted by Mark does not work for "ANY" drive (or any chip).

The limits are very clear and set in the Red Book: BLER < 220 and E32 = 0. The problem is that the player leaves you guessing at what levels of error correction are active. This is why I suggested monitoring the error correction at the beginning of this thread.
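For reference, the 220 figure lines up with the Red Book frame rate. Audio runs at 44.1 kHz × 2 channels × 2 bytes = 176,400 bytes/s, packed 24 bytes per frame, which gives 7,350 frames per second; 7,350 × 0.03 ≈ 220.5, so a BLER of 220 per second is about 3 % of all frames. The sketch below turns that into a simple monitor, assuming you can already obtain per-second counts of C1 block errors and E32 (uncorrectable) events from the decoder. The function names and input format are hypothetical, and the length of the averaging window is left to the caller.

```python
# Red Book audio: 44.1 kHz, 2 channels, 16-bit (2-byte) samples,
# carried as 24 audio bytes (6 stereo samples) per frame.
SAMPLE_RATE_HZ = 44_100
BYTES_PER_SECOND = SAMPLE_RATE_HZ * 2 * 2                 # 176,400 bytes/s
BYTES_PER_FRAME = 24
FRAMES_PER_SECOND = BYTES_PER_SECOND // BYTES_PER_FRAME   # 7,350 frames/s

BLER_LIMIT = 220                                          # figure quoted in the thread
BLER_PER_SECOND_LIMIT = FRAMES_PER_SECOND * 0.03          # 3 % of frames, about 220.5


def average_bler(c1_block_errors_per_second):
    """Mean number of erroneous blocks per second over the supplied window."""
    if not c1_block_errors_per_second:
        raise ValueError("need at least one second of data")
    return sum(c1_block_errors_per_second) / len(c1_block_errors_per_second)


def within_limits(c1_block_errors_per_second, e32_per_second):
    """Hypothetical pass/fail check: average BLER below 220 and no E32 events."""
    return (average_bler(c1_block_errors_per_second) < BLER_LIMIT
            and sum(e32_per_second) == 0)
```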

You seem to have missed or passed over the point I was making though.

If they were "new" they would not be "classic". Anyone for a new Opel Kadett E? (Vauxhall Astra Mark 2 in the UK)

Well, you didn't mention the word 'new' in the text I was responding to, but as you have now, maybe this helps:

Morgan Motor Company

or this:

Classic Jaguar Replicas | C Type | D Type | XKSS | CJR | Jag Replicas | XK SS

or this:

Lynx Motors (International) Ltd

or this:

Caterham Cars

or this:

Home - AK Sportscars - Cobra Replica Kit Car

Ok, that's enough, I'll stop - it's not a car forum after all. 🙂

Jon.

You seem to have missed or passed over the point I was making though.

Which is?

Hi Chris,

OK, perhaps I didn't phrase my original Q. that well. What I meant was 'Don't most decent external DACs nowadays buffer the signal and use an internal clock signal afresh to derive their own timing?' (hence mitigate jitter problems).

Incidentally, I came across one of those external "re-clockers" you mention last year, somewhat by accident, but haven't tried it yet. It's not something I've seen much over here (judging by not having seen any on eBay!). Do they really accomplish zero?

Jon.

Hi Jon,

All external DACs reclock. There is no clock transmitted; it has to be regenerated from the data stream, and jitter is a problem here. There are external "re-clockers" available to address that issue, but they then resend the data serially again, essentially accomplishing zero but reducing your bank balance.
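To illustrate why there is no separate clock: consumer S/PDIF (IEC 60958) sends audio using biphase-mark coding, in which every bit cell begins with a level transition and a 1 adds a second transition mid-cell, so the receiver has to regenerate the bit clock from the transitions themselves. The sketch below covers only the line code (no preambles, subframes or channel-status bits), and the function names are hypothetical.

```python
def bmc_encode(bits, start_level=0):
    """Biphase-mark encode: two half-cells (line levels 0/1) per input bit.

    Every bit cell starts with a transition; a 1 also toggles mid-cell.
    """
    level = start_level
    out = []
    for bit in bits:
        level ^= 1            # mandatory transition at the cell boundary
        out.append(level)
        if bit:
            level ^= 1        # extra mid-cell transition encodes a 1
        out.append(level)
    return out


def bmc_decode(halves):
    """Recover bits: a 1 is a cell whose two halves differ (polarity-insensitive)."""
    if len(halves) % 2:
        raise ValueError("expected an even number of half-cells")
    return [int(halves[i] != halves[i + 1]) for i in range(0, len(halves), 2)]


print(bmc_encode([1, 0, 1, 1, 0]))   # [1, 0, 1, 1, 0, 1, 0, 1, 0, 0]
```

The decoder assumes the cell boundaries are already known; finding them from the transitions is exactly the receiver's clock-recovery job, and timing uncertainty in those transitions is where jitter comes in.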

Which is?

The best possible accurate 1x one pass read is paramount.

Just a reply to a reply...

A car built to exacting standards by hand (with real humans attached no less!).

Gotta love the C-type...if only I was born rich...

And lightweight E-types

Which is much like the Morgan as they have been in continuous production for so long...

And if you love Cobras, I don't think you can beat the "new" ones, unless you get one from Shelby themselves. Personally I'd love an aluminum-block 351W or 302 in a Shelby American FIA or early Cobra aluminum body. Roller valve train...Getrag 5-speed ('cause 6 speeds would be simply too many)...hhmmmm

Oh, but it could be...😉

to all: The point being made here is that often quality or good "old stuff" is at worst old "good stuff", whilst new "junk" will always be "junk", particularly when it becomes old "junk". I'm not a tech, but I do appreciate things that are well thought out and built far more robustly than they need to be. The idea is to build something that mechanically (and electronically too) is not a bottleneck to performance. Anatech is (I think) just trying to make the point that if we are looking for a superior CD transport, we should not settle for a DVD or Blu-ray transport. Mechanically they may get the job done, but if we are trying to build something better than what is currently available, it should be superior. I've owned a few 1st and 2nd generation players that I wish I had never sold. The technology and DAC capabilities may have improved, but the quality of the transports has not. Look at Goldmund as an example: put a cheap Pioneer DVD player into a fancy box, (maybe) tweak it a little, and it goes from a $100 player to a $12,000 player!

Mechanically, the best of the CD transports (including the optics) should be something to aim for, and (perhaps) mixing and matching certain aspects of particular transports might be worth considering.

Now, regarding computer-based transports, I recall that years ago many suggested the NEC multispin drives for audio, and even a few magazines suggested using one (Positive Feedback, IIRC). They were quite robustly built and could be set up to read DVDs with some work. (Just another thought on an interesting design that may be suitable. I haven't heard much about NEC lately, and can't recall whether anyone has suggested something like that already in this thread.) Years ago I had a Samsung DVD player that was set up for 24-bit/192 kHz audio playback; it had a stupidly cheap transport, but good electronics.

So let's just stop the arguing over what transport to emulate or what DVD transport to adopt. Let's build us something that is superior instead of arguing.

Hi Jay,

If you want to gauge the quality of a transport, you have to look at the quality of the data coming off, not how many times it was too large a loss to reconstruct.

It's like looking at a car's steering. If it went around the corner, it could be said that the car steers fine (Mark), but counting the corrections necessary to keep it on course will tell you there are problems if there are constant course corrections required. You would need to know this before buying a car for example.

-Chris

"Attached are some of the terminals/pins of the Samsung DSP I talked about earlier that relate to C1/C2 handling."

Yes, perfect. That chip can be monitored nicely. It may simply be that the other chips label the pins "factory use", or "internal connection, do not use" or something similar.

"Why do you think the C2 pointer is not sufficient?"

Simple. The C1 flag tells you when error correction is invoked, so you can determine how much you are relying on error correction. The C2 flag only tells you that error correction has failed: further action will still generate valid data frames, but the original information has in fact been lost.
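The difference can be made concrete with a per-second tally. This sketch assumes a hypothetical stream of per-frame status records (fixed at C1, fixed at C2, or uncorrectable); how such flags are actually exposed differs from chip to chip, so the record layout and names are assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameStatus:
    """Hypothetical per-frame status derived from a decoder's flag outputs."""
    c1_corrected: bool = False   # C1 found and fixed an error in this frame
    c2_corrected: bool = False   # C1 could not fix it, C2 did
    uncorrectable: bool = False  # C2 gave up: the samples will be concealed


def tally(frames):
    """Count frames and how often each correction stage was needed."""
    counts = Counter()
    for f in frames:
        counts["frames"] += 1
        counts["c1"] += f.c1_corrected
        counts["c2"] += f.c2_corrected
        counts["lost"] += f.uncorrectable
    return counts


# One hypothetical second: lots of C1 activity, nothing actually lost.
second = ([FrameStatus()] * 7000
          + [FrameStatus(c1_corrected=True)] * 340
          + [FrameStatus(c2_corrected=True)] * 10)
print(dict(tally(second)))  # {'frames': 7350, 'c1': 340, 'c2': 10, 'lost': 0}
```

In this example a monitor that watched only the failure flag would report a clean second, while the C1 count shows the decoder was busy correcting.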

"That's why software such as that posted by Mark does not work for 'ANY' drive (or any chip)."

I think it is more basic. It depends on whether that memory can be peeked or not.

-Chris

I am promoting the counting of errors. How do you test the quality of the data coming off the disc, if not by counting the errors it contains?

Hi Mark,

Maybe the massive difference in our viewpoints comes from the fact that I have dealt with both professional CD transports and the entire range of consumer transports. I optimized the reading ability of these transports, which is exactly the experience required to speak on this subject. I have also looked at the eye patterns of computer drives and know them not to be great performers at retrieving data off a CD in the way normal CD players are. You, perhaps, have only read reports on computer drives.

If my read on you is correct, you only care about the C2 counts (or however you decide to subdivide that number). You are blind to how good or bad the data read is as long as the error correction can save your bacon.

The measure of quality for any data channel is the quality of the eye pattern. Period. This holds true for tape formats, optical and copper cable or even CD or DVD ROMs. Until you take the time to look at and examine eye patterns, you are incapable of rendering a decision on the relative ranking of any CD or DVD ROM drive. Not only that, but failure to acknowledge the importance of eye pattern examination means that you will not have transferable skills for your new job. You can play with stats all you want, but they do not give you the real story. For that you need to invest some time and possibly money to actually see what the numbers on a report mean. You need to examine a wide range of eye patterns until you understand them. Then, maybe, the light will come on and you will understand what I have been trying to tell you.

Just for the record, we used to test BLER rates (had a jig for that even). We stopped when it became clear that the finer "numbers" were in the analog eye pattern. It wasn't just us that stopped, the entire industry stopped using BLER rates for service and performance tuning. A BLER rate is only a number that is used as a metric for a pass / fail kind of test. You can use a BLER rate counter to very roughly adjust a drive (stupid if you aren't looking at the eye pattern!). The eye pattern will tell you instantly if you are adjusting the right thing, and if you are going in the right direction with the adjustment. Looking at Cx errors and BLER rate numbers is pretty close to playing a game of "Battleship".
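The idea behind reading an eye pattern rather than a count can be illustrated numerically: fold a sampled waveform on the bit period and measure the gap between the lowest sample belonging to a 1 and the highest belonging to a 0 at the decision instant. A real CD HF signal is EFM with run lengths of 3T to 11T and is viewed on an oscilloscope, so the two-level synthetic signal, the known test pattern and the function names here are all assumptions for illustration only.

```python
import random


def synth_signal(bits, samples_per_bit=16, noise=0.05, seed=1):
    """Hypothetical two-level test signal (+1 for a 1, -1 for a 0) plus Gaussian noise.

    There is no inter-symbol interference here, so only noise closes the eye.
    """
    rng = random.Random(seed)
    return [(1.0 if b else -1.0) + rng.gauss(0.0, noise)
            for b in bits for _ in range(samples_per_bit)]


def eye_opening(samples, bits, samples_per_bit, phase):
    """Vertical eye opening at one phase of the bit period.

    Folds the waveform on the bit period, takes the column of samples at
    `phase`, and returns (lowest '1' sample) - (highest '0' sample) using the
    known transmitted bits. A result <= 0 means the eye is closed there.
    """
    column = samples[phase::samples_per_bit]
    ones = [s for s, b in zip(column, bits) if b]
    zeros = [s for s, b in zip(column, bits) if not b]
    return min(ones) - max(zeros)


bits = [int(i % 3 == 0) for i in range(300)]   # known test pattern
for noise in (0.05, 0.3, 0.8):
    signal = synth_signal(bits, noise=noise)
    print(noise, round(eye_opening(signal, bits, 16, phase=8), 3))
```

Because the opening is measured against the known data, it goes negative once noise pushes samples across the threshold, which is the point where decode errors start.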

-Chris

"Anatech, I can't believe we are still discussing this."

Yeah, neither can I. I can't believe that you just don't get it.

"Even an independently made test report will not convince you of the improved playback capabilities of CD-ROM drives."

Hmmm, how on earth did you come to that conclusion??? Nothing in that report draws a comparison between a good consumer or professional transport and a DVD-ROM player. What I got out of that report was that they tested a good batch of TDK blanks. Not once was any other type of player even mentioned. Not even a cheap and nasty transport.

Hi Mark,

"I am promoting the counting of errors. How do you test the quality of the data coming off the disc, if not by counting the errors it contains?"

A picture of an eye pattern gives you far more information. If you want to include error counts, do so. That is supplementary information at best.

-Chris

Hi Jon,

If you put one in line but fail to bring those signals out separately to the DAC, it recombines them and you are in the same boat as before. I have some of those units here too. I did authorized warranty service for Audio Alchemy for a bit, so I am familiar with them.

The main issue is the combining of those signals into a single serial data stream. The clock must then be recreated, and that process has high jitter unless you use the sync to steer a very stable clock. The clock is tied to the input data: you can't use a different clock for data determination, because you will get clock slip and errors. When you decode data, you must use the clock recovered from that data to clock it into memory, then clock it out using a stable clock. Buffer underruns and overruns will occur unless there is some way to control the far-end clock so you can keep your buffer memory partially full.
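A back-of-the-envelope version of the buffer argument, under illustrative assumptions (44.1 kHz, a 1024-sample FIFO starting half full, a 100 ppm mismatch between the recovered and local clocks): the FIFO gains 44,100 × 100 × 10⁻⁶ = 4.41 samples per second and overruns after about 512 / 4.41 ≈ 116 s. The second function adds a toy proportional loop that nudges the far-end clock from the fill level, which keeps the buffer partially full. The controller, function names and all numbers are hypothetical, not taken from any product.

```python
def seconds_until_fault(sample_rate_hz, ppm_offset, depth, start_fill=None):
    """Seconds before a FIFO under- or overruns when two clocks differ by ppm_offset.

    ppm_offset > 0: the recovered (input) clock is faster than the local output
    clock, so the buffer fills; ppm_offset < 0: it drains.
    """
    fill = depth / 2 if start_fill is None else start_fill
    drift = sample_rate_hz * ppm_offset * 1e-6  # samples gained (or lost) per second
    if drift == 0:
        return float("inf")
    headroom = (depth - fill) if drift > 0 else fill
    return headroom / abs(drift)


def simulate_steered(sample_rate_hz, ppm_offset, depth, seconds, gain_ppm_per_sample):
    """Toy loop that nudges the far-end clock in proportion to the fill error.

    Steps once per second and returns the (min, max) fill seen. For a small
    enough gain, a proportional-only loop settles with a constant offset from
    half full (ppm_offset / gain samples), but it keeps the buffer partially
    full instead of drifting into a fault.
    """
    target = depth / 2
    fill = lo = hi = target
    correction_ppm = 0.0
    for _ in range(seconds):
        fill += sample_rate_hz * (ppm_offset + correction_ppm) * 1e-6
        correction_ppm = -gain_ppm_per_sample * (fill - target)
        lo, hi = min(lo, fill), max(hi, fill)
    return lo, hi


print(round(seconds_until_fault(44_100, 100, 1024), 1))   # about 116 s, open loop
print(simulate_steered(44_100, 100, 1024, 3600, 1.0))     # stays well inside 0..1024
```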

It makes more sense to get a really good DAC board that has a high end re-clocker built in. Once you separate clock, data and L-R clock, you must keep them separated for there to be any improvement. It's a fun topic.

-Chris

"Do they really accomplish zero?"

Yupper!

Hmmm, how on earth did you come to that conclusion??? Nothing in that report draws a comparison between a good consumer or professional transport and a DVDROM player. What I got out of that report was that they tested a good batch of TDK blanks. Not once was any other type of player even mentioned. Not even a cheap and nasty transport.

Every disc was tested on three players. The results are clear. The Plextor in the Clover analyser reads at a lower error rate than the Philips in the SA3. The players are repeatedly mentioned throughout the report.

If my read on you is correct, you only care about the C2 counts (or however you decide to subdivide that number). You are blind to how good or bad the data read is as long as the error correction can save your bacon.

I hope that no one would claim that the signal coming from the disc is not important. Interpreting the eye-pattern is something else. If monitoring the eye-pattern is important, then it should be built into the player. A small display (or an output to a tablet/TV) could be added.

My take on it is that a better eye-pattern will also reduce error rates. Monitor the error rates and you know that the eye-pattern must be good. The opposite cannot be claimed: a good-looking eye-pattern does not guarantee low error rates.

A user could benefit from knowing the amount of error correction that is taking place, particularly when the DSP is active.

Hi Jay,

Yes, perfect. That chip can be monitored nicely. It may simply be that the other chips label the pins "factory use", or "internal connection, do not use" or something similar.

That's why since the beginning I have expected people to come up with their favorite chipset (including PLC programming if preferred).

However high-end a CD player is, it cannot live without error correction. And the quality of error correction (and interpolation) is NOT equal from chip to chip.

And yes, some chips don't even have a PDF datasheet on the net (of course, it may just be a matter of searching skill). But I prefer a "proven" chip, meaning one that has been used in top-sounding players.

Simple. The C1 flag tells you when error correction is invoked. You can then determine how much you are relying on error correction whereas the C2 flag only tells you that error correction has failed and further action will generate valid data frames, but the original information has in fact been lost.

If you want to gauge the quality of a transport, you have to look at the quality of the data coming off, not how many times it was too large a loss to reconstruct.

What I meant was (*), look at the Samsung chip. It has 5 terminals (FLAG1 to FLAG5) to monitor the flow of error correction (C1 and C2). These can be used to monitor how many times an error occurred. The other terminal (C2PO) is used for interpolation, which is not what you want.
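Counting how many times an error occurred on one of these terminals comes down to counting pulses. A sketch, assuming the FLAG pin is sampled into a list of 0/1 levels by a microcontroller or logic analyser and that a pulse is active-high; the real polarity, pulse width and meaning of each FLAG pin must come from the chip's datasheet, and the function names are hypothetical.

```python
def rising_edges(levels):
    """Indices where a sampled 0/1 logic level goes from low to high."""
    return [i for i in range(1, len(levels)) if levels[i] and not levels[i - 1]]


def edges_per_window(levels, samples_per_window):
    """Rising-edge counts for consecutive windows (e.g. one window per second).

    A pulse already high at the first sample is not counted, since its
    rising edge happened before capture started.
    """
    n_windows = -(-len(levels) // samples_per_window)  # ceiling division
    counts = [0] * n_windows
    for i in rising_edges(levels):
        counts[i // samples_per_window] += 1
    return counts


flag1 = [0, 1, 1, 0, 1, 0, 0, 1]     # hypothetical samples of one FLAG pin
print(edges_per_window(flag1, 4))    # [1, 2]
```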

(*Note: I'm a programmer and I was a bit confused by the interchangeable use of the words "pointer" and "flag". In programming, "pointer" usually refers to a "position", e.g. in memory. So I prefer C2PO to be expressed as C2FLG.)

I think it is more basic. It depends on whether that memory can be peeked or not.

I wish we had some proposals for the chipset right now, so we could look into that chip in detail. You have mentioned your preference for Sony or Sanyo. I don't think I like Sanyo, just because of a bad experience with a certain servo chipset from this brand.

I am promoting the counting of errors. How do you test the quality of the data coming off the disc, if not by counting the errors it contains?

For CD-ROM, error correction is more important than for a CD player. Not that it is unimportant in a CDP, but at slow speed (and especially in a good product) the quality can be controlled via the eye pattern (the error handling is done by the chipset).

Having a good eye pattern in combination with a good chipset will guarantee a small error rate. Only a little error can be attributed to other factors, and in a good transport (with sufficient budget) this can be handled easily, as the mechanism is well understood.

But I agree with you that CD-ROM drives are getting better and better, and I think they are the future of transports. At a higher level (if there is demand for high-end audio CD-ROM drives) one could be made perfect, even better than a CD player. The problem then is the need to separate the analog audio from the switching noise of the computer's digital circuitry and power supply.

The best possible accurate 1x one pass read is paramount.

I did get it wrong then. I thought you were asking about the limits of error correction and when it might be audible.

Hi Mark,

The focus of that report was on the blank CDROMs, not the standard players used to assess them. They did provide a comparison between those three players but also indicated that the exact condition was not known with any degree of certainty. They were saying that the comparison was only valid in that lab at that point in time. You need to read between the lines for this to some extent, but the message was pretty clear. The only thing they could reasonably certify was the quality of that batch of blank CDROMs. Period.

Mark, you can't read any more in that report as its limits were mentioned. This is proper lab procedure in the calibration world (which I also worked in). Since they can't send out their primary instrumentation assets (the three CDROM readers / writers), they did the best they could do and performed a "cross-check". A cross-check is merely a sanity check to see if the instrumentation agrees within limits and is not in any way a lab procedure that can lead to certification for any of those assets. The best they could do is to run a "bogey" CDROM and check the eye pattern with a limit mask, and maybe count the incursions.
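A limit-mask check like the one mentioned here can be sketched as counting the eye-diagram points that fall inside a keep-out region around the decision instant. This assumes the waveform has already been sampled at a known number of samples per unit interval (UI) and uses a plain rectangular mask; real masks are usually hexagonal or diamond-shaped with dimensions set by the applicable test specification, which this sketch does not reproduce. The function names and sample values are hypothetical.

```python
def fold_eye(samples, samples_per_ui):
    """Turn a sampled waveform into eye-diagram points (time in UI, amplitude)."""
    return [((i % samples_per_ui) / samples_per_ui, s) for i, s in enumerate(samples)]


def mask_hits(points, t_min, t_max, v_min, v_max):
    """Count points that intrude into a rectangular keep-out mask."""
    return sum(1 for t, v in points if t_min <= t <= t_max and v_min <= v <= v_max)


samples = [1.0, 0.9, 1.0, 1.1, -1.0, 0.1, -0.9, -1.0]   # hypothetical captured samples
points = fold_eye(samples, samples_per_ui=4)
print(mask_hits(points, t_min=0.2, t_max=0.6, v_min=-0.3, v_max=0.3))  # 1
```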

In short, that report does not support any kind of qualitative certifications for the equipment they used. It does support that the batch of blank CDROMs is of high quality, passing the limits laid down by the governing body for blank CDROM quality assurance for that industry. That is all.

As you well know, economics come into play. The manufacturers in your industry will not pay for anything beyond what the governing body requires for quality control. Every industry is the same. Adding such a display to the products would require an interface jig, and the equivalent of a decent oscilloscope in the QC equipment. They would then also need skilled operators who can interpret these displays. Some companies may do this, as it is an excellent way to spot problems as they develop: you will see defects in the eye pattern before any serious Cx errors begin to appear. Canary in a coal mine.

If you can, spend some time with an error rate counter and an oscilloscope with some CD transports. Make some adjustments so you can see the connection between eye pattern quality and error rates. This is something I have actually done and can recommend it highly.

-Chris

"Every disc was tested on three players. The results are clear. The Plextor in the Clover analyser reads at a lower error rate than the Philips in the SA3."

That's all well and good, but you are attempting to "read across" to consumer and professional CD transports. There isn't any data to suggest that this is valid. In fact, "read across" has a name and a definition, and doing it is widely condemned by most if not all standards associations. In other words, don't do it.

"If monitoring the eye-pattern is important, then it should be built into the player. A small display (or an output to a tablet/TV) could be added."

Not so.

"My take on it is that a better eye-pattern will also reduce error rates. Monitor the error rates and you know that the eye-pattern must be good."

This is not always true, but it certainly can be, depending on the impairment.

"The opposite cannot be claimed: a good-looking eye-pattern does not guarantee low error rates."

Patently untrue. A good eye pattern guarantees proper decoding of the information. That's what it is all about. You can't have a good eye pattern and decode errors at the same time unless the equipment is misadjusted or the decode circuitry is defective, and that will probably affect the eye pattern anyway.
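For readers wanting the textbook link between eye quality and error rate: for a two-level signal with additive Gaussian noise, mean levels μ1 and μ0 and noise standard deviations σ1 and σ0, the Q factor is Q = (μ1 - μ0)/(σ1 + σ0), and with the decision threshold suitably placed the bit error ratio is approximately ½·erfc(Q/√2). This is a generic approximation, not a CD-specific model (EFM, inter-symbol interference and the CIRC decoder are ignored), and the function names are hypothetical.

```python
import math


def q_factor(mu1, mu0, sigma1, sigma0):
    """Q factor of a two-level eye: level separation over summed noise spread."""
    return (mu1 - mu0) / (sigma1 + sigma0)


def ber_from_q(q):
    """Gaussian-noise approximation of the bit error ratio for a given Q."""
    return 0.5 * math.erfc(q / math.sqrt(2))


for sigma in (0.1, 0.2, 0.33):
    q = q_factor(1.0, -1.0, sigma, sigma)
    print(f"sigma={sigma}: Q={q:.1f}, BER~{ber_from_q(q):.1e}")
```

Under this model Q = 3 corresponds to roughly one error in 740 bits and Q = 6 to roughly one in a billion, which is why a visibly more open eye and a lower error count normally go together.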

"A user could benefit from knowing the amount of error correction that is taking place, particularly when the DSP is active."

I couldn't agree more with you on this!

-Chris

Hi Jay,

"However high-end a CD player is, it cannot live without error correction. And the quality of error correction (and interpolation) is NOT equal from chip to chip."

Completely agree with you on this!

"That's why since the beginning I have expected people to come up with their favorite chipset (including PLC programming if preferred)."

I have not compared chip sets for error correction or access to Cx monitoring test points. I'll be honest with you: if you have done any study in that area, you are far more qualified to comment than I am.

"What I meant was (*), look at the Samsung chip. It has 5 terminals (FLAG1 to FLAG5) to monitor the flow of error correction (C1 and C2). These can be used to monitor how many times an error occurred. The other terminal (C2PO) is used for interpolation, which is not what you want."

Then this is my favorite chip set to use. Simple. I'll assume you also prefer its error correction algorithms over those of other chips.

"I wish we had some proposals for the chipset right now, so we could look into that chip in detail. You have mentioned your preference for Sony or Sanyo. I don't think I like Sanyo, just because of a bad experience with a certain servo chipset from this brand."

My preferences are due to the number of machines with these chip sets and the quality of the eye pattern they deliver. Since you are more familiar with the other technical aspects, why not provide guidance for us?

-Chris

Anatech,

In my industry, the oscilloscope could be the cheapest part of the analyser. For 100k you would expect them to throw one in for free.

The test report may not be up to your standards. On the other hand, many design decisions are being made with less data or understanding. When they test a disc and get different results, it can only be caused by the abilities of the players.

Jay, it should be possible to access the error flags with the controller and send them to the player's display. We just need to decide which data to present to the user.
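As one possible answer to "which data to present", here is a hypothetical formatter for one line of a 20-character display showing the last second's C1 corrections, C2 corrections and uncorrectable (U) frames. The fields, labels and width are assumptions; at 7,350 frames per second a four-digit C1 field can hold any real count, and larger values are clamped so the line never overflows.

```python
def display_line(c1, c2, uncorrectable):
    """One 19-character line: C1 corrections, C2 corrections, uncorrectable frames.

    Fields are clamped (C1 to 9999, C2 to 999, U to 99) so the line never
    grows; 7,350 frames per second means a real C1 count always fits.
    """
    return (f"C1:{min(c1, 9999):<4} "
            f"C2:{min(c2, 999):<3} "
            f"U:{min(uncorrectable, 99):<2}")


print(repr(display_line(340, 10, 0)))   # 'C1:340  C2:10  U:0 '
```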
