How is it actually related to the audio layer? I thought the communication was via network, hence the sockets. Thanks.

Here's the code zipped for anyone that wants to try it.

As the code has to support the RPC port 111, it has to run as root. Siglent's security really helps here, as the scope is hard-coded to port 704 too. You could translate the external ports (111 etc.) to something above 1024 so that the code can run as non-root.

Code:

sudo python3 bode.py softwareawg

This means your pyserial, numpy and simpleaudio modules need installing under root as

Code:

sudo python3 -m pip install <module>

The SDS talks to the python code that then simply creates and plays the right frequency out through the audio.

The SDS itself tells the Python code a set of operations ("play this frequency, phase, level, offset" etc.) for each step of the Bode plot. The scope then uses two channels - one on the input and one on the output of the DUT.

I should point out that the code only supports frequency and ignores phase, level, offset and channel at the moment.
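As a rough illustration of the "play this frequency" step, here is a minimal sketch of building one sine tone as 16-bit PCM samples with numpy. The sample rate, amplitude and the `sine_buffer` name are my assumptions for the example, not taken from the attached code.

```python
import numpy as np

SAMPLE_RATE = 44100  # assumed sample rate in Hz; not taken from the original code


def sine_buffer(freq_hz, duration_s=1.0, amplitude=0.8, sample_rate=SAMPLE_RATE):
    """Return one channel of 16-bit PCM samples for a sine tone."""
    n = int(round(duration_s * sample_rate))
    t = np.arange(n) / sample_rate
    wave = amplitude * np.sin(2.0 * np.pi * freq_hz * t)
    return (wave * 32767).astype(np.int16)


if __name__ == "__main__":
    buf = sine_buffer(1000.0, duration_s=0.5)
    print(len(buf), buf.dtype)
    # Playback, assuming the simpleaudio package is installed:
    #   import simpleaudio as sa
    #   sa.play_buffer(buf, 1, 2, SAMPLE_RATE).wait_done()
```

Phase, level and offset would just be extra terms in the `wave` expression if the code were extended to honour them.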

Perfect. Have you considered uploading to github, or forking the original repo and pushing your python3 patch? That way your work would stay alive and easy to find.

Typically these audio python packages require compilation which requires having audio lib headers installed - apt-get install libasound2-dev

Yes - you will need this installed.

With ubuntu 20, libasound2 comes pre-installed.

The main fun I had was attempting to get a network connection - I'm using a LAN physical cable.

1. I set up the Mac mini OSX network for the Ethernet interface as DHCP.

2. I connect the scope via LAN to the Ethernet port of the Mac mini.

3. I set up VirtualBox with a bridged adaptor on "Ethernet".

4. I set the Siglent's IP address using the I/O menu to a manual address

5. I set the virtual box bridge adaptor to a manual address on the same network.

6. I set the ubuntu VM network so that it has a second adaptor that uses the bridged ethernet port

7. I set the Bode plot IP to point to the second ubuntu ethernet adaptor IP address.

This allows the Siglent SDS to connect to the IP address of the VM as the Ethernet port is shared and for the ubuntu VM to connect to the SDS using chrome for example.
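For steps 4 to 7 the scope and the VM adaptor have to end up on the same network. A small sketch for sanity-checking that with the standard `ipaddress` module is below; the addresses and the /24 netmask are made up for illustration, so substitute your own.

```python
import ipaddress


def same_subnet(ip_a, ip_b, netmask="255.255.255.0"):
    """Return True if both IPv4 addresses fall in the same network."""
    net_a = ipaddress.ip_network(f"{ip_a}/{netmask}", strict=False)
    net_b = ipaddress.ip_network(f"{ip_b}/{netmask}", strict=False)
    return net_a == net_b


# Hypothetical addresses, for illustration only.
scope_ip = "192.168.10.20"   # manual address set on the scope (step 4)
vm_ip = "192.168.10.30"      # bridged adaptor of the Ubuntu VM (steps 5-6)
print(same_subnet(scope_ip, vm_ip))
```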

You could use WLAN with an appropriate Siglent-compatible WiFi adaptor and come through the normal WiFi connection for the VM client OS.

Out of time today... more jobs to do.

Sorry to be off-topic but I'm about to get an SDS1104X-E (I believe that's the model you wanted to write in the title) and I was wondering how the Bode plot behaves at lower frequencies, like 5 Hz to 5 kHz. Is it accurate enough for the audio bandwidth?

Many thanks

On first attempts the SDS appears to have a lower limit of 10 Hz (with the log sweep; the linear sweep may support lower frequencies).

Accuracy - well, it's free 😉 hence the result and accuracy would depend on your operating system, the hardware of the computer and the cabling.

Now I want to get an AD9850, set this up with a USB interface (Arduino perhaps), then have the software drive the AD9850 directly over USB or use an Arduino with a basic command protocol for the functions.

Just trying this between applying coats of sealant.

It certainly works, the only issues are:

* simpleaudio start/stop playing of samples sometimes has some noise associated with it.

* seems to show a near flat output from the Mac mini except at the low frequency.

There are a few other Python signal generators out there that I see don't have the same problem the simpleaudio sample generator has. I'm hoping they will simply vary the sound without the start/stop noise.

Looks like all the Python stuff is prep-and-run, so it's impossible to alter the sound on the fly.

However, Linux has an OpenAL interface and it would be relatively simple to create a C implementation that can vary the frequency on the fly without any noise.

Example: openal-sine-wave/sine_wave at master * hideyuki/openal-sine-wave * GitHub

This C code simply uses the interface to byte stuff the audio bytes into the waveform in realtime.

The code could then simply tell the audio what maths function to use and parameters and it would then output audio.

Linux used to have siggen and the signalgen package; however, it was never updated to use the now de facto audio interface (which has only been around for 23 years), thus /dev/dsp is no longer available unless you start hacking in modules that aren't really supported anymore.

So it looks like a C process is needed to generate the signal, taking commands from the Python code over a socket.

Actually this: ALSA project - the C library reference: /test/pcm.c
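To give an idea of what the Python side of such a split could look like, here is a minimal sketch of a line-based command format. The `FREQ <value>` wire format and both helper names are invented for this example; nothing like it exists in the attached code, and `socketpair()` only stands in for the real connection to a generator process.

```python
import socket


def encode_command(name, value):
    """Build one newline-terminated ASCII command, e.g. b'FREQ 1000.000\n'."""
    return f"{name.upper()} {value:.3f}\n".encode("ascii")


def decode_command(line):
    """Parse one command line back into (name, float value)."""
    name, value = line.decode("ascii").split()
    return name, float(value)


if __name__ == "__main__":
    # socketpair() stands in for the connection to the generator process.
    controller, generator = socket.socketpair()
    controller.sendall(encode_command("freq", 1000))
    print(decode_command(generator.recv(64)))
    controller.close()
    generator.close()
```

A plain text protocol like this is easy to parse with `sscanf` on the C side and easy to test by hand with netcat.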

Do you need to generate just sine waves?

There might be an easier way to do what you want than trying to talk to a soundcard through a Python program. I like Python, but I don't like working with audio devices in it.

I have done it before, but it is a pain. Selecting a soundcard and which output is not fun, then you have to handle the callbacks to deliver the generated audio.

The underlying problem is that audio under Linux is difficult in general. The Python libraries kind of reflect the messiness of the audio system.

Direct loading of sound waves into the sound output queue is relatively simple. I used to do that in assembler back on the old computers 🙂 however, now there's a thick stack of API libraries in between.

A single sine wave works fine - the issue is that switching playback buffer objects for each frequency causes simpleaudio to output noise. A continuous stream that is simply frequency adjusted on the fly without restarting the buffer would solve the issue.
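What "frequency adjusted on the fly" amounts to is carrying the oscillator phase from one block of samples to the next, so that a frequency change never produces a jump in the waveform. A minimal sketch of that idea follows; the class name, block size and sample rate are my own choices, and no audio device is involved.

```python
import numpy as np


class ContinuousSine:
    """Sine source that carries its phase from one block to the next."""

    def __init__(self, sample_rate=44100, freq_hz=1000.0):
        self.sample_rate = sample_rate
        self.freq_hz = freq_hz
        self.phase = 0.0  # radians, carried across blocks

    def next_block(self, n_frames):
        step = 2.0 * np.pi * self.freq_hz / self.sample_rate
        phases = self.phase + step * np.arange(n_frames)
        # Remember where the next block must start, wrapped to avoid growth.
        self.phase = (self.phase + step * n_frames) % (2.0 * np.pi)
        return np.sin(phases).astype(np.float32)


if __name__ == "__main__":
    src = ContinuousSine(freq_hz=1000.0)
    a = src.next_block(1024)
    src.freq_hz = 2000.0          # change frequency between blocks
    b = src.next_block(1024)
    # The jump across the block boundary is no bigger than a normal sample step.
    print(abs(float(b[0]) - float(a[-1])))
```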

There are a few options - the stream could be used and the bytes pushed directly to the ALSA stack through a pipe in Python; however, I suspect that Python's interpreted maths functions, needed to make the stream vary in pitch without switching between discrete sample buffers, aren't going to keep up.

The obvious thing is to have a fast component, with a command protocol between the two using a pipe or socket. That just means lots of coding, and it would probably be better to simply code up something with the AD9850 using an Arduino project from the GitHub-o-verse. Essentially writing an awgdriver for that in Python: Osc -> Python code -> Arduino -> AD9850.
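For the AD9850 route, the core of any driver is converting the requested frequency into the chip's 32-bit tuning word (output = word x reference clock / 2^32, per the datasheet). A small sketch is below; the 125 MHz reference is an assumption based on the common breakout modules, and the function names are just for illustration.

```python
REF_CLOCK_HZ = 125_000_000  # assumed module oscillator; check your board


def tuning_word(freq_hz, ref_clock_hz=REF_CLOCK_HZ):
    """32-bit AD9850 frequency tuning word for the requested output."""
    if not 0 <= freq_hz < ref_clock_hz / 2:
        raise ValueError("frequency must be below half the reference clock")
    return round(freq_hz * 2**32 / ref_clock_hz)


def actual_frequency(word, ref_clock_hz=REF_CLOCK_HZ):
    """Frequency the chip really produces for a given tuning word."""
    return word * ref_clock_hz / 2**32


for f in (10.0, 1000.0, 20000.0):
    w = tuning_word(f)
    print(f, w, actual_frequency(w))
```

With that reference clock the step size is about 0.03 Hz, which is plenty for a Bode sweep starting at 10 Hz.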

IMO there is no reason C should generate better audio than Python; it's just a programming language, and they both use the same alsa-lib. The simpleaudio library is very simple; not being able to select the ALSA device is kind of a problem. Lots of solutions link to PortAudio (a multiplatform audio library), and IMO PyAudio is quite popular in Python. No callbacks are needed; ALSA supports several modes. The callback mode at the device period boundary is the most performant (AFAIK used e.g. by jackd) but also the most complicated to program. You can just write or read samples in a loop, with or without blocking, e.g. Playing and Recording Sound in Python – Real Python

I have used portaudio in octave's playrec library and it works perfectly on linux, both hw and pcm devices are enumerated. Many projects use portaudio internally, e.g. audacity.

A continuous stream that is simply frequency adjusted on the fly without restarting the buffer would solve the issue.

You always need a sequence of buffers/arrays filled with audio samples. What these arrays contain is up to your application. A continuous frequency sweep can be generated instead of a single frequency tone. The frequency can be obtained continuously from some external source, whatever you code.
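As a sketch of that point: if each buffer is rendered from an array of per-sample frequencies and the end phase is handed to the next buffer, a sweep comes out as one continuous waveform however it is chopped up. The function name, the 48 kHz rate and the 10 Hz to 20 kHz range are assumptions for the example.

```python
import numpy as np


def sweep_block(freqs_hz, start_phase=0.0, sample_rate=48000):
    """Render one buffer from a per-sample frequency array.

    Returns (samples, end_phase) so the next buffer can continue seamlessly.
    """
    increments = 2.0 * np.pi * np.asarray(freqs_hz, dtype=np.float64) / sample_rate
    phases = start_phase + np.cumsum(increments) - increments  # phase at each sample
    end_phase = (start_phase + increments.sum()) % (2.0 * np.pi)
    return np.sin(phases), end_phase


if __name__ == "__main__":
    # Logarithmic sweep from 10 Hz to 20 kHz, rendered as 100 buffers of 480 frames.
    freqs = np.geomspace(10.0, 20000.0, 48000)
    phase, blocks = 0.0, []
    for chunk in np.split(freqs, 100):
        samples, phase = sweep_block(chunk, phase)
        blocks.append(samples)
    audio = np.concatenate(blocks)
    print(audio.shape)
```

The frequency array could just as well be filled from whatever the scope last requested instead of a precomputed sweep.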

Most apps use a component or library to play a previously created buffer - the component that loads that buffer byte-by-byte is usually a compiled language and probably C but that's often hidden behind the APIs.

You can't load each byte of data on an interrupt request using Python, Java or anything else slow.

PyAudio is an API binding for PortAudio, which in turn is a C/C++ layer that executes against the OS APIs.

PortAudio's GitHub has a sine wave generator test app: portaudio/test at master * PortAudio/portaudio * GitHub

If you have a look at portaudio/patest_sine_srate.c at master * PortAudio/portaudio * GitHub you can see stream-based loading in the example program. If there's a Python binding for those API functions, you could stream and use Python to generate the sample data (i.e. the sine wave).

Under the 'wire' tab there's a binding example for stream loading:

PyAudio: PortAudio v19 Python Bindings

Now how good that will be at high speed is something to find out. Coding as we speak..

Every audio API consumes samples in buffers. The buffer is one period long in ALSA - just a few to tens of milliseconds. Typically, while the API consumes the previous buffer at the pace of the soundcard clock, your app prepares the next buffer. Python can easily manage to generate sines at any frequency for standard audio rates (32-768kHz), especially if the optimized C-based NumPy is used.

Alsa-lib is in C, so bindings are typically a thinner or thicker layer in C/C++ too. But still just a layer which takes the app buffers.

EDIT: The APIs in most cases consume the previous buffers, not just one. AFAIK perhaps just ASIO has only two buffers (call bufferSwitch), other audio APIs offer 2+ buffers, allowing to have enough buffers prepared in advance to minimize the risk of underruns/xruns.

So I have a threaded loader for the bytes, and I'm just trying it out for the first time..

I just noted that the stream has a callback for filling - hence I'm switching threading out..

So I had the PortAudio sine test example working (C), and I have the PyAudio callback coded and running, but I'm still trying to get some audio output. The callback is only called once, not on an ongoing basis as expected with the non-blocking form.

I’ll have another bash at it tomorrow.

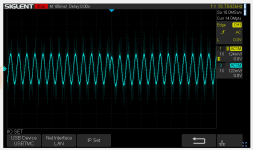

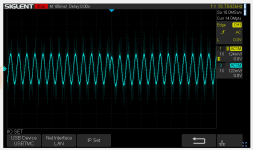

Ok so this is working with pyAudio and PortAudio.

Again - you will need to sudo apt-get install PortAudio and its development files (on Ubuntu the package is portaudio19-dev) for your installation.

It's not perfect - each buffer is filled with samples generated at the sample rate, and the number of samples (frames) per buffer is constant and fixed when the stream is opened. So additional work will be needed to correct for the zero-crossing point, both for normal stream operation and for transitions.

Not much sleep last night so patience is low at the moment. So I'll get to it when I can.

Just looking at the waveform, it's clear that the sample start-stop needs some work 😀 (just as discussed earlier) - this may take the form of using the timing information passed on the callback to resolve this.
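One possible way to tidy up the buffer boundaries, sketched below, is to keep the phase inside the object that PyAudio calls back, so every buffer starts exactly where the previous one ended. This is not the attached code: the class, the 0.5 amplitude and the 1024-frame buffer are my assumptions, and it relies only on PyAudio's documented callback signature `(in_data, frame_count, time_info, status)` returning `(data, flag)`.

```python
import numpy as np

SAMPLE_RATE = 44100  # assumed; use whatever rate the stream is opened with


class SineCallback:
    """Callable in the shape PyAudio expects for its stream_callback."""

    def __init__(self, freq_hz=1000.0, continue_flag=0):
        self.freq_hz = freq_hz              # may be changed while the stream runs
        self.phase = 0.0                    # radians, carried between callbacks
        self.continue_flag = continue_flag  # pyaudio.paContinue when streaming

    def __call__(self, in_data, frame_count, time_info, status):
        step = 2.0 * np.pi * self.freq_hz / SAMPLE_RATE
        phases = self.phase + step * np.arange(frame_count)
        self.phase = (self.phase + step * frame_count) % (2.0 * np.pi)
        samples = (0.5 * np.sin(phases)).astype(np.float32)
        return samples.tobytes(), self.continue_flag


def play(seconds=2.0):
    """Open an output stream; needs PyAudio and a working sound device."""
    import time
    import pyaudio

    pa = pyaudio.PyAudio()
    source = SineCallback(continue_flag=pyaudio.paContinue)
    stream = pa.open(format=pyaudio.paFloat32, channels=1, rate=SAMPLE_RATE,
                     output=True, frames_per_buffer=1024, stream_callback=source)
    time.sleep(seconds / 2)
    source.freq_hz = 2000.0   # takes effect at the next callback, phase intact
    time.sleep(seconds / 2)
    stream.stop_stream()
    stream.close()
    pa.terminate()
```

Calling `play()` should give a 1 kHz tone that steps to 2 kHz halfway through; the callback itself can be exercised without any sound hardware.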
