Direct Stream Digital - Wikipedia

Maybe I was misinformed...

Yes, I am well aware of what it is: a dying format that brings no value. Topic for another thread.

Some people find DSD (as distinct from SACD) to be quite useful. Long live (high sample rate) DSD!

It's certainly a nice format when you want to DIY your own DAC (see the noDAC and Signalyst DSC1 threads), but it has some drawbacks: unsuitability for most kinds of digital signal processing, such as those used during editing; too few quantization levels to allow normal dithering; and incompatibility with the highest-performance DAC technologies unless it is first converted to PCM. This is very off-topic, of course.
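To illustrate what "too few quantization levels" means in practice, here is a minimal first-order sigma-delta modulator in Python. It is a toy under stated assumptions: real DSD encoders use much higher-order loops, and the function name is hypothetical. The output only ever takes two values; the input level is carried by the density of +1s versus -1s. For inputs within ±1 the integrator stays bounded, so the gap between the input average and the output average shrinks roughly as 1/N.

```python
def sigma_delta_1bit(x):
    """Toy first-order sigma-delta modulator (hypothetical helper, not a DSD encoder).

    Input samples are assumed to lie in [-1, 1]; the output is a stream of +/-1 values.
    """
    integrator = 0.0
    prev_bit = 0.0
    bits = []
    for sample in x:
        # accumulate the error between what we want and what we last sent
        integrator += sample - prev_bit
        bit = 1.0 if integrator >= 0.0 else -1.0  # the only two levels available
        bits.append(bit)
        prev_bit = bit
    return bits


if __name__ == "__main__":
    stream = sigma_delta_1bit([0.5] * 1000)
    print(sorted(set(stream)))         # two quantization levels only
    print(sum(stream) / len(stream))   # the average tracks the 0.5 input level
```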

...incompatibility with the highest-performance DAC technologies unless it is first converted to PCM.

What if the digital audio started out as 16/44.1 (or 24/192) and was converted to DSD512? Would it still be incompatible with the highest-performance DAC technologies?

No, high-performance DACs are not single-bit anymore, so it must be converted. Might as well be PCM to begin with. Surely, you see how weird it is to record it, convert it to 352.8 kHz PCM (DXD) to do all the processing, convert it back to 1-bit DSD, transport it to a DAC, which then converts it back to some internal 6-bit representation.

In a world where we can just use high-res PCM to begin with, I don't see the point. It might have made sense if 44.1 kHz were the only alternative or the world had stuck with 1-bit converters.
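For reference, the rates in this exchange are all multiples of 44.1 kHz: DSD512 is 512 × 44.1 kHz of 1-bit data, and DXD is 24-bit PCM at 352.8 kHz. The sketch below (names are illustrative) just computes raw per-channel bit rates, ignoring container overhead and any compression.

```python
BASE_RATE_HZ = 44_100  # DSD and DXD rates are multiples of the CD rate


def dsd_rate(multiple):
    """Sample rate of DSD64 ... DSD512: multiple x 44.1 kHz."""
    return multiple * BASE_RATE_HZ


def bitrate_per_channel(rate_hz, bits_per_sample):
    """Raw bits per second for one channel, no container overhead."""
    return rate_hz * bits_per_sample


formats = {
    "PCM 16/44.1": bitrate_per_channel(BASE_RATE_HZ, 16),
    "DXD 24/352.8": bitrate_per_channel(8 * BASE_RATE_HZ, 24),
    "DSD64": bitrate_per_channel(dsd_rate(64), 1),
    "DSD512": bitrate_per_channel(dsd_rate(512), 1),
}

for name, bps in formats.items():
    print(f"{name:>13}: {bps / 1e6:8.4f} Mbit/s per channel")
```

DSD512 works out to 22.5792 Mbit/s per channel, about 2.7 times the 8.4672 Mbit/s of DXD.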

Surely, you see how weird it is...which then converts it back to some internal 6-bit representation.

Not exactly. I don't know about all DACs, but I do know about Sabre DACs. They handle DSD somewhat differently from PCM, and the datasheet shows a very distinct difference (unfortunately under NDA). If conversion to DSD512 is done very carefully, subjective SQ can be better than with high sample rate PCM. However, DACs with high-quality external PCM interpolation filters may compete very well with high sample rate DSD. For most DACs in DIY use, it is easier to obtain good-sounding DSD using computer software than to produce good-sounding PCM using an FPGA interpolation filter.

No offense, I don't think you understand what's going on. I have the datasheet, just took a look at it. This is off topic so best to just leave it.

I could say exactly the same to you.

Good day.

If you start another thread or PM me, maybe you can explain how the DAC does not convert it to the internally required 6-bit format, as suggested by the block diagram on the second page of the datasheet.

I didn't know that Bruce is gone. We talked regularly, going back as far as the days of nothing but op-amp-based analog filters. The last time we talked, he was working on some software to help his daughter deal with ADHD.

Yes, Scott; as you probably know by now (Google), he died piloting a plane.

I never met him, but I have to say he did an incredible amount in the time he was here, from electronics design/manufacturing to FOHS for Elvis, Bruce, Streisand...

Pretty amazing life by any standards.

Is there any existing thread that discusses the pros and cons of software-based digital filtering/oversampling vs. common hardware-based solutions, e.g. inside the DAC chip or a DF chip?

quantran,

If you go over to Computer Audiophile, search for threads about the HQPlayer software, and specifically for input by its designer, username 'Miska' (I forget his actual name).

An incredibly talented software engineer who does share some insight.

Is there any existing thread that discusses the pros and cons of software-based digital filtering/oversampling vs. common hardware-based solutions, e.g. inside the DAC chip or a DF chip?

Generally speaking, if real-time streaming (i.e., streaming audio) performance isn't required, then a software filter can easily be made to arbitrarily high performance: just add more math instructions or increase the bit depth of the filter coefficients; it's only code. If real-time throughput is required from a software filter, then the underlying hardware processing engine (CPU or DSP) must also be of sufficient performance.

Hardware filters can easily support real-time streaming data throughput, but are not as easily made to an arbitrarily high filter performance.
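As a sketch of the "just add more math" point about software filters, here is a Blackman-windowed sinc interpolator in plain Python. The window choice, function names and parameters are assumptions for illustration, not what HQPlayer or any DAC chip actually uses. `half_width` is the knob: more taps give a sharper transition band and deeper stopband at the cost of more multiply-adds per output sample, which is what real-time operation has to pay for.

```python
import math


def windowed_sinc_interpolator(factor, half_width):
    """Blackman-windowed sinc taps for integer-factor interpolation.

    half_width counts original-rate zero crossings on each side of the centre;
    raising it lengthens the filter (sharper transition, deeper stopband).
    """
    n_taps = 2 * factor * half_width + 1
    mid = (n_taps - 1) / 2
    taps = []
    for n in range(n_taps):
        x = (n - mid) / factor
        sinc = 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)
        phase = 2 * math.pi * n / (n_taps - 1)
        blackman = 0.42 - 0.5 * math.cos(phase) + 0.08 * math.cos(2 * phase)
        taps.append(sinc * blackman)
    return taps


def upsample(x, factor, taps):
    """Zero-stuff x by `factor` and FIR-filter it (direct form, full convolution).

    Output is delayed by (len(taps) - 1) // 2 samples at the new rate. Stuffed zeros
    are skipped instead of multiplied, which is the idea behind polyphase filters.
    """
    n_out = len(x) * factor + len(taps) - 1
    out = []
    for m in range(n_out):
        acc = 0.0
        for j, h in enumerate(taps):
            i = m - j  # index into the (virtual) zero-stuffed stream
            if i % factor == 0 and 0 <= i // factor < len(x):
                acc += h * x[i // factor]
        out.append(acc)
    return out
```

For example, `upsample(samples, 4, windowed_sinc_interpolator(4, 32))` gives a 4x oversampler with 257 taps. Because the centre tap is 1 and every other tap at an original-sample offset sits on a sinc zero crossing, the original samples pass through unchanged apart from the delay.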

Is there any existing thread that discusses the pros and cons of software-based digital filtering/oversampling vs. common hardware-based solutions, e.g. inside the DAC chip or a DF chip?

The best way to research it is to try it yourself. It's free to try if you already have a computer and an audio interface. You may not hear any meaningful difference, and then you can sleep better. 😉

Generally speaking, if real-time streaming (i.e., streaming audio) performance isn't required, then a software filter can easily be made to arbitrarily high performance.

IIRC, BruteFIR was capable of 3.4 million floating-point taps in real time on a 1 GHz processor, years ago.

From: BruteFIR

With a massive convolution configuration file setting up BruteFIR to run 26 filters, each 131072 taps long, each connected to its own input and output (that is 26 inputs and outputs), meaning a total of 3407872 filter taps, a 1 GHz AMD Athlon with 266 MHz DDR RAM gets about 90% processor load, and can successfully run it in real time. The sample rate was 44.1 kHz, BruteFIR was compiled with 32 bit floating point precision, and the I/O delay was set to 375 ms. The sound card used was an RME Audio Hammerfall.
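A rough back-of-the-envelope check of why that benchmark is possible at all: run directly in the time domain, 3,407,872 taps at 44.1 kHz is far beyond a 1 GHz CPU, and BruteFIR is a frequency-domain (FFT-based) convolution engine. The sketch assumes uniformly partitioned overlap-save with a partition length of 8192 samples (chosen only because two partitions of latency, about 372 ms, fits the 375 ms I/O delay quoted above) and uses the common 5·N·log2(N) flop estimate for a complex FFT. The function names are hypothetical, and the partitioning BruteFIR actually used is not stated in the quote.

```python
def direct_form_flops(total_taps, fs):
    """Time-domain FIR: one multiply and one add per tap per output sample."""
    return 2 * total_taps * fs


def fft_flops(n):
    """Common rough estimate for a complex radix-2 FFT of size n: 5 n log2 n."""
    return 5 * n * (n.bit_length() - 1)


def partitioned_ols_flops(filter_len, partition_len, fs, channels):
    """Uniformly partitioned overlap-save, one filter per channel.

    Per block of partition_len output samples: one forward and one inverse FFT of
    size 2 * partition_len, plus one complex multiply-accumulate (8 flops) per bin
    for each of the filter_len / partition_len partitions.
    """
    n = 2 * partition_len
    partitions = filter_len // partition_len
    per_block = 2 * fft_flops(n) + partitions * 8 * n
    blocks_per_second = fs / partition_len
    return per_block * blocks_per_second * channels


direct = direct_form_flops(26 * 131_072, 44_100)
fast = partitioned_ols_flops(131_072, 8_192, 44_100, 26)
print(f"direct form : {direct / 1e9:7.1f} GFLOP/s")
print(f"partitioned : {fast / 1e9:7.2f} GFLOP/s")
print(f"ratio       : {direct / fast:7.0f}x")
```

With these assumptions the direct form comes out at about 300 GFLOP/s and the partitioned FFT version at about 0.61 GFLOP/s, a ratio of roughly 490. The FFT estimate is pessimistic for real-valued audio, since real-input transforms cost about half as much.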

You remember correctly. 🙂 I'll need to double-check (if I can find a decent benchmark), and it will depend on FP vs. INT calculations, but I'm betting on that Athlon being quite a bit slower than a single Cortex-A53 core of an RPi3 chip clocked similarly.

Edit: here we go! https://www.element14.com/community...ensive-raspberry-pi-comparison-benchmark-ever

"Quite a bit slower" was incorrect; they trade blows fairly closely!

IIRC, in the 80s Wadia used their so-called "French curve" algorithm, based on spline interpolation. I should reread about Bézier curves, but I think it is special: in contrast to most other interpolation strategies, the resulting continuous curve is smooth but does not pass through the original samples.

Wadia evidently traded away amplitude linearity and stopband attenuation for better time response (a short impulse response), with the risk of more IMD if high-level content near the upper limit of the audio band was sampled.
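One textbook spline with the properties described above is the uniform cubic B-spline: it has continuous first and second derivatives, but it approximates rather than interpolates, so it does not pass through the original samples. Whether Wadia's "French curve" algorithm was exactly this is not stated in the thread, so treat the sketch below as a generic illustration with a hypothetical function name. At each original sample instant the output is (x[n-1] + 4·x[n] + x[n+1]) / 6, so a full-scale tone at Nyquist comes out at one third of its amplitude: the kind of amplitude-linearity trade described above.

```python
def cubic_bspline_upsample(x, factor):
    """Evaluate a uniform cubic B-spline with control points x at `factor` points per sample.

    The first and last sample intervals are skipped for simplicity, so the output
    covers len(x) - 3 intervals. The four basis weights sum to 1 for every t, so a
    constant input passes unchanged, but the curve generally misses the samples.
    """
    out = []
    for i in range(1, len(x) - 2):
        p0, p1, p2, p3 = x[i - 1], x[i], x[i + 1], x[i + 2]
        for k in range(factor):
            t = k / factor
            b0 = (1 - t) ** 3 / 6
            b1 = (3 * t**3 - 6 * t**2 + 4) / 6
            b2 = (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6
            b3 = t**3 / 6
            out.append(b0 * p0 + b1 * p1 + b2 * p2 + b3 * p3)
    return out


if __name__ == "__main__":
    smooth = cubic_bspline_upsample([0.0, 0.0, 1.0, 0.0, 0.0], 4)
    print(smooth[4])  # value at the instant of the 1.0 sample: 4/6, not 1.0
```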

I don't know why no one currently uses the spline the way Wadia did.

I still have the Wadia 27, and (as far as I am able to hear) it still gives me more music than an AKM AK4490 or ESS ES9038PRO DAC. I always listen to acoustic music content rather than boom-boom. 😀

Things get even worse listening-wise (pre/post echoes that simply do not exist) when not using the fast filter mode.

Hp

IIRC, in the 80s Wadia used their so-called "French curve" algorithm, based on spline interpolation. I should reread about Bézier curves, but I think it is special: in contrast to most other interpolation strategies, the resulting continuous curve is smooth but does not pass through the original samples.

That is correct, there is a huge body of literature on polynomial spline interpolation. The mathematical trick is to keep the derivatives continuous so there are no discontinuities which are physically unreal. IMO frankly the whole idea was based on the "doesn't this look nicer" principle and it has the usual problem with aliasing.

BTW, has anyone ever tried a brute-force FFT-based filter the length of an entire song? A 3-minute piece at 96 kHz would take a 17,280,000-point transform; a modern PC should be able to handle that in double-precision floating point.
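For scale: 180 s × 96,000 samples/s = 17,280,000 points, about 276 MB per array of complex doubles (16 bytes each), so memory is not the obstacle. The sketch below shows the idea with a pure-Python radix-2 FFT, which only handles power-of-two lengths; 17,280,000 = 2^10 · 3^3 · 5^4 would need a mixed-radix library such as FFTW or numpy.fft. Names and the brick-wall choice are illustrative assumptions. Note that one transform over the whole track treats it as periodic, so any ringing near the end wraps around to the start.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n & (n - 1):
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddled = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


def ifft(spectrum):
    """Inverse FFT via the conjugation trick: ifft(X) = conj(fft(conj(X))) / n."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in spectrum])]


def brickwall_lowpass(x, fs, cutoff_hz):
    """Filter the whole signal with one transform: zero every bin above cutoff_hz."""
    n = len(x)
    spectrum = fft(x)
    for k in range(n):
        # bins k and n - k hold the positive and negative copies of one frequency
        freq = min(k, n - k) * fs / n
        if freq > cutoff_hz:
            spectrum[k] = 0j
    return [v.real for v in ifft(spectrum)]
```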

Wadia used the Burr-Brown PCM1702/PCM1704 R2R chips at a high sample rate (x64). Today's AKM/ESS DACs cannot be fed at such a high sample rate. Also, with the Wadia spline, some early roll-off at 22 kHz was the trade-off.

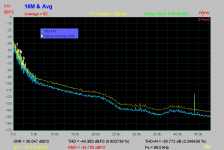

BTW: I did a 16M-point FFT vs. an 82-times-averaged 256k-point FFT; they look nearly equal. IMHO a spectrogram or a TFD (time-frequency distribution, as used to analyze birdsong) would show much more of the real beef.

Picture note: Lindberg 2L-087_stereo-96kHz_06 as the source (nice to see some spurious tones 😀)
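An "82 times averaged 256k FFT" is essentially an averaged periodogram: split the track into segments, transform each, and average the power spectra, trading frequency resolution for a less noisy estimate. Below is a minimal sketch of Bartlett's method (no window, no overlap; Welch's method adds both) with hypothetical names and a direct DFT to keep it short; a real analysis would use an FFT. Keeping each segment's spectrum instead of averaging them gives a spectrogram, the simplest kind of time-frequency distribution.

```python
import cmath
import math


def dft_power(segment):
    """|X[k]|^2 for k = 0..N/2 via a direct DFT (O(N^2), fine for a demo)."""
    n = len(segment)
    power = []
    for k in range(n // 2 + 1):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(segment))
        power.append(abs(acc) ** 2)
    return power


def averaged_spectrum(x, seg_len):
    """Bartlett's method: average the power spectra of non-overlapping segments.

    Returns (averaged power per bin, number of segments averaged).
    """
    n_segs = len(x) // seg_len
    total = [0.0] * (seg_len // 2 + 1)
    for s in range(n_segs):
        p = dft_power(x[s * seg_len:(s + 1) * seg_len])
        total = [a + b for a, b in zip(total, p)]
    return [v / n_segs for v in total], n_segs
```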

- Oversampled DAC without digital filter vs NOS