Some horrible effects can be mitigated using Baker clamps on the VAS and even on the CCS. You don't need to make it symmetrical to implement a BC on the CCS.

Perhaps we can experiment on the grounded bridge.

Another aid is to make sure that the VAS stage does not invert when saturated. The BC helps to stop saturation, but this also means making sure that the driver/output stage clips first. To make the driver/output stage clip first, use higher supply rails for the VAS/CCS than for the output stage.

Having two supplies makes the PSU overly complex. But I always heard that clipping is better if done before the output stages, as it would be softer and less damaging.

These two requirements (just below the clipping level, not too much distortion) can't both be satisfied. You can choose which you prefer, that is all. If you want low distortion then the clipping must be very smooth and so must start early, so you get some distortion where the original amp would give none. Leave it late and the clipping (however you do it) must be sharp and so generate lots of high-order distortion components. Fourier can't be cheated! If it were possible to do what you want then it would have been done by now. Mathematics says it is impossible.
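To make the Fourier argument concrete, here is a minimal numerical sketch. It compares an ideal hard clipper (perfectly linear right up to the limit) with tanh, which is used only as an arbitrary stand-in for a "smooth, early" clipper; neither curve is meant to model a real amplifier. Below the limit the hard clipper adds nothing while the soft curve already distorts; once overdriven, the hard clipper's spectrum reaches much higher harmonic orders.

```python
import math


def harmonic_magnitude(period, k):
    """Magnitude of harmonic k for ONE period of a waveform (naive DFT bin k)."""
    n = len(period)
    re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(period))
    im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(period))
    return math.hypot(re, im)


def thd(period, n_harmonics=15):
    """Total harmonic distortion: harmonics 2..n_harmonics relative to the fundamental."""
    fundamental = harmonic_magnitude(period, 1)
    rest = sum(harmonic_magnitude(period, k) ** 2 for k in range(2, n_harmonics + 1))
    return math.sqrt(rest) / fundamental


def hard_clip(x, limit=1.0):
    """'Late' clipping: perfectly linear up to the limit, then flat."""
    return max(-limit, min(limit, x))


def soft_clip(x, limit=1.0):
    """'Early', smooth clipping; tanh is an arbitrary stand-in for any soft curve."""
    return limit * math.tanh(x / limit)


def sine_period(amplitude, n=256):
    """Exactly one period of a sine, sampled n times."""
    return [amplitude * math.sin(2 * math.pi * i / n) for i in range(n)]


if __name__ == "__main__":
    for amp in (0.5, 0.9, 2.0):
        s = sine_period(amp)
        print(f"amplitude {amp}: THD hard {thd([hard_clip(x) for x in s]):.4f}, "
              f"soft {thd([soft_clip(x) for x in s]):.4f}")
```

Comparing, for example, the 15th harmonic with the 3rd at the same overdrive shows the hard clipper's spectrum extending much further up than the soft curve's, which is the "lots of high-order components" point.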

One proviso: you could do better by doing it in near-real-time rather than real-time. A lookahead function (i.e. delay the signal, to give you time to do the processing) could detect upcoming clipping events and modify the signal smoothly to avoid them. You still get distortion when doing this, but between events you get the signal undistorted.
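A lookahead limiter of this kind can be sketched offline as follows. This is an illustration under simple assumptions, not any particular product's algorithm: the gain each sample needs to stay at the threshold is computed first, and each output sample then takes the smallest such gain within a window of `lookahead` samples, relaxed linearly with distance, so the gain ramps down before a peak and back up after it (the triangular ramp is my choice of smoothing). Samples farther than `lookahead` from any over-threshold sample pass through untouched.

```python
def lookahead_limit(samples, threshold, lookahead):
    """Offline sketch of a lookahead peak limiter.

    required[j] is the gain that would bring sample j down to `threshold`.
    Output sample i uses the smallest such gain within +/- `lookahead` samples,
    relaxed linearly towards unity with distance, so the gain ramps instead of
    jumping. In real time the audio would have to be delayed by `lookahead`
    samples so that upcoming peaks are already known.
    """
    if lookahead < 1:
        raise ValueError("lookahead must be at least one sample")
    required = [1.0 if abs(x) <= threshold else threshold / abs(x) for x in samples]
    n = len(samples)
    out = []
    for i, x in enumerate(samples):
        gain = 1.0
        for j in range(max(0, i - lookahead), min(n, i + lookahead + 1)):
            # Gain demanded by sample j, relaxed linearly with distance |i - j|.
            gain = min(gain, required[j] + (1.0 - required[j]) * abs(i - j) / lookahead)
        out.append(x * gain)
    return out


if __name__ == "__main__":
    burst = [0.1] * 20 + [2.0] + [0.1] * 20
    print([round(v, 3) for v in lookahead_limit(burst, threshold=1.0, lookahead=5)])
```

Because the window always includes sample i itself, no output sample can exceed the threshold; the price is the `lookahead`-sample delay discussed in the thread.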

...

That is what I'm looking for. Doing some behavioral taming on the amp or compressing isn't what I'm looking into, although I was considering compression a while back. Compression is a bit too complex to do right, and it does cause some distortion.

I think it can be done. We'll find a way.

If you are thinking of logarithmic compression then, yes, that is distortion.

And, to LIMIT the signal's ability to drive the amp into clipping then there (by definition) is going to be distortion.

However, if you detect a signal level that would normally produce clipping and, through optical control of a light-dependent resistor (LDR), you adjust the gain of an amplifier stage, only the first few cycles will have non-linear gain applied to them.

If the level of signal persists then the gain would stay fixed and no delta-gain distortion would occur.

The power amplifier would never see a level of signal that could produce consistent clipping.

Think about it.
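One rough way to see the LDR argument is a feed-forward gain cell driven by an envelope follower with a fast attack and a slow release. The sketch assumes a simple one-pole model for the opto cell, which real LDRs only approximate (their response is asymmetric and level dependent), and the time constants are illustrative. With a steady tone above the threshold, the gain moves during the first few milliseconds and then settles to an almost constant value, so the steady-state output is essentially a scaled, undistorted copy of the input.

```python
import math


def ldr_style_limiter(samples, fs, threshold, attack_s=0.005, release_s=0.5):
    """Feed-forward gain control loosely modelled on an LED/LDR opto cell.

    A one-pole envelope follower (fast attack, slow release) tracks the
    rectified signal; whenever the envelope exceeds `threshold` the gain is
    set to threshold / envelope. Returns (output samples, gain per sample).
    """
    a_att = math.exp(-1.0 / (attack_s * fs))
    a_rel = math.exp(-1.0 / (release_s * fs))
    env = 0.0
    out, gains = [], []
    for x in samples:
        level = abs(x)
        coeff = a_att if level > env else a_rel
        env = coeff * env + (1.0 - coeff) * level
        gain = 1.0 if env <= threshold else threshold / env
        out.append(x * gain)
        gains.append(gain)
    return out, gains


if __name__ == "__main__":
    fs = 48_000
    # A steady 1 kHz tone at twice the threshold, held for two seconds.
    tone = [2.0 * math.sin(2 * math.pi * 1000 * n / fs) for n in range(2 * fs)]
    _, g = ldr_style_limiter(tone, fs, threshold=1.0)
    print("gain after 1 ms:", round(g[48], 3), " after 2 s:", round(g[-1], 3))
```

Because this envelope sits between the average and the peak of the rectified signal, output peaks can still exceed the threshold somewhat; a real design would calibrate for that or use a peak-responding detector.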

Just a slightly lower output level. It needs to be properly calibrated to act right before the real clipping occurs. And if the action is to limit drive level, then the extra distortion can't be that much.

I would think it is. When doing PA, sometimes the drive level can be pushed too far and no one really notices when so many amps and speakers are used. And then tweeters can be harmed, or worse...

If an amp is used for PA sometimes, and not only for private listening, clipping is likely to occur.

I will look into limiting the input drive level. This is the best way to go I think.

MC2 Audio used a digitally controlled PSU in their MC series. If it detected too high an input voltage, it would reduce the supply rail voltage to avoid clipping the amp's output and fade it back up when the danger had passed.

At least that is how I understood the inner workings of those amps.

These two requirements (just below the clipping level, not too much distortion) can't both be satisfied. You can choose which you prefer, that is all. If you want low distortion then the clipping must be very smooth and so must start early, so you get some distortion where the original amp would give none. Leave it late and the clipping (however you do it) must be sharp and so generate lots of high-order distortion components. Fourier can't be cheated! If it were possible to do what you want then it would have been done by now. Mathematics says it is impossible.

Since higher distortion happens either way, and we don't want the amp ever to clip, it's the trade-off to make.

I'm not seeking to change the amp's behavior at clipping, I'm trying to find a way to prevent it from being driven into clipping in the first place.

If there is a delayed reaction in the input drive limiting, then the amp may clip for a short time, so it should of course be made to clip as "nicely" as possible, avoiding rail sticking and the like; but since the input drive would quickly be reduced, the clipping would be very limited.

Besides, this can be taken as a warning that the amp is being over-driven, so the guy at the console takes appropriate action and pulls back on the throttle a bit, which then would stop over-driving and stop any clipping and the associated distortion.

One proviso: you could do better by doing it in near-real-time rather than real-time. A lookahead function (i.e. delay the signal, to give you time to do the processing) could detect upcoming clipping events and modify the signal smoothly to avoid them. You still get distortion when doing this, but between events you get the signal undistorted.

That means introducing a lag, a phase shift, which would probably not be so pretty in a multi-way speaker system and in a big PA system with many speakers, although in the brouhaha it may go unnoticed as does most of the distortion. Who can distinguish a small amount of distortion in a concert or ballroom at high power levels??
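The size of that lag is easy to put numbers on. A lookahead buffer is a pure delay, and a pure delay has a phase lag that simply grows linearly with frequency; if the same delay is applied ahead of the crossover, every driver is delayed equally, so their relative phase is unchanged and the effect is like moving the whole stack back a little. It only misaligns things if some channels are delayed and others are not. A small sketch of the arithmetic, assuming 343 m/s for the speed of sound:

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 degrees C


def latency_ms(lookahead_samples, sample_rate_hz):
    """Delay added by a lookahead buffer of the given length."""
    return 1000.0 * lookahead_samples / sample_rate_hz


def equivalent_distance_m(delay_ms):
    """How far sound travels in the same time, i.e. the apparent 'move back'."""
    return SPEED_OF_SOUND_M_S * delay_ms / 1000.0


def phase_lag_deg(delay_ms, freq_hz):
    """Phase lag of a pure delay at freq_hz; grows linearly with frequency."""
    return 360.0 * freq_hz * delay_ms / 1000.0


if __name__ == "__main__":
    d = latency_ms(48, 48_000)
    print(d, "ms ->", equivalent_distance_m(d), "m;", phase_lag_deg(d, 100), "deg at 100 Hz")
```

A 1 ms lookahead at 48 kHz is 48 samples and corresponds to roughly 34 cm of extra acoustic path.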

Situations of clipping can only occur when someone is pushing the amps hard, and that (usually) doesn't happen in private use, but may happen a lot in PA.

The best way is to have much more power than really needed and never push them too hard.

If you are thinking of logarithmic compression then, yes, that is distortion.

Sure, and it's better than a destructive signal sent to tweeters. Plus this is a warning to the dude at the controls that he's pushing it too hard, so he should pull back a bit to stop that distortion.

I'm only thinking about "protection", not a normal way to function. No amp should be kept at clipping all the time anyway. There will be distortion in any case, so the point is to prevent it, and there is no way to prevent that in the hardware, so it's the software (the dude pushing the volume) that needs to react.

However, if you detect a signal level that would normally produce clipping and, through optical control of a light-dependent resistor (LDR), you adjust the gain of an amplifier stage, only the first few cycles will have non-linear gain applied to them.

If the level of signal persists then the gain would stay fixed and no delta-gain distortion would occur.

The power amplifier would never see a level of signal that could produce consistent clipping.

Now we're getting somewhere! 🙂😀

It's in such directions that I would be looking...

Think about it.

Thinking....

MC2 Audio used a digitally controlled PSU in their MC series. If it detected too high an input voltage, it would reduce the supply rail voltage to avoid clipping the amp's output and fade it back up when the danger had passed.

At least that is how I understood the inner workings of those amps.

This is rather complex, but it's in the line of what I'm thinking.

It would complicate the psu a lot.

Plus I suppose this would only happen on the rails of the input stages, to avoid clipping the outputs. But I'm not sure how this would prevent clipping, though, as lowering the rails would lower the threshold at which clipping happens.

Or you could soft clip and set the gain to the final amp to match.

Maybe, if there is no good way to act on the gain before the amp's input.

This would likely cause less distortion than that caused by the amp's clipping.

The question is, which causes more distortion: this soft clipping or a simple reduction in gain?

Hi,

Dropping PSU voltage in response to a high input signal is hardly going to prevent clipping, it will do the opposite.

The basic premise that anything is better than amplifier clipping is simply wrong, and there is no such thing as an amplifier that doesn't clip; all linear amplifiers do.

Any scheme that prevents an amplifier clipping sounds terrible, full stop, and is not hifi. This is a "problem" that doesn't need fixing. Turn it down a bit if it sounds naff.

rgds, sreten.


Dropping PSU voltage in response to a high input signal is hardly going to prevent clipping, it will do the opposite.

That was pretty much what I thought: it just lowers the threshold for clipping, so it makes it happen earlier.

doesn't need fixing. Turn it down a bit if it sounds naff.

When used in PA, the clipping may go unnoticed, or the guy at the console may not even care and keeps the volume up, regardless of distortion and other things that may happen.

What I'm trying to do is have that volume turned down a bit, automatically, and keep it below the actual amp's clipping level. The distortion induced by a volume lowering must be less damaging and perhaps much less audible than the amp's clipping induced one.

I'm thinking of something along the lines of an opamp used as a VCA, which just stays at unity gain most of the time but lowers the gain beyond a set threshold. There would be some lag in response, so perhaps there is a way to help that.
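The "unity gain until a threshold, then less gain" behaviour is the static curve of a limiter. Below is a minimal sketch of that gain computer in dB with a hard knee; the function names are illustrative. The lag mentioned above belongs to the detector that feeds this curve (its attack and release), not to the curve itself.

```python
def limiter_gain_db(level_db, threshold_db, ratio=float("inf")):
    """Static gain curve of a hard-knee limiter/compressor.

    Below threshold the gain is 0 dB (unity). Above it, each dB of overshoot
    is reduced by (1 - 1/ratio) dB; ratio=inf gives a brick-wall limiter whose
    output level never rises above threshold_db.
    """
    if level_db <= threshold_db:
        return 0.0
    return -(level_db - threshold_db) * (1.0 - 1.0 / ratio)


def db_to_linear(gain_db):
    """Gain in dB to the linear factor a VCA would apply."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    for level in (-10.0, 0.0, 3.0, 6.0):
        g = limiter_gain_db(level, threshold_db=0.0)
        print(f"input {level:+.0f} dB -> gain {g:+.1f} dB (x{db_to_linear(g):.3f})")
```

With an infinite ratio the output stays pinned at the threshold once it is exceeded; a finite ratio turns the same curve into a compressor.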

Clipping is not a normal mode in which to function anyway; in any case distortion is induced, and the main point is to avoid the amp's clipping-induced distortion, which is harsher and can damage tweeters. It doesn't really matter if a little extra distortion is caused by the clipping prevention, as it would be created anyway by the amp while clipping, and the key is to set the threshold just right, so the clipping prevention circuitry acts just a tad before clipping would happen in the amp. Can't that be done? I think there must be a way.

Hi,

It can be done, it's not hifi. It's not new. It sounds sh*t*.

And it is far more complicated than it first appears.

The problem has existed in radio since it was invented and, not surprisingly, there is a copious quantity of information available on the subject.

Also in recording. I'll leave you to work out why it doesn't apply to recorded playback generally.

rgds, sreten.



Have you measured the voltage on the speakers when listening ?

Unless your speakers are extremely inefficient you will be surprised... 1 W is already pretty loud... do your amps actually clip? Or maybe not?...

What is the problem with clipping, btw? (unless we're talking PA...)


Amps rarely clip in "normal" use, but PA isn't really normal use and clipping will happen in PA.

Regardless, with or without prevention, it will happen, and distortion will be present. However the distortion is the least of the worries, as what's more to be worried about is the speaker damage, and as long as the clipping stays light, there may not be much to worry about, except perhaps for some sensitive tweeters.

What must be prevented at all cost is the hard clipping, the one that causes DC to be present on the speakers and that if sustained, can cause serious damage.

Like I said, a good prevention circuit doesn't need to act until the last moment, right before the onset of clipping, leaving the sound program (mostly) intact, and when it acts, it's more to prevent damage than to avoid hearing distortion.

When distortion is heard, that is a clear sign that someone should turn down the volume on that thing, and if the dude who's supposed to watch this is not there to limit that drive level, then this must happen automatically. That is what I am after.

And this isn't too hard to do, and certainly not impossible. It's been done for years by commercial amp makers like Carver, Crown, Crest, etc.

I think a good one might be Carver's limiter (clipping eliminator), which does exactly what I am describing: it's placed ahead of the amp's input and acts on its drive level to keep it below clipping.

I'm not looking for the five-legged sheep or white elephant; I think this is an acceptable method, it doesn't seem overly complex and doesn't require many parts. Plus it can be switched off, so when the amp isn't used for PA and there won't be an idiot driving it into hard clipping, the protection can safely be turned off and all is well.

The amp still needs to have its clipping behavior checked out and tamed if need be, just in case, but that doesn't mean it can remain without protection in case it's placed in the hands of someone who doesn't care or doesn't pay attention, or worse, who's an ignoraNus. 😀😀😀

I never let my amps clip. I want clean sound and can't stand hearing bad sound, so if clipping does happen, I immediately remedy the situation.


Hi Members,

There are claims that SYMEF does not clip and that it easily out-paces an amp on ±75 V rails despite being on ±40 V rails. It could be a starting point for designing a non-clipping amplifier.

kind regards,

Harrison.


Hi Harrison,

Haven't seen you around for a while, but that is quite a bold claim to make. What was the outcome of designing the best sounding amplifier ever?

An amplifier, any amplifier, clips when the output voltage swing approaches the supply rails. It is very difficult to predictively adjust either the supply voltage (as many Japanese amps did in the '80s by switching momentarily to a higher rail voltage) or the amplifier gain in "real time". There were also some gain compression/expansion units around at that time attempting to address this problem. At best, listeners commented that the sound had a pumping character and was not very appealing.
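The rail limit can be turned into a rough power ceiling: the output can swing to about the rail voltage minus some output-stage loss before it clips, and a sine of peak V into R delivers V²/2R. The 3 V `dropout_v` below is a placeholder assumption, not a figure for SYMEF or any other design. The same arithmetic also shows why lowering the rails lowers the clip point rather than avoiding it.

```python
def max_sine_power_w(rail_v, load_ohm, dropout_v=3.0):
    """Rough clip-free sine power from symmetric +/-rail_v supplies.

    The output can swing to about rail_v - dropout_v before it clips.
    dropout_v is a placeholder for output-stage saturation losses; the real
    value depends on the topology, the load current and rail sag under load.
    """
    v_peak = max(0.0, rail_v - dropout_v)
    return v_peak ** 2 / (2.0 * load_ohm)


if __name__ == "__main__":
    for rail in (40.0, 75.0):
        print(f"+/-{rail:.0f} V into 8 ohm: about {max_sine_power_w(rail, 8.0):.0f} W")
```

Under these assumptions ±40 V allows roughly 86 W into 8 Ω and ±75 V roughly 324 W, so an amp on the lower rails can only appear louder if the other one is clipping or limiting earlier for some other reason.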


There are claims that SYMEF does not clip and that it easily out-paces an amp on ±75 V rails despite being on ±40 V rails. It could be a starting point for designing a non-clipping amplifier.

That's a white elephant! There is no such thing as a non clipping amp.

However there are ways to prevent the clipping from happening.

I think the method used by Carver is a good one, but I'll take a look at what Crown does as well. Maybe Crest also has a good way to do it.

Any amp will clip, that's a given, but we can make sure this doesn't happen, so the amp never gets driven into it (or rarely).

There were also some gain compression/expansion units around at that time attempting to address this problem. At best, listeners commented that the sound had a pumping character and was not very appealing.

I think the limiter should use some averaging and not act too quickly, to avoid that pumping. Of course there is a lag, and some clipping would happen for fairly short periods of time; if the averaging is done right, short transient peaks are not taken into account. They do clip, but only briefly, and those mild clips aren't too destructive. The action taken would cause extra distortion, which can and should prompt some common sense and a lower drive level.
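The averaging idea can be sketched with a moving-window RMS detector: a short transient barely moves a 50 ms average, while sustained overdrive pulls the gain down. The window length, threshold and test signals below are illustrative assumptions, and the detector is a swapped-in textbook technique rather than any specific manufacturer's circuit.

```python
import math
from collections import deque


def rms_window_gains(samples, threshold_rms, window):
    """Per-sample gain from a moving-window RMS detector.

    The detector averages the squared signal over the last `window` samples
    (missing history counts as silence), so a brief transient barely moves
    it while sustained overdrive pulls the gain down to threshold_rms / rms.
    """
    history = deque()
    acc = 0.0
    gains = []
    for x in samples:
        history.append(x * x)
        acc += x * x
        if len(history) > window:
            acc -= history.popleft()
        rms = math.sqrt(max(acc, 0.0) / window)
        gains.append(1.0 if rms <= threshold_rms else threshold_rms / rms)
    return gains


def sine(amplitude, n, fs=48_000, freq=1000.0):
    """Sample n of a sine at the given amplitude."""
    return amplitude * math.sin(2 * math.pi * freq * n / fs)


if __name__ == "__main__":
    fs, window = 48_000, 2_400  # 50 ms averaging window
    # 0.5 s of moderate programme with a single 1 ms burst at four times the level.
    music = [sine(2.0 if 12_000 <= n < 12_048 else 0.5, n) for n in range(fs // 2)]
    loud = [sine(2.0, n) for n in range(fs // 2)]
    threshold = 1.0 / math.sqrt(2.0)  # RMS of a sine that just reaches +/-1.0
    print("burst: min gain", min(rms_window_gains(music, threshold, window)))
    print("sustained: final gain", round(rms_window_gains(loud, threshold, window)[-1], 3))
```

The burst passes with unity gain (and would clip briefly downstream), which is exactly the trade-off described: no pumping on transients, action only on sustained overdrive.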

What really must be prevented is hard overdrive, which causes DC and very high distortion, to prevent speaker damage.

Whoever continues pushing an amp into hard clipping is an idiot anyway, and the distortion caused should be regarded as a result of stupidity.

Occasional and short lived mild clipping is unavoidable when pushing some good amount of power, but as long as it remains limited, that's not so much of a big deal.

Have you measured the voltage on the speakers when listening ?

Unless your speakers are extremely inefficient you will be surprised... 1 W is already pretty loud... do your amps actually clip? Or maybe not?...

What is the problem with clipping, btw? (unless we're talking PA...)

I often do it with the voltage peak hold function of fast voltmeters. I also look at the peak currents. I also look at the voltage peaks at the amp input (= preamp output or D/A converters output). I also look at the content of records using various programs (Audacity, Wavespectra, Dynamic Range Meter and many more). I also look at the voltage gain of the circuits and the volume control positions. Everybody should do the same. The conclusion is that most of the time, power amps of 10 W / 8 Ohm would be sufficient to live happily without clips.
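Converting between power and the voltages a meter shows is simple arithmetic, sketched below under the usual simplifications: a sine-equivalent signal and a purely resistive nominal load (real speakers are reactive, so this is only a guide).

```python
import math


def rms_voltage(power_w, load_ohm):
    """RMS voltage across a resistive load dissipating power_w."""
    return math.sqrt(power_w * load_ohm)


def peak_voltage(power_w, load_ohm):
    """Peak voltage of a sine delivering power_w into a resistive load."""
    return math.sqrt(2.0 * power_w * load_ohm)


def power_from_peak(v_peak, load_ohm):
    """Sine-equivalent power implied by a peak-hold voltmeter reading."""
    return v_peak ** 2 / (2.0 * load_ohm)


if __name__ == "__main__":
    for p in (1.0, 10.0, 100.0):
        print(f"{p:g} W into 8 ohm: {rms_voltage(p, 8):.2f} V rms, {peak_voltage(p, 8):.2f} V peak")
```

So 1 W into 8 Ω is only about 2.83 V rms, and a 10 W / 8 Ω amp must swing about 12.65 V peak; a peak-hold reading well below that means the amp is not clipping.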

I've found a schematic of a Carver amp (pm1400), and I've been examining how it's made and how they handle the clip elimination.

It might turn out fairly simple if it's not done completely as they do it. They include more than one factor in the clipping control circuitry, such as output current sensing, drive level from the amp's input opamp, and there is even a heat sensing influence in it.

The thing is, sensing the output current is making use of the action taken by the V-I limiters, so that obviates using them.

Another thing that I think could be avoided is using the actual drive level from the amp as feedback to decide when to act against clipping. There can only be a lag, and without some serious averaging it can induce that pumping effect in the clipping prevention.

I'm thinking that the limiting could be simply preset to the known level right before the amp clips.

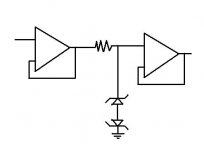

They use a diode bridge in which they feed the drive level from the amp's input opamp, then what comes out from that bridge is used in an opto-coupler that has a photo-resistor in it, and that resistor comes in parallel to a resistor at the input of a buffering opamp.
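A static model of that arrangement helps when choosing the threshold. Everything numeric here is hypothetical, and so is the exact topology: the sketch assumes a series resistor in the signal path, then a shunt resistor to ground at the buffer op-amp input with the LDR in parallel; the LDR is modelled with a simple power law versus LED current, and the LED only conducts once the rectified drive exceeds the combined bridge-diode and LED forward drop. Real opto cells also have attack/release time constants, which this ignores.

```python
def parallel(r1, r2):
    """Two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def led_current_a(drive_peak_v, v_on=1.8, r_led=1_000.0):
    """Current through the opto's LED once the rectified drive exceeds the
    combined bridge-diode and LED forward drop (v_on)."""
    return max(0.0, drive_peak_v - v_on) / r_led


def ldr_ohm(i_led_a, r_dark=10e6, k=5.0, gamma=0.8):
    """Hypothetical LDR law R = k * I**-gamma, capped at the dark resistance."""
    if i_led_a <= 0.0:
        return r_dark
    return min(r_dark, k * i_led_a ** -gamma)


def input_gain(drive_peak_v, r_series=10e3, r_shunt=100e3):
    """Assumed divider in front of the buffer op-amp: r_series in the signal
    path, then r_shunt in parallel with the LDR to ground."""
    shunt = parallel(r_shunt, ldr_ohm(led_current_a(drive_peak_v)))
    return shunt / (r_series + shunt)


if __name__ == "__main__":
    for v in (1.0, 2.0, 2.5, 3.0):
        print(f"drive {v:.1f} V peak -> input gain {input_gain(v):.3f}")
```

Below the LED's turn-on point the divider sits at a fixed, slightly attenuating gain; above it the LDR pulls the shunt down and the gain falls steeply, which is why the threshold setting matters so much.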

Fairly simple and the key would be to be able to set the proper threshold for action and use the input drive level, but not from the downstream amp, to avoid any pumping action.

Some clipping would be permitted, but short lived and mild, which the amp could handle and that would not be significant enough to cause any damage to speakers.

If the threshold is set close to the amp's clipping level and no action is taken below that, then this should be fairly transparent.
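Presetting the threshold amounts to referring the measured clip point back to the input through the amp's gain, minus a small safety margin. A sketch with hypothetical numbers (37 V peak clip point, 26 dB gain, 0.5 dB margin); in practice the clip point should be measured with the real load, since rail sag lowers it.

```python
def input_threshold_v(v_clip_peak, gain_db, margin_db=0.5):
    """Input peak level at which a preset limiter should start acting.

    v_clip_peak: measured output peak at the onset of clipping (real load).
    gain_db:     voltage gain of the power amp.
    margin_db:   how far below the clip point the limiter engages.
    """
    gain = 10.0 ** (gain_db / 20.0)
    return v_clip_peak / gain * 10.0 ** (-margin_db / 20.0)


if __name__ == "__main__":
    # Hypothetical amp: clips at 37 V peak, 26 dB of gain.
    print(f"{input_threshold_v(37.0, 26.0):.3f} V peak at the input")
```

A larger margin makes the limiter act earlier and more audibly; a smaller one lets brief clipping through, which is the same trade-off discussed above.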

A switch can be there to turn off the anti-clipping circuit.

