More importantly, don't use waveforms that by definition can't exist in any recording to prove some point; the point, BTW, is invariably "look at that, doesn't that look unnatural?" It's easy to create a .wav with a plus-to-minus full-scale transition in one sample, but that could never exist in any actual recording without violating Nyquist. In fact, creating a fast acoustic transition is very difficult: even with a high-energy spark discharge there is little energy above 30 kHz.

Even so, pseudo-science might be too strong a word; more like blinding with science.

Yeah, people forget the goal is to reproduce music, not build an arbitrary waveform generator.

This paper seems to dissect it pretty well, only read a few pages in though:

https://www.xivero.com/downloads/MQA-Technical_Analysis-Hypotheses-Paper.pdf

Another comment on things like MQA in general, I have a rule to not bet on anything that depends on Moore's Law stalling. In all likelihood in a couple of years folks will stream and store uncompressed 192/24 files without even thinking about it.
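For scale, the raw data rates involved are simple arithmetic. A rough sketch follows; the function names are made up for illustration, and stereo with no container overhead is an assumption:

```python
def pcm_bitrate_mbps(sample_rate_hz: int, bits: int, channels: int = 2) -> float:
    """Raw (uncompressed) PCM bit rate in Mbit/s; ignores container overhead."""
    return sample_rate_hz * bits * channels / 1e6


def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Storage for one hour of audio at the given rate, in decimal GB."""
    return bitrate_mbps * 3600 / 8 / 1000


for name, rate, bits in [("CD 44.1/16", 44_100, 16), ("192/24", 192_000, 24)]:
    mbps = pcm_bitrate_mbps(rate, bits)
    print(f"{name}: {mbps:.3f} Mbit/s, {gigabytes_per_hour(mbps):.2f} GB/hour")
```

Uncompressed stereo 192/24 comes out at about 9.2 Mbit/s, or a little over 4 GB per hour, before any lossless packing.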

Years ago we invested in a startup that had an algorithm to make 640 X 480 VGA look better with pixel interpolation. I just shook my head, VP's throwing a few million around while Europe and Asia were spending billions trying to make high res flat panels work. Of course it folded before it even got going.

It would be very easy to add a unique id watermark to the payload data, allowing sharing to be detected. Not something I particularly object to.

Not sure how defeatable it is but, essentially, they have enough room in the noise shaping file for a lock on the higher resolution substream. So not a total block, but compromising the quality pretty heavily.

Yeah, the cost of CDN keeps going down, but if Tidal can save 50% of the CDN cost, it is a big benefit for everyone except Akamai. 😀

It would be very easy to add a unique id watermark to the payload data, allowing sharing to be detected. Not something I particularly object to

Neither do I. My objections lie in the unnecessarily closed ecosystem that provides an inferior end product to already available open source formats (and that's straight from a signal/systems perspective, whether audible or not).

Data storage/bandwidth perspective or not, I've heard zero commentary to suggest that 20/96 as an output stream doesn't cover the entire DR and the widest-bandwidth pickups of any recording device. And that's being generous (consider the thermal noise floor of the microphone/pickup after mixing, never mind the background noise of the playback room, nor does anyone ever claim music energy north of 30 kHz). Store a 20/96 file as FLAC/ALAC, zero-pad to 24 bits as a baked-in volume control in the DAC, and one is off to the races in terms of both reasonably covering the bandwidth/DR and reasonably minimizing file size.
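To put numbers on the 20/96 argument, here is a small sketch using the textbook ideal-quantizer figure (about 6.02 dB per bit plus 1.76 dB for a full-scale sine). The helper names are hypothetical, and reading the zero padding as "four spare LSBs for digital attenuation" is my interpretation of the post, not something it spells out:

```python
import math


def ideal_dynamic_range_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit quantizer for a full-scale sine wave."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


def zero_pad_20_to_24(sample_20bit: int) -> int:
    """Put a signed 20-bit sample in the top of a 24-bit word (4 zero LSBs)."""
    return sample_20bit << 4


print(f"20-bit dynamic range: {ideal_dynamic_range_db(20):.1f} dB")
print(f"96 kHz sampling covers audio up to {96_000 / 2 / 1000:.0f} kHz")

# Assumption: the DAC attenuates digitally. With 4 spare LSBs, up to
# 4 bits (about 24 dB) of attenuation loses none of the original 20 bits.
sample = -123_456
padded = zero_pad_20_to_24(sample)
assert (padded >> 4) == sample
```

That is roughly 122 dB of theoretical range and a 48 kHz Nyquist limit, comfortably past the 30 kHz figure mentioned above.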

true about the need for data reduced formats - it won't be a problem soon enough. it just means folks have to monetise stuff FAST before their tech becomes obsolete..

with regard to MQA though, the main claim I took from the Sound on Sound magazine report was about time-domain reproduction improvement. It's a music production /studio magazine so not focused on the end user/consumer though..

Not relevant at all I'm afraid, unless I misunderstand. This sounds basically like another implementation of undersampling.

http://www.ti.com/lit/an/slaa594a/slaa594a.pdf

It looks to be touching on mathematical developments and theory that are much newer than Nyquist (although they have origins in the 1930s too) and were used to develop other sampling theorems, which allow bandwidth-limited signals to be sampled with greater resolution without increasing the data rate as Nyquist would.. practical applications in such things as radar and medical imaging are already well developed and working.

it may not be relevant to MQA but appeared to be in the ballpark of relevance..

i'm looking for things related but without going to anything directly MQA related. it's more interesting that way 😀

You need to be able to walk before you can run.

Undersampling doesn't violate Nyquist/Shannon, anyhow, it just works with the constraints. There's no "post-Nyquist" -- that'd be like saying "post-gravity" or something silly like that.
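A quick sketch of what "works with the constraints" means in practice. This is the standard bandpass-sampling picture, not code from the TI note; the 65 MHz tone, 20 MSPS rate, and function names are made-up illustration values:

```python
import math


def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency in 0..fs/2 where a tone at f_hz lands after sampling at fs_hz."""
    r = f_hz % fs_hz
    return min(r, fs_hz - r)


def fits_one_nyquist_zone(f_low_hz: float, f_high_hz: float, fs_hz: float) -> bool:
    """True if the band lies inside a single fs/2-wide zone (no self-overlap)."""
    half = fs_hz / 2
    return math.floor(f_low_hz / half) == math.ceil(f_high_hz / half) - 1


fs = 20e6      # assumed sample rate
tone = 65e6    # assumed input tone, far above fs/2
alias = alias_frequency(tone, fs)

# The samples of the 65 MHz tone are identical to those of its alias.
samples_tone = [math.cos(2 * math.pi * tone * n / fs) for n in range(32)]
samples_alias = [math.cos(2 * math.pi * alias * n / fs) for n in range(32)]
worst = max(abs(a - b) for a, b in zip(samples_tone, samples_alias))

print(f"alias at {alias / 1e6:.1f} MHz, worst sample difference {worst:.2e}")
print(fits_one_nyquist_zone(62e6, 68e6, fs))  # band narrower than fs/2, one zone
print(fits_one_nyquist_zone(58e6, 62e6, fs))  # straddles a zone boundary
```

The signal's bandwidth still has to be under fs/2 and sit inside one zone; the sampled data cannot tell the 65 MHz tone from a 5 MHz one, so nothing beyond Nyquist/Shannon is being recovered.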

The whole thing about "fixing" Gibbs effect (or pre-ringing of an impulse response) is IMO rather ridiculous. Its goal is to disguise how a geometric sound signal looks, to make it seem more like it might appear -- to EYES -- if it weren't being bandlimited! Not by fixing the bandlimiting (if that were judged to be needed) but by messing the signal up so it would better fool eyes looking at an oscilloscope plot, and not in ways that relate to how hearing works. There isn't a built-in oscilloscope in our brains or ears, and without looking at bandlimited square waves or impulse responses on an oscilloscope or the like, there's nothing that would make an MQA "fixed" impulse response seem more like a non-bandlimited impulse. Hearing works with a set of bandpass filters to sense time domain envelopes over individual overlapping bands (not by somehow graphing time domain full-band instantaneous pressure).

I've spent some years working up software to utilize better digital hardware for use in technical measurements, and spent a fair amount of time getting people to stop using misguided DAC designs that resulted in outright wrong measurements. Specifically, the ones that "fixed" impulse or square wave time domain response by corrupting the phase and/or amplitude response. Wadia, I'm looking at you.

The whole "fix the impulse wiggles" seems to be trying to fix eyeball perceived characteristics by screwing up phase response or band rolloffs. And for something that is being reproduced not for eyes but for ears. You don't improve a camera lens by encasing it in semitransparent rubber so that it sounds better when you drop it on a concrete floor!

The whole thing about "fixing" Gibbs effect (or pre-ringing of an impulse response) is IMO rather ridiculous.

Archimago's Musings: MUSINGS: Digital Interpolation Filters and Ringing (plus other Nyquist discussions and "proof" of High-Resolution Audio audibility)

Undersampling doesn't violate Nyquist/Shannon, anyhow, it just works with the constraints. There's no "post-Nyquist" -- that'd be like saying "post-gravity" or something silly like that.

True, but down sampling from 48 to 44.1 or the like is bad unless you think interpolation is a positive attribute.

I don't think you read any of the related posts / links? It has nothing to do with audio.

More on Gibbs effect

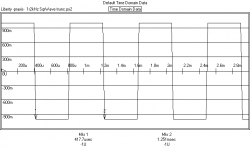

These were made from some files for an article I was going to write for Linear Audio some years back.

Some have argued "Wait a minute, I can draw square waves or impulses without pre-ring or Gibbs effect, why can't a correct DAC produce them?". You can create perfect files like that, making sure each transition is on a sample point, and if you plot the samples and connect the dots, you do get a waveform that doesn't show the Gibbs effect. But that's just because the Gibbs phenomenon is happening between the actually sampled points. For instance, here's a 1.2kHz square wave, sampled at 48ksps, created by editing points, nice and square:

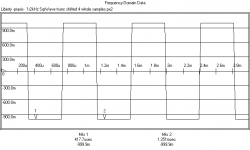

And if we shift it in time equivalent to any number of whole samples, it stays looking pretty:

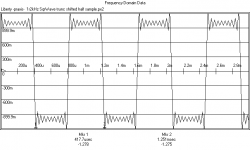

But if we shift by anything other than an integer number of samples, then some of the points start falling where the Gibbs effect shows. Worst is an odd number of half samples, like this:

The Gibbs effect is there, but you have to keep in mind that time domain sampled data exists at instants of time, and graphs connect the points only for convenience of the viewer -- straight lines between the points aren't really in the actual data. When a filter fills/smooths in time between the points (like in a real world DAC), it doesn't get any chance to hop over the Gibbs ripples, so they appear. Or if you upsample to higher sample rates, the "hidden" ripples will appear, too.
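The "hidden ripples" point is easy to check numerically. Below is a minimal sketch, assuming one period of the 1.2kHz/48ksps square wave (40 samples) and ideal periodic band-limited interpolation done by evaluating the DFT between the sample instants. It is plain-Python and slow by design, and is not the code used for the plots:

```python
import cmath
import math


def dft(x):
    """Direct O(n^2) discrete Fourier transform of a short sequence."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n))
            for k in range(n)]


def upsample_periodic(x, factor):
    """Band-limited interpolation of one period (even length) on a finer grid."""
    n = len(x)
    spectrum = dft(x)
    half = n // 2
    out = []
    for i in range(n * factor):
        t = i / factor  # time in units of the original sample period
        acc = 0j
        for k in range(-half + 1, half):  # every bin except Nyquist
            acc += spectrum[k % n] * cmath.exp(2j * cmath.pi * k * t / n)
        acc += spectrum[half] * math.cos(math.pi * t)  # Nyquist bin as a cosine
        out.append(acc.real / n)
    return out


# One period of a 1.2 kHz square wave at 48 ksps: 20 samples high, 20 low.
square = [1.0] * 20 + [-1.0] * 20
up = upsample_periodic(square, 4)

print(max(abs(v) for v in square))  # the stored samples never exceed full scale
print(up[2])                        # half a sample past sample 0: roughly 1.27
```

The original sample values come back untouched (every fourth output point), while the points in between overshoot full scale by more than 25%.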

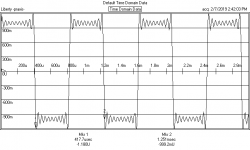

Here's the square wave, without shift, but sampled at 100ksps:

(If you're wondering, these pics were generated by FFTing the data from the first plot into the frequency domain, shifting in time there, then IFFTing back to time domain).
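For anyone who wants to try the FFT, shift, IFFT recipe, here is a minimal sketch of it on one 40-sample period of the square wave. This is a reconstruction of the described method with a slow plain-Python DFT and hypothetical function names, not the original script:

```python
import cmath
import math


def dft(x):
    """Direct O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(spectrum):
    """Direct O(n^2) inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * m / n)
                for k in range(n)) / n for m in range(n)]


def shift_periodic(x, delay):
    """Delay one period of a signal by `delay` samples (may be fractional)."""
    n = len(x)
    shifted = []
    for k, value in enumerate(dft(x)):
        if 2 * k == n:
            # Nyquist bin: keep it real so the output stays real
            value *= math.cos(math.pi * delay)
        else:
            signed_k = k if k < n / 2 else k - n  # negative frequencies
            value *= cmath.exp(-2j * cmath.pi * signed_k * delay / n)
        shifted.append(value)
    return [v.real for v in idft(shifted)]


square = [1.0] * 20 + [-1.0] * 20       # 1.2 kHz at 48 ksps, one period
whole = shift_periodic(square, 3)        # integer shift: still looks square
half = shift_periodic(square, 0.5)       # half-sample shift: ripples land on samples

print(max(abs(v) for v in whole))
print(max(abs(v) for v in half))
```

An integer delay just rotates the same plus and minus one values, while the half-sample delay puts samples right on the overshoot, peaking at roughly 1.27 of full scale.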

True, but down sampling from 48 to 44.1 or the like is bad unless you think interpolation is a positive attribute.

That's downsampling (i.e. interpolation) vs undersampling. And to be entirely clear, I'm not suggesting anything of the sort, just that canonically you cannot "beat" Shannon/Nyquist, and all these techniques are used within the context of the mathematics described by these two (and others!)
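To make the downsampling side of that distinction concrete, here is a tiny sketch of why 48 to 44.1 needs interpolation at all. The function names are invented for illustration, and it only counts sample instants rather than doing any filtering:

```python
from fractions import Fraction


def resample_ratio(fs_in: int, fs_out: int):
    """Smallest integers (up, down) with fs_out = fs_in * up / down."""
    ratio = Fraction(fs_out, fs_in)
    return ratio.numerator, ratio.denominator


def outputs_on_input_instants(fs_in: int, fs_out: int, n_outputs: int):
    """Indices of output samples that coincide exactly with an input sample."""
    step = Fraction(fs_in, fs_out)  # input samples advanced per output sample
    return [m for m in range(n_outputs) if (m * step).denominator == 1]


print(resample_ratio(48_000, 44_100))
print(outputs_on_input_instants(48_000, 44_100, 300))
```

The ratio reduces to 147/160, so only one output sample in 147 lands on an original sample instant; every other one has to be computed between the input points, which is ordinary band-limited interpolation and nothing to do with undersampling.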

Chris posted a TI article on undersampling that goes very nicely through the basics, which may help some.

Undersampling doesn't violate Nyquist/Shannon, anyhow, it just works with the constraints. There's no "post-Nyquist" -- that'd be like saying "post-gravity" or something silly like that.

Or post-Newtonian mechanics? Is that another one? Anyway, sampling theorem is about mathematical technique and not specifically about the physical world. I think one would be wrong to conflate the two.

You need to be able to walk before you can run.

Finding examples of practical application or confirmation of the validity of the techniques used in MQA doesn't even need full understanding - it's a research exercise rather than one of depth of knowledge of the subject.

I baulk at the idea of forum users dismissing scientific / engineering / mathematical work on the basis that it's not what *they* know.. If these kinds of forums were around in the days of Niels Bohr or such, people would say that their theories were pseudo-science because of Newtonian mechanics.. haha

That's not to say the claims in MQA are necessarily true, but at least have some interest to find out..

Seems we might have moved on from the claim of "pseudo-science" to "blinding by science". That sounds more measured at least.

I am sorry, there is no point in continuing this discussion. It’s already been “found out”. I really cannot help the fact that your understanding is not even enough to see that. Your research exercise is pointless because you don’t understand what you’re reading. You’ve already picked one paper that has zero relevance to the topic of discussion.

There have been several good posts and at least 3 different links / sources explaining why just in the last few pages.
