But this one sounds (again) as if Nyquist does not apply to short events.

How can any sideband creep outside the Nyquist criterion while a brick wall filter is there to prevent this from happening?

As so often, I've expressed myself poorly, by leaving assumptions unwritten. Examples are (still!) being proposed of signals with nominal frequency components below the Nyquist limit, and intended to show failures in the ability to accurately sample and reproduce *any* legal sample. These proposed examples violate Nyquist in a subtle way that would not pass an actual brickwall LPF, but can be easily generated in software.

Much thanks, as always,

Chris

Someone else stated that all that was needed to reconstruct any sine being sampled at just over 2x was three samples.

So my question was, can this be shown using redbook.

The polar projection of this problem is three points and a unique circle, so we know the answer from high school geometry. When the points start to coincide, leaving effectively only two, the uncertainty due to quantization of the amplitude or time tends to blow up to infinity. At times I feel this is like saying the log function is flawed because the log of 0 is undefined.
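The three-point argument above can be sketched in a few lines. This is only an illustration, assuming a pure, stationary sine below fs/2 and three consecutive samples; the function names `fit_sine_from_three` and `quantize16`, the 0.8 amplitude, the 0.3 rad phase and the 32767 full-scale value are all made up for the example. It relies on the identity x0 + x2 = 2·cos(w)·x1, which holds for any sinusoid.

```python
import math

def fit_sine_from_three(x0, x1, x2, fs):
    """Fit A*sin(w*n + phase) to three consecutive samples of a pure sine.

    Returns (frequency_hz, amplitude, phase_at_middle_sample).
    """
    if x1 == 0.0:
        raise ValueError("middle sample is zero: frequency cannot be determined")
    # For any sinusoid, x0 + x2 = 2*cos(w)*x1.
    c = (x0 + x2) / (2.0 * x1)
    if not -1.0 < c < 1.0:
        raise ValueError("samples are not consistent with a sine below fs/2")
    w = math.acos(c)                               # radians per sample, 0 < w < pi
    in_phase = x1                                  # A*sin(theta)
    quadrature = (x2 - x0) / (2.0 * math.sin(w))   # A*cos(theta)
    amplitude = math.hypot(in_phase, quadrature)
    theta = math.atan2(in_phase, quadrature)
    return w * fs / (2.0 * math.pi), amplitude, theta

def quantize16(x):
    """Round a value in [-1, 1] to a 16-bit grid (assumed full scale of 32767)."""
    return round(x * 32767) / 32767

fs = 44100.0
w_true = 2.0 * math.pi * 10000.0 / fs
exact = [0.8 * math.sin(w_true * n + 0.3) for n in range(3)]

f_exact, a_exact, _ = fit_sine_from_three(*exact, fs)
f_quant, a_quant, _ = fit_sine_from_three(*[quantize16(v) for v in exact], fs)
print("unquantized:", f_exact, a_exact)
print("16-bit     :", f_quant, a_quant)
```

The division by `x1` and by `sin(w)` is where the "blow up" shows: as the middle sample approaches zero, or the frequency approaches fs/2, a small quantization error in the samples turns into a large error in the fit.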

Another point: this "transient" stuff should stop. An ideal single-sample impulse has a well-defined spectrum, and band-limiting it gives a sinc function. There is no requirement for a "repetitive" signal in the sampling theorem; that is nonsense. If the impulse is at an exact sample time, Nyquist is not violated. Try it yourself: the ideal sinc reconstruction in this case is zero at every other sample instant.
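For anyone who wants to try it, here is a minimal sketch of that check. It assumes textbook Whittaker-Shannon (sinc) interpolation over a short 17-sample block with the impulse on sample 8; the names `sinc` and `reconstruct` are just for this example, and a real DAC filter is of course finite and only approximates this.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi*x)/(pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples, t):
    """Ideal sinc interpolation at time t, measured in sample periods."""
    return sum(s * sinc(t - n) for n, s in enumerate(samples))

# A single-sample impulse sitting exactly on sample 8.
impulse = [0.0] * 8 + [1.0] + [0.0] * 8

# Evaluate the reconstruction on the sample grid and halfway between two samples.
on_grid = [reconstruct(impulse, float(n)) for n in range(len(impulse))]
between = reconstruct(impulse, 8.5)

print(on_grid)
print(between)
```

On the grid the reconstruction is 1 at the impulse and zero (to rounding error) everywhere else. The sinc "ringing" only exists between sample instants, where the value halfway to the next sample is sinc(0.5) = 2/pi.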

When you send 16-bit data to an FFT you don't truncate the answer before you use it; you use all the data. That is, you can delay the impulse by an arbitrarily small amount by rotating the phase of the FFT and doing an inverse FFT. The time information is contained in both the amplitude and the sample rate.
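A rough sketch of the phase-rotation delay, under stated assumptions: the block is treated as periodic (so the delay is circular), the test signal is a sine with exactly 5 cycles in 64 samples, and a naive O(N²) DFT is written out so the example needs only the standard library. In practice a library FFT would be used. The names `dft`, `idft` and `fractional_delay` are invented for the illustration.

```python
import cmath
import math

def dft(x):
    """Naive discrete Fourier transform (fine for short blocks)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def fractional_delay(x, delay):
    """Circularly delay a real block by `delay` samples (may be fractional)."""
    n = len(x)
    X = dft(x)
    Y = []
    for k in range(n):
        if n % 2 == 0 and k == n // 2:
            # Nyquist bin: keep it real so the output stays real.
            Y.append(X[k] * math.cos(math.pi * delay))
        else:
            signed_k = k if k <= n // 2 else k - n   # signed frequency index
            Y.append(X[k] * cmath.exp(-2j * math.pi * signed_k * delay / n))
    return [v.real for v in idft(Y)]

fs = 44100.0
delay_samples = 1e-6 * fs          # 1 microsecond expressed in samples (0.0441)
n = 64
x = [math.sin(2.0 * math.pi * 5 * t / n) for t in range(n)]

y = fractional_delay(x, delay_samples)
expected = [math.sin(2.0 * math.pi * 5 * (t - delay_samples) / n) for t in range(n)]
max_err = max(abs(a - b) for a, b in zip(y, expected))
print(max_err)
```

The delay here is a small fraction of one sample period, and the shifted block matches the analytically delayed sine to within floating-point rounding. Requantizing the result back to 16 bits would add the usual quantization noise on top.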

The question is: should I waste my time doing some mathematical simulations just to have them ignored as usual?

That was exactly what I was showing. A three point sine fit did not fit the overall concern of redbook accuracy.

I am in no position to comment on markw4's assertion that 3 samples is sufficient to recreate, or uniquely characterize, a sine wave, though I expect that once again context is everything. So let's do what you proposed, and take exactly 3 samples of a 10kHz sine, using Fs=44.1kHz, triggered at random intervals. Now repeat but use Fs=88.2kHz and take 6 samples per event. What, if anything, has the increased sample frequency bought you?

Now play back all those samples, and let us know how they sound. 🙂

I was (sort of) joking. As I said on another thread, I can't afford the airfare to go to Mark's, so what's the best we can do on the forum when someone says they can hear a difference?

That still won't do. Why? See below.

When we get this blanket statement of only needing three samples, do you mean "three samples" or "three samples per period for n>1000 periods"?

That's not the question.

The question is, can you get accuracy using a 3 point window with an FFT?

And before you answer, yes I know you can't even start the math with only 3 points.

The closest I could think of would be a 3 point running algorithm, and I could not even think of how to do that, nor what kind of garbage would come out of it.

As I said ad nauseam, there is a tradeoff between window width and accuracy when it comes to signal processing. Why use 64 samples when 512 is just as easy, or 1024, or whatever 2^n number you choose?

With redbook, what is the equivalent window? And does it support ITD?

I have been hit with an awful lot of trash talk, but nobody can properly support a good answer. So many squirrels outside the window, my goodness.

jn

3 samples was not markw4's initial premise; it was a statement by hostile forces intended to obfuscate.

I can see using 4, 8, 16 samples to calculate a best fit reconstruction of the analog signal properly sampled even at 44.1k. The question in essence is, does that level of DSP eliminate the possibility of ITD error (or the filter rolloff Lavry seemed to show) that might be inherent in the reconstruction via NRZ at 44.1k with brickwall?

Or is it easier to quadruple the sampling rate and be done?

jn

I believe the culprit intended to say 3 samples only, for an infinitely long sine.

jn

Again, redbook 20kHz. And do not forget that a sudden turn-on or turn-off has an infinite spectrum, so it cannot be displayed as you would want. This is true for any bandwidth-limited system, even an analog one; it depends only on how deeply you are nit-picking.

That's just a repeat of what you already posted.

Rather than another pic of the same, why not answer my questions?

Here, so you don't have to click back one page...

Is it NRZ that was filtered through a brickwall, or is there some math going on prior to the brick wall?

Also, is it consistent with full scale? IOW, if swept from 5k to 20k, does the peak remain the same?

It appears to be a simulation, is that correct?

Lavry showed that a 17kHz signal was dropped 50% with respect to the analog, so is this dropping even more?

Did Lavry err in his calculation or setup? Or was the NRZ above band energy filtered out the reason for the 50% drop in level?

jn

I don't understand what you want. I can take a 3 min rip from a Redbook CD and shift the entire left channel 1us (or 250ns) using FFT techniques. The ITD resolution and reconstructing a sine from three samples don't seem to be the same problem to me.

Yeah it seems like increased sample rate is being used here as a band-aid to fix a problem that has not been demonstrated to exist. Increasing sample rate may well be "simpler" than some other thing, but I still haven't seen any evidence of ITD corruption at redbook sample rates.

Looking at the cymbal waveform and spectra information here:

Cymbal Reviews with Spectral Analysis | Drummerworld Forum

We have some of the cymbal sounds mostly just lasting for a fraction of a second, some about 0.1 to 0.2 secs before decaying by 20 dB, so we have up to 4000 cycles only. That puts some numbers to what I think is JN's concern.
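As a quick check of those numbers: the 4000-cycle figure matches a 0.2 s burst if the component of interest is taken to be at 20 kHz. That frequency is an assumption here, as are the helper names `cycles_in_burst` and `samples_in_burst`.

```python
def cycles_in_burst(freq_hz, duration_s):
    """Number of cycles of a tone at freq_hz within duration_s seconds."""
    return freq_hz * duration_s

def samples_in_burst(fs_hz, duration_s):
    """Number of samples taken at rate fs_hz within duration_s seconds."""
    return fs_hz * duration_s

# Assumed: a 20 kHz component lasting 0.1 s to 0.2 s, sampled at the redbook rate.
for duration in (0.1, 0.2):
    print(duration,
          cycles_in_burst(20_000, duration),
          samples_in_burst(44_100, duration))
```

So even the shortest burst in that range spans a couple of thousand cycles and several thousand redbook samples, which is a very long way from a three-sample event.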

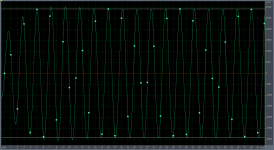

Also, if you scan along PMA's 20kHz graph, a few of the cycles only have 2 points and not three. But at my age that is a moot point, not going to hear those any more. Mind you I was in my 30's when I heard my last bat, so the ears survived those rock concerts quite well.

No, I mean in hardware. Can you just double the clock, tweak a few other things, and have it?

I have a Benchmark ADC and DAC.

Benchmark DAC-3 upsamples all PCM to 211kHz. For DSD it only supports DSD64 over DoP. No way to go to a higher sample rate with that hardware.

Don't know anything about their particular ADC circuitry.

For context, at the time Lavry published that paper he was promoting his NOS ladder DAC, which was limited to 48kHz. It was a heroic effort, with a half-hour warmup and a self-calibrating process. However, he seems to have abandoned that approach in more recent products.

Analog brick wall filters like the one Lavry would need are really difficult to make. Sony had a hybrid circuit for the filter, and I suspect it was laser trimmed to hold the necessary tolerances.

The conversation has been quite blurry, with different, barely related issues being argued at cross purposes. It seems pretty easy to get emotions warmed up unnecessarily when it's difficult to discern what the premises of the arguments are and what supports them.

As to redbook as adequate for ITD accuracy with actual music, you assume it is adequate based on what, distortion?

Most everybody who read my post understood perfectly, I guess. And also understood the context in which it was placed. Perhaps you might reread because "..., distortion?" means that you did not get the point.

The "ear codes for zero crossings" however, may not be accurate. Over the years the researchers have been changing the model of our ear response. I've seen zero crossing, rectification, SAW, envelope modulation, all manner of models.

Jn, amazing. I recommend Pickles. All this stuff has been known in great detail for decades, found out by measuring the response to stimuli directly in the auditory nerve. Below a certain frequency, spike discharges in each tonotopically organized nerve fibre are locked to one phase of the stimulating waveform.

Poor cats. And Jn, I think your often condescending tone is completely out of kilter with this failure to take an objective view and try to learn.
