Last night I downloaded a declipping program to see how it works. Not bad: after declipping Pink's Funhouse CD, it sounded like some of my early-'90s CDs. Luckily, 90% of my CDs are from the 1986-92 period. Returning to Pink's CD: no dynamics, everything is blasted at the same level and compressed. After declipping, the sound isn't harsh anymore. I don't know if it's my ears, but the original sounds almost distorted. I also tried Dave Matthews' Before These Crowded Streets, a very well recorded CD (at least I thought it was), but it has the same loudness issues. After declipping, everything sounded more natural, less distorted if I may say. CDs could sound much better if they weren't made for crappy stereos and if producers didn't overload the volume and treble levels.

Which software are you using?

At least it's on topic. 😎

Here we go again.

Originally posted by tnargs:

It's *commercial interest*.

Grain of salt the size of a house. Make that a block of flats: you have to question whether the 'listening' ever actually occurred.

BTW the reasons why LP might be preferred by some listeners to CD are well (over)documented; most of them nonsense, some real.

Biggest real reason is personal bias during uncontrolled tests, and the extreme difficulty of conducting controlled preference tests when it is dead easy to identify an LP with its high noise, distortion and fault (scratches etc.) rate.

SeeDeClip Duo Pro does a fine job with CD tracks like "Diana Krall-The Look of Love" too.

A friend thought the DeClipped CD version sounded better than the 24/96 from HDTracks. It will also work with 24/96 .wav files of 24/96 .flac origin but, surprisingly, not with 24/96 .wav files extracted from DVD-A.

SandyK

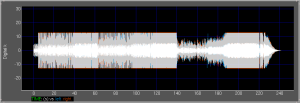

On the topic of declipping... I looked at the cutestudio site and this image...

...reminded me of how surprised I was when I examined in detail a similar-looking waveform that I assumed was clipped to blazes. The more I spread out the timeline, the less and less clipping (consecutive samples at max digital value) I saw, until, looking at individual sample values, there was almost none for the whole song. I think I found one occurrence of four consecutive samples at max value.

I learned to look at a waveform in detail before assuming it is clipped.

I think a waveform such as this one, even if it turns out not to be clipped, is probably exhibiting low dynamic range and/or compression.
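The sample-level check described above can be sketched in a few lines. This is only a minimal illustration: the function name `clipped_runs`, the 16-bit full-scale value of 32767 and the minimum run length of four samples are assumptions chosen for the example, not settings taken from any declipping tool.

```python
def clipped_runs(samples, full_scale=32767, min_run=4):
    """Return (start_index, length) for each run of at least min_run
    consecutive samples sitting on the positive or negative rail.

    Assumption: a sample counts as "on the rail" when its absolute
    value reaches full_scale (16-bit audio by default).
    """
    runs = []
    run_start, run_rail = None, 0
    for i, s in enumerate(samples):
        rail = 1 if s >= full_scale else -1 if s <= -full_scale else 0
        if rail != run_rail:
            # The previous run (if any) ends here; keep it if long enough.
            if run_rail != 0 and i - run_start >= min_run:
                runs.append((run_start, i - run_start))
            run_start, run_rail = i, rail
    # Close a run that extends to the end of the data.
    if run_rail != 0 and len(samples) - run_start >= min_run:
        runs.append((run_start, len(samples) - run_start))
    return runs


demo = [0, 12000, 32767, 32767, 32767, 32767, 32767, 9000, -32768, -32768, 100]
print(clipped_runs(demo))  # -> [(2, 5)]
```

A waveform that looks like a solid block when zoomed out can still return an empty list here, which is the point made above: look at the samples before calling it clipped.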

I like to say "grain silo of salt." 🙂

It's *commercial interest*.

Grain of salt the size of a house. Make that a block of flats: you have to question whether the 'listening' ever actually occurred.

I've looked at many CDs, and there are many that are compressed and limited as you describe, but there ARE quite a few that DO show clipping: at least four and maybe as many as 20 sample points sitting on a rail (or maybe 25 out of 35 consecutive samples at a rail), and this happens every beat, or at least every kick drum or snare drum hit. Yes, it's done intentionally; it adds distortion and "excitement" to the sound, but of course after a while it adds ear fatigue as well.

On the topic of declipping... I looked at the cutestudio site and this image...

...reminded me of how surprised I was when I examined in detail a similar-looking waveform that I assumed was clipped to blazes. The more I spread out the timeline, the less and less clipping (consecutive samples at max digital value) I saw, until, looking at individual sample values, there was almost none for the whole song. I think I found one occurrence of four consecutive samples at max value.

I learned to look at a waveform in detail before assuming it is clipped.

I think a waveform such as this one, even if it turns out not to be clipped, is probably exhibiting low dynamic range and/or compression.

But compressors and levelers have always been used to some extent (except of course for acoustic recordings and the earliest electric recordings). Record the first couple of Beatles LPs onto your computer and look at them: the overall waveform of each song looks pretty much like a rectangular blob of constant-volume sound. This DID make it so everything could be heard on a cheap record player.

I think by the mid '60's sound engineers started letting more dynamics come through on pop records. Perhaps the availability and increasing popularity of better "hi-fi" home stereos helped. I notice Cat Stevens LP's from the first half of the '70's have really good dynamics and the music feels as "real" as any pop CD I've heard.

A friend thought the DeClipped CD version sounded better than the 24/96 from HDTracks.

I've seen too many from them that were badly clipped, and some that were 44.1K upsampled to "Hi-Res." No thanks.

I think a waveform such as this one, even if it turns out not to be clipped, is probably exhibiting low dynamic range and/or compression.

Indeed. A totally squashed recording need not be clipped.
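One rough way to put a number on "squashed but not clipped" is the crest factor (peak-to-RMS ratio). The sketch below is only an illustration: the `tanh` curve stands in for an aggressive limiter and is an assumption of this example, not a model of any particular plug-in.

```python
import math


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB; lower values mean a more squashed signal."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(peak / rms)


# Ten cycles of a plain sine wave, 100 samples per cycle.
sine = [math.sin(2 * math.pi * n / 100) for n in range(1000)]

# Hypothetical soft limiter: a tanh curve rounds the peaks off smoothly,
# so the wave gets louder on average without flat runs at full scale.
squashed = [math.tanh(4 * s) / math.tanh(4) for s in sine]

print(round(crest_factor_db(sine), 2), round(crest_factor_db(squashed), 2))
```

Both signals peak at the same level, yet the squashed one has a clearly lower crest factor, with no runs of samples parked on the rail.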

In the early, digital-audio-workstation-driven years of the loudness race, so-called shredding was used: the deliberate clipping or hard-limiting of the signal. No need to tell you what the result was.

But shredding requires no tools, so the plug-in makers quickly started to develop and sell a new breed of very aggressive compressors and limiters that have a similar effect on loudness as shredding, but without actually slicing off the wave's tops. I presume their underlying mechanism is compression with its parameters auto-adapted on a cycle-by-cycle basis.

You can do things with CD waveforms that you can't with Vinyl.

Sadly this is not entirely true. You can always prepare a shredded digital master and then cut it, carefully, to LP. There is always a high-pass filter somewhere in the chain, taking off the flat tops. The LP will sound as bad as the compressed CD, but its waveform will not look as 'blocky' as the CD's, due to that filter's action.
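The effect of a high-pass filter on flat tops can be sketched numerically. This is a toy illustration under stated assumptions: a single one-pole high-pass with a corner of roughly 35 Hz stands in for everything in a real cutting and playback chain, and the helper names are made up for the example.

```python
import math


def hard_clip(x, limit):
    """Slice off anything beyond +/- limit."""
    return max(-limit, min(limit, x))


def one_pole_highpass(samples, alpha=0.995):
    """Simple RC-style high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1]).
    At 44.1 kHz, alpha = 0.995 gives a corner of roughly 35 Hz (assumed)."""
    out, prev_x, prev_y = [], 0.0, 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def longest_flat_run(samples, tol=1e-9):
    """Length of the longest run of consecutive, practically equal samples."""
    best = run = 1
    for a, b in zip(samples, samples[1:]):
        run = run + 1 if abs(a - b) <= tol else 1
        best = max(best, run)
    return best


fs, f0 = 44100, 100.0
sine = [2.0 * math.sin(2 * math.pi * f0 * n / fs) for n in range(fs // 10)]
clipped = [hard_clip(x, 1.0) for x in sine]   # "shredded" master
filtered = one_pole_highpass(clipped)         # after the high-pass

print(longest_flat_run(clipped), longest_flat_run(filtered))
```

The clipped signal shows flat runs well over a hundred samples long, while the filtered version has tilted tops and no flat runs to speak of, even though the clipping distortion is still baked in.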

That all said, because of some other ideal conditions imposed by Nyquist, the higher the sampling rate in the real world, the better it is. I am not going to go into it more than saying that Nyquist requires an infinite signal and foreknowledge of the whole signal, neither of which is realistic in real life.

First, it wasn't Harry, but rather Claude who formulated all of this in a concise and complete way, and then proved it once and for all. The Nyquist guy really didn't have that much to do with it.

Second, there is absolutely no requirement of "infinite signal and preknowledge of the whole signal", apart from the simple notion that a practical signal cannot be concurrently limited in time and limited in bandwidth.

But this is not a problem at all. Assuming a signal that is truly limited in time (let's say one beat on a drum, or the whole Ring Cycle), mathematics tells us that it can't be fully band-limited, i.e. it will have some non-zero components above Fs/2. But these non-zero components can be at an arbitrarily low level. That's engineering. Would -140 dB satisfy you?

After all the ear contains a similar band-limiting mechanism. This doesn't keep us from hearing what we hear, and occasionally enjoying it, right?

I am always amused by claims, even today, that there is something very major and fundamental wrong with sampling. If this were so, then surely it would be trivially easy to demonstrate these huge errors?

I've seen too many from them that were badly clipped, and some that were 44.1K upsampled to "Hi-Res." No thanks.

Michael

My understanding is that HDTracks was not aware of the upsampling and, when notified, removed those tracks from sale.

There is a thread in Computer Audiophile where they have been examining many recent releases from HD Tracks and others, using an Audio editing program, and posting the screenshots of the Spectrum Analysis.

Alex

Second, there is absolutely no requirement of "infinite signal and preknowledge of the whole signal", apart from the simple notion that a practical signal cannot be concurrently limited in time and limited in bandwidth.

It is in the math; you need to take a bit of a deeper look.

The mechanism of the ear, or of any other natural filter, is not similar to what sampling theory requires: natural filters don't react to input values from the future.

After all the ear contains a similar band-limiting mechanism. This doesn't keep us from hearing what we hear, and occasionally enjoying it, right?

I am always amused by claims, even today, that there is something very major and fundamental wrong with sampling. If this were so, then surely it would be trivially easy to demonstrate these huge errors?

I didn't say they are huge, but they are there, and people who are more intimately familiar with what is going on know about this.

The further the sampling frequency is above what is strictly required, the better the original is preserved and reproduced.

The proof offered by Nyquist uses SINC functions, not uniformly sized square waves, regardless of their frequency.

There will never be proof of Nyquist that uses square waves of a uniform size and number.

Consequently, any implementation of A/D - D/A cannot invoke Nyquist to support their work.

Certainly, the higher the sample rate, the better you can approximate the original signal, and perhaps to the point where the human ear cannot detect any deviation from the original source.

But there is still information missing. That you cannot hear it is irrelevant. Also, the point at which you cannot hear it will be forever debatable.

As to LP, there is no question that LP is a lossy format. One look at rumble -S/N is enough. If they did not screw up 44.1/16, it would probably be good enough for 99% of the population, including me. And I love the sound of vinyl.

I've seen too many from them that were badly clipped, and some that were 44.1K upsampled to "Hi-Res." No thanks.

Agree they are the worst with this ......

The proof offered by Nyquist uses SINC functions, not uniformly sized square waves, regardless of their frequency.

There will never be proof of Nyquist that uses square waves of a uniform size and number.

Consequently, any implementation of A/D - D/A cannot invoke Nyquist to support their work.

Again, I see how much the Nyquist sampling theorem is misunderstood in its intricacies, even though it is famous and keeps getting quoted, mostly because digital audio (CDs etc.) is part of everyday life.

No A/D or D/A uses square waves as you wrote; that is not correct.

Every A/D has an input low pass filter and every D/A has an output low pass filter.

The thing is, the sampling theorem requires a brickwall filter in both of these places, whose time response is the sinc() function, as you wrote.

A sinc() function starts at -infinity and goes to +infinity, which means a brickwall filter will start reacting to an input signal's last value at the very beginning of time. In math this is okay, but in real life and in real time it is not possible. So if a regular filter is used, some phase errors are introduced at the higher in-band frequencies, and some of the out-of-band higher-frequency components get folded back in-band, causing more errors (aliasing). This happens on both the A/D and the D/A side.
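The "folding back" mentioned here can be shown with a small worked example. The numbers (a 25 kHz tone and a 44.1 kHz sampling rate) and the helper name `alias_frequency` are assumptions picked for illustration.

```python
import math


def alias_frequency(f, fs):
    """Frequency in [0, fs/2] where a tone at f appears after sampling at fs,
    assuming nothing removed it before the sampler."""
    k = math.floor(f / fs + 0.5)  # nearest integer multiple of fs
    return abs(f - k * fs)


fs = 44100.0
f_in = 25000.0                    # above fs/2 = 22050 Hz
f_alias = alias_frequency(f_in, fs)

# The sample values of the two tones are indistinguishable.
original = [math.cos(2 * math.pi * f_in * n / fs) for n in range(64)]
aliased = [math.cos(2 * math.pi * f_alias * n / fs) for n in range(64)]
worst = max(abs(a - b) for a, b in zip(original, aliased))

print(f_alias, worst)
```

Once sampled, the 25 kHz tone cannot be told apart from a 19.1 kHz tone, which is why out-of-band content has to be dealt with before the sampler rather than after it.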

To come close to the theory's requirements, some implementations use oversampling and then digital filtering to emulate the sinc() brickwall filter and reduce these errors, but then you get ringing before an event has happened in time. This is perfectly correct in terms of the theory, and actually exactly what is supposed to happen, but it is unnatural to the listener. For instance, a piano in real life never starts making a sound before the key is struck, and neither does a drum or anything else.

If a higher-than-necessary sampling rate is used together with a regular analog filter, the filter's corner frequency will sit well above the upper limit of human hearing, so it will cause very little phase distortion within the audible band. And the signal components that get folded back in-band will fall into a range that humans can't hear as well. The higher the sampling frequency, the more this holds and the better it gets.

Or, again with higher-than-necessary sampling, if an oversampling brickwall digital filter is used instead of an analog filter, the filter depends less on future values than in the lower-sampling-frequency case. (The sinc function becomes narrower as its filter frequency increases.) This reduces how far ahead of the real event the output signal starts to react.

P.S. A "time limited" signal is still an infinite signal. It is infinite because we know (or assume) that its value is zero before and after its "time limited" period. We don't treat these parts of the signal as unknown.

Interesting. Let me try to get this straight.

Does a single sample, before filtering, appear as a square wave/pulse with a certain amplitude?

Then this "brickwall" filter modifies this pulse into 1/2 (or more) of a sinc function due to its time response.

In a one-bit D/A, this would be done for each bit.

The output of the brickwall filter will be the whole (not 1/2) sinc function itself (in the theoretical case).

I had read about one-bit D/A in the past, but don't remember now how it works, so can't comment on that.

OK, so what shape is the input to the brickwall filter in a regular DAC?

I always assumed that was a square.

I don't know; it depends on the DAC, I guess. My guess is that it will be something like a trapezoid: it rises to the sampled value with a slope, stays there a bit, and then goes down to zero with a slope. It depends on settling time etc., I suppose. But since these get filtered out, it is not that important as long as all this happens fast enough to get filtered, I think 🙂

If you ask in theory what is there before the filter, it is a single point in time: zero, then jump to value, then back to zero immediately.

It is in the math; you need to take a bit of a deeper look.

I know the math of the proof very intimately.

The thing is, the sampling theorem requires a brickwall filter in both these places, whose time response is sinc() function as you wrote.

Sinc() is indeed the required reconstructor/anti-imaging filter. But the theorem does not specify the anti-alias filter. The story of the theorem starts with the assumption that the input signal has been band-limited. It does not tell you how to do this.

A sinc() function starts at -infinity and goes to +infinity, which means brickwall filter will start reacting to an input signal's last value at the very beginning in time. This in math is okay but in real life and real time is not possible.

It can be done to arbitrary accuracy, and the cost isn't even that high.

It takes only hundreds of filter taps before the terms in the Sinc() impulse response fall far below the system noise. Try it.
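For anyone who wants to "try it", here is a minimal sketch. It assumes a Blackman-windowed sinc, 255 taps and a cutoff at a quarter of the sampling rate; all three choices are illustrative assumptions and not a description of any particular converter's filter.

```python
import cmath
import math


def windowed_sinc_lowpass(num_taps, cutoff):
    """Low-pass FIR taps: ideal sinc response times a Blackman window.
    cutoff is in cycles per sample (0 to 0.5); num_taps should be odd."""
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        t = n - mid
        if t == 0:
            ideal = 2 * cutoff
        else:
            ideal = math.sin(2 * math.pi * cutoff * t) / (math.pi * t)
        window = (0.42
                  - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
                  + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        taps.append(ideal * window)
    total = sum(taps)
    return [h / total for h in taps]  # normalize to unity gain at DC


def gain_db(taps, freq):
    """Magnitude response in dB at freq (cycles per sample)."""
    resp = sum(h * cmath.exp(-2j * math.pi * freq * n) for n, h in enumerate(taps))
    return 20 * math.log10(max(abs(resp), 1e-300))


taps = windowed_sinc_lowpass(255, 0.25)
print(gain_db(taps, 0.10))  # passband: close to 0 dB
print(gain_db(taps, 0.40))  # stopband: far below the passband
```

With a few hundred taps the passband stays essentially flat while the stopband sits tens of dB down; a different window or more taps trades transition width against stopband depth.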

So if a regular filter is used, then some phase errors at higher in band frequencies get introduced and some of the higher frequency components that are out of the band will get folded back to the inband causing more errors (aliasing).

You describe it as it was in 1984.

To come close to the theory requirements, some implementations use oversampling and then digital filtering to emulate sinc()

Not 'some', but rather 'the overwhelming majority'.

you get ringing before an event happened in time. Which is perfectly correct in terms of theory, and actually exactly what is supposed to happen, but this is unnatural to the listener.

First the replay side. If you play back a properly band-limited signal through a pre- and post-ringing Sinc() reconstructor there will be no pre- and post-ringing added to the output signal. Really. Try it if you don't believe me.
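A small numerical sketch of this point, using a truncated version of the sinc interpolation sum. The tone frequency of 0.1 cycles per sample and the truncation to 2000 samples on each side are arbitrary assumptions for the example, and the truncation itself leaves a small residual error.

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples, first_index, t):
    """Truncated sinc interpolation at time t (in sample periods).
    samples[k] is the sample taken at index first_index + k."""
    return sum(s * sinc(t - (first_index + k)) for k, s in enumerate(samples))


N = 2000   # samples kept on each side of t = 0 (assumed truncation)
f = 0.1    # tone frequency in cycles per sample, well below 0.5

# Band-limited input: the reconstruction between samples matches the tone.
tone = [math.sin(2 * math.pi * f * n) for n in range(-N, N + 1)]
tone_error = abs(reconstruct(tone, -N, 0.5) - math.sin(2 * math.pi * f * 0.5))

# Non-band-limited input: one isolated sample at index 0. The output is the
# bare sinc, which is already non-zero 1.5 sample periods before the sample.
impulse = [1.0 if n == 0 else 0.0 for n in range(-N, N + 1)]
pre_ring = reconstruct(impulse, -N, -1.5)

print(tone_error, pre_ring)
```

The band-limited tone comes back clean apart from the small truncation error, while the isolated sample shows the sinc's pre-ringing, because such a sample sequence cannot have come from a properly band-limited input in the first place.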

Now the input side ... yes, if the ADC's anti-aliasing filter was made as a linear phase Sinc()-emulating FIR then indeed the sampled signal will have the pre- and post-ringing of the AA imprinted on it. This ringing is at Fs/2, and it is common to all steep linear phase filters.

Is it audible?

With Fs/2 below 16kHz one would say yes. In fact this caused serious problems in the development of the sub-band filters for perceptual coders like MP3. These filters operate all over the audible band, and so their ringing has a fair chance of becoming audible.

But from Fs/2 > 20 kHz this is far less clear. In fact there is not a single study that gives proof that pre-ringing at 22.05kHz is generally audible. Sorry. (If you have evidence of this then please post it here, I would welcome such warmly.)

Of course no-one forces anyone to use linear phase Sinc()-like filters for anti-aliasing. But still, that's exactly what the industry as a whole started to do when sigma-delta ADCs became the architecture of choice: half-band linear phase FIRs were/are about the most economical solutions, and further, all the math and development were already done before, for the DAC side. So it was a natural. Perhaps not the optimal. Who knows?

You are aware of Peter Craven / Meridian's minimum-phase apodizing filters for CD replay, aren't you? These get rid of the ADC's pre-ringing. But again, there is no solid evidence that this matters, not for adults and for music as the payload signal(*). So if the Meridian players sound better than other gear then the fundamental reason still may be unrelated to this whole ringing story.

(* Are you aware of K.Howard's blind listening experiments with maximum phase filters?)

If a higher than necessary sampling is used, and if a regular analog filter is used...

Yes. But that's not how today's ADCs work.

Since the signal being sampled is initially analog (the real sound of a human voice, a piano or a cymbal), there is no guarantee that it will naturally be band-limited to 20 kHz. The theorem says it must not contain any component above half the sampling frequency at all. And how will you achieve this when your input has (or can have) components above fs/2? You either have to brickwall it, hence sinc(), or you have to choose a higher-than-required sampling frequency and use an analog (causal) filter with a corner frequency well below fs/2, so that the high-frequency components that get past the filter are insignificant in amplitude. So, repeating myself, you have two choices: brickwall or higher sampling.

Sinc() is indeed the required reconstructor/anti-imaging filter. But the theorem does not specify the anti-alias filter. The story of the theorem starts with the assumption that the input signal has been band-limited. It does not tell you how to do this.

You are writing about how well the sinc() brickwall can be approximated in practice. I didn't say anything about that. My point is that the real sinc() itself, not how well the approximation is done, is problematic, because it is acausal.

It can be done to arbitrary accuracy, and the cost isn't even that high.

It takes only hundreds of filter taps before the terms in the Sinc() impulse response fall far below the system noise. Try it.

You are requoting the theory; of course it won't if the signal was "properly band-limited". Real music is not guaranteed to be properly band-limited. That brings us back to the D/A's filter, about which I made my point above.

First the replay side. If you play back a properly band-limited signal through a pre- and post-ringing Sinc() reconstructor there will be no pre- and post-ringing added to the output signal. Really. Try it if you don't believe me.

Now the input side ... yes, if the ADC's anti-aliasing filter was made as a linear phase Sinc()-emulating FIR then indeed the sampled signal will have the pre- and post-ringing of the AA imprinted on it. This ringing is at Fs/2, and it is common to all steep linear phase filters.

As you wrote above yourself, if the input signal didn't have components above Fs/2 (i.e. it was properly band-limited), it wouldn't have any pre-/post-ringing with sinc() usage. Just saying 🙂

Is it audible?

I don't know, but for some reason people don't tell this part of the story when it comes to Nyquist.

What is publicized and quoted over and over again is that, because of Nyquist (and Shannon and Whittaker and who else?? 🙂), you record and reproduce exactly without any problems, which is not that simple.

What bothers me most is that it is acausal, which is completely unnatural, as I wrote; this doesn't happen in nature. Just because it bothers me in my mind, does it also bother me when I am listening? I don't know; I never really tested this on myself. I know that I don't like my current processor's sound; I used to like the previous one. There is something unnatural, strange, uninvolving about this one... I digress, though.

I have seen their document on this but haven't had time to read it yet. My initial reaction (without reading it, just seeing the title and description) was somewhat sceptical: since they don't know what kind of filter was used on the recording side, how can they remove it? But again, I haven't read it yet.

You are aware of Peter Craven / Meridian's minimum-phase apodizing filters for CD replay, aren't you? These get rid of the ADC's pre-ringing. But again, there is no solid evidence that this matters, not for adults and for music as the payload signal(*). So if the Meridian players sound better than other gear then the fundamental reason still may be unrelated to this whole ringing story.

(* Are you aware of K.Howard's blind listening experiments with maximum phase filters?)

"Maximum phase" is not the issue here. Acausality (pre-ringing) is the issue. I have heard and read different numbers quoted for the threshold of audibility of group delay (which relates to maximum phase) at different frequencies etc., but these were all about analog filters and so only post-ringing. So I don't know how relevant what you refer to is.

If you noticed, nowhere in my posts so far have I mentioned anything about being "audible". In my opinion it is very difficult to do controlled tests while limiting the number of variables that can affect the results (for instance, as you say, if Meridian sounds better, what is the guarantee that it isn't something else it does differently?). By the way, I am not a person who believes caps sound better just because, etc. And I don't own an LP.

I am pointing out that the A/D to D/A chain still has its thorns, even though it is a rose 🙂

And unfortunately most people don't know, see or talk about the real issue(s), but instead repeat wrong conclusions, saying A/D/A can't differentiate phase from amplitude at high frequencies etc., as you saw.

And whether or not it is audible, if it is possible to do better, why not do better? It was supposed to be High Fidelity: to reproduce the original as faithfully as possible. I can understand the audibility argument when a trade-off has to be made between two things that both break fidelity. But if the trade-off is removed, why not go for higher fidelity, whether or not it is audible?
