To me this sounds like another argument for a big, narrow waveguide (NOT beaming!) 🙂: narrow to eliminate the (very) early reflections without killing the reverberation that contributes to spaciousness, and big because then it can behave naturally as a transient-perfect ("non phase-mingling") source over a wider frequency range. How wide is enough? Down to 700 Hz? 500 Hz? Even lower? ...

230-290 Hz for my various conical Syn/Unitys, 90-75 degrees horizontal (per Keele's formula).
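Keele's rule of thumb relates the lowest frequency at which a horn holds its nominal coverage to the mouth size in that plane: f ≈ K / (W·θ). A minimal sketch that inverts it to see what horizontal mouth widths the quoted figures would imply, assuming the commonly quoted constant K ≈ 1×10⁶ in·deg·Hz (about 25,400 m·deg·Hz) and pairing the numbers in the order given (230 Hz with 90°, 290 Hz with 75°). The actual mouth dimensions of these horns are not stated, so the widths are illustrative only.

```python
# Keele's pattern-control rule of thumb: f = K / (W * theta)
# ASSUMPTION: K = 1e6 in*deg*Hz, i.e. about 25_400 m*deg*Hz (commonly quoted value).
KEELE_K = 25_400.0  # m * deg * Hz


def pattern_control_freq(mouth_width_m: float, coverage_deg: float) -> float:
    """Lowest frequency (Hz) at which a mouth of this width holds the coverage angle."""
    return KEELE_K / (mouth_width_m * coverage_deg)


def mouth_width_for(freq_hz: float, coverage_deg: float) -> float:
    """Mouth width (m) needed to hold the coverage angle down to freq_hz."""
    return KEELE_K / (freq_hz * coverage_deg)


if __name__ == "__main__":
    # Pairing of frequency and angle is an assumption (taken in the order quoted).
    for f, theta in [(230.0, 90.0), (290.0, 75.0)]:
        w = mouth_width_for(f, theta)
        print(f"{theta:.0f} deg down to {f:.0f} Hz -> mouth width ~ {w:.2f} m")
```

Run as-is, this prints roughly 1.23 m and 1.17 m, i.e. both figures point to horns of similar horizontal size.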

mark100, have you paid attention to listening distance and the quality of the stereo image / phantom center? Have you noticed a listening distance with your setup where the local room sound mostly disappears, i.e. the transition between close and far sound? If so, does phase linearization, for example, affect the transition distance? Does it improve the sound far away? How about up close?

If this really is the same as, or similar to, what Griesinger writes about, so that perceptually the brain either locks in or not (an on/off thing), then it should be enough to have directivity and room acoustics such that the close sound happens at the practical listening position.


It's still not at all clear to me how a brain can "lock in" on something when it doesn't know how it should sound in the first place. Does it work only for sufficiently familiar sounds, like human speech? How do you know what the correct/actual phases of the harmonics of a particular instrument are? And are they preserved in the recording at all? It just doesn't make much sense to me.


These hex waveguides look like they'd be really easy to Synergize.

I've added a new waveguide to my website (type 460-36):

http://www.at-horns.eu/athex.html

https://a360.co/3DlzDxv

You should check out Griesinger's materials. Basically, your voice, for example, has a fundamental frequency and then a harmonic series at integer multiples of the fundamental. If your voice's fundamental were, say, 100 Hz, there would be harmonics at 200 Hz, 300 Hz, 400 Hz, etc.; this should be familiar stuff. Griesinger uses a picture of a recorded voice waveform to illustrate this: when the phases of the harmonics are preserved, there is a huge amplitude peak in every cycle of the fundamental, where all the harmonics are in phase with it. This gives a crazy signal-to-noise ratio; basically the sound sticks out by some 12 dB above the background noise or so, much more than a sound whose phases are not aligned. This was in a YouTube presentation I watched the other night. I think he said it's effectively the same hearing mechanism that hears pitch, and the brain is able to track several people speaking simultaneously as long as they have even slightly different pitches to track individually. There was something about the interleaving frequency-band filters and such; I got the impression that the hearing system is ultimately quite simple (low information) but capable of very complicated/accurate things with a little bit of processing.
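The "huge peak every cycle" point can be made concrete with the crest factor (peak-to-RMS ratio) of a harmonic series. A minimal sketch, assuming equal-amplitude harmonics of the 100 Hz fundamental from the example above (up to 1 kHz) and using random phases as a stand-in for a "phase mingler"; crest factor is only a proxy for the peaks described here, not Griesinger's model of hearing. Both signals have identical magnitude spectra and identical RMS, so any difference in crest factor comes from the phases alone.

```python
import math
import random


def harmonic_complex(f0, n_harmonics, phases, fs=48_000, periods=4):
    """Equal-amplitude cosine harmonics k*f0 (k = 1..n) with per-harmonic phases in radians.

    The length is an integer number of fundamental periods, so the RMS is sqrt(n/2)
    regardless of the phases.
    """
    n_samples = round(fs * periods / f0)
    return [
        sum(math.cos(2 * math.pi * k * f0 * i / fs + phases[k - 1])
            for k in range(1, n_harmonics + 1))
        for i in range(n_samples)
    ]


def crest_factor_db(x):
    """Peak-to-RMS ratio in dB."""
    peak = max(abs(v) for v in x)
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return 20 * math.log10(peak / rms)


if __name__ == "__main__":
    f0, n = 100.0, 10                      # 100 Hz fundamental, harmonics up to 1 kHz
    aligned = harmonic_complex(f0, n, [0.0] * n)
    rng = random.Random(1)                 # hypothetical "phase mingler": random phases
    scrambled = harmonic_complex(f0, n, [rng.uniform(0, 2 * math.pi) for _ in range(n)])
    print(f"aligned:   {crest_factor_db(aligned):.1f} dB")  # 10*log10(2n) = 13.0 dB for n = 10
    print(f"scrambled: {crest_factor_db(scrambled):.1f} dB")
```

With all harmonics in phase, the peak is n while the RMS is sqrt(n/2), so the crest factor is 10·log10(2n) dB; any other set of phases can only lower the peak.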

I'm not an expert on this, I've just done some reading, but it makes a lot of sense to me this way 🙂 Preserved phase of the harmonics means a better signal-to-noise ratio. The brain just tries to make sense of all the noise going on and automatically focuses on the close stuff with a higher SNR, since that is close and likely important for survival.


Well, think of the following: the ears are on 24/7, with a constant stream of audible noises. How does your brain decide that a sound/noise is actually something meaningful to process, and then, after processing, that it was, for instance, someone calling your name? (This process is in fact much faster than you can read this ;-))

Check the latest presentations by David Griesinger: it begins with some cue that triggers the brain's attention; this can, for instance, be a visual cue.


So basically, for this to work you need as many high-level transient peaks in the signal as possible, right? Maybe a random "phase mingler" tends to average this out so the peaks are gone, but I think that for, e.g., a typical smooth phase response of a loudspeaker, the transients are still there to a large degree, and only the shape of the signal waveform changes... That would lead to the observation that the phase response (and its deviation from flat) is really not that relevant for the direct sound.
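Whether a smooth phase response actually flattens the peaks can be checked numerically. A minimal sketch under stated assumptions: an equal-amplitude harmonic series of 100 Hz up to 2 kHz, a pure 1 ms delay as the linear-phase case, and a first-order allpass with a hypothetical 500 Hz corner as a crude stand-in for a "smooth" loudspeaker phase response (real crossover phase is usually steeper). A linear phase only shifts the waveform in time, so its crest factor equals the zero-phase case; the allpass shows how much peak level one particular smooth, non-linear phase costs for this test signal.

```python
import math


def harmonic_complex(f0, n_harmonics, phase_fn, fs=48_000, periods=4):
    """Equal-amplitude harmonics k*f0 whose phase (radians) is phase_fn(frequency_hz)."""
    n_samples = round(fs * periods / f0)
    freqs = [k * f0 for k in range(1, n_harmonics + 1)]
    phases = [phase_fn(f) for f in freqs]
    return [
        sum(math.cos(2 * math.pi * f * i / fs + p) for f, p in zip(freqs, phases))
        for i in range(n_samples)
    ]


def crest_factor_db(x):
    """Peak-to-RMS ratio in dB."""
    peak = max(abs(v) for v in x)
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return 20 * math.log10(peak / rms)


def linear_phase(delay_s):
    """Pure delay: phase = -2*pi*f*delay, i.e. constant group delay."""
    return lambda f: -2 * math.pi * f * delay_s


def first_order_allpass(fc_hz):
    """Smooth, non-linear phase of a first-order allpass: -2*atan(f/fc)."""
    return lambda f: -2 * math.atan(f / fc_hz)


if __name__ == "__main__":
    f0, n = 100.0, 20  # harmonics up to 2 kHz
    cases = {
        "zero phase": lambda f: 0.0,
        "1 ms delay": linear_phase(1e-3),
        "allpass, fc=500 Hz": first_order_allpass(500.0),  # hypothetical corner
    }
    for name, fn in cases.items():
        print(f"{name:20s} {crest_factor_db(harmonic_complex(f0, n, fn)):5.1f} dB")
```

Swapping in other phase functions (e.g. a measured loudspeaker phase sampled at the harmonic frequencies) is a cheap way to test the "smooth phase keeps the peaks" hypothesis for a specific design.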


Yeah, and if I remember correctly, he said in a talk that detection happens at the high frequencies: harmonics up in the kHz range can be detected very fast, so localization and the like happen fast. The fundamental of a voice could even be removed, because information about it is already encoded in the harmonic series. Small Bluetooth speakers, for example, take advantage of this: the bass is just the harmonics. Probably two octaves of actual bass are simply not there, yet the brain "hears" them thanks to the harmonics.
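The "missing fundamental" effect can be illustrated with a signal that contains only harmonics 2 to 6 of 100 Hz. A minimal sketch, not a model of hearing: a single DFT bin confirms there is no energy at 100 Hz, while a simple autocorrelation pitch estimator (a common textbook method, chosen here as an assumption) still finds a 10 ms period, i.e. 100 Hz, because the spacing of the harmonics carries the fundamental's periodicity. The 8 kHz sample rate and the 60-400 Hz search range are arbitrary choices.

```python
import cmath
import math


def harmonics(f0, ks, fs=8_000, periods=4):
    """Sum of unit-amplitude cosines at k*f0 for k in ks, over an integer number of f0 periods."""
    n = round(fs * periods / f0)
    return [sum(math.cos(2 * math.pi * k * f0 * i / fs) for k in ks) for i in range(n)]


def amplitude_at(x, f, fs=8_000):
    """Amplitude of the component at f (Hz), via a single DFT bin."""
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * f * i / fs) for i, v in enumerate(x))
    return 2 * abs(acc) / n


def autocorr_pitch(x, fs=8_000, fmin=60.0, fmax=400.0):
    """Crude pitch estimate: lag with the largest circular autocorrelation in [1/fmax, 1/fmin]."""
    n = len(x)
    best_lag, best_r = None, -math.inf
    for lag in range(int(fs / fmax), int(fs / fmin) + 1):
        r = sum(x[i] * x[(i + lag) % n] for i in range(n)) / n
        if r > best_r:
            best_lag, best_r = lag, r
    return fs / best_lag


if __name__ == "__main__":
    x = harmonics(100.0, ks=range(2, 7))  # 200..600 Hz, no 100 Hz component at all
    print(f"level at 100 Hz: {amplitude_at(x, 100.0):.2e}")  # ~0: fundamental is absent
    print(f"level at 200 Hz: {amplitude_at(x, 200.0):.2f}")   # 1.00
    print(f"estimated pitch: {autocorr_pitch(x):.1f} Hz")     # 100.0
```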

I'm not sure how transients relate here; they probably do, but a sustained note from an instrument or from your voice, for example, would have the fundamental and the harmonic series playing at the same time all along.

In a Griesinger presentation he demonstrates the importance of phase for retaining the correct envelope of a sound. So phase is quite important.

Here is one, for example; this is what I "watched" a few nights ago (I fell asleep a few times), but the same material is all over his videos:

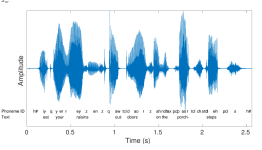

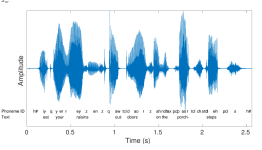

Edit: here planet10 posted an image that shows "phase coherency", which is basically this: https://www.diyaudio.com/community/...s-under-5000-pair-passive.400207/post-7377232 All harmonics are in phase with the fundamental, so every cycle they sum to a huge peak. I can't find a good image right now of what the recorded waveform envelope looks like; it's something like this, lots of peaks, the light blue hair 🙂

Another paper of his on the subject 🙂

https://www.akutek.info/Papers/DG_Neural_Mechanism.pdf



Hi tmuikku,

I'm afraid I'm not a good one to ask these types of questions... particularly about stereo, imaging and envelopment.

I love audio in general much more than stereo. To me, good stereo is a subset of good audio, and not the primary goal.

So I listen to stereo, mono and LCR, both near and far, at the listening position and all around, including other rooms and outdoors.

Without giving too much credence to stereo itself.

My experience is that achieving good time and phase alignment improves sound with all of my various listening methods.

Perhaps mainly because it also assures excellent frequency response and better polars... but whatever the reason, it undoubtedly helps all listening methods.

The whole direct field / critical distance / reflected field business that comes into play indoors as I move around from near to far makes for such a different jumble of in-room auditory experiences... I just try to enjoy what each location offers.

How does a brain know what the correct envelope is? This explanation doesn't make sense to me.

I would understand, however, that the high-level (repeating) peaks in the signal provide some important cues. So it would be important not to lose them (which can happen as a result of (gross) phase distortion, but probably not due to the phase response of a typical loudspeaker).


Hi, thanks mark100!

Pattern control down to low frequencies makes sense in your context: if you happen to listen from the side of the speakers, the highs are projected forward but the lows are not, so the closest speaker just seems to be mumbling around and dominates the perception. I've got a nice time-intensity toe-in stereo setup working in the living room and have experienced this. It's spooky to sit near (well off-axis of) the other speaker and not hear much sound from it; the sound (phantom center) still pretty much appears between the speakers, except for the lows. The nearby speaker mumbles and draws attention, which is not too nice, but that's just the exception of sitting close to and beside one speaker; further away in the room the sound averages out better and the balance is mostly nice.

It would be fun to be able to listen to a system like yours, a full-range point source 🙂


This is all very interesting.

I wish I understood more, perhaps I can evolve that over time🙂

With my system, I've tried nearer listening positions and hear more of the direct sound: an open soundstage, with still enough central image.

I've also tried further away, and there the mids and upper mids image less wide and less interesting.

The flip side is that beyond 4 m from the mid-bass horn mouths and tapped horns, those really sing and give the bass punch they should.

So it's a bit of a dilemma.

I'm fortunate in that I can leave the bass channels where they are and move the upper channels physically nearer to offset this.

I time align in DSP.

For years I used the usual approach: aligning the first positive peaks as they arrive at the ear / microphone.

More recently I've been playing with, and liking, aligning the mid-bass, mid and upper-mid/tweeter horn mouths to the exit time plus the delay of the tapped-horn path.

This was something of a revelation. @hornydude first introduced me to this refinement.

Something to do with the sound exiting the horn mouths to integrate with the room in unison, rather than in strict time alignment.

That's how I can make sense of it anyway.

The difference is quite noticeable.

I'd sum it up as distortion-free: a shrillness is taken out of higher-pitched vocals and music.
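One reading of the mouth-exit approach, offered as an interpretation rather than @hornydude's exact procedure: delay each way so that its sound leaves its own horn mouth at the same moment as the tapped horn's output, instead of aligning first arrivals at the microphone. A minimal sketch under that assumption, with hypothetical internal (driver-to-mouth) path lengths and c = 343 m/s; real horn and tapped-horn delays are frequency dependent and are better taken from measurements.

```python
SPEED_OF_SOUND = 343.0  # m/s, roughly 20 °C air (assumption)


def mouth_exit_delays_ms(internal_paths_m):
    """DSP delays (ms) so that sound leaves every horn mouth at the same instant.

    internal_paths_m maps a way name to its driver-to-mouth acoustic path length (m).
    The way with the longest path (typically the tapped horn) gets zero delay; every
    other way is delayed by its path-length shortfall divided by the speed of sound.
    """
    longest = max(internal_paths_m.values())
    return {
        name: 1000.0 * (longest - path) / SPEED_OF_SOUND
        for name, path in internal_paths_m.items()
    }


if __name__ == "__main__":
    # HYPOTHETICAL path lengths, for illustration only; measure or model your own.
    paths = {
        "tapped horn": 2.40,
        "mid bass horn": 1.10,
        "mid horn": 0.45,
        "upper mid / tweeter horn": 0.20,
    }
    for name, d in mouth_exit_delays_ms(paths).items():
        print(f"{name:26s} {d:6.2f} ms")
```

Unlike first-arrival alignment, this deliberately ignores the mouth-to-listener distances, so the two methods only agree when all mouths are equidistant from the listening position.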


I think it's called evolution 😉. Survival of the fittest. We generally want to survive, and so we still pay attention to sounds that are close by.

It isn't that hard for the brain to distinguish between a close sound and a sound further away, as the "pop" on starting consonants, as Griesinger called it, is still clear if the phase information is intact. (That "pop" stems from the alignment of the phases; as @tmuikku said, it's louder at the start, hence the "pop".)

It should be there in instrument sounds as well: the attack of a note.

Yes, basically, if you look at the sound processing of our system, it reminds me of the ERG concept: Existence, Relationships, Growth. Processing times: microseconds, milliseconds, seconds and more.

If the harmonics' phases remain constant, then they are correlated. If they are correlated, the ear will insert a correlated fundamental (even if the fundamental is missing). This is why the harmonics, say above 700 Hz, are more critical than the fundamentals, and why it is not necessary to have high DI at LFs: the ear will just insert the correct fundamental and the image is solid.

Having studied Griesinger, I have two comments: first, it is not straightforward to discern which parts of his auditorium-centric work are relevant to loudspeakers; second, his interest is strictly in the perception of orchestral music, and he never even mentions studio work. Hence, while his work is enlightening and interesting, it is not specifically relevant to what we are doing (or at least what I was doing).

The "correct envelope" is the one that was the original envelope of the source. The ear will create this if the signal is correlated.

And yes, our HF perception happens very fast, which I have been saying for a long time. You can see this by looking at the gammatone filters that Griesinger (and everyone else) uses in models of hearing.
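The speed difference follows from filter bandwidth: wider auditory filters at high frequencies have shorter impulse responses. A minimal sketch, assuming the Glasberg & Moore (1990) ERB formula and the common 4th-order gammatone filter with bandwidth 1.019·ERB; the exact parameters in Griesinger's model may differ. It computes when each filter's envelope, t³·exp(-2πbt), reaches its peak, a rough measure of how quickly that channel responds.

```python
import math


def erb_hz(f_hz):
    """Equivalent rectangular bandwidth (Glasberg & Moore 1990)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def gammatone_envelope_peak_s(f_hz, order=4, b_factor=1.019):
    """Time at which a gammatone envelope t**(order-1) * exp(-2*pi*b*t) peaks, b = b_factor*ERB.

    Setting the derivative to zero gives t_peak = (order - 1) / (2*pi*b).
    """
    b = b_factor * erb_hz(f_hz)
    return (order - 1) / (2 * math.pi * b)


if __name__ == "__main__":
    for f in (100, 500, 1000, 4000):
        print(f"{f:5d} Hz: ERB {erb_hz(f):6.1f} Hz, envelope peak after "
              f"{1000 * gammatone_envelope_peak_s(f):5.2f} ms")
```

Under these assumptions the 4 kHz channel peaks roughly thirteen times sooner than the 100 Hz channel (about 1 ms versus about 13 ms), since the peak time scales inversely with the ERB.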

Regarding "a listening distance with your setup where the local room sound mostly disappears, the transition between close and far sound": is this what you mean by the transition distance? There is a name for this: the critical distance, where the direct field and the reverberant field have equal strength. Close in, the direct field dominates; farther out, the reverberant field becomes important. This IS NOT the "near field" (as often mis-stated); that is something else.
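For a rough number, the critical distance depends on the source's directivity factor Q and the room's absorption. A minimal sketch of the standard diffuse-field estimate (Sabine absorption, room constant approximated by total absorption), using a hypothetical 60 m³ room with an RT60 of 0.4 s; real small rooms are far from diffuse, so treat the result as an order-of-magnitude guide. It also shows why a narrower waveguide moves the crossover between direct and reverberant sound farther out: r_c grows with the square root of Q.

```python
import math


def critical_distance_m(q, volume_m3, rt60_s):
    """Distance (m) at which direct and reverberant levels are equal.

    Uses r_c = sqrt(Q * A / (16*pi)), approximating the room constant by the Sabine
    absorption A = 0.161 * V / RT60; this reduces to r_c ~ 0.057 * sqrt(Q * V / RT60).
    """
    absorption_m2 = 0.161 * volume_m3 / rt60_s
    return math.sqrt(q * absorption_m2 / (16 * math.pi))


def q_from_di(di_db):
    """Directivity factor Q from directivity index DI (dB)."""
    return 10 ** (di_db / 10)


if __name__ == "__main__":
    # HYPOTHETICAL living room: 60 m^3, RT60 = 0.4 s.
    for di_db in (3.0, 6.0, 10.0):
        rc = critical_distance_m(q_from_di(di_db), 60.0, 0.4)
        print(f"DI {di_db:4.1f} dB -> critical distance ~ {rc:.2f} m")
```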
