Anyone running a center channel? It seems easy enough to generate from the L and R channels in a DSP*.

*The signal should be (L+R)*k, though I can't recall the source that gives the value of k!
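For anyone who wants to try it, here is a minimal sketch of that sum in Python. The function name `derive_center` is made up for illustration, and k = 0.5 is only a placeholder assumption, since the actual value of k is not given here.

```python
def derive_center(left, right, k=0.5):
    """Return a derived center channel C = k * (L + R), sample by sample.

    k = 0.5 is an assumed placeholder; the thread does not state the value.
    """
    if len(left) != len(right):
        raise ValueError("left and right must have the same length")
    return [k * (l + r) for l, r in zip(left, right)]


# Content common to both channels survives in the sum;
# content that is out of phase between L and R cancels.
left = [0.5, 0.25, -0.5]
right = [0.5, -0.25, 0.0]
center = derive_center(left, right)
print(center)
```

In a real DSP the same sum would run per sample on the live stream; the lists here just stand in for short buffers.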

It was a frequently used configuration during the early days of stereo experimentation that seems to have been dropped purely for cost reasons:

Stereophonic sound - Wikipedia (also mentioned in the horn book)

Two days ago I picked up a matching center channel to my main speakers, which use Celestion 1425 with 10” WG and 10” woofer. I have been using Dolby surround for music listening with stereo LR and surrounds, it’s fantastic. If you haven’t tried Dolby surround I highly recommend it. It’s a completely different ballpark from older up-mixing formats.

I was stunned to realize that the center image was smaller with LCR than just LR. The overall soundstage was smaller and less 3D. The center image was just as strong with LR. I had to go up a couple times and make sure the center wasn't on. This was with full Audyssey XT32 in a room that has nothing in terms of diffraction between the speakers and listener.

Needless to say, the center channel is going back and I’ll be sticking to LR + surround.

Interesting. When I've used a center for music I had to turn it down 4-6dB below where it "should" be. That might work for you. Basically I'd start it very low and turn it up slowly until it just had an audible effect. That's what I liked.
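As a rough guide to what a 4-6 dB trim means as a multiplier on the center signal, here is a small sketch. The helper names are hypothetical; the only thing assumed is the usual amplitude relation gain = 10^(dB/20).

```python
def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def trim(samples, db):
    """Scale a block of samples by a level change given in dB."""
    gain = db_to_gain(db)
    return [gain * s for s in samples]


# The trims suggested above, expressed as amplitude multipliers.
for db in (-4.0, -6.0):
    print(f"{db:+.0f} dB -> x{db_to_gain(db):.3f}")
```

So turning the center down 6 dB roughly halves its amplitude.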

I once ran an old cinema in San Francisco where we had mono + surround. It worked just fine, but it was kinda funny.

In terms of gradually turning it up from zero, while the Audyssey app is generally fantastic, it makes adjustments like that quite time consuming, as you have to upload the settings to the receiver each time.

I did try tinkering with the center's level a bit though, a little over, a little under. But no matter what I did, it was a clear detraction from stereo LR. This was NOT the result that I was expecting. I'm having jzagaja (Jack) make some large waveguides for me. I was going to have him make three, as I assumed sight unseen (sound unheard?) that they would be superior to just stereo LR....

Glad I did this little experiment beforehand.

With properly set up stereo waveguides, the center image, and imaging in general, is just so spot on. Saved me some $$$.

Three identical speakers are recommended for home theater. I don't know if that will hold true for Atmos music or not. You might want a center channel that has an anthropomorphic polar pattern.

It should be noted that this is not at all exclusive to waveguides. It doesn't matter what the speakers are: correct, precise symmetry yields exactly the same result. However, the more directional they are, the more remarkable the dial-in will be. Flat panels, for example, tolerate about 1/4" at most of misalignment from your listening position. Vertical alignment is just as important.

No, early reflections from non-directivity-controlled speakers will detract from imaging in most domestic spaces.

Of course some speakers are better than others, but the same principles apply in terms of realizing optimal performance.

Recording my speakers' playback binaurally with mics integrated into their headset and playing it back through the headphones, I could not tell a difference.

I think this is a far better way to try to convey to others the effects of system changes we think affect "how it sounds". One would think that, with the performance of digital recording systems available today plus suitable mics, this would be what we should all be arguing about: who can hear the change in the recording, same as the OP did?

I'm currently playing with home recording of my bedroom speaker system. I'm using a binaural mic and my own head for the dummy. I've got links posted at the end of TABAQ for Tangband, over in the full range speaker section.

I'm hoping someone who's built these speakers can listen to the recordings and say if my implementation sounds on or off to their ears. Perhaps even suggest some stuffing recommendations based on resonances they're hearing that I wouldn't have noticed yet.

So far, no takers on the binaural recordings I posted. I agree; with the headphones on, they can sound quite like the speakers in this bedroom do, minus the visceral of course. I mean, what a conversation piece if everyone could easily show how their system sounds to others here on the forum?

If that can be achieved through the internet, the business shills will be out of business.

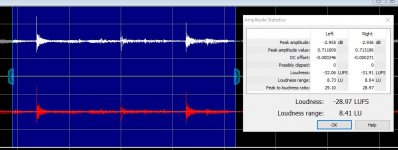

On the dynamics front, I've been having a play with Goldwave (well, more a poke and flail), and here is a random 2-second fragment of Rickie Lee where you can see how the snare dominates the track. Over the whole track the loudness range is 10.7 LU. Next step is to work out what that actually means 🙂

My streamer has a function that operates on the LUFS scale... this article helped me to grasp it better. You might already know what’s written here, but it’ll help others who don’t know LUFS. Access to this page has been denied.

Edit: it says the link is denied, but it works when I click it?

Hi Krivium, what I meant was to try and get my head around it for a comparison between different music, so I can try and translate whether 10 dB of loudness range is good, bad or meh. I did that extract whilst on a boring conference call, so I didn't have any research time 🙂

Hi Bill,

Start with Kaffiman's post on the first page. LUFS was not originally developed for music (it was for broadcast, and commercials in particular). The integration time is very long (something like 1 min) and it takes the whole material into account, hence the 'high' reading from this track: the snare takes over.

The link given by Mountainman Bob should help too.

That's what the VU meter was for. It was supposed to show you loudness. So the industry found ways to get around it. There are many ways to make something sound louder without changing the VU meter reading, and this makes it sound louder over the radio without exceeding the transmitter power. How long before producers do the same with LUFS?

You can cheat anything, and people do. But LUFS does a pretty good job if you want to level-match some tracks. It's the frequency curve, the integration time and the gate that make it useful.
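To make the gate part concrete, here is a simplified sketch of the two-stage gating described in ITU-R BS.1770. It is not a full meter: it assumes you already have the K-weighted mean-square power of each measurement block (the standard uses overlapping 400 ms blocks), and the frequency-weighting filter itself is left out. The function names are my own.

```python
import math


def block_loudness(mean_square):
    """Loudness in LUFS of a block, from its K-weighted mean-square power."""
    return -0.691 + 10.0 * math.log10(mean_square)


def integrated_loudness(block_powers):
    """Gated integrated loudness from per-block K-weighted powers.

    Assumes K-weighting and block splitting were done beforehand.
    """
    # Absolute gate: ignore blocks quieter than -70 LUFS (silence, fades).
    loud = [p for p in block_powers if p > 0 and block_loudness(p) > -70.0]
    if not loud:
        return float("-inf")
    # Relative gate: 10 LU below the loudness of the blocks that survived.
    threshold = block_loudness(sum(loud) / len(loud)) - 10.0
    kept = [p for p in loud if block_loudness(p) > threshold]
    return block_loudness(sum(kept) / len(kept))


# Eight steady blocks plus two near-silent ones: the gate drops the quiet
# blocks, so they do not pull the integrated reading down.
blocks = [0.1] * 8 + [1e-9] * 2
print(round(integrated_loudness(blocks), 2))
```

Without the gate, long quiet passages would drag the average down and a track with a silent intro would read quieter than it sounds.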

Bill, I hope that Goldwave will be helpful. It has taught me a lot over the years.

^ Sure, LUFS is a great help, but as it is a relatively new tool it takes some adaptation to get a feel for how to use it.

At least it did (and still does, in a way) for me. Sometimes the results don't really match what you would expect from other ways of analysing.

That was the reason I pointed to the previous discussion.

Goldwave is a nice alternative to other editors.

No-nonsense software.

How are the restoration tools? (They weren't available the last time I tried it, a looong time ago!)

On my Elac Discovery streamer it is a function where you select a LUFS setting so that everything plays at the same level. -23 LUFS is standard; I have mine set to -17, as it seems to be a little smoother at high volumes. You can disable the function, but I find it much easier on the system and the ears when one of those compressed songs shows up on the playlist. It doesn’t seem to degrade the sound, other than that you have to turn up the volume a little more... so is it decompressing the compressed music?

^ I don't think it 'decompresses'; much more probably it is 'leveling'. In other words, it is an automated volume control.

You set a reference level, and from this the overall level is decreased (when a low-dynamic-range, high-RMS, squashed signal is present) or increased (when the dynamic range is too high). As it is a streaming service, tracks are analyzed and the dynamics are embedded as metadata giving the amount of level change, up or down, that is needed.

This is explained in the article you linked, by C. Anderton: the same principle is used by 'broadcasters' (YouTube, Spotify, ...).
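A minimal sketch of that leveling step, assuming the measured loudness of each track is already available (for example from metadata). The function name and the example loudness values are made up for illustration.

```python
def leveling_gain_db(track_lufs, target_lufs):
    """Gain in dB that moves a track from its measured loudness to the target.

    Negative result: the track is turned down. Positive: it is turned up.
    """
    return target_lufs - track_lufs


# Hypothetical tracks played against a -17 LUFS reference.
target = -17.0
for name, lufs in (("squashed pop master", -8.0), ("dynamic jazz recording", -21.0)):
    print(f"{name}: {leveling_gain_db(lufs, target):+.1f} dB")
```

Note that this is one static gain per track. The dynamics inside the track are untouched, which is why it is leveling rather than decompression.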

This isn't new: when mastering, for example, this is the only way to compare what you are doing.

You have to lower the level of the processed track (on which you have probably raised the RMS level) to match the original.

In my own case, I analyze the loudest part of the (original) track, then lower the processed one so that the two have the same RMS level.

Only then can you make a comparison.

It has been shown many times that even a 0.1 dB difference will systematically make you prefer the louder one. And in most cases the comparison is not in favour of the processed one, but as you have gained a lot of RMS level, artists and/or producers crave the louder one.
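The matching step could be sketched like this, assuming you pass in the same loudest passage from both versions as lists of samples. The helper names are hypothetical, and this uses plain unweighted RMS.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def match_rms(processed, reference):
    """Scale `processed` so its RMS equals that of `reference`.

    Returns the scaled samples and the gain that was applied, in dB.
    """
    gain = rms(reference) / rms(processed)
    return [gain * s for s in processed], 20.0 * math.log10(gain)


# Toy data: the processed version is twice as hot as the original.
original = [0.5, -0.5, 0.5, -0.5]
processed = [1.0, -1.0, 1.0, -1.0]
matched, gain_db = match_rms(processed, original)
print(f"applied {gain_db:+.2f} dB, matched RMS = {rms(matched):.3f}")
```

Given how small a level difference can bias a comparison, it is worth checking the matched RMS numerically rather than by ear.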
