SPDIF in most devices is limited to 20 bit

https://en.wikipedia.org/wiki/S/PDIF

But the space for 24 bits is always there.

There is nothing in the SPDIF signal path from the source to the destination in a home environment that deterministically restricts transmission to 20 bits (PCB tracks, resistors, pulse isolation transformers, 1m coaxial interconnect, RCA plugs).

AES3 and S/PDIF differ in signal amplitude and impedance, but the audio data format is the same for both. They have different subcode formats.

Does the subcode govern the bit depth of the audio data?

George


Does the subcode govern the bit depth of the audio data?

George

Yes all bit depths from 16-24 are set by a subcode. Considering the awkwardness of not doing things on 8bit byte boundaries I can only imagine that the 20bits is simply due to a particular DAC or A/D chip. My Philips CD recorder was 20bit.

Try converting Motorola (big-endian) to Intel (little-endian) 24-bit integers sometime.
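For anyone who wants to see why 24-bit samples are awkward, here is a minimal sketch of that byte-order conversion. It assumes packed 3-byte signed samples with no padding; the function names `swap24` and `decode24` are made up for illustration.

```python
def swap24(samples: bytes) -> bytes:
    """Reverse the byte order of each packed 3-byte sample.

    Big-endian (Motorola) in gives little-endian (Intel) out, and vice versa.
    """
    if len(samples) % 3 != 0:
        raise ValueError("length must be a multiple of 3 bytes")
    out = bytearray(len(samples))
    out[0::3] = samples[2::3]  # last byte of each sample becomes the first
    out[1::3] = samples[1::3]  # middle byte stays in the middle
    out[2::3] = samples[0::3]  # first byte becomes the last
    return bytes(out)


def decode24(samples: bytes, byteorder: str) -> list:
    """Decode packed 24-bit signed samples; byteorder is 'big' or 'little'."""
    return [
        int.from_bytes(samples[i:i + 3], byteorder, signed=True)
        for i in range(0, len(samples), 3)
    ]


if __name__ == "__main__":
    motorola = b"\x12\x34\x56\xff\xff\xfe"
    intel = swap24(motorola)
    print(decode24(motorola, "big"), decode24(intel, "little"))
```

There is no native 3-byte integer type on most machines, which is why the samples have to be handled byte by byte or padded out to 32 bits.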


But that is NOT what you said all those years ago?

As the topic is about signal slew not amplifier slew we can safely say the OP was answered on page one. You assert that 100V/us is needed for a power amp and 10 for a preamp. Reading the article I worry about correlation vs causality, but I know that has been argued to death on other threads and 100V/us on a power amp is not excessively hard for a DIYer to do.

Hardly worth arguing about now I'm sober…

I agree with that last bold line. Moreover, as (and I really am just guessing here) John's extraordinarily competent 100+ watt amplifiers had at least 75 volt rails internally for dynamics reproduction, using the 0.63 V/μs per rail volt, that's what… almost 50 V/μs? Purdy-darn-close to 100 V/μs. Pretty darn close indeedy.
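A quick check of that arithmetic, as a sketch. It assumes the 0.63 V/μs per rail volt figure is the peak slew of a full-swing sine at 100 kHz (2π × 100 kHz × 1 V ≈ 0.63 V/μs); that reading is my assumption, as is the function name.

```python
import math


def sine_slew_v_per_us(v_peak: float, freq_hz: float) -> float:
    """Peak slew rate of a sine wave, 2*pi*f*Vpeak, in volts per microsecond."""
    return 2 * math.pi * freq_hz * v_peak / 1e6


if __name__ == "__main__":
    # Slew per volt of peak swing at 100 kHz, then for a 75 V rail.
    print(round(sine_slew_v_per_us(1.0, 100e3), 3))
    print(round(sine_slew_v_per_us(75.0, 100e3), 1))
```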

Said 75 V+RAIL amplifier … with perhaps 5 volts chopped off the edges to allow for semiconductor voltage drop and the like, would be capable of 600 W peaks into 8 Ω … and would comfortably hum along all night long at a more reasonable (⅓ … ½)²(75 - 5)² ÷ 8 = 70 to 150 watts of program power. Dynamics overhead ≡ excellent. Nominal 8 Ω full power 100 watts. Sounds like a John Curl amplifier! 50–100 V/μs would be a perfectly reasonable slew spec, as would a 100 W level bandwidth of 100 kHz. The final “design point” would be to build the current handling capability of the output to both deal with 2 Ω dynamic loads, and have some sort of self-preserving hard current limit circuitry. (10 to 20 amps?) Has to be able to withstand getting hit with a full short-circuit, say.
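The power figures above can be reproduced with a few lines. This is only a sketch of the arithmetic in the post: a 75 V rail, 5 V lost to device drops, an 8 Ω resistive load, and program level taken as ⅓ to ½ of the available swing. The function names are made up.

```python
def peak_power_w(rail_v: float, drop_v: float, load_ohm: float) -> float:
    """Instantaneous peak power at full swing: (rail - drop)^2 / R."""
    return (rail_v - drop_v) ** 2 / load_ohm


def program_power_w(fraction: float, rail_v: float, drop_v: float,
                    load_ohm: float) -> float:
    """Power when the signal sits at a given fraction of the available swing."""
    return (fraction * (rail_v - drop_v)) ** 2 / load_ohm


if __name__ == "__main__":
    print(peak_power_w(75, 5, 8))                # about 600 W peaks
    print(program_power_w(1 / 3, 75, 5, 8))      # low end of program power
    print(program_power_w(1 / 2, 75, 5, 8))      # high end of program power
```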

Thanks John! Correct me if I'm in error, but I think “I've got it” after all these decades.

GoatGuy


SPDIF is a limitation, installed in about 60% of all CD players

Not an absolute limitation if one skips extracting a clock from it. It'll pass the bits.

CD players don't use s/pdif - drivers and d/a do.

//


Yes all bit depths from 16-24 are set by a subcode.

Thank you Scott. I searched for some details, see attachment 1.

Considering the awkwardness of not doing things on 8bit byte boundaries I can only imagine that the 20bits is simply due to a particular DAC or A/D chip. My Philips CD recorder was 20bit.

No, unfortunately it’s not on the DAC.

On attachment 2 (extract from IEC 60958-4 Edition 2.1 2008-07) down at Note 4, you will read that the formation of the subcode is done by the transmitter chip. The receiver chip is left to adjust the bit depth accordingly.

Reading hierarchy:

Byte 0, bit 0. If it contains “1” (which identifies professional AES/EBU), the receiver sets the bit depth according to the contents of bits 16, 17 and 18 of Byte 2 (subsequently reading bits 19 to 21).

If Byte 0, bit 0 contains “0” (which identifies consumer S/PDIF), the receiver defaults to a maximum bit depth of 20 bits. It further reads bits 16, 17, 18 and 19 to set the number of channels (mono, stereo, multi-channel up to 15). See attachment 3 (extract from IEC 60958-3 Issue 2003).
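The reading hierarchy above could be sketched roughly as follows. This only mirrors the order of reads described in this post and returns the raw field values; it does not interpret them, so the tables in IEC 60958-3 and -4 are still needed to turn them into word lengths. The packing of status bit n into byte n // 8 at position n % 8 (LSB first) is my assumption, and all names are hypothetical.

```python
def get_bit(cs: bytes, n: int) -> int:
    """Channel status bit n, assuming bit n lives in byte n // 8, LSB first."""
    return (cs[n // 8] >> (n % 8)) & 1


def get_field(cs: bytes, first: int, last: int) -> int:
    """Raw value of status bits first..last, lowest-numbered bit as LSB."""
    value = 0
    for shift, n in enumerate(range(first, last + 1)):
        value |= get_bit(cs, n) << shift
    return value


def read_hierarchy(cs: bytes) -> dict:
    """Follow the read order described above; fields are left uninterpreted."""
    if get_bit(cs, 0) == 1:
        # Professional (AES/EBU): bits 16-18 first, then bits 19-21.
        return {
            "mode": "professional",
            "bits_16_18": get_field(cs, 16, 18),
            "bits_19_21": get_field(cs, 19, 21),
        }
    # Consumer (S/PDIF): bits 16-19, as described in the post.
    return {"mode": "consumer", "bits_16_19": get_field(cs, 16, 19)}


if __name__ == "__main__":
    print(read_hierarchy(bytes([0x01, 0x00, 0b00101100])))
    print(read_hierarchy(bytes([0x00, 0x00, 0x03])))
```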

Thanks to Chris Daly for pointing to this technical restriction.

George


Thank you Scott. I searched for some details, see attachment 1.

George

Wait a second George, why would it matter if a player for consumer CD's had 24 bits in the first place? I sort of missed the point of the first comment then, I assumed it was on the capabilities of SPDIF.

That would be cool actually if all optical outs on most USB and firewire sound devices were 20bit.


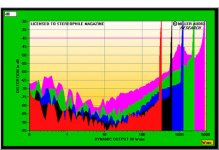

Real peak power performance:

Well if you want excess in everything or own classic apogees. For the rest of us it is somewhere between overkill and overcompensating!

Thank you Scott. I searched for some details, see attachment 1.

George here's another reference (It was hard to find one that actually went through all the differences between consumer and pro bit by bit). He starts at byte 1 so maybe he hasn't programmed since Fortran. 🙂

http://www.av-iq.com/avcat/images/documents/pdfs/digaudiochannelstatusbits.pdf

There is no provision for any sampling rate above 48K in consumer so I would assume any 96K+ stream could do 24bits. Also this contradicts the other reference clearly showing 16-24 bit capability embedded in the consumer format.


if you had a 24 bit source and it was truncated to 20 bits what would one actually lose given most converters are 18-19 bits accuracy?

Real peak power performance:

John,

I take it that

• RED = 16 Ω: breakup at 320 W

• BLK = 8 Ω: breakup at 640 W

• BLU = 4 Ω: breakup at 1,250 W

• GRN = 2 Ω: breakup at 2,500 W

• VIO = 1 Ω: breakup at 4,800 W

Loads? Seems about right. Each of the colors goes into clipping distortion at almost exactly 2× its lower neighbor. Must be those. Those are some wicked amplifier specifications. If solid state (of course), then you're looking at ± 100 volt rails. I'd bet that you'd also need forced-air cooling anywhere near the limit power output levels.

Which ironically isn't very different from my Vintage 1980s Crown '4000' amp. 100 volt rails. Forced air cooling. Can drive basically ANY load. The VIOLET = 1 Ω seems to be a little shy (4,800 W instead of 5,000 W), but I bet that's because of the self-protection current limiter circuitry kicking in. Right around 50 max amps, right?
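For a rough sanity check of the rail and current guesses, here is a sketch that converts each breakup power into the implied voltage and current. It assumes the wattages are average sine power into a purely resistive load, which may not be what the plot actually shows; the dictionary simply copies the figures listed above, and the function name is made up.

```python
import math

# Breakup power in watts, keyed by load in ohms (copied from the list above).
BREAKUP_W = {16: 320, 8: 640, 4: 1250, 2: 2500, 1: 4800}


def implied(load_ohm: float, power_w: float) -> tuple:
    """Return (V_rms, V_peak, I_rms) for sine power into a resistive load."""
    v_rms = math.sqrt(power_w * load_ohm)
    return v_rms, v_rms * math.sqrt(2), v_rms / load_ohm


if __name__ == "__main__":
    for load, power in BREAKUP_W.items():
        v_rms, v_peak, i_rms = implied(load, power)
        print(f"{load:>2} ohm: {v_rms:5.1f} V rms, "
              f"{v_peak:5.1f} V peak, {i_rms:5.1f} A rms")
```

Under that assumption every load lands near 100 V peak, which fits the ±100 volt rail guess. The 1 Ω figure works out to roughly 69 A RMS, so a limit around 50 A would only fit if the plotted watts mean something else.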

Thanks again!

GoatGuy

GoatGuy

GG you need to fix your sig 10us rise time is 35kHz BW by standard convention.
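The convention referred to is the usual single-pole rule of thumb, bandwidth ≈ 0.35 / rise time (10% to 90%). A minimal sketch, with a made-up function name:

```python
def bandwidth_hz(rise_time_s: float) -> float:
    """-3 dB bandwidth from 10-90% rise time, single-pole rule BW = 0.35 / tr."""
    return 0.35 / rise_time_s


if __name__ == "__main__":
    print(bandwidth_hz(10e-6))  # 10 us rise time, result in Hz
```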

Wait a second George, why would it matter if a player for consumer CD's had 24 bits in the first place? I sort of missed the point of the first comment then, I assumed it was on the capabilities of SPDIF.

That would be cool actually if all optical outs on most USB and firewire sound devices were 20bit.

Scott

Chris Daly’s comment took me by surprise too. I said, it can’t be so.

But when I started reading the IEC standard, it became clear to me that bit depth depends on the S/PDIF transmitter formation of the Channel Status Byte 0, bit 0.

An S/PDIF port of a device may output a 24-bit audio stream, if the digital audio transmitter it contains is suitable for both professional and consumer audio applications and is properly programmed/configured.

Just a few ICs that I found doing the job.

http://www.ti.com/lit/ds/symlink/dit4096.pdf

http://www.ti.com/lit/ds/symlink/dir9001.pdf

http://www.st.com/st-web-ui/static/active/en/resource/technical/document/datasheet/CD00001937.pdf

https://www.cirrus.com/en/pubs/proDatasheet/CS8406_F6.pdf

(I can't find one from AD. I am sure you will)

George

if you had a 24 bit source and it was truncated to 20 bits what would one actually lose given most converters are 18–19 bits accuracy?

(½)²⁰ ≈ 0.000001 V/V (volts per volt of output max amplitude)

20 log₁₀((½)²⁰) ≈ –120 dB signal jitter/noise, at 20 bits.

If a 24 bit digital stream is fed to a D/A converter that only offers 18 to 19 bits of “accuracy”, remember that “accuracy” comes in two flavors: absolute accuracy (which is in this case a degree with which a digital value is proportionate to the absolute range of the device, as in 'digits of precision' on a calculator), and monotonicity accuracy (which defines the smallest adjacent increment-of-difference which is linearly related to other adjacent values).

Many remarkably good 24 bit D/A converters might only give 18 bits of absolute accuracy over their range, but include a guarantee that all digital-to-analog representations are on a monotonic transfer function curve that doesn't have any surreptitious adjacency incremental differences greater than ½ LSB. So that 0b₀₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁₁ is different from 0b₁₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀₀ (the absolute hardest case for a D→A converter) by (½)²⁴ parts, or about –144 dB down from the absolute value of the rails.
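The dB figures quoted for 20 and 24 bits come from the same formula, 20 log₁₀((½)ⁿ), which is about –6.02 dB per bit. A small sketch to tabulate it (the function name is made up):

```python
import math


def lsb_db(bits: int) -> float:
    """Level of one LSB relative to full scale, in dB: 20*log10(0.5**bits)."""
    return 20 * math.log10(0.5 ** bits)


if __name__ == "__main__":
    for bits in (16, 18, 20, 24):
        print(f"{bits} bits: {lsb_db(bits):.1f} dB")
```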

_______

Long ago, in the early days of converters, the little resistors (ultimately) which became current references on board the chips or within the discrete designs simply couldn't be trimmed to better than 100 parts per million. Even that was a bit of a challenge, as over the operating temperature range of the chip, resistor value temperature dependence might be substantially similar to 100+ ppm.

100 ppm is ¹⁰⁰/₁₀₀₀₀₀₀, which again by 20 log₁₀(¹⁰⁰/₁₀₀₀₀₀₀) is –80 dB (of full rail-to-rail output). This inability to trim haunted the digital era for a LONG time, so it was thought either that it wasn't something that could be overcome, or that it would simply cost too much for all but the most well-heeled audiophiles to purchase. This, in combination with the need for 72 minutes of music, the wavelength of infrared lasers, the numerical aperture of close-field lenses, the size of the CD … in turn drove that first digital audio standard to 44.1 kHz, 16 bits per channel. And the 100 ppm D→A chip limits.
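To put the 100 ppm trim limit on the same scale as bit depth, here is a sketch that converts a tolerance in ppm to dB and to an equivalent number of bits (log₂ of the reciprocal). The function names are made up, and treating trim tolerance as directly equivalent to resolution is a simplification.

```python
import math


def ppm_to_db(ppm: float) -> float:
    """Tolerance in parts per million expressed in dB relative to full scale."""
    return 20 * math.log10(ppm / 1e6)


def ppm_to_bits(ppm: float) -> float:
    """Equivalent resolution in bits: log2 of full scale over the tolerance."""
    return math.log2(1e6 / ppm)


if __name__ == "__main__":
    print(round(ppm_to_db(100), 1), round(ppm_to_bits(100), 1))
```

On that basis 100 ppm corresponds to a little over 13 bits.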

Sometime tho' in the mid 1990s, the Japanese discovered ways to go from digital to analog and deliver competent absolute linearity as well as outstanding differential resolution. Thus we now know the beauty and wonder that comes from 96 (or 192) kHz analog, with resolved limits of 24 bits.

GoatGuy


Sometime tho' in the mid 1990s, the Japanese discovered ways to go from digital to analog and deliver competent absolute linearity as well as outstanding differential resolution. Thus we now know the beauty and wonder that comes from 96 (or 192) kHz analog, with resolved limits of 24 bits.

GoatGuy

You what? Where do you get your stash and do you have a phone number?

You what? Where do you get your stash and do you have a phone number?

LOL, Thanks Bill. You hopefully did see through the intentional irony throw. GoatGuy

(It was hard to find one that actually went through all the differences between consumer and pro bit by bit

Here you are (a lot of bits by bits)😀

https://law.resource.org/pub/in/bis/S04/is.iec.60958.1.2004.pdf

https://law.resource.org/pub/in/bis/S04/is.iec.60958.4.2003.pdf

https://law.resource.org/pub/in/bis/S04/is.iec.60958.3.2003.pdf

if you had a 24 bit source and it was truncated to 20 bits what would one actually lose given most converters are 18-19 bits accuracy?

Me, nothing. I am very satisfied with the 16 bits (Bill, it’s only for the fun of technical discussion)

George
