Howie, does Scott's description help give insight into the utility of said apodization filter?

The gist of my comment was that recording at 96k, keeping the filter flat to 20k and then tuning it to give a more Gaussian than sinc impulse response, has always been on the table. Certainly as you approach 48 kHz the information is less useful anyway. So you use a 24/96 container, effectively waste some of the space, and don't get DC to 48 kHz response.
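
To put rough numbers on the Gaussian versus sinc trade, here is a minimal sketch in plain Python. Everything in it is an assumption chosen for illustration, not anyone's actual DAC filter: the 40 kHz cutoff, the tap counts, the Hann window, and the 60 dB target at 48 kHz. It compares a steep windowed-sinc lowpass with a pure Gaussian at a 96 kHz sample rate, reporting step-response overshoot (a proxy for ringing) and the gain at 20 kHz.

```python
import cmath
import math

FS = 96_000.0  # sample rate in Hz (the 24/96 container)


def normalize(taps):
    """Scale taps so the DC gain is 1."""
    total = sum(taps)
    return [t / total for t in taps]


def windowed_sinc(cutoff_hz, num_taps):
    """Steep linear-phase lowpass: ideal sinc shaped by a Hann window."""
    mid = (num_taps - 1) / 2  # num_taps must be odd so the centre tap exists
    fc = cutoff_hz / FS       # cutoff in cycles per sample
    taps = []
    for n in range(num_taps):
        x = n - mid
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * hann)
    return normalize(taps)


def gaussian(atten_db, at_hz, num_taps):
    """Gaussian lowpass that is roughly atten_db down at at_hz."""
    # Continuous-time Gaussian: |H(f)| = exp(-2 * pi^2 * sigma^2 * f^2)
    sigma_sec = math.sqrt(atten_db * math.log(10) / 20 / (2 * math.pi ** 2 * at_hz ** 2))
    sigma = sigma_sec * FS  # standard deviation in samples
    mid = (num_taps - 1) / 2
    return normalize([math.exp(-0.5 * ((n - mid) / sigma) ** 2) for n in range(num_taps)])


def gain_db(taps, freq_hz):
    """Magnitude response in dB at one frequency, from the DTFT of the taps."""
    w = 2 * math.pi * freq_hz / FS
    h = sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps))
    return 20 * math.log10(abs(h))


def step_overshoot(taps):
    """Peak of the step response minus its final value of 1."""
    running = 0.0
    peak = 0.0
    for t in taps:
        running += t
        peak = max(peak, running)
    return peak - 1.0


steep = windowed_sinc(40_000, 255)   # assumed: brickwall-style filter
gentle = gaussian(60.0, 48_000, 33)  # assumed: 60 dB down at Fs/2

for name, taps in (("windowed sinc", steep), ("gaussian", gentle)):
    print(f"{name}: overshoot {step_overshoot(taps):.4f}, "
          f"gain at 20 kHz {gain_db(taps, 20_000):.2f} dB")
```

The pure Gaussian has no negative taps, so its step response never overshoots, but its response in dB falls with the square of frequency, so it cannot be flat to 20k and also deep into the stopband by 48k. That is why the practical target is "more Gaussian than sinc" rather than Gaussian outright.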

This is very different from the several techniques for anti-imaging filters on 16/44.1 CD audio that start rolling off just below 20k and allow the possibility of aliasing in order to reduce ringing. AFAIK Wadia started it, but in a time-domain form rather than a frequency-domain one.

A complaint I had at the beginning was the implied beating of information theory. The Shannon entropy of a signal determines the maximum lossless compression achievable. That is why pure noise cannot be compressed and why the best compressed files look like noise. The statistics of 256-QAM ADSL look like pure noise.
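
A quick way to see the entropy point for yourself is the sketch below. It uses Python's standard `zlib` as a stand-in for "a lossless compressor" (an assumption; it is not a dedicated audio codec) and compares pseudo-random bytes with a perfectly periodic 1 kHz tone. The byte-level entropy it reports is only a zero-order estimate, so it is an upper bound on the true entropy rate of a signal with memory.

```python
import math
import random
import zlib
from collections import Counter


def byte_entropy_bits(data):
    """Zero-order Shannon entropy of a byte string, in bits per byte."""
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())


def compression_ratio(data):
    """Compressed size divided by original size, using zlib at level 9."""
    return len(zlib.compress(data, 9)) / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable
noise = bytes(rng.getrandbits(8) for _ in range(65536))

# 16-bit little-endian samples of a 1 kHz sine at 48 kHz (period of 48 samples)
tone = b"".join(
    round(20000 * math.sin(2 * math.pi * 1000 * n / 48000)).to_bytes(2, "little", signed=True)
    for n in range(32768)
)

print(f"noise: {byte_entropy_bits(noise):.3f} bits/byte, ratio {compression_ratio(noise):.3f}")
print(f"tone:  {byte_entropy_bits(tone):.3f} bits/byte, ratio {compression_ratio(tone):.3f}")
```

The noise comes out essentially the same size as it went in, while the tone shrinks to a small fraction, which is the same reason a well compressed file looks statistically like noise.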

I think the newer Wolfson DACs have a "non-aliasing" apodizing filter available for selection. Maybe the latest AKM and ESS too, not sure.

If MQA is mandating a filter in the DAC that is into the stopband by Fs/2 (non-aliasing or apodizing) but also using a slow rolloff filter starting much earlier, that's fine but I'm not sure I see the point.

I don't think there is any evidence that pre-ringing in a steep, linear phase FIR as used in DACs is audible, just a lot of handwaving and claims about "smearing" and "temporal resolution".

And we're right back to oversampling/interpolation with gentle filters.

Makes sense. I think some folks are realizing that at 24/192, recording every detail up to 96k probably is not adding much to the party. Years ago Analog invested money in a startup that had a DSP trick to make VGA displays look nicer with interpolation. Poor little me said this is stupid: the march to ultra hi-rez displays is backed by billions from all sides. Same thing with file types that save on disk space or download time; they have such a brief window of opportunity that I don't see the point.

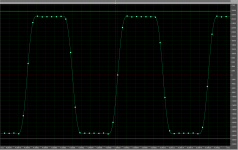

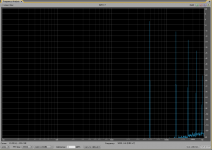

This idea is not new (in fact it is the first approach one would imagine) and is easy to realize and test. Please see the attachments, plus the wav file of such a filter's response to a 5 kHz square wave. Do you expect any audible improvements? Me, I do not.

The rest of the scheme is a music label's dream, allowing one file to be sold at various quality levels.

This lone point, to me at least, transcends all considerations in relationship to the acoustic merits or lack thereof in MQA.

This is also at the heart of the business models moving from ownership to rental (i.e. music library as a service). This is not because it is of any benefit to the end user.

It is concerning to me on a deep level... Can anyone else relate? Has that good LSD you all took in the '60s finally worn off?

I personally seek to avoid wherever possible the support of systems or services in general that are functionally designed to limit my freedom / mobility for their profit.

It also tends to cut sharply at the profits of the original artists.

For streaming music, Spotify is an excellent example of this. Plex (a fork of open source streaming software) represents, to me, roughly its antithesis, although it presently lacks a lot of audio-related features and capabilities and is not very mature.

Also, speaking of open source, and some discussion of those who still store their data on hard disks:

Has this thread ever gone over copy-on-write, bit rot and other file system issues that relate to audio file corruption and degradation?

To those running Linux: you can also use BTRFS which I believe is stable now and has many of the same features. ZFS on Linux is also available and free.

ZFS can also run on OS X using the free and open source OpenZFS downloadable here:

https://openzfsonosx.org/wiki/Downloads

This is also very useful in preventing data loss from disk failures (it supports software RAID).

Imho it's indispensable in the toolbox of someone who listens to computer audio, fwiw.
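
For anyone not ready to switch file systems, bit rot can at least be detected from userspace. The sketch below is not how ZFS or BTRFS work internally (they checksum blocks inside the file system and can repair from redundancy); it is a minimal stand-in that records a SHA-256 digest per file and later reports files whose contents no longer match. The function names are my own, chosen for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file under root (by relative path) to its SHA-256 digest."""
    root = Path(root)
    return {
        str(p.relative_to(root)): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_changed(root, manifest):
    """Return relative paths that are missing or whose contents no longer match."""
    root = Path(root)
    changed = []
    for rel_path, expected in manifest.items():
        target = root / rel_path
        if not target.is_file() or file_digest(target) != expected:
            changed.append(rel_path)
    return changed
```

Typical use would be to call `build_manifest` once on the music folder, store the result somewhere safe, and run `find_changed` against it periodically. Note that this only detects a mismatch: it cannot tell silent corruption from a deliberate edit such as retagging, and it cannot repair anything, so a good copy still has to come from a backup.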

For domestic audio I can't see the point of ZFS. It doesn't give you a backup, just resilience against disk failures, and few of us have enough music to need more than 2 or 3 big discs' worth, at which point standard RAID works. What you need is an off-site backup, which I have.

ZFS IS useful if you have an array of dozens of disks, but that is not my use case.

A Meta-Analysis of High Resolution Audio Perceptual Evaluation

http://www.aes.org/tmpFiles/elib/20170608/18296.pdf

George

He does conclude that the more one trains their hearing system the more statistically significant the identification of HD material. The only justification I need for HD Audio is the fact that many CD masters are compressed or limited mixes of the original 24 bit or analog source. I have heard really exciting mixdown sessions of both studio and live recordings become much less so after peak limiting for CD release. All true audio and music lovers should want to hear a mix the way it arrived at the stereo buss of the mixing desk! Of course if one just listens to 3dB DNR pop music, who cares...which makes HD a niche market for sure.

Howie

FWIW I was involved with getting MPD to support 24-bit audio and several ALSA USB audio driver fixes. Has anyone here contributed their talents to open source audio engines? If so, which and in what capacity?

I don't mean that to sound snarky- genuinely curious.

x2, at least for audio -- you really could use a local RAID 1 (since terabytes are stupid cheap and audio files are small) and have a slow remote backup service for redundancy (Box, Amazon, etc).

George--thanks for the link!

I guess another reason you don't see the younger crowd at audio shows.

Possibly not, according to a new survey conducted for the BBC by market research agency ICM Unlimited, which found that nearly half of vinyl buyers don't actually play the records once they've purchased them. Breaking it down, as NME reports, this is made up of the 41 per cent of respondents who admitted that they do not use their record player in addition to a further seven per cent who don't even own a record player to use in the first place.

Scott, I hope we are talking about the same thing when I say I am very bothered by the "Digital Origami" description at the bottom of the MQA page:

MQA Time-domain Accuracy & Digital Audio Quality

I know you understand the information theory behind this, but from my more layman's perspective, the only way I can conceive of "folding" an upper frequency band back into the baseband is through lossy compression to lower the data rate down to that of the bands being used for storage, which the designers admit. In this way MQA cannot possibly be as accurate a storage medium as linear 24/96 in the amplitude domain, or as Pavel stated, the time domain. The amount of loss would vary depending on program material, and as you say, there is relatively little information >24 kHz.

So here is where I stand on the MQA issue:

1) The loss in storing higher band information in a lower band would be material dependent (see above).

2) We have established that they made a great platform for major labels to extract additional money from music distribution. WEA/Tidal and others have signed on to this cash stream.

3) It is of value to consumers and providers alike that a single file can be distributed and extracted in several bandwidths.

4) They admit that the >24 kHz information is lossy compressed.

5) Robert Stuart and Peter Craven go into great detail describing the human hearing system's timing resolution, but I am unaware what they could have implemented which would result in improved timing accuracy. They claim this is due to a new filter topology or some new filter encode/decode pair. In reality this can be the only possible improvement over a flat linear encoded file of similar bitrate/sampling freq.

Not being a digital system engineer I cannot see how their claims can be substantiated. Since John heard the demo and was told the source he preferred was MQA processed, it makes me wonder what the comparison at the demo actually was.

Sorry to belabor this point, but while we argue other minutiae here, this MQA thing is gathering momentum due to major label greed, so we may have to deal with it in the future if we want HD files from those content owners. Is MQA good enough for my purposes? Consider most people listen to music through ear buds, and HD aficionados are a niche market, so I don't think ultimate fidelity is MQA's primary design goal. I do not wish to be locked into a format I do not understand...I'd like to understand it better.

Howie

Slightly OT: I have three PCs at 2 locations and use Dropbox on all three. That means that each PC as well as the Dropbox servers all have a copy of all my files, documents as well as music. Cost: € 8 per month for up to a terabyte (I am currently using 200 GB).

Up/download is pretty much instantaneous. So I have 4 distributed copies available, that should be enough for all contingencies save world-wide nuclear war. And no hassle with hardware.

Jan

In terms of the review paper, I see some useful data, namely the importance of training, but the data and funnel plots are alarming: namely the asymmetry in the funnel plot towards positive results suggests pretty healthy publication bias. Also, by lumping sample rate and bit depth together we're left with muddled results. In a more conservative interpretation, it's hard to say that this meta-analysis would show the same result as a large, controlled test. Doubly troubling is the lack of effect size, so we really might be looking at bias dumped on top of noise. A large group of underpowered studies gives really variable results -- all we have in audio testing is underpowered studies.

The author repeatedly bemoans the disparate test methodologies, small N per study, and lack of individual test results, which I have to agree with him on. I think his second paragraph under 4.4 is the best conclusion. Taking this from a lens of medical paper meta-analyses, I'd say the data is inconclusive.
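
To show what "bias dumped on top of noise" can look like, here is a toy simulation. It is a deliberate caricature and says nothing about the actual studies in the paper: every listener is assumed to be purely guessing (true hit rate 0.5), every study has 20 trials, and only studies with 14 or more correct answers get "published". All of those numbers are my assumptions.

```python
import random


def simulate(num_studies=2000, trials=20, threshold=14, seed=1):
    """Pool guessing-only studies, with and without a publication filter."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    all_hits = 0
    published_hits = 0
    published = 0
    for _ in range(num_studies):
        hits = sum(rng.random() < 0.5 for _ in range(trials))
        all_hits += hits
        if hits >= threshold:  # only "positive" studies reach print
            published += 1
            published_hits += hits
    all_rate = all_hits / (num_studies * trials)
    published_rate = published_hits / (published * trials) if published else float("nan")
    return all_rate, published_rate, published


all_rate, published_rate, published = simulate()
print(f"pooled hit rate, all studies:       {all_rate:.3f}")
print(f"pooled hit rate, published studies: {published_rate:.3f} ({published} studies)")
```

Pooling every study recovers a hit rate close to chance, while pooling only the published ones shows a healthy "effect" that does not exist. Real publication bias is far less extreme than this filter, but small N per study is what makes the selection so effective.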

Howie,

To simplify a little, I think part of what Scott was trying to explain is that in most music, not all frequencies are fully in use all the time. That being the case, there must be some potential bandwidth that is not being used. If someone can figure out a way to cleverly compress only the fraction of the bandwidth actually being used, then potentially it might be done without loss.

Also, again in lay terms, in the case of noise, it uses all frequencies all the time so available bandwidth is always fully in use.

Thank you! MPD is the source of digital music on my main system and I'm not sure what I would do without it. It was a minor pain to get running on USB initially, but now it just gets on with the job.
