A while back, when short-loop feedback (followers, degeneration, etc.) was being discussed, DF96 and Bruno Putzeys helped me realize that the fundamental difference between conventional long-loop feedback and short-loop feedback wasn't fundamental at all; rather, it was where (not if) in frequency a (truly fundamental) 90-degree phase shift was located.

The significance (or not?) of the in-band-of-interest or out-of-band-of-interest, or both, 90 degrees in the forward path remains beyond my grasp.

Thanks,

Chris


I never said you did. You appeared to be offended by SY's statement, which seems strange if the unaddressed statement does not apply to you. If the hat doesn't fit, please don't wear it!

Did I misunderstand this comment from you? It just seemed you were attributing the idea that delay causes distortion to me.

Issues such as re-entrant distortion are predicted by the algebra, so they are not phenomena caused by any delay.
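A minimal sketch of that point, assuming a memoryless amplifier y = a(e + k·e²) with a purely second-order nonlinearity inside a loop e = x - βy (a, β and k are made-up illustrative values, not any real amplifier). The loop equation is solved algebraically for each sample, so no delay appears anywhere, yet the closed-loop output contains a third harmonic that the bare amplifier driven by a sine cannot produce, which is one common reading of re-entrant distortion.

```python
import numpy as np


def open_loop(e, a, k):
    """Memoryless amplifier with a purely second-order nonlinearity."""
    return a * (e + k * e**2)


def closed_loop(x, a, beta, k):
    """Solve y = a*(e + k*e**2) with e = x - beta*y, sample by sample.

    Substituting e gives a quadratic in y. The root that tends to the linear
    result a*x/(1 + a*beta) as k -> 0 is written in a cancellation-free form.
    No delay is involved: each output sample depends only on the input
    sample at the same instant.
    """
    qa = a * k * beta**2
    qb = 1.0 + a * beta + 2.0 * a * k * beta * x
    qc = a * x + a * k * x**2
    return 2.0 * qc / (qb + np.sqrt(qb**2 - 4.0 * qa * qc))


def harmonic_ratio(y, order):
    """Harmonic amplitude relative to the fundamental (y = one exact period)."""
    spectrum = np.abs(np.fft.rfft(y))
    return spectrum[order] / spectrum[1]


N = 4096
theta = 2.0 * np.pi * np.arange(N) / N
a, beta, k = 100.0, 0.1, 0.5          # hypothetical values, loop gain a*beta = 10

x = np.sin(theta)                      # 1 V peak input to the closed loop
y_cl = closed_loop(x, a, beta, k)
e_cl = x - beta * y_cl                 # error signal the amplifier actually sees

# Bare amplifier driven by a pure sine at the same peak error level.
e_sine = np.max(np.abs(e_cl)) * np.sin(theta)
y_ol = open_loop(e_sine, a, k)

print("open loop   H3/H1:", harmonic_ratio(y_ol, 3))
print("closed loop H3/H1:", harmonic_ratio(y_cl, 3))
```

Because each output sample is computed only from the input at the same instant, the third harmonic here comes purely from the algebra of the loop.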

Cheers.


Positron:

I was responding to your question in post 26292, by clarifying what I was saying as you requested; I was not implying that my criticism applies to you. It is for you to decide that. I am puzzled. You appear to feel offended by unaddressed comments which you feel do not apply to you. If they don't apply to you and they are not addressed to you, why be offended by them?


Positron:

I was responding to your question in post 26292, by clarifying what I was saying as you requested; I was not implying that my criticism applies to you.

Glad to hear it.

Cheers 96.


The significance (or not?) of the in-band-of-interest or out-of-band-of-interest, or both, 90 degrees in the forward path remains beyond my grasp.

90 degrees is the maximum that a single pole can add. However, real amps have more than one pole, so for feedback stability one pole, called the dominant pole, is placed far below the others in frequency. That way the loop gain drops below 1 at a frequency where the phase shift is not yet close to 180 degrees, i.e. the amp is still stable.
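To put numbers on this, here is a minimal sketch, assuming an idealized loop gain built only from real poles and using made-up values (60 dB of loop gain, poles in Hz). It shows a single pole topping out at 90 degrees of lag, and how far the phase is from 180 degrees where the loop gain crosses 1 when the dominant pole sits far below the second pole versus when the two are close together.

```python
import numpy as np


def loop_gain(f, t0, poles):
    """Loop gain T(f) = t0 / prod(1 + j*f/p) for real poles p given in Hz."""
    t = np.full(np.shape(f), t0, dtype=complex)
    for p in poles:
        t = t / (1.0 + 1j * f / p)
    return t


def phase_margin(t0, poles):
    """Return (crossover frequency in Hz, phase margin in degrees).

    Crossover is where |T| = 1; the phase margin is how far the loop phase
    still is from -180 degrees there. np.angle wraps past -180 degrees, so
    this simple version is only meant for loops with at most two poles.
    """
    f = np.logspace(0, 9, 200_001)             # 1 Hz to 1 GHz
    t = loop_gain(f, t0, poles)
    i = np.argmin(np.abs(np.abs(t) - 1.0))     # |T| falls monotonically
    return f[i], 180.0 + np.degrees(np.angle(t[i]))


# A single pole never contributes more than 90 degrees of lag.
print(np.degrees(np.angle(loop_gain(1e9, 1.0, [100.0]))))

# Hypothetical loops with 60 dB of loop gain (t0 = 1000):
print(phase_margin(1000.0, [100.0, 1e6]))   # dominant pole far below the second
print(phase_margin(1000.0, [1e5, 1e6]))     # the two poles close together
```

With these invented numbers the first loop keeps a phase margin of more than 80 degrees, while the second has less than 10 degrees left.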

When we analyze a real amp we have to remember that its open-loop gain is not constant: it varies with the signal because of the non-linearities that we linearize using feedback. That means we need some margin for unconditional stability.

The difference between "in-band" and "out-of-band" is how well the feedback does its job at the high end of the band of interest. For example, if we have 60 dB of feedback at 10 Hz but only 20 dB at 10 kHz because of the open-loop frequency response, we get an amp with only 20 dB of feedback at 10 kHz, despite the 60 dB of feedback at 10 Hz.
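A minimal sketch of that example, assuming the loop gain is 60 dB with a single pole at a hypothetical 100 Hz, which makes it fall 20 dB per decade to about 20 dB at 10 kHz. The |1 + T| column is the usual first-order factor by which feedback reduces distortion generated inside the loop at each frequency.

```python
import numpy as np

T0 = 1000.0      # hypothetical low-frequency loop gain (60 dB)
F_POLE = 100.0   # hypothetical dominant pole of the loop gain, Hz


def loop_gain(f):
    """Single-pole loop gain T(f) = T0 / (1 + j*f/F_POLE)."""
    return T0 / (1.0 + 1j * f / F_POLE)


def db(value):
    """Magnitude in decibels."""
    return 20.0 * np.log10(np.abs(value))


for f in (10.0, 1e3, 10e3, 20e3):
    t = loop_gain(f)
    # |1 + T| is the first-order, small-signal factor by which feedback
    # reduces distortion generated inside the loop at this frequency.
    print(f"{f:>8.0f} Hz  loop gain {db(t):5.1f} dB  reduction {db(1 + t):5.1f} dB")
```

The pole frequency was picked only so the numbers line up with the 60 dB / 20 dB example; in a real amp the curve has to be read from the measured open-loop response.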

Also, since the amp is non-linear, the feedback that linearizes it also corrects its phase shift, but this correction again depends on the open-loop gain. So if the gain is modulated by the signal, the resulting phase shift is modulated by the signal as well. This adds phase intermodulation.
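To get a feel for the size of this effect, here is a minimal sketch, assuming a single-pole op-amp model with invented values (100 dB DC gain, pole at 350 Hz, feedback set for about 40 dB of closed-loop gain) whose open-loop gain swings by ±10% with the signal. It computes the closed-loop phase at 20 kHz for the three gain values and expresses the spread as a time shift.

```python
import numpy as np

A0 = 1e5         # hypothetical DC open-loop gain (100 dB)
F_POLE = 350.0   # hypothetical open-loop pole in Hz (gain-bandwidth about 35 MHz)
BETA = 0.01      # feedback fraction for roughly 40 dB of closed-loop gain


def closed_loop_phase_deg(f, gain_scale):
    """Phase of A/(1 + A*BETA) when the open-loop gain is scaled by gain_scale."""
    a = gain_scale * A0 / (1.0 + 1j * f / F_POLE)
    return float(np.degrees(np.angle(a / (1.0 + a * BETA))))


f = 20e3
# Open-loop gain swinging by +/-10 % with the signal.
phases = [closed_loop_phase_deg(f, s) for s in (0.9, 1.0, 1.1)]
spread_deg = max(phases) - min(phases)
spread_ns = spread_deg / 360.0 / f * 1e9     # the same spread as a time shift

print("closed-loop phase at 20 kHz (deg):", phases)
print(f"spread: {spread_deg:.3f} deg = {spread_ns:.1f} ns")
```

With these numbers the phase spread at 20 kHz comes out well under one degree, and more open-loop gain means less closed-loop phase lag.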


I'm swayed by the arguments that phase modulations are too small to matter. Pathological cases constructed to show the principle produce big enough numbers, but real amplifiers seem to be too good in this respect.

By "significance" I meant within the context of the original discussion, that of a special dispensation for short loop feedback from the perceived sonic ills of evil "feedback". What's different or special about these short loop feedbacks (besides the obvious)? The location in frequency of the 90 degrees of the dominant pole in the forward path.

Of course this is obvious to everybody, but I'm not the sharpest pencil in the pack. The relevance to re-entrant distortion remains beyond my grasp. I'm not even convinced that a generalization *can* be made, because forward transfer functions vary.

Thanks, as always,

Chris


Your question was "Why out of band?", no? So the answer was about the degree of significance. A phase modulation of only 1/5 of the 20 kHz period (10 µs, one full period at 100 kHz) is already audible, even though a 100 kHz signal itself is inaudible.
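The arithmetic behind the 1/5-period figure, as a quick check: one fifth of the 50 µs period at 20 kHz is 10 µs, which is 72 degrees at 20 kHz and exactly one period of 100 kHz.

```python
F_TOP = 20e3          # top of the audio band, Hz
FRACTION = 1 / 5      # phase modulation as a fraction of one 20 kHz period

period_s = 1 / F_TOP
shift_s = FRACTION * period_s      # time shift corresponding to that fraction
shift_deg = 360 * FRACTION         # the same shift as a phase angle at 20 kHz
equivalent_hz = 1 / shift_s        # frequency whose full period equals the shift

print(f"{shift_s * 1e6:.1f} us = {shift_deg:.0f} deg at 20 kHz "
      f"= one period of {equivalent_hz / 1e3:.0f} kHz")
```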

Edit: The same people who say "Less feedback is better" often also say "Local feedback is better". But local feedback is better because it means more feedback at the high end of the band. When they say "The amp has too much feedback", that actually means "Feedback at the high end of the band is too low".


When they say "The amp has too much feedback", that actually means "Feedback at the high end of the band is too low".

See the Morgan Jones quote earlier.

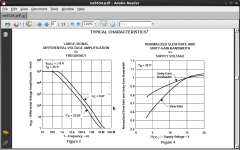

Here is a good example. Why is the NE5534 still considered a great op-amp? Look at the picture: for 40 dB of gain it still has about 25 dB of excess open-loop gain at 20 kHz, which means 25 dB of negative feedback. That is very good, even though its closed-loop THD at 20 kHz is twice as high as at low frequencies.

Edit: but if we take a tube amp with a much lower open-loop gain, only 65 dB, but flat across the whole band and well beyond, and apply only 25 dB of feedback, it will sound even better.

Does it mean "Less feedback is better"? No. It means "Better amp is better".


Did I misunderstand this comment from you? It just seemed you were attributing the idea that delay causes distortion to me.

Cheers.

Here is the post in question. I appreciate that you might just want to forget this; so be it. The professor is dead, long live the professor.

Originally Posted by Positron

It is also important to understand that when point "A" arrives at the output and is fed back to the input, "A" becomes "A1", and this is not a one-time event.

"A1" does not just disappear but again arrives at the output and is again fed back to the input at BetaEo, I believe it is called (it has been decades since I used the terminology), and is now called "A2". It then becomes "A3", and so on, until it is so small as to be inconsequential. This continually happens over time. Higher rates of feedback are worse.

Cheers.

Here is the picture: 65 dB at 20 kHz is still enough for 25 dB of negative feedback at that frequency if we want a stage with 40 dB of gain. However, if we take another op-amp compensated for unity-gain stability, it may have more feedback at 10 Hz, but at 20 kHz it will have less.
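A minimal sketch of the comparison, using hypothetical single-pole models rather than datasheet values: a decompensated op-amp chosen to have about 65 dB left at 20 kHz, a unity-gain-stable op-amp with more DC gain but a lower gain-bandwidth, and a flat 65 dB amplifier standing in for the tube amp mentioned earlier. Feedback is computed as 20·log10|1 + Aβ| for a 40 dB stage, so it comes out slightly different from the simple open-loop-minus-closed-loop dB difference.

```python
import numpy as np


def open_loop(f, dc_gain_db, gbw_hz):
    """Single-pole open-loop gain with the given DC gain and gain-bandwidth."""
    a0 = 10 ** (dc_gain_db / 20)
    return a0 / (1 + 1j * f * a0 / gbw_hz)      # pole at gbw_hz / a0


def feedback_db(f, dc_gain_db, gbw_hz, closed_loop_db=40.0):
    """Feedback 20*log10|1 + A*beta| for a stage set to closed_loop_db of gain."""
    beta = 10 ** (-closed_loop_db / 20)
    return float(20 * np.log10(np.abs(1 + open_loop(f, dc_gain_db, gbw_hz) * beta)))


# Hypothetical parts, not datasheet values: (DC gain in dB, gain-bandwidth in Hz)
amps = {
    "decompensated op-amp": (100.0, 35e6),      # about 65 dB left at 20 kHz
    "unity-gain-stable op-amp": (120.0, 3e6),
    "flat 65 dB amplifier": (65.0, 1e12),       # pole pushed far out of band
}

for name, (dc_db, gbw) in amps.items():
    print(f"{name:26s} 10 Hz: {feedback_db(10.0, dc_db, gbw):5.1f} dB   "
          f"20 kHz: {feedback_db(20e3, dc_db, gbw):5.1f} dB")
```

In this model the unity-gain-stable part wins at 10 Hz and loses badly at 20 kHz, while the flat amplifier has the same amount of feedback everywhere in the band.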


How much advanced math is needed in applied engineering?

DF96 ->Those are true words given --

I finally got back on DIYAUDIO --- prelude: There are a few National Labs where high-level research of national interest is done... the technology is later transferred to industry. That's the plan. Because the research is always something that has not been done before, and is high in risk and cost (too risky and expensive for industry to do), LLNL, Sandia, Oak Ridge, et al. find the best of the best. With that in mind --

I never saw any EE use calculus, and a seasoned EE told me that there were maybe 5 EEs out of 500 who actually needed to use calculus in their work at LLNL. Once, I worked for a PhD EE who took me over to a device he had patented and showed me the self-aligning laser he had built. He might have used calculus. Maybe not. And this is an applied science lab, building useful things that actually have to work right.

Inspection of the calculus is all that was needed to see it is averaging. You don't need to be a math whiz to figure it out. You don't even have to do any calculations or derivations; just understand the math concepts and principles. So it would be very helpful to leave higher-level math out of the discussions here unless actually needed... and from my experience, it rarely is needed.

All said IMHO, of course. -Dick Marsh (RNM)

You may be confusing two different issues. One is the use of mathematics to understand something. The other is the use of mathematics to calculate something useful.


This post was discussed quite a while ago; I won't repeat my comments. I would prefer to build a Zen rock garden in my front yard.

To tell the truth -- me too. Zen rock garden. Anyone still hanging in here after so many years and pages should get a gold medal. Or at least a gold star on one's forehead.

Did anything new come of the discussion a while ago? Where did it die, and why?


No, he is right. DF means the presence, in parallel with a capacitor of the rated capacitance, of one more "phantom" capacitor with a resistance in series. If the capacitance is rated as infinite, its impedance is zero at any frequency except zero hertz, so no matter what you add in parallel with zero ohms, the impedance at any frequency will still be zero.

However, if you have your own definitions of DF and infinity, you are welcome to present them here and now.

DF?

DF refers specifically to losses encountered at low frequencies (typically 120 Hz to 1 kHz). At high frequencies, capacitor dielectric losses are described in terms of the loss tangent (tan δ). The higher the loss tangent, the greater the capacitor's equivalent series resistance (ESR) to signal power, which affects the phase angle.
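A minimal sketch of that relationship, assuming the simple series-equivalent model (an ideal C in series with its ESR) and an invented 1 µF / 0.1 Ω part. In this model the dissipation factor and the loss tangent are the same number, tan δ = ESR·ωC, just quoted at different frequencies, and the impedance phase angle is 90° - δ.

```python
import cmath
import math


def capacitor_losses(c_farads, esr_ohms, f_hz):
    """Series R-C model: return (dissipation factor, impedance phase angle in degrees).

    DF = tan(delta) = ESR / Xc = 2*pi*f*C*ESR. An ideal capacitor (ESR = 0)
    has a phase angle of 90 degrees; ESR reduces it by delta.
    """
    omega = 2 * math.pi * f_hz
    z = esr_ohms + 1 / (1j * omega * c_farads)
    df = esr_ohms * omega * c_farads
    phase_deg = -math.degrees(cmath.phase(z))   # reported as a positive angle
    return df, phase_deg


# Hypothetical part: 1 uF with 0.1 ohm ESR.
for f in (120.0, 1e3):
    df, phase = capacitor_losses(1e-6, 0.1, f)
    print(f"{f:>6.0f} Hz  DF = {df:.2e}  phase angle = {phase:.4f} deg")
```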

The parallel DA is what you described, not DF.

BTW - The parallel DA also reduces the perfect cap's 90-degree phase angle to something less. In coupling, the change in phase angle from ideal probably does not directly affect sound, and it can be reduced (at a cost/size penalty) by increasing the value of C. But in filtering applications, the results may be audible if the sums and differences affect the resultant frequency response.
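A minimal sketch of the DA effect on phase angle, assuming DA is modelled as a single series R-C branch in parallel with an ideal capacitor; the 100 kΩ / 10 nF branch values are hypothetical, chosen only to make the effect visible. Real dielectric absorption is usually modelled with several such branches.

```python
import cmath
import math


def phase_angle_deg(z):
    """Phase angle of a capacitive impedance as a positive number (90 = ideal)."""
    return -math.degrees(cmath.phase(z))


def cap_with_da(c, r_da, c_da, f):
    """Ideal C in parallel with one series R-C branch standing in for DA."""
    w = 2 * math.pi * f
    y_main = 1j * w * c
    y_branch = 1 / (r_da + 1 / (1j * w * c_da))
    return 1 / (y_main + y_branch)


# Hypothetical values: 1 uF capacitor, DA branch of 100 kohm in series with 10 nF.
for f in (10.0, 100.0, 1e3):
    z = cap_with_da(1e-6, 1e5, 1e-8, f)
    print(f"{f:>6.0f} Hz  phase angle = {phase_angle_deg(z):.3f} deg")
```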

Thx, RNM


Perhaps the charged word "calculus" is misleading. I stick to my guns --- the more and deeper understanding of mathematics, the better one is equipped to understand physics and thus electronics. It is not chest-bumping to say this (shame on you Robert, fawning sycophant!). It is the simple fact that math (and Number) is the language of science (as Tobias Dantzig titled his great little book many years ago).

Of course we have all gotten lazy. Math students use various packages (Mathcad etc., as Marsh mentioned Greene laments), and engineers mostly fall back on simulators like SPICE. But in the absence of a more fundamental understanding we can go terribly wrong.

Maths are no substitute for creativity, or even intuition. And John Curl has a point, that one can still do interesting work without the comprehensive underpinnings.

But basic lack of understanding of signal and system theory is crippling. It's not that tough to learn, and it won't crimp your style. Really.

Brad Wood

Perhaps the charged word "calculus" is misleading. I stick to my guns --- the more and deeper understanding of mathematics, the better one is equipped to understand physics and thus electronics.

I offer up David Bohm (and by extension, Einstein) as counter-examples to this claim.

Infinite Potential - the Life and Times of David Bohm

<edit> Snippet from the link for those who don't wish to digest it all:

Speaking of Bohm:

His colleague at Birkbeck College, Basil Hiley, once remarked, "Dave always arrives at the right conclusions, but his mathematics is terrible. I take it home and find all sorts of errors and then have to spend the night trying to develop the correct proof. But in the end, the result is always exactly the same as the one Dave saw directly"


Sorry, I meant DA, which earlier in the thread was blamed for audible sound degradation. My bad. I was overheated by the sun and the discussions. Also, I did not pay close attention because I was busy with work, lurking on the forum from time to time to relax a bit.

Of course, DF is the dissipation factor, which causes power loss in motor-run capacitors. I had never used this term before; ESR is more convenient.

DA is a big issue in sample-and-hold devices, less so in analog filters. For amps it is not an issue. Capacitors with high DA may well have other problems too, but it is not the DA that is to blame.

I offer up David Bohm (and by extension, Einstein) as counter-examples to this claim.


Well yes, Einstein was indeed lousy at maths. He needed a whole lot of help with tensor calculus to present his notions of general relativity. Alas, Bohm (and I love him very much) had some great ideas, but the big ones that had a direct bearing on contemporary physics (e.g. hidden variable theories as a deterministic adjunct to QM) have been generally discredited. But there is still time (or perhaps no time at all <cackles> ).

Again I don't wish to discount intuition. It is crucial. Although, as the old magazine for chem students (young ones) used to remind: "Chance favors the prepared mind."

It's analogous to the "creativity" that springs from altered states of consciousness. Without the preparation, such insights are not likely to prove fruitful.

Well yes, Einstein was indeed lousy at maths. He needed a whole lot of help with tensor calculus to present his notions of general relativity.

To me this says that it's the insight or intuition that's the foundation of understanding. Mathematics is the language to express that understanding. A person better versed in math is better equipped to communicate their insights.

Alas, Bohm (and I love him very much) had some great ideas, but the big ones that had a direct bearing on contemporary physics (e.g. hidden variable theories as a deterministic adjunct to QM) have been generally discredited.

I concur that hidden variables was rather misguided. I wonder whether this might have arisen, at least in part, from his contact with Einstein, who could not accept God playing dice. But his other notions I find more inspiring, like the implicate order and the primacy of verbs over nouns, for example.

Again I don't wish to discount intuition. It is crucial. Although, as the old magazine for chem students (young ones) used to remind: "Chance favors the prepared mind."

But you still wish to argue that math ability is more crucial than intuition?

<edit> I suggest the following rewording of your original claim:

the more and deeper understanding of mathematics, the better one is equipped to communicate one's understanding of physics and thus electronics.


- John Curl's Blowtorch preamplifier part II