(rant)

Commercially available audio products and the lore around them are strange and frustrating. An associate wants to generate sounds in the 80kHz range for animal tests (multichannel, one channel per animal for 8 or 10 animals) and was asking for a good way to do this. There's the idea of adding a/d chips to R-PIs, one per animal. I immediately thought there are various multichannel audio interfaces running on USB that are pretty cheap these days that run up to 24/192, and should reproduce 80kHz just fine (does anyone see where this is going yet?). There aren't a lot of reviews here on diyaudio, but there's that site with "science" in the URL ... I found an 8-channel 24/192 DAC-only for about $200 street price, so let me double-check the frequency response goes well over 20kHz. But nooooo...

I found one thread on interface specs. Many don't care about response above 20k, so their tests don't cover it, but another poster asked "then why do you need 192k sampling rate? 44.1k is fine for 20kHz."

One response: "but you see, I want four, eight, as many data points as I can get for that 20kHz waveform ..." Apparently they've never heard of Nyquist and don't care; it's the thought, the belief of having better audio, that's important.
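For anyone who wants the arithmetic behind the Nyquist remark, here is a minimal Python sketch. The function names are made up for illustration, and it only covers the sampling-theory limit: it says nothing about whether a particular interface's analog output stage actually passes 80kHz, which is the thing I was trying to check.

```python
def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent (exclusive limit)."""
    return sample_rate_hz / 2.0


def is_representable(tone_hz: float, sample_rate_hz: float) -> bool:
    """True if the tone lies strictly below the Nyquist frequency."""
    return 0 <= tone_hz < nyquist_hz(sample_rate_hz)


def samples_per_cycle(tone_hz: float, sample_rate_hz: float) -> float:
    """Average number of samples taken per period of the tone."""
    return sample_rate_hz / tone_hz


if __name__ == "__main__":
    for tone, fs in [(20_000, 44_100), (80_000, 44_100), (80_000, 192_000)]:
        print(
            f"{tone} Hz at fs={fs} Hz: "
            f"representable={is_representable(tone, fs)}, "
            f"samples/cycle={samples_per_cycle(tone, fs):.2f}"
        )
```

A 20kHz tone at 44.1k gets only a bit more than two samples per cycle, and in theory that is already enough to reconstruct it; extra "data points" per cycle don't add anything below Nyquist. The 80kHz case is the one that genuinely needs 192k.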

I asked on the manufacturer's YouTube video blurb for the product, and got a similar response:

For music production we started out with 16 bit 44k samples. Basically CD resolution. Thought we were hot stuff and sound quality be high. Even in 1998 is was possible to be at 24 bit 92k. It would just bog down your computer to run software and a soundcard at high resolution.

Dual then Quad cores made it easier to work at 24 bit. Eventually 24 bit 92k became a standard for sample packs / sound design. The difference was very noticeable and older works sounded very dated. Likewise software pushed forward and computers. Eventually working at 32 bit 192k res. So even mastering down to 16 bit for a CD copy/ mix down. Starting at a higher resolution makes a big difference.

So depends on what your doing. Far as sound design and hearing thousands of samples and music passages. Most producers starting out at 16 bit can instantly tell the difference between. Higher resolution. And yes there is plenty of ways to make 16 bit sound better fuller more detailed. Yada yada. But even then we could hear and call out old samples being fluffed.

I guess working with multichannel hi-res recording in a studio environment is a no-brainer, as digital sound processing such as mixing may eat away at the initial sound quality. But mastering to 44k/16bit after the mixing/producing phase seems perfectly o.k. to me. In the end, the placement of microphones and the quality of the mixing process seem more influential to me than the "lesser" resolution of the mastering to CD format.

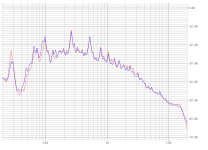

Just from the shape of the spectrum. I can't think of any other reason why the bump would occur with acoustic instruments.

Ed

It could well be aliasing, but concluding this from the shape of the bump sounds like cutting corners to get to that conclusion.

It could be confirmed by finding folded-back harmonics when the original sample rate is known, but that would need careful spectrogram analysis of the relevant part of the audio.

Yes, but higher harmonics are not on the CD and were likely never recorded. This is what I have to work with. I have looked at enough music spectrums to recognize artifacts from electronics.

Ed

If there was aliasing in the recording, these higher harmonics would be there, folded back to frequencies below fs/2.
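To make the fold-back concrete, here is a small Python sketch of where an unfiltered component would land after sampling. The function name and the 7kHz example fundamental are purely illustrative assumptions, not taken from the recording under discussion, and it assumes ideal sampling with no anti-alias filter at all.

```python
def alias_hz(component_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a component appears after ideal sampling.

    Components above fs/2 are mirrored ("folded") back into 0..fs/2.
    """
    folded = component_hz % sample_rate_hz
    if folded > sample_rate_hz / 2:
        folded = sample_rate_hz - folded
    return folded


if __name__ == "__main__":
    fs = 44_100            # CD sample rate
    fundamental = 7_000    # hypothetical tone, chosen only for illustration
    for n in range(1, 7):
        harmonic = n * fundamental
        print(f"harmonic {n}: {harmonic} Hz -> appears at {alias_hz(harmonic, fs)} Hz")
```

With these numbers the 4th harmonic (28kHz) would show up at 16.1kHz, which is not a multiple of the fundamental. Inharmonic lines like that, at positions predicted by the original sample rate, are what one would look for in a spectrogram.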
