Windoze on ARM?

Supposedly Windoze (x86) will run in Parallels using Rosetta faster than most native Intel boxes.

Apple has been developing the ARM core with a lot of their own enhancements, starting with the first chips they designed for the iPhone.

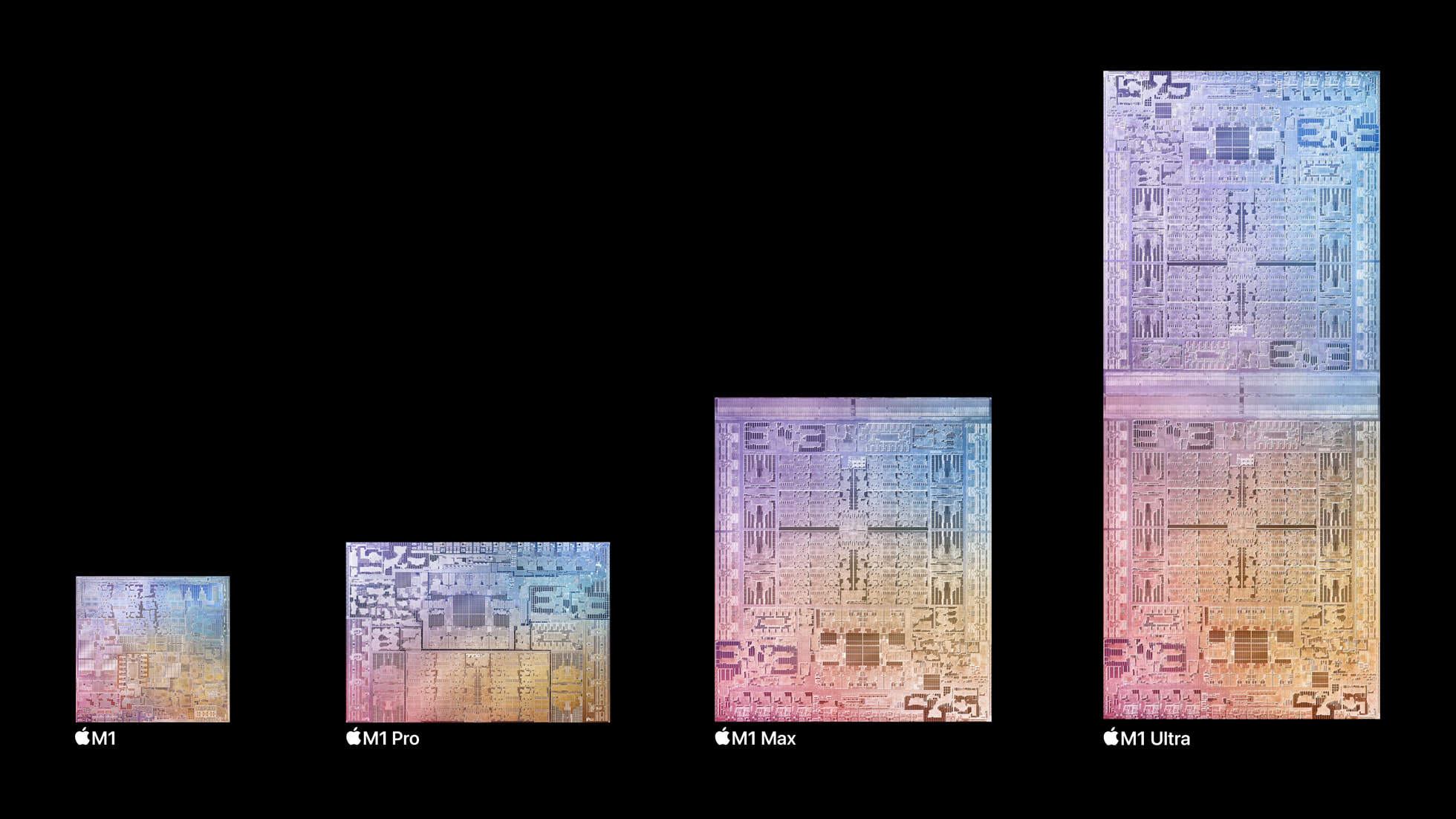

The A14 Bionic was the base for the original M1. Then came the M1 Pro and M1 Max, and then two M1 Max dies glued together to make the M1 Ultra.

To achieve the speeds they do, everything is monolithic; essentially everything is on board the chip. All the CPU, GPU and Neural Engine cores access the same bank of memory. The memory is part of the package and has serious bandwidth, doubling from Pro to Max to Ultra. You order a chip, choose your RAM, and that's it. The bigger ones are being run hard. In the Studio, moving from the M1 Max (as in the high-end MacBook Pros) to the M1 Ultra adds about 2 pounds of heatsink/cooling.

Expect M2 Pro, M2 Max and M2 Ultra in the same mould as the M1s, and an M2 Extreme, where four M2 Max chips will be glued together. I expect this will be VERY fast. And they are just getting started.

Keep in mind that not only are all the hardware bits very tightly integrated, but the CPUs and macOS/iOS are simpatico, the hardware complementing the software.

There is a lot of innovation in these chips.

dave

I never knew that India is an Android development hub.

I have a friend, an architect, who uses an iPad as a camera and presentation device. 10" screen.

And you can actually connect many devices over wi-fi and Bluetooth without connectors. No need for many USB sockets.

Bear in mind that ARM is essentially a RISC unit, good for dedicated controllers as in HDDs, not as good as Windoze for the user interface.

Android is a little basic in comparison, but does 90% of the work for most people, like banking, travel tickets, and so on.

And also, the variety of software for M$ is much greater: office, accounting, graphics and so on.

Android is free, I think, so Google makes money on it through some other way.

Never met a bean counter who was generous about budgets...

My concern with Android devices is:

(1) Not enough connectivity: I want a power interface that is separate from IO. Two USB-C ports. With a secondary USB-C port, those SoCs could easily support many devices. The OTG standard needs to become a "standard".

(2) Too much reliance on fingers... there should be a mode where it natively supports the keyboard and the mouse and a good Command Line Interface (CLI). I want to see the Unix directly and I can do a lot of work with the CLI.

(3) Remember that Android is Linux. In one job, we were using Android with the upgrades I want. The SoCs were meant for smart phones and we wanted to test them. So with an updated Android kernel we did both the work of users (simulating the user processes as if they were user fingers running the applications) and the white-box/black-box tests and OS hardware test interfaces.

(4) Those smart phone SoCs are very powerful nowadays. And they have built-in multimedia renderers, optimized for power consumption as well as performance. They offer superb audio (especially with a USB DAC) and built-in video. A modern SoC for an Android device can replace most user PCs. If only they offered the CLI... I must say I have not looked for a CLI "app".

(5) India is not an "Android development hub". What they have in Bangalore is an Android app development hub.

(6) I was in a job once where I was asked to cost out a small project. I figured one man-year: about two men doing it in six months. Bangalore bid it at three calendar months total, with no breakdown. The Program Manager gave it to Bangalore. Two months later, Bangalore dropped the ball, so they gave it back to me. The program manager wanted it done in six months. I told him that we could not use the work from Bangalore. Short story... we did it in six calendar months. Two developers. All told, we wasted the budget from Bangalore and two calendar months. This is very typical.

(7) Android is not free. Nothing is free. You give up your privacy when you use a Google Android device.

(8) There are no restrictions due to RISC. And yeah, I've done some SSD devices with 50 ARM cores, plus dedicated back-end flash controllers. Currently working on a Xilinx FPGA with six ARM cores, plus dedicated FPGA IO, multimedia and IO devices... avionics.

Supposedly Windoze will run in Parallels using Rosetta faster than most Intel boxes.

Apple has been developing the ARM core with a lot of their own enhancements, starting with the first chips they designed for the iPhone.

...

Keep in mind that not only are all the hardware bits very tightly integrated, but the CPUs and macOS/iOS are simpatico, the hardware complementing the software.

dave

(1) Apple has been moving to bring all of their hardware development in-house. They have been moving towards developing their own SSD controllers; all they need is to buy the flash, EEC and RAM components. Plus the modems and screens, of course; those are still being outsourced.

(2) By definition, an SoC is absolutely integrated. That's its greatest strength... giving you the most performance for the least power. Power management is built into the hardware design and into the power-management firmware, which not only throttles power to peripherals but will switch between the A15 and A7 cores (and back) depending on the need. Whole sections of the SoC run off different power "maps".

(3) The integration of an SoC can also be its greatest weakness though. That's because the OS limits it.
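To make the A15/A7 switching in point (2) a little more concrete, here is a minimal sketch of a cluster-selection policy with hysteresis. The class name, the two thresholds and the single "load" number are assumptions for illustration only; real power management lives in kernel governors and firmware and weighs far more inputs, so this does not reproduce any vendor's actual scheme.

```python
from dataclasses import dataclass


@dataclass
class ClusterGovernor:
    """Toy big.LITTLE cluster selector with hysteresis (hypothetical thresholds)."""

    up_threshold: float = 0.80    # switch to the big (A15) cluster above this load
    down_threshold: float = 0.30  # drop back to the LITTLE (A7) cluster below this load
    cluster: str = "A7"           # start on the power-efficient cluster

    def update(self, load: float) -> str:
        """Feed one load sample (0.0 to 1.0) and return the cluster to run on."""
        if not 0.0 <= load <= 1.0:
            raise ValueError("load must be between 0.0 and 1.0")
        if self.cluster == "A7" and load > self.up_threshold:
            self.cluster = "A15"
        elif self.cluster == "A15" and load < self.down_threshold:
            self.cluster = "A7"
        # Between the two thresholds we stay put, so a load hovering around
        # one value does not make the SoC bounce between clusters.
        return self.cluster


if __name__ == "__main__":
    gov = ClusterGovernor()
    for load in [0.1, 0.5, 0.9, 0.6, 0.4, 0.2]:
        print(f"load={load:.1f} -> {gov.update(load)}")
```

With those sample loads the governor stays on A7 at 0.5, moves to A15 at 0.9, stays there at 0.6 and 0.4, and only drops back at 0.2. The gap between the two thresholds is the design choice: it trades a little efficiency for not paying the migration cost on every small wobble in load.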

Can you use wireless keyboard and mouse on an Android device?

That should solve part of your problem.

And a separate charging port can be cut into the case, though space could be an issue.

Or simply use a larger 3-D printed case. And connect the battery to a charging circuit, use a different type of jack to charge it.

Apart from Bangalore, the cities of Hyderabad, Pune, Delhi, Chandigarh, and some smaller cities are also software centers. Not sure about Android.

Bangalore has terrible traffic and expensive real estate, and new investment is slowing down.

I have had some luck with those hydra OTG-charge gizmos... but it's a crapshoot whether they work on your device, since it's based on the impedance in the circuit (or something like that)... and it's not standard.

The idea is to use a USB-C docking station, the way I do with my MFF PCs and laptops. And yes, I can use the wireless charger for the phone (my tablets don't have that, I'm cheap). The docking station is simple and offers all kinds of possibilities.

Now, I do open the case to swap batteries... but have you looked at the internals of a smart phone / tablet? Good luck soldering anything in there.

BTW, I did find some CLI applications for Android. I might play with them, right now I'm too busy playing with a Pearl 2 that is fully adjustable...

Off the net, just suggestions, no ties to sellers.

Top one is Audio and USB C...

Thanks... but those are easy to find. I already run USB-OTG cables to my fancy DACs.

I want the USB-C AND POWER charging. At the same time.

And I wonder if a USB-C docking station will work with USB-OTG.

It's pretty crazy. Transistors are free... 🙂 Some of those SoCs are loaded with cores... the SoCs in smart phones are astounding in their complexity. Then you've got hard/SSD drives. Memory management, DMA, bus matrices, multimedia, modems, flash, etc... We're talking about some truly serious hardware processing packed into chips that are smaller than an Intel core.

Also, this came across my desk this morning: https://bytecellar.com/2022/11/16/a...ension-to-accommodate-an-intel-8080-artifact/

Turns out Apple decided to include hardware to compute some of the flags (PF and AF) dating back to the 8080 to avoid having to do so in software for x86/x64 emulation. Granted PF can be computed with a handful of XOR gates. XOR is somewhat complex and large for a logic gate, but the handful needed for the PF math wouldn't even show as a single pixel in the chip photo. 🙂 The tricks you can do when you design your own CPU - even if you're using someone else's building blocks.
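For readers who have not met these flags: on x86, PF is set when the low byte of a result has an even number of 1 bits, and AF is set when there is a carry out of bit 3 (a leftover from BCD arithmetic). Below is a minimal Python sketch of what an emulator has to compute in software when the hardware does not help. The function names are hypothetical, and nothing here describes how Apple's silicon actually implements it; the XOR folding just mirrors the "handful of XOR gates" point.

```python
def parity_flag(result: int) -> int:
    """x86 PF: 1 when the low byte of the result has an even number of 1 bits."""
    b = result & 0xFF          # PF only looks at the least significant byte
    # XOR-fold the byte in half three times (8 -> 4 -> 2 -> 1 bits), the same
    # shape as a small tree of XOR gates.
    b ^= b >> 4
    b ^= b >> 2
    b ^= b >> 1
    return (b & 1) ^ 1         # bit 0 now holds odd parity; PF is set for even parity


def aux_flag_add(a: int, b: int) -> int:
    """x86 AF for an 8-bit ADD: 1 when the low nibbles carry out of bit 3."""
    return 1 if (a & 0xF) + (b & 0xF) > 0xF else 0


def aux_flag_from_result(a: int, b: int, result: int) -> int:
    """Same AF for an ADD, recovered from operands and sum: bit 4 of a ^ b ^ result."""
    return ((a ^ b ^ result) >> 4) & 1


if __name__ == "__main__":
    a, b = 0x3A, 0x27
    r = (a + b) & 0xFF
    print(hex(r), "PF =", parity_flag(r), "AF =", aux_flag_add(a, b))
```

For 0x3A + 0x27 the sum is 0x61, which has three 1 bits, so PF is 0, while the low nibbles (0xA + 0x7) carry out of bit 3, so AF is 1. The `a ^ b ^ result` form is handy in an emulator because it only needs values that are already lying around after the add.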

Tom

And then you got to make those things work.

Last time I was playing with such, I spent 15 days, 20 hours a day, with a Palladium emulator to figure out why the DMA wasn't working with some addresses.

Turns out the cache tables were not correct and there was an overlap which was not caught in the design. So, since the Palladium was running 1 hour of wall clock for each second of hardware time, it could take anywhere from four to six hours to catch the condition and hit the breakpoint.
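An overlap like the one described above, two table entries claiming the same addresses, is the kind of thing a static check can flag before anyone boots an emulator. A minimal sketch, assuming a hypothetical table of (name, base, size) entries; the real cache tables in that design are not described here, so the format and the region names are placeholders.

```python
from typing import List, Tuple

# Hypothetical table entry: (name, base address, size in bytes).
Region = Tuple[str, int, int]


def find_overlaps(regions: List[Region]) -> List[Tuple[str, str]]:
    """Return name pairs whose half-open ranges [base, base + size) overlap."""
    for name, _base, size in regions:
        if size <= 0:
            raise ValueError(f"region {name!r} has non-positive size")
    overlaps = []
    ordered = sorted(regions, key=lambda r: r[1])  # sweep in address order
    for i, (name_a, base_a, size_a) in enumerate(ordered):
        end_a = base_a + size_a
        for name_b, base_b, _size_b in ordered[i + 1:]:
            if base_b >= end_a:
                break  # later regions start even higher, so none can overlap a
            overlaps.append((name_a, name_b))
    return overlaps


if __name__ == "__main__":
    table = [
        ("dma_buffer", 0x8000_0000, 0x1_0000),
        ("cacheable_pool", 0x8000_8000, 0x2_0000),  # starts inside dma_buffer
        ("mmio", 0x9000_0000, 0x1000),
    ]
    print(find_overlaps(table))
```

Run on the sample table it reports the single pair `('dma_buffer', 'cacheable_pool')`. Sorting by base address means each region only has to be compared with the ones that start before it ends, which keeps the check cheap even for large tables.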

Then you also get lots of straightforward hardware acceleration by the simple fact of putting lots of M cores in the design. Just waste cores to speed up certain functionality... run everything in parallel, SMP mode.

I really need to retire. This is giving me a headache and I'd rather spin records and pick up my soldering iron. Limit my programming to Raspberries.

Turns out Apple decided to include hardware to compute some of the flags (PF and AF) dating back to the 8080 to avoid having to do so in software for x86/x64 emulation

Thanx for the confirmation that some specific instructions to improve emulation speed were built into hardware.

dave

Limit my programming to Raspberries.

The bit about SoCs reminded me of the Novix, where they essentially did an SoC in hardware, available in the early 80s: a stand-alone computer that executed FORTH directly and at blazing speeds. I was wondering what it would take to bake that into a chip these days. It would be tiny. FORTH is by far my favorite programming language. I had one of the few working boards until recently.

https://en.wikichip.org/wiki/novix/nc4016
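The Novix NC4016 executed Forth primitives directly in hardware; the model underneath is a data stack plus a dictionary of words. A toy interpreter, in Python rather than silicon, shows that model. It supports only a small, hypothetical subset (integers, `+ - *`, `dup`, `drop`, `swap`, `.`, and colon definitions), is case-sensitive and has no error handling, so treat it as an illustration, not a Forth implementation.

```python
import operator
from typing import Callable, Dict, List, Tuple


def run_forth(source: str) -> Tuple[List[int], List[int]]:
    """Toy Forth: returns (data stack, values printed by '.')."""
    stack: List[int] = []
    output: List[int] = []

    def binary(op: Callable[[int, int], int]) -> Callable[[], None]:
        def word() -> None:
            b = stack.pop()            # top of stack is the right operand
            a = stack.pop()
            stack.append(op(a, b))
        return word

    def swap() -> None:
        stack[-1], stack[-2] = stack[-2], stack[-1]

    # Built-in primitives; on a Forth chip, words like these run as native instructions.
    builtins: Dict[str, Callable[[], object]] = {
        "+": binary(operator.add),
        "-": binary(operator.sub),
        "*": binary(operator.mul),
        "dup": lambda: stack.append(stack[-1]),
        "drop": lambda: stack.pop(),
        "swap": swap,
        ".": lambda: output.append(stack.pop()),
    }
    user_words: Dict[str, List[str]] = {}  # ": name ... ;" definitions

    def execute(tokens: List[str]) -> None:
        i = 0
        while i < len(tokens):
            token = tokens[i]
            if token == ":":
                end = tokens.index(";", i)          # definition runs up to ';'
                user_words[tokens[i + 1]] = tokens[i + 2:end]
                i = end + 1
                continue
            if token in user_words:
                execute(user_words[token])          # run the stored definition
            elif token in builtins:
                builtins[token]()
            else:
                stack.append(int(token))            # anything else must be a number
            i += 1

    execute(source.split())
    return stack, output


if __name__ == "__main__":
    print(run_forth(": square dup * ; 3 square 4 square + ."))
```

The example defines `square`, squares 3 and 4, adds them and prints 25, leaving the stack empty. User definitions are checked before built-ins, so a newer definition shadows an older one, which is roughly how a Forth dictionary search behaves.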

dave

(1) The Forth programmers I have known over the years would take a shower at most twice a week... and did not know the meaning of shaving/trimming your beard. Truly weird people... only "bettered" by the Lisp programmers. I think I must have broken the mold when I spent several months integrating Lisp (showered, shaved, changed into clean clothes daily).

(2) It's not uncommon nowadays to provide FPGAs in an SoC or an SBC. I think Cisco was the trailblazer in MY20 when they started pushing the routing and switching decisions to specialized ASICs, since at the time FPGAs were expensive and Cisco had the volume to amortize taping them out.

(3) Honestly, I'm looking at code today with two GOTOs.... I don't know how the he!! we're gonna validate that crap.

(4) Time to simplify my life. Build a high efficiency speaker... just buy a flat pack and enjoy the time finishing the wood... I love finishing wood.

Yes. TSMC, I think, makes the ARM CPUs for Apple.

They will buy an Arizona-based fab (probably a TSMC-built one) and use it in 2024.

Never heard of an Apple fab, as in a chip-making facility owned by Apple.

Are you sure about that?

Yes.

They will buy an Arizona-based fab (probably a TSMC-built one) and use it in 2024.

TSMC has been building their AZ fab for at least 3 years. I just can't imagine they would sell that to one of their customers. It's a crown jewel for them.

You can look up USB-C; older USB had separate data and power lines, so it was possible to charge my phone and use it as a modem... I still do that.

Quite possible on USB C, I feel.

There are some big incentives for investing in chip plants in the USA; even Intel is in that line of planning.

(1) The Forth programmers I have known over the years would take a shower at most twice a week... and did not know the meaning of shaving/trimming your beard. Truly weird people...

I met and hung out with one that probably fits that classification, the infamous Captain Crunch, at a FORTH conference in Palo Alto.

dave

ROTFLOL

Yeah.... Silicon Valley. Good ol' Sunnyvale. I was offered a job at Cisco in '95. My wife didn't want to move.

If I had, I wouldn't be talking to you guys in this forum... I'd likely be in the McLaren and Magico forums belly aching about the fuel and crew costs of sharing a wet lease in the Gulf, the micro dynamics of my Pass XA200.8s and the hassle of hiring lawyers to manage the apartment complexes in San Jose. ;-)

Imagine my putative signature... Tony E., Senior Principal Director of Internetworking Fabric Applications, Cisco. (retired).

As a matter of fact, four of my coworkers in '95 who took their Cisco offers had signatures like that.

All code has goto/branch instructions. High-level languages just hide it from the coder. And give some really handy compiler bugs instead...
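The point that structured code still compiles down to branches is easy to see with CPython's `dis` module. This is bytecode rather than machine code, and the exact opcode names differ between Python versions, so treat it as an illustration of the idea rather than of what a C compiler emits for a device driver. The helper name `jump_opcodes` is made up for this sketch.

```python
import dis


def sum_down(n: int) -> int:
    """A loop with no goto anywhere in the source."""
    total = 0
    while n > 0:
        total += n
        n -= 1
    return total


def add(a: int, b: int) -> int:
    """Straight-line code: nothing to branch on."""
    return a + b


def jump_opcodes(func) -> list:
    """Names of the bytecode instructions in func that transfer control."""
    return [ins.opname for ins in dis.get_instructions(func) if "JUMP" in ins.opname]


if __name__ == "__main__":
    print(jump_opcodes(sum_down))  # exact names vary by CPython version
    print(jump_opcodes(add))
```

The `while` loop shows up as one or more jump instructions, while the straight-line `add` has none. Running `dis.dis(sum_down)` prints the full listing if you want to see where each jump lands.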

Sorry to go off topic. Is this the same PRR who was such an asset to CompuServe's PC hardware forum? --tom w.

Long ago, 8088 and NetWare days, Novell even gave you a driver which would spoof the MAC. In the rush from $300 3Com cards to the $10 bottom, some copycat network cards had the same MAC number on ALL cards. They worked fine individually (which is how they got past prototype testing), but two or more on one LAN was a bust. So the BAT file to load the driver had to be hand-crafted to override the MAC with a unique number on every PC.
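The fix described, giving every card a unique address, can be sketched using the locally administered bit that IEEE 802 MAC addresses reserve for exactly this purpose: bit 1 of the first octet set means "assigned locally", and bit 0 clear means unicast. The function names are hypothetical, and this is not the Novell driver's mechanism, just the addressing rule that such an override should respect.

```python
import random
from collections import Counter
from typing import List


def find_duplicate_macs(macs: List[str]) -> List[str]:
    """Return every MAC address (lower-cased) that appears more than once."""
    counts = Counter(mac.lower() for mac in macs)
    return sorted(mac for mac, n in counts.items() if n > 1)


def random_local_mac(rng: random.Random) -> str:
    """Random unicast MAC with the locally administered bit set."""
    octets = [rng.randrange(256) for _ in range(6)]
    octets[0] = (octets[0] | 0x02) & 0xFE  # set U/L bit (local), clear I/G bit (unicast)
    return ":".join(f"{o:02x}" for o in octets)


def assign_unique_macs(macs: List[str], seed: int = 0) -> List[str]:
    """Keep the first use of each address; give later duplicates a local MAC."""
    rng = random.Random(seed)
    seen = set()
    result = []
    for mac in macs:
        candidate = mac.lower()
        while candidate in seen:          # retry until the address is unused
            candidate = random_local_mac(rng)
        seen.add(candidate)
        result.append(candidate)
    return result


if __name__ == "__main__":
    lan = ["00:20:AF:12:34:56"] * 3 + ["00:A0:24:00:00:01"]
    print(find_duplicate_macs(lan))
    print(assign_unique_macs(lan))
```

Only the second and third copies of the duplicated address are replaced; the first card keeps its burned-in MAC. Because the replacements always have the locally administered bit set, they cannot collide with any vendor-assigned (universally administered) address on the same LAN.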

all code has goto / branch instructions. High level languages just hide it from the coder. And give some really handy compiler bugs instead...

True, assembly uses jmp instructions, but GOTO IS not allowed in the source code.

Just like we don't allow dynamic allocation.. pffft....

Don't call me a coder.... we're talking real time C device driver code here.... not Python scripts for some ill behaved web interface.
