Please elaborate on why there should be any difference in the analog delivery of files of the same format, and also of files of different formats, and what those differences might be.

Thanks in advance.

I thought it was pretty clear that the issue was repeatability and removing other issues that may cause a change in the audio. With a lossy compressed file, a lot of the sound is being recreated by the codec. I have seen nothing that suggests it will recreate exactly the same output every time. It would be an interesting exercise to explore, one I'm leaving to others.

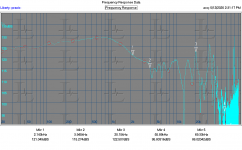

Below is the frequency response of a headphone with and without a ferrite on the cable. The red trace (without the ferrite) is hidden under the teal trace.


Ahem, "interesting" stuff. At least it was the last time I gave a cursory look.

When Heyser submitted his manuscript to the Audio Engineering Society it was met with a fair amount of skepticism. The professional and academic Audio community of the time was still trying to come to grips with the Fourier transform and Heyser’s ideas were seen to be “too esoteric” and of little practical value.

You have to be kidding me. Fourier theory was 130 years old; this is some kind of worshipful fantasy.

Read Heyser's article "Unpublished Writings from Audio magazine" on the internet and Richard Heyser will explain the relationship between FFT and TDS mathematically. It should be convincing to most here.

The links there still work.

John Curl's Blowtorch preamplifier part III

What is wrong with op-amps?

George


I thought it was pretty clear that the issue was repeatability and removing other issues that may cause a change in the audio. With a lossy compressed file, a lot of the sound is being recreated by the codec. I have seen nothing that suggests it will recreate exactly the same output every time. It would be an interesting exercise to explore, one I'm leaving to others.

Below is the frequency response of a headphone with and without a ferrite on the cable. The red trace (without the ferrite) is hidden under the teal trace.

I have no idea why the same codec in decompression would be non-deterministic. Should be pretty easy to verify this.

Read Heyser's article "Unpublished Writings from Audio magazine" on the internet and Richard Heyser will explain the relationship between FFT and TDS mathematically. It should be convincing to most here.

So the audio community was 130 years behind the times; what else is new?

Demian,

I am impressed with your apparent high frequency hearing ability! 😉

Since the other part of the system was the source...

But just for fun could you wrap the headphone cord around the ferrite core a few times to see if that changes anything?

Then maybe try a couple of rusty nails or similar to cover the range of ferrites!

Not sure how to include a sampling of cellphones into the equation. 🙂

Oh and since the claim comes from down under could you repeat the tests by moonlight, just to be sure!!!


So the audio community was 130 years behind the times; what else is new?

Wow, the first reference I can find to the Fast Fourier Transform was 1965.

Pretty sure FFT analyzers came after that. I also shudder to think what it took in the '60s to build one.

I did get my first Fourier-based analyzer around 1976. It was based on a Reticon chip. Parts cost was around $1,800.00 for mine, without a microphone.

Demian,

I am impressed with your apparent high frequency hearing ability! 😉

Since the other part of the system was the source...

But just for fun could you wrap the headphone cord around the ferrite core a few times to see if that changes anything?

Then maybe try a couple of rusty nails or similar to cover the range of ferrites!

Not sure how to include a sampling of cellphones into the equation. 🙂

Oh and since the claim comes from down under could you repeat the tests by moonlight, just to be sure!!!

The ferrite only had room for one pass of the headphone cable. If I saw anything I would try some others I have here, but the dog needs walking.

You have to be kidding me. Fourier theory was 130 years old; this is some kind of worshipful fantasy.

I can see where there was little numerical application back then. A Discrete Fourier Transform has a time complexity of O(n^2) for n samples, whereas the then newly (re)discovered "Fast" Fourier Transform is O(n log n), so the time it takes doesn't go up quite so ridiculously fast with the number of points. Still, computers weren't as fast, cheap or plentiful as they are now.
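To put the O(n^2) versus O(n log n) difference in numbers, here is a rough sketch that counts operations, assuming one complex multiply-add per term for the direct DFT and about n operations per stage over log2(n) stages for a radix-2 FFT. Exact counts depend on the implementation, so treat the ratios as order-of-magnitude only.

```python
def dft_ops(n: int) -> int:
    """Direct DFT: n output bins, each an n-term sum, so about n * n multiply-adds."""
    return n * n


def fft_ops(n: int) -> int:
    """Radix-2 FFT proxy: log2(n) stages of roughly n operations each."""
    if n <= 0 or n & (n - 1):
        raise ValueError("radix-2 FFT needs a power-of-two length")
    stages = n.bit_length() - 1  # log2(n) for a power of two
    return n * stages


for n in (256, 1024, 4096):
    # point count, direct-DFT cost, FFT cost, and how many times cheaper the FFT is
    print(n, dft_ops(n), fft_ops(n), dft_ops(n) // fft_ops(n))
```

At 1024 points the direct sum needs roughly a hundred times more work, which mattered a great deal on 1960s and 1970s hardware.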

Here's an interesting mechanical device from over a century ago. It's pretty neat, I can only wonder why it wasn't more popular:

Albert Michelson's Harmonic Analyzer (book details)

Wow, the first reference I can find to the Fast Fourier Transform was 1965.

Pretty sure FFT analyzers came after that. I also shudder to think what it took in the '60s to build one.

I did get my first Fourier-based analyzer around 1976. It was based on a Reticon chip. Parts cost was around $1,800.00 for mine, without a microphone.

As usual, who said anything about the FFT? Do you actually think it gives a different answer than the Fourier integrals defined in 1822 (or earlier)? Big boner here, Ed. I did explicit Fourier integrals on sampled data all the time; computationally expensive, but all the theory was old stuff.

Did you actually read what was said: "The professional and academic Audio community of the time was still trying to come to grips with the Fourier transform"?

What makes this even more stupid is that Mr. Heyser's original work was all done in analog.
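The point that the FFT is only a faster way of evaluating the same discrete Fourier sum can be checked numerically. A minimal sketch in plain Python: a direct DFT next to a textbook recursive radix-2 (Cooley-Tukey) FFT, run on an arbitrary 16-sample test signal (the signal and its length are assumptions chosen for illustration). The two agree to floating-point rounding; the FFT changes the cost, not the answer.

```python
import cmath
import math


def dft(x):
    """Direct evaluation of the DFT sum: n outputs, each an n-term sum, so O(n^2)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def fft(x):
    """Recursive radix-2 FFT, O(n log n); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])  # DFT of even-indexed samples
    odd = fft(x[1::2])   # DFT of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddled = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


N = 16
# Hypothetical test signal: a sine in bin 3 plus a half-amplitude cosine in bin 5.
signal = [math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.cos(2 * math.pi * 5 * t / N)
          for t in range(N)]
slow = dft(signal)
fast = fft(signal)
max_err = max(abs(a - b) for a, b in zip(slow, fast))
print(f"largest difference between DFT and FFT bins: {max_err:.1e}")
```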


I have no idea why the same codec in decompression would be non-deterministic. Should be pretty easy to verify this.

x2. If the system is heavily burdened you might hit buffer underruns, but that's a different beast. And of course EMI.

* If streaming under high bandwidth demand, then yes, we can fully expect drops in bit rate, as content servers have found people prefer continuity to quality.

I thought it was pretty clear that the issue was repeatability and removing other issues that may cause a change in the audio.

As I understand it, you raise removing interference sources such as RF stages and associated OS processes.

How does this apply to an asynchronous USB DAC?

With a lossy compressed file, a lot of the sound is being recreated by the codec.

Huh, what does that mean?

I have seen nothing that suggests it will recreate exactly the same output every time. It would be an interesting exercise to explore, one I'm leaving to others.

Huh? So is decoding MP3, FLAC or WAV a random process, or are you saying the encoding process is random, or both?

Below is the frequency response of a headphone with and without a ferrite on the cable. The red trace (without the ferrite) is hidden under the teal trace.

For sine wave excitation, sure.


As usual, who said anything about the FFT? Do you actually think it gives a different answer than the Fourier integrals defined in 1822 (or earlier)? Big boner here, Ed. I did explicit Fourier integrals on sampled data all the time; computationally expensive, but all the theory was old stuff.

Did you actually read what was said: "The professional and academic Audio community of the time was still trying to come to grips with the Fourier transform"?

What makes this even more stupid is that Mr. Heyser's original work was all done in analog.

The use of Fourier analysis was fine in math, but until test equipment came into general use it was relegated to the back bench.

The first home run in the application, not surprisingly, was with the military. They used the Bruel and Kjaer offering: a faster unit for NATO countries and a slower model for Soviet bloc countries.

What Heyser was proud of was the non-classified use of the Time Energy Frequency method of analysis, rather than the more basic frequency analysis done more recently with FFT analyzers. But General Radio made an oscillator that was linked by a chain to the analyzer section. By sliding the link a bit you could allow for a frequency offset, but as it was not a linear or precision-speed drive, it could not provide the tracking needed to allow for the transducers' offsets.
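Assuming the offset in question is the propagation-delay offset used in Heyser's time delay spectrometry, it follows from a simple relation: if the oscillator sweeps at a constant rate S (Hz/s) and the sound takes τ seconds to reach the microphone, the direct sound arrives at a frequency S·τ below the oscillator's current frequency, so the analyzer must be offset by Δf = S·τ. A minimal sketch under the assumption of an ideal linear sweep (sweep rate, distance and speed of sound are illustrative values, not General Radio's or Heyser's figures). It also shows why a drive whose speed wanders defeats a fixed offset: the same Δf then corresponds to a different delay.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 °C


def tds_offset_hz(sweep_rate_hz_per_s, distance_m, c=SPEED_OF_SOUND_M_S):
    """Frequency offset between oscillator and tracking filter for a given path length.

    With a linear sweep, sound arriving after a delay tau was emitted when the
    oscillator was S * tau Hz lower, so the analyzer must lag by that much.
    """
    delay_s = distance_m / c
    return sweep_rate_hz_per_s * delay_s


def implied_delay_s(offset_hz, sweep_rate_hz_per_s):
    """Delay actually selected by a fixed offset at a given (possibly drifting) sweep rate."""
    return offset_hz / sweep_rate_hz_per_s


if __name__ == "__main__":
    offset = tds_offset_hz(1000.0, 3.43)  # hypothetical 1 kHz/s sweep, 3.43 m path
    print(round(offset, 3))               # about 10 Hz, i.e. a 10 ms delay
    for rate in (1000.0, 900.0, 1100.0):  # a drive whose speed wanders
        print(rate, round(implied_delay_s(offset, rate) * 1000, 2), "ms")
```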

I did see the B&K unit still in use at Bose when I did a factory visit.

The TEF unit became the pro sound industry standard almost from its introduction, until a number of folks offered smaller, lower-cost systems based on personal computers. TEF is still being used, as it allows virtual anechoic measurements.

And what were you analyzing with the Fourier transform? When did it even become possible to get 16-bit A/D accuracy at audio frequencies? In the late '70s I wasn't seeing truly linear 16-bit units.
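For scale, the textbook ceiling for an ideal N-bit converter driven by a full-scale sine is about 6.02·N + 1.76 dB. A minimal sketch of that arithmetic, assuming perfectly uniform quantization with noise spread evenly over one LSB; it does not model the integral or differential nonlinearity that the question about "real linear" units is pointing at, so a converter with 16-bit output codes could still fall well short of these figures.

```python
import math


def ideal_sine_snr_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit converter for a full-scale sine.

    Full-scale sine RMS = 2**(bits - 1) * q / sqrt(2); quantization noise RMS = q / sqrt(12),
    where q is one LSB. Their ratio is 2**bits * sqrt(1.5), i.e. about 6.02 * bits + 1.76 dB.
    """
    return 20 * math.log10(2 ** bits * math.sqrt(1.5))


if __name__ == "__main__":
    for n in (12, 14, 16):
        print(n, round(ideal_sine_snr_db(n), 2))
```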


As I understand it, you raise removing interference sources such as RF stages and associated OS processes.

How does this apply to an asynchronous USB DAC?

You were using a cell phone per your description.

Huh? So is decoding MP3, FLAC or WAV a random process, or are you saying the encoding process is random, or both?

I said WAV or FLAC will be very predictable, but I'm not confident that MP3 will give the same bits every time. It may, but I have never seen a discussion of MP3 "bit perfectness" before, given how compromised the lossy formats are.

For sine wave excitation, sure.

Except it was not from a sine wave. Those were measured using a 500 ms chirp, which is actually a pretty complex waveform. However, I'll go back tomorrow and record some music through the chain both ways. I can even use music to measure the response, but it's not that good a signal for measuring: poor SNR.
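A minimal sketch of how a chirp measurement recovers a frequency response: divide the spectrum of the output by the spectrum of the stimulus at each frequency of interest. The sample rate, sweep limits and the three-tap FIR standing in for the device under test are assumptions for illustration, not the actual measurement chain. Because the chirp puts energy at every frequency in its range, X(f) is never near zero there and the division is well conditioned; with music, some frequencies carry little energy at a given moment, so the same division gets noisy, which is the poor-SNR problem.

```python
import cmath
import math

FS = 8000                 # sample rate in Hz (assumed)
DURATION = 0.5            # 500 ms, matching the chirp length in the post
F0, F1 = 100.0, 3000.0    # sweep limits in Hz (assumed)
TAPS = [0.25, 0.5, 0.25]  # hypothetical device under test: a gentle FIR low-pass


def linear_chirp(fs, duration, f0, f1):
    """Linear sine sweep from f0 to f1 over `duration` seconds."""
    n = int(fs * duration)
    rate = (f1 - f0) / duration  # sweep rate in Hz/s
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * rate * t * t))
            for t in (i / fs for i in range(n))]


def fir(x, taps):
    """Full linear convolution, so the output tail is kept rather than truncated."""
    y = [0.0] * (len(x) + len(taps) - 1)
    for i, xi in enumerate(x):
        for j, h in enumerate(taps):
            y[i + j] += xi * h
    return y


def dtft(x, f, fs):
    """Discrete-time Fourier transform of a finite sequence at one frequency f (Hz)."""
    w = 2 * math.pi * f / fs
    return sum(v * cmath.exp(-1j * w * n) for n, v in enumerate(x))


def response_db(x, y, f, fs):
    """Estimated magnitude response |Y(f) / X(f)| in dB from stimulus x and output y."""
    return 20 * math.log10(abs(dtft(y, f, fs) / dtft(x, f, fs)))


def true_response_db(taps, f, fs):
    """Known magnitude response of the FIR stand-in, for comparison."""
    return 20 * math.log10(abs(dtft(taps, f, fs)))


if __name__ == "__main__":
    stimulus = linear_chirp(FS, DURATION, F0, F1)
    output = fir(stimulus, TAPS)
    for f in (500.0, 1000.0, 2000.0):
        print(f, round(response_db(stimulus, output, f, FS), 3),
              round(true_response_db(TAPS, f, FS), 3))
```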

I said WAV or FLAC will be very predictable, but I'm not confident that MP3 will give the same bits every time. It may, but I have never seen a discussion of MP3 "bit perfectness" before, given how compromised the lossy formats are.

I'm not sure what's going on with his obviously broken setup, but I did a binary comparison of an MP3 decompressed to WAV and confirmed the files were identical. I used LAME as the decoder. It seems completely deterministic as long as you don't add dither.
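The binary comparison described above is easy to reproduce. A minimal sketch, assuming the same MP3 has been decoded twice to WAV (for example with `lame --decode`) into two files; the file names are hypothetical. It hashes both files and, if they differ, reports the first differing byte offset. Comparing whole files also compares the WAV headers, so a decoder that wrote varying metadata into the header could show a difference even if the audio samples matched.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """SHA-256 of a file, read in 64 KiB chunks so large WAVs need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest()


def first_difference(path_a, path_b):
    """Byte offset of the first mismatch, or None if the files are identical."""
    a = Path(path_a).read_bytes()
    b = Path(path_b).read_bytes()
    for offset, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return offset
    if len(a) != len(b):
        return min(len(a), len(b))  # one file is a truncated copy of the other
    return None


# Hypothetical usage, with two decodes of the same MP3:
# print(sha256_of("decode_run1.wav") == sha256_of("decode_run2.wav"))
# print(first_difference("decode_run1.wav", "decode_run2.wav"))
```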

You were using a cell phone per your description.

USB DAC, cell phone in normal mode or airplane mode: same subjective difference with the ferrite, IME.

I said WAV or FLAC will be very predictable, but I'm not confident that MP3 will give the same bits every time. It may, but I have never seen a discussion of MP3 "bit perfectness" before, given how compromised the lossy formats are.

How can audio decoding be 'very predictable'? Surely it is perfectly predictable?

Except it was not from a sine wave. Those were measured using a 500 ms chirp, which is actually a pretty complex waveform. However, I'll go back tomorrow and record some music through the chain both ways. I can even use music to measure the response, but it's not that good a signal for measuring: poor SNR.

So perhaps that is the clue, i.e. changes in the 'poor SNR' are the difference signal to look for?
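One common way to look for such a difference signal is a null test: subtract one capture from the other and express the residual relative to the original. A minimal sketch, assuming the two recordings (with and without the ferrite) are already sample-aligned and level-matched; real captures would first need time alignment and clock-drift correction, which this does not attempt. The 0.1 % gain change in the usage example is hypothetical.

```python
import math


def rms(x):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def null_depth_db(reference, other):
    """Level of the difference signal (reference - other) relative to the reference, in dB.

    More negative means a deeper null, i.e. the two captures are more alike.
    Assumes both captures are already time-aligned and level-matched.
    """
    if len(reference) != len(other):
        raise ValueError("captures must have the same length")
    residual = rms([a - b for a, b in zip(reference, other)])
    if residual == 0:
        return float("-inf")  # bit-identical captures
    return 20 * math.log10(residual / rms(reference))


if __name__ == "__main__":
    fs = 48000
    ref = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs // 10)]
    quieter = [0.999 * v for v in ref]  # hypothetical 0.1 % gain change
    print(round(null_depth_db(ref, quieter), 2))
```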

I thought it was pretty clear that the issue was repeatability and removing other issues that may cause a change in the audio. With a lossy compressed file, a lot of the sound is being recreated by the codec. I have seen nothing that suggests it will recreate exactly the same output every time. It would be an interesting exercise to explore, one I'm leaving to others.

Below is the frequency response of a headphone with and without a ferrite on the cable. The red trace (without the ferrite) is hidden under the teal trace.

Why do you rise to this stuff? Indeed, why do I?

The dog needs walking.

😉

So perhaps that is the clue, i.e. changes in the 'poor SNR' are the difference signal to look for?

How's the Goop doing? 🙂
