(...) for which the original quantization noise images are maintained and still need to be filtered sharply (like in NOS, but by using a "digital" filter).(...)

Most of this thread is about imaging, which has nothing to do with quantization noise.

When you have an oversampled digital signal, you can put it in a noise shaper or sigma-delta modulator that rounds it to a lower word length, for example to reduce the word length requirements of a DAC. This rounding results in quantization noise, but most of this noise is pushed outside the band of interest by the noise shaper/sigma-delta.

This works best with large oversampling ratios, but the type of filter used in the oversampling process has little to do with it. That is, if you dislike normal digital filters, you can in principle use a zero-order hold (repeating samples) for the oversampling.

The sigma-delta usually has a low-pass signal transfer function of its own, but you can design it for a flat signal transfer function if you like.
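Here is a minimal Python sketch of the idea above. It assumes the simplest possible case: a first-order, 1-bit sigma-delta modulator fed with zero-order-hold oversampled data. The function names are hypothetical, and real DAC modulators are higher order and often multibit. The crude block-average "decimator" only stands in for the analog reconstruction filter, to show that the 1-bit stream still carries the original signal.

```python
import math

def zoh_oversample(x, factor):
    """Oversample by repeating each input sample `factor` times (zero-order hold)."""
    return [s for s in x for _ in range(factor)]

def sigma_delta_1bit(x):
    """First-order sigma-delta modulator producing a +/-1 output stream.

    Assumes input samples lie in [-1, 1]. The integrator accumulates the
    difference between the input and the previous 1-bit output, so the
    quantization error ends up shaped by (1 - z^-1), i.e. pushed toward
    high frequencies.
    """
    integrator = 0.0
    prev_out = 0.0
    out = []
    for s in x:
        integrator += s - prev_out
        prev_out = 1.0 if integrator >= 0.0 else -1.0
        out.append(prev_out)
    return out

OSR = 64
signal = [0.5 * math.sin(2 * math.pi * n / 200) for n in range(200)]
bits = sigma_delta_1bit(zoh_oversample(signal, OSR))

# Crude decimator: average each group of OSR output bits.
recovered = [sum(bits[k * OSR:(k + 1) * OSR]) / OSR for k in range(len(signal))]
max_error = max(abs(r - s) for r, s in zip(recovered, signal))
```

Because the integrator stays bounded, each block average differs from the held input sample by at most a few parts in OSR. Raising the ratio therefore tightens the match, which is why this works best with large oversampling ratios.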

Hi

... or to print our 16/44.1 compact discs for the mass market!

At least, my understanding is that this is done in the ADC during the recording/mixing process. I believe studios now mostly have 88.2/96 kHz to 176.4/192 kHz analog-to-digital gear.

So when they reduce the word length in order to get 44.1 kHz for compact disc printing, can one assume we already get rid of most of the quantization noise? Or can a further upsampling process during playback still improve things?

I don't want to be off topic, sorry if I am. It seems to me it makes sense to talk about what we expect from an oversampling playback operation in relation to the word length of the ADC recording process. At least it could perhaps make sense for the OP's project.

Or can we assume most of today's material comes from 44.1 kHz ADC recordings, hence the theoretical discussion here? (and hence the need for upsampling in our real-life DACs today!)


Btw, this is puzzling me: "

Pre-emphasis

Main article: Emphasis (telecommunications)

Some CDs are mastered with pre-emphasis, an artificial boost of high audio frequencies. The pre-emphasis improves the apparent signal-to-noise ratio by making better use of the channel's dynamic range. On playback, the player applies a de-emphasis filter to restore the frequency response curve to an overall flat one. Pre-emphasis time constants are 50µs and 15µs (9.49 dB boost at 20 kHz), and a binary flag in the disc subcode instructs the player to apply de-emphasis filtering if appropriate. Playback of such discs in a computer or 'ripping' to wave files typically does not take into account the pre-emphasis, so such files play back with a distorted frequency response."

from: Compact Disc Digital Audio - Wikipedia
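The 9.49 dB figure follows from the two time constants. The sketch below assumes that de-emphasis is the first-order shelf H(s) = (1 + s·15 µs)/(1 + s·50 µs), the inverse of the pre-emphasis boost, with corner frequencies of about 3.18 kHz and 10.6 kHz. The helper name is hypothetical.

```python
import math

TAU_1 = 50e-6  # time constant of the de-emphasis pole (seconds)
TAU_2 = 15e-6  # time constant of the de-emphasis zero (seconds)

def deemphasis_gain_db(f_hz):
    """Magnitude in dB of H(s) = (1 + s*TAU_2) / (1 + s*TAU_1) at s = j*2*pi*f_hz."""
    w = 2 * math.pi * f_hz
    num = math.hypot(1.0, w * TAU_2)
    den = math.hypot(1.0, w * TAU_1)
    return 20 * math.log10(num / den)

print(round(deemphasis_gain_db(20_000), 2))  # -9.49, i.e. a 9.49 dB pre-emphasis boost
```

At very high frequencies the shelf flattens out at 20·log10(15/50), about -10.46 dB. That is why the boost at 20 kHz is slightly smaller than the full shelf depth.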


This is now rare or nonexistent. IIRC there is a database somewhere that lists titles with the pre-emphasis bit set. Most folks here generally agree they have almost never come across one. BTW, word length has nothing to do with sample rate.

Thanks, Scott Wurcer... I was about to cry looking at my HDD library (ripped).

Oops... word length is about the number of bits... my bad.


Truly off topic.

I own a few with PE.

//


You upsampled Scott Wurcer's thought...

... I'll stop reading Wikipedia... your fault!

Is the previous post truly off topic as well?


As far as I know, most studio recordings are indeed made at higher sample rates and higher word lengths and then need to be decimated and requantized for CD release.

For the requantization, to some extent you can use noise shaping without oversampling. You then shape the noise such that most of it occurs at frequencies where the human auditory system is least sensitive.

For the case of a 44.1 kHz sample rate, you can shift the noise to low frequencies and to the band between 18 kHz or so and 22.05 kHz. It is not nearly as effective as noise shaping of an oversampled signal, but it can improve the weighted noise by a bit or two at the expense of higher unweighted noise.
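Here is a minimal sketch of requantization with noise shaping at the original sample rate. It assumes TPDF dither and the simplest first-order error-feedback loop, which makes the output error e[n] - e[n-1]. This loop only pushes noise toward Fs/2. The psychoacoustically weighted shapers described above use higher-order filters instead. Samples are expressed in units of the 16-bit LSB, the helper names are hypothetical, and the block-sum probe is only a crude stand-in for a low-frequency noise measurement.

```python
import math
import random

def requantize(x, shaped, seed=0):
    """Round samples (in target-LSB units) to integers with TPDF dither.

    With shaped=True, the previous total quantization error is subtracted
    from the next input (first-order error feedback), so the output error
    becomes e[n] - e[n-1], a highpass shape.
    """
    rng = random.Random(seed)
    err_prev = 0.0
    out = []
    for s in x:
        v = s - err_prev if shaped else s
        dither = rng.random() - rng.random()   # TPDF, +/-1 LSB peak
        y = round(v + dither)
        err_prev = y - v
        out.append(y)
    return out

def low_band_power(err, block=64):
    """Mean square of non-overlapping block sums: a crude low-frequency probe."""
    sums = [sum(err[i:i + block]) for i in range(0, len(err) - block + 1, block)]
    return sum(s * s for s in sums) / len(sums)

FS = 44_100
# A 997 Hz tone on a 24-bit grid, expressed in 16-bit LSB units.
source = [round(256 * 10_000 * math.sin(2 * math.pi * 997 * n / FS)) / 256 for n in range(FS)]

errors = {}
for shaped in (False, True):
    out = requantize(source, shaped)
    errors[shaped] = [y - s for y, s in zip(out, source)]

lf_plain = low_band_power(errors[False])
lf_shaped = low_band_power(errors[True])
total_plain = sum(e * e for e in errors[False]) / FS
total_shaped = sum(e * e for e in errors[True]) / FS
```

The low-band probe comes out far lower for the shaped version, while its total (unweighted) error power is roughly double. This mirrors the trade-off described above.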

Nope.

Could you elaborate? There are no DSP or FPGA algorithms that cannot be run/simulated on a CPU; the key is real time/latency, and cost. My example of a 32-million-point FIR might not be doable at a reasonable cost, though BTW it could be done by one of the massively parallel GPU engines they use for CGI. At 96 kHz, a 32M-point FIR represents about 6 min of latency, which is probably not acceptable in some applications.
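Scott's latency figure is easy to check. The sketch below assumes the tap count refers to the rate the filter runs at. The full impulse response spans N/Fs seconds. For a linear-phase (symmetric) FIR, the delay the listener actually sees is half of that, because the filter has to look ahead by half its length. The helper name is hypothetical.

```python
def fir_timing(num_taps, fs_hz):
    """Return (impulse-response span, linear-phase group delay) in seconds."""
    span = num_taps / fs_hz
    group_delay = (num_taps - 1) / 2 / fs_hz
    return span, group_delay

span, delay = fir_timing(32_000_000, 96_000)
print(f"span {span / 60:.1f} min, linear-phase delay {delay / 60:.1f} min")
# span 5.6 min, linear-phase delay 2.8 min
```

A minimum-phase design would cut the delay, but it also changes the phase response. That is a different trade-off from simply shortening the filter.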


Correct, Marcel, as usual 😉

You are right. Two totally different concepts.

Let me re-summarize:

1) Sample rate = Fs

2) Nyquist bandwidth = Fs/2

3) Image components are "separated" in frequency space and are "mirrored copies" of the original signal at repeating intervals, at integer multiples of the sample rate (Fs).

4) The filter has to cut everything above the Nyquist bandwidth (Fs/2) to remove the mirrored components of the original signal.

5) Oversampling by a factor X moves the first image spectrum to an X times higher frequency. The sample rate is now X*Fs and the Nyquist bandwidth is now X*Fs/2.

6) The quantization noise is "equally distributed" across the Nyquist bandwidth (Fs/2).

7) Oversampling by a factor X results in the quantization noise being pushed out towards X*Fs/2.

8) By increasing the sampling rate, the same quantization noise is spread over a larger Nyquist bandwidth.

9) Oversampling by a factor X lowers the level of the quantization noise to 1/X of what it was before.

10) Oversampling by X allows a simpler and less critical analog lowpass filter because:

a) the filter has to act in an X times higher frequency window (due to point 5)

b) oversampling by definition performs filtering mainly in the digital domain, lightening the requirements of the analog filter

c) the quantization noise to be cleaned up is X times lower (due to points 8 and 9)

11) The digital filter mainly removes the "mirrored copies" of the original signal; the following analog filter just has to suppress the remaining noise ("quantization"?) up to around X*Fs/2.

Is this a correct simplified view?


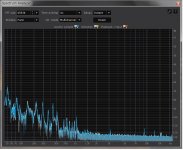

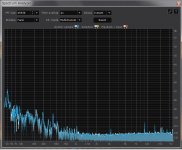

This is off topic, but for your reference: there was a little surprise about pre-emphasis last month. I was under the impression that PE was already gone and there was no need to care about it. But I found that my music file ripped from "Afanassiev plays Mozart" (COCO84057, released 2005) had PE. I couldn't hear the difference even though it had an HF boost. The 1st pic is correct. The 2nd is incorrect, and it is what I used to listen to with satisfaction. PE may be an assistant for my aging ears. I guess Wikipedia has some truth.

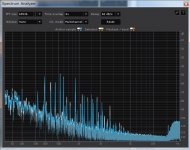

But there is a CD I don't want to listen to again because of the "hot" HF. It is the very disc MarcelvdG wrote about in #128. "Souvenir" by Suwanai Akiko (PHCP9675, released 1998) has "noise-shaping" even though it's sampled at 44.1 kHz. The 3rd pic shows an artificial increase from 15 kHz, which is the consequence of noise-shaping without oversampling. Many CDs had such noise-shaping in those days. I guess the engineers were concerned about requantizing from 24 bit to 16 bit. It was a period of trial and error in the late '90s. I'm sure this one was a failure. I prefer listening without DE to "noise-shaping", though some people prefer "noise-shaping."


Hi Scott, the commercial oversampling DAC chips only have a few hundred taps. Chord DACs, which are advertised as having extremely long filters implemented in an FPGA, still have only 60000 taps or so. With Equilibrium we can have 260000 taps at a reasonable latency (a few seconds) on a ubiquitous Intel CPU. It might not be suitable for video playback, but it is perfectly fine for audiophile use, I guess.


Depends on what quantization noise you write about. The density of quantization noise that's already there in the original recording is not reduced by oversampling. It's treated the same as the desired signal, as the oversampler just sees an input signal and doesn't know what part of it is desired and what part of it is the quantization noise of the original recording.

If you have to requantize after the oversampling, then this requantization noise is indeed spread over a larger bandwidth (and it can be shaped, if desired).
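For the requantization case, the textbook arithmetic goes as follows. The sketch assumes white (unshaped) requantization noise of power Δ²/12, spread evenly from 0 to X·Fs/2, and a full-scale sine. As noted above, it does not apply to noise already present in the recording. Each doubling of the oversampling ratio buys about 3 dB, so 4x buys roughly one bit, and noise shaping does much better than this. The helper name is hypothetical.

```python
import math

def inband_snr_db(bits, osr):
    """Full-scale-sine SNR in the original band (0..Fs/2) after requantizing at osr * Fs.

    Assumes white requantization noise of power delta^2 / 12, spread evenly
    from 0 to osr * Fs / 2, so only 1/osr of it lands in the original band.
    """
    delta = 2.0 / 2 ** bits          # step size for a +/-1 full scale
    noise_total = delta ** 2 / 12.0  # total requantization noise power
    noise_inband = noise_total / osr
    signal = 0.5                     # power of a full-scale sine (amplitude 1)
    return 10 * math.log10(signal / noise_inband)

for osr in (1, 4, 16, 64):
    print(osr, round(inband_snr_db(16, osr), 2))
```

For osr = 1 this reproduces the familiar 6.02·N + 1.76 dB rule, about 98.09 dB for 16 bits.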

Again, noise shaping has nothing to do with pre-emphasis. PE is a pre- and post-manipulation of the "user data" carried by a Red Book CD. The standard supports a flag to indicate whether PE is present, so that a filter (f = 5600 Hz, Q = 0.485, gain = -10.1 dB, or 50/15 µs) can be applied to what comes out of the D/A process. It can be done in the digital domain but was typically done as a switch in the analogue filter.

//
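As an illustration of the digital option, here is a sketch of a 50/15 µs de-emphasis filter made with the bilinear transform, assuming H(s) = (1 + s·15 µs)/(1 + s·50 µs). It is hypothetical and uses no prewarping, so its response drifts from the analogue curve near Fs/2. It is also not the shelf parameterisation quoted above.

```python
import cmath
import math

TAU_1 = 50e-6  # de-emphasis pole time constant (s)
TAU_2 = 15e-6  # de-emphasis zero time constant (s)

def deemphasis_coeffs(fs):
    """First-order IIR coefficients (b0, b1, a1) via the bilinear transform, no prewarping."""
    k = 2.0 * fs
    a0 = 1.0 + TAU_1 * k
    b0 = (1.0 + TAU_2 * k) / a0
    b1 = (1.0 - TAU_2 * k) / a0
    a1 = (1.0 - TAU_1 * k) / a0
    return b0, b1, a1

def response(coeffs, f, fs):
    """Complex response H(z) = (b0 + b1 z^-1) / (1 + a1 z^-1) at frequency f."""
    b0, b1, a1 = coeffs
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    return (b0 + b1 * z_inv) / (1.0 + a1 * z_inv)

def filter_block(coeffs, x):
    """Direct form I: y[n] = b0*x[n] + b1*x[n-1] - a1*y[n-1]."""
    b0, b1, a1 = coeffs
    x_prev = y_prev = 0.0
    y = []
    for s in x:
        out = b0 * s + b1 * x_prev - a1 * y_prev
        y.append(out)
        x_prev, y_prev = s, out
    return y

coeffs = deemphasis_coeffs(44_100)
```

By construction the gain is exactly 1 at DC and exactly 15/50 = 0.3 at Fs/2, where the bilinear transform maps the analogue response at infinite frequency.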



Indeed, but did anyone claim or suggest otherwise?

Oversampling is merely a tool to make a digital filter useful. The "disposable income" of a digital filter running at Fs is up to 0.5*Fs, i.e., up to 24 kHz with 48 kHz sampling. This means that you can't remove the 1st image, the 2nd, the 3rd..., whatever filter length you have. It's meaningless. The only way to boost your disposable income is to make Fs larger. If you use 8x oversampling, you can remove everything up to 192 kHz, and the post analog filter can be a soft one. A digital filter at 1x (no oversampling) can remove no images, because it has images itself. In other words, a digital filter's frequency response is a periodic function. That's all.🙂
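The periodicity is easy to see numerically. This sketch assumes upsampling by zero insertion, with no filter at all, of a 1 kHz tone sampled at 48 kHz. At the new 192 kHz rate, the spectrum shows the tone plus equally strong images at 47, 49 and 95 kHz. Removing those images is the job of a digital filter running at X·Fs. The helper names are hypothetical.

```python
import cmath
import math

def zero_stuff(x, factor):
    """Upsample by inserting factor-1 zeros after every sample (no filtering)."""
    out = []
    for s in x:
        out.append(s)
        out.extend([0.0] * (factor - 1))
    return out

def dft_bin_magnitude(x, freq_hz, fs_hz):
    """Magnitude of the DFT of x evaluated at a single frequency."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq_hz * n / fs_hz) for n, s in enumerate(x))
    return abs(acc)

FS = 48_000
X = 4
F0 = 1_000
x = [math.cos(2 * math.pi * F0 * n / FS) for n in range(480)]  # 10 whole cycles
y = zero_stuff(x, X)                                            # now at X * FS

for f in (F0, FS - F0, FS + F0, 2 * FS - F0):
    print(f, round(dft_bin_magnitude(y, f, X * FS), 3))
```

All four lines print the same magnitude, while a frequency such as 10 kHz shows essentially nothing. The images are exact copies, spaced at the original Fs.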

This is indeed an interesting topic. I have to confess that I really thought I had a correct idea of what is going on in PCM and OS. After reading this thread, I'm not so sure anymore.

And I don't think anyone has yet given that super clear, explain-to-my-mother kind of description of how it really works.

Pictures would help, I suppose. Using 44.1 and picturing, as a start, something like...

//


@ygg-it,

you have to keep in mind that the image spectra are a result of the time sampling process, while the so-called quantization noise is a result of the quantization process, due to the limited number of bits and hence the limited number space. An additional contribution comes from the zero-order hold, if one is used.
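The zero-order-hold contribution can be quantified with its sinc response. This sketch assumes an ideal hold at the DAC output rate. The in-band droop at 20 kHz is about 3.2 dB at 44.1 kHz but becomes negligible at 8x. The same sinc curve has nulls at multiples of the hold rate, so it also partially attenuates the images. The helper name is hypothetical.

```python
import math

def zoh_gain_db(f_hz, fs_hz):
    """Gain of a zero-order hold at rate fs_hz: |sin(pi f/fs) / (pi f/fs)| in dB."""
    x = math.pi * f_hz / fs_hz
    return 0.0 if x == 0 else 20 * math.log10(abs(math.sin(x) / x))

for fs in (44_100, 8 * 44_100):
    print(fs, round(zoh_gain_db(20_000, fs), 2))
```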

"5) Oversampling with factor X, moves the first image spectrum to X-time higher frequency. Sample rate is now X*Fs and the Nyquist bandwidth is now X*Fs/2."

No, but the digital lowpass filter tries to remove all image spectra from Fos up to (X-1) x Fos (Fos = original sampling rate).

In reality, the digital lowpass filter will not be able to remove the image spectra completely, only attenuate them (by how much is given by the so-called stopband attenuation).

IMO the term "new" Nyquist bandwidth isn't suitable, as the original audio bandwidth is still the same as before; but the quantization noise will be spread out over a greater bandwidth, so as a result the noise level in the audio band (0 - 20 kHz) will be lower.

"11) Digital filter mainly removes the "mirrored copies" of the original signal; the following Analog filter has just to suppress remaining noise ("quantization"?) till around X*Fs/2"

As said above, the digital lowpass filter leaves the image spectra around the new sampling frequency of X x Fos and its multiples (and, below that, the residue due to non-perfect filtering, i.e. the limited stopband attenuation).

So the now gentler analog lowpass filter has to remove the leftover image spectra and provides some additional attenuation for the residue.
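Here is a sketch of that division of labour. It assumes X = 4 and a 127-tap Blackman-windowed-sinc lowpass at 192 kHz with its cutoff at 24 kHz. Real oversampling filters are usually multistage and much sharper. The filter gain is set to X to compensate for zero insertion. The first image at 47 kHz is attenuated by the stopband rather than removed. What remains around 192 kHz and its multiples is left for the analog filter. The helper names are hypothetical.

```python
import cmath
import math

def lowpass_fir(num_taps, cutoff_hz, fs_hz, gain=1.0):
    """Blackman-windowed sinc lowpass FIR; odd num_taps gives a linear-phase filter."""
    m = num_taps - 1
    fc = cutoff_hz / fs_hz
    taps = []
    for n in range(num_taps):
        k = n - m / 2
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.42 - 0.5 * math.cos(2 * math.pi * n / m) + 0.08 * math.cos(4 * math.pi * n / m)
        taps.append(ideal * window)
    scale = gain / sum(taps)  # normalise the DC gain to `gain`
    return [t * scale for t in taps]

def response_db(taps, f_hz, fs_hz):
    """Magnitude response of the FIR at one frequency, in dB."""
    h = sum(t * cmath.exp(-2j * math.pi * f_hz * n / fs_hz) for n, t in enumerate(taps))
    return 20 * math.log10(abs(h))

FS_IN = 48_000
X = 4
FS_OUT = X * FS_IN
h = lowpass_fir(127, cutoff_hz=FS_IN / 2, fs_hz=FS_OUT, gain=X)

# Levels relative to the nominal gain X: in-band tone vs. its first image.
passband = response_db(h, 1_000, FS_OUT) - 20 * math.log10(X)
image = response_db(h, FS_IN - 1_000, FS_OUT) - 20 * math.log10(X)
```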

