Can someone explain the relationship between an output transformer's (OPT's) power rating and the amplifier's output power? Specifically, is it better to use an OPT whose wattage rating is greater than the output power of the amplifier? What would happen if you used an OPT rated at 120 watts in an amplifier rated at 200 watts?

You'd get core saturation, and hence distortion, most noticeably at low frequencies in typical listening use.
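To put rough numbers on that: the transformer EMF equation gives the peak core flux as B = V_rms / (4.44 f N A), and the primary voltage needed to deliver power P into a reflected load Z is sqrt(P·Z). The sketch below assumes a hypothetical OPT (2000 Ω plate-to-plate, 2000 primary turns, 20 cm² core, and a chosen 1.2 T flux limit); none of these figures come from this thread. Pushing 200 W instead of 120 W raises the flux by sqrt(200/120) ≈ 1.29 at every frequency, so the lowest frequency that stays under the limit rises by the same factor.

```python
import math

# Hypothetical OPT parameters, for illustration only (not from any real datasheet).
Z_PRIMARY = 2000.0   # reflected plate-to-plate load, ohms
N_TURNS = 2000       # total primary turns
A_CORE = 0.002       # effective core cross-section, m^2 (20 cm^2)
B_MAX = 1.2          # assumed design limit for peak flux density, tesla


def primary_vrms(power_w: float, z_primary: float = Z_PRIMARY) -> float:
    """RMS voltage across the whole primary when delivering power_w into z_primary."""
    return math.sqrt(power_w * z_primary)


def peak_flux(power_w: float, freq_hz: float) -> float:
    """Peak flux density from the sinusoidal EMF equation V = 4.44 f N A B."""
    return primary_vrms(power_w) / (4.44 * freq_hz * N_TURNS * A_CORE)


def lowest_clean_freq(power_w: float, b_max: float = B_MAX) -> float:
    """Lowest frequency at which power_w can be passed without exceeding b_max."""
    return primary_vrms(power_w) / (4.44 * N_TURNS * A_CORE * b_max)


for p in (120.0, 200.0):
    print(f"{p:5.0f} W: B at 20 Hz = {peak_flux(p, 20.0):.2f} T, "
          f"lowest clean frequency = {lowest_clean_freq(p):.1f} Hz")
```

With these made-up numbers, 120 W stays under the flux limit down to roughly 23 Hz, while 200 W through the same iron only gets down to about 30 Hz.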

Best to have a bigger OPT than not, especially if LF is important.

_-_-bear

Here you go: output-trans-theory

Unlike in SS amps, the power rating of a tube amp is never constant across the audio band.

It is lower at both ends of the 20 Hz to 20 kHz band.

Most tube power ratings are quoted at 1 kHz, so a traffo (transformer) rated at 120 watts from 30 Hz to 16 kHz may only be good for 60 watts across the full 20 Hz to 20 kHz.
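A simple way to model how the available power falls below the rated low-frequency corner, assuming core saturation is the only limit: flux ∝ V/f and V ∝ √P, so holding flux at its limit gives P ∝ f². This sketch rests on that single assumption, ignores the high-frequency limit entirely, and `max_power_at` is a hypothetical helper.

```python
def max_power_at(freq_hz: float, rated_power_w: float, rated_low_hz: float) -> float:
    """Saturation-limited power below the rated low-frequency corner.

    Assumes flux ~ V/f and V ~ sqrt(P), so at constant (limiting) flux P ~ f^2.
    Above rated_low_hz the full rating is assumed available (HF limits ignored).
    """
    if freq_hz >= rated_low_hz:
        return rated_power_w
    return rated_power_w * (freq_hz / rated_low_hz) ** 2


# Hypothetical traffo rated at 120 W down to 30 Hz.
for f in (30.0, 25.0, 20.0):
    print(f"{f:4.0f} Hz: {max_power_at(f, 120.0, 30.0):6.1f} W")
```

Under this model, a traffo good for 120 W down to 30 Hz manages about 53 W at 20 Hz, in the same ballpark as the halving described above.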

Then there is also the 1-watt frequency response, which is much wider at that power level.
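Inverting the same P ∝ f² relation shows why the 1-watt response is so much wider: the saturation-limited corner scales with √P. Assuming a hypothetical OPT that reaches its flux limit at 120 W and 30 Hz, at 1 W that corner drops by a factor of √120 ≈ 11, to under 3 Hz. At low levels the bass roll-off is therefore set by the primary inductance rather than by saturation. `saturation_corner` is an illustrative helper, not from any library.

```python
import math


def saturation_corner(power_w: float, rated_power_w: float, rated_low_hz: float) -> float:
    """Lowest frequency at which power_w stays within the flux limit that
    rated_power_w just reaches at rated_low_hz (assumes P ~ f^2 at the limit)."""
    return rated_low_hz * math.sqrt(power_w / rated_power_w)


# Hypothetical OPT: flux limit reached at 120 W, 30 Hz.
for p in (120.0, 10.0, 1.0):
    print(f"{p:5.0f} W: saturation-limited corner = {saturation_corner(p, 120.0, 30.0):5.2f} Hz")
```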

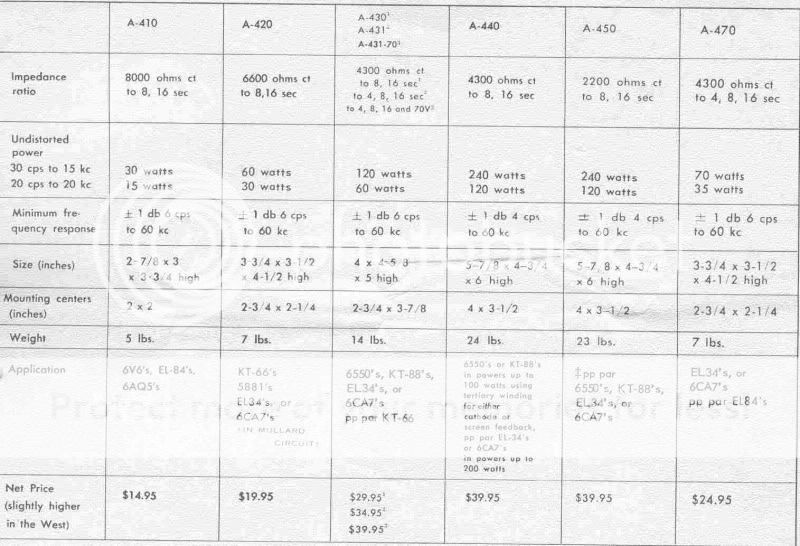

A blast from the past: Dynaco irons.

We have to live with the reality of things. Then again, OPTs can be designed to any spec if one wishes; those are Dynaco offerings, and they were considered very good at the time. Patrick Turner has an excellent exposition on OPTs on his website. Read it carefully and you will understand why.

Thanks, Tony. That's kinda depressing: undistorted power drops to half at the frequency extremes.

The reason is that the OPT primary's reactance (2πfL) drops as frequency decreases, and the core flux needed for a given output voltage rises, pushing the core toward saturation. OPT design is more of an art than a science: a compromise, because the characteristics work against each other. Norman Crowhurst wrote many fine articles about OPTs.
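The primary's reactance is X_L = 2πfL, so it falls in proportion to frequency. At low frequencies the primary inductance shunts the reflected load, and the -3 dB point is where X_L equals the source resistance and reflected load in parallel. The values below (50 H primary, 4 kΩ plate-to-plate source resistance, 2 kΩ reflected load) are hypothetical, chosen only to show the arithmetic.

```python
import math


def primary_reactance(freq_hz: float, l_primary_h: float) -> float:
    """Inductive reactance of the primary winding: X_L = 2*pi*f*L (ohms)."""
    return 2.0 * math.pi * freq_hz * l_primary_h


def lf_corner(l_primary_h: float, r_source: float, z_reflected: float) -> float:
    """-3 dB low-frequency corner: where X_L equals r_source || z_reflected."""
    r_par = r_source * z_reflected / (r_source + z_reflected)
    return r_par / (2.0 * math.pi * l_primary_h)


# Hypothetical values: 50 H primary, 4 k source resistance, 2 k reflected load.
for f in (100.0, 20.0, 5.0):
    print(f"{f:5.0f} Hz: X_L = {primary_reactance(f, 50.0):8.0f} ohms")
print(f"-3 dB corner: {lf_corner(50.0, 4000.0, 2000.0):.2f} Hz")
```

With those numbers the corner lands a little above 4 Hz; halving the primary inductance doubles it.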