> The problem here is that "Default_SS_App" and "mySS_uc_App" are not the same.

Is it a hypothesis that the above difference is causing a change in the sound, or have you proven that it is the cause?

I'm wondering if your computer could be injecting EMI/RFI over one or both of the interfaces. When you say the sound is more clear and has a better soundstage in one case, sometimes jitter can create a perceptual effect like that. Maybe EMI/RFI can cause it too.

The other thing I'm wondering about is whether any code will run without sounding different, or is it only your particular code?

Also wondering if the problem was always there, or did it start happening at some particular point in time?

Regarding phase noise, in some types of equipment close-in phase noise has different practical effects as opposed to far-out phase noise. Therefore, a distinction is sometimes made between the two. Also, phase noise measurement gear often works using different technology from jitter measurement gear. Conversion between the two types of measurements is not always possible, although phase noise can be used to estimate jitter, IIRC. Some info at: https://www.analog.com/media/en/training-seminars/tutorials/MT-008.pdf
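For anyone who wants to try the phase-noise-to-jitter estimate mentioned above, here is a minimal Python sketch. It assumes the phase noise is given as a handful of SSB points in dBc/Hz that are joined by straight lines on a log-frequency axis, and it integrates them to an RMS jitter figure. The function name, the example profile, and the 24.576 MHz clock are made up for illustration; they are not measurements from this thread.

```python
import math

def rms_jitter_from_phase_noise(profile, f0_hz):
    """Integrate an SSB phase-noise profile and return RMS jitter in seconds.

    profile: list of (offset_hz, dBc_per_Hz) points, sorted by offset.
    Between points the curve is assumed straight on a log-frequency axis.
    """
    area = 0.0  # integrated SSB noise power relative to the carrier
    for (f1, l1), (f2, l2) in zip(profile, profile[1:]):
        # Segment slope expressed as a power-law exponent: L(f) ~ f**a
        a = (l2 - l1) / (10.0 * math.log10(f2 / f1))
        p1 = 10.0 ** (l1 / 10.0)
        if abs(a + 1.0) < 1e-12:
            # A 1/f segment integrates to a logarithm
            area += p1 * f1 * math.log(f2 / f1)
        else:
            area += p1 * f1 * ((f2 / f1) ** (a + 1.0) - 1.0) / (a + 1.0)
    phase_rms_rad = math.sqrt(2.0 * area)  # factor 2 counts both sidebands
    return phase_rms_rad / (2.0 * math.pi * f0_hz)

# Hypothetical profile for an audio master clock (illustrative values only)
example_profile = [(10.0, -90.0), (100.0, -120.0), (1e3, -140.0),
                   (1e4, -150.0), (1e5, -150.0)]
jitter_s = rms_jitter_from_phase_noise(example_profile, 24.576e6)
print(f"Estimated RMS jitter: {jitter_s * 1e12:.2f} ps")
```

The result depends strongly on the lower integration limit, which is one reason close-in and far-out phase noise are usually discussed separately.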

> Phase noise is simply jitter converted from time domain to freq domain.

Yes, but the interesting phase noise for audio is close-in phase noise, which cannot be seen on ASR J-test measurements.

https://en.m.wikipedia.org/wiki/Phase_noise

> Is it a hypothesis that above difference is causing a change in the sound, or have you proven that it is the cause?

As always in cases like this, the level is close to the noise level of "do I imagine this or not?".

It is not super-obvious, but a few of us golden ears here agree that something fishy is going on.

Enough not to wave it aside; a measurement would be very interesting and worth the effort.

So what can be the cause, and what can we measure?

Elusive impressions like this are difficult to handle, and you always have to remember our front end, the signal processing in our heads.

My favourite theory is that if the sound is free from imperfections, e.g. as in live music, our built-in sound processor can decode the information without too much effort. Stereo image and depth are perceived properly, and we can hear the placement of the musicians with our eyes closed.

When the sound is garbled in any way, our brain may have problems translating the information: less "processing power" is available to build the internal model of the performance, and the audible impression is flat and boring. Our perception of the problem may have very little to do with what is actually the technical problem. Even worse, the description of the problem may be different for different people.

This is my view, and not everyone (indeed, perhaps very few) will agree with it.

Regarding the target of the measurement, we have to be open minded. The evidence will be highly circumstantial.

If I find a difference, I may be able to pinpoint where the problem is, but I will not necessarily be able to explain why it is causing the audible effect.

> I'm wondering if your computer could be injecting EMI/RFI over one or both of the interfaces. When you say the sound is more clear and has a better soundstage in one case, sometimes jitter can create a perceptual effect like that. Maybe EMI/RFI can cause it too.

Possibly, but I would be surprised if this is the case. But it cannot be ruled out.

> The other thing I'm wondering about is whether any code will run without sounding different, or is it only your particular code?

This is difficult to say and will involve a greater effort. What we have perceived came along with the DSP process development, and it would be an entirely new project to evaluate the effect on a "straight wire" DSP schematic. Since this is intended to be an active crossover, it will be difficult to test, because a "straight wire" DSP would have to be connected to something else, probably a conventional passive crossover speaker system. But maybe it will be possible to hear a similar difference if it is really there.

> Also wondering if the problem was always there, or did it start happening at some particular point in time?

Actually, it became less apparent after upgrading the CCES framework to a new version recently.

If we cannot see any difference now, we may have to go back to the old version just out of interest.

> So what can be the cause, and what can we measure?

bohrok2610 has shown how to visualize spectral line noise skirts using FFTs. When noise is strongly correlated with a test signal, it tends to show up as skirt widening, which takes a sufficiently high resolution FFT to visualize well. IIRC the exact shape of the widening may be different for amplitude noise versus phase noise, as jitter is less bounded than Vref voltage.

The other way noise differences can manifest is as a change in the overall noise floor depending on whether a test signal is present or not. In that case a low res FFT with fewer bins may make noise floor changes easier to see, that and enough signal averaging to show an average noise floor level.
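To put rough numbers on the FFT resolution trade-off described above, here is a small Python sketch. It assumes white noise and ignores the window's noise bandwidth, so treat the figures as ballpark only. The 48 kHz sample rate and the function names are just assumptions for the example.

```python
import math

def bin_width_hz(sample_rate_hz, fft_length):
    """Frequency spacing between adjacent FFT bins."""
    return sample_rate_hz / fft_length

def per_bin_floor_offset_db(fft_length):
    """How far the displayed per-bin noise floor sits below the total
    broadband noise, assuming white noise and no window correction."""
    return 10.0 * math.log10(fft_length / 2.0)

SAMPLE_RATE_HZ = 48000.0  # assumed sample rate for illustration

for n in (4096, 65536, 1048576):
    print(f"N={n:>8}: bin width {bin_width_hz(SAMPLE_RATE_HZ, n):8.4f} Hz, "
          f"per-bin floor about {per_bin_floor_offset_db(n):.1f} dB "
          f"below total noise")
```

Long FFTs give the narrow bins needed to resolve skirts close to the tone, while short FFTs with plenty of averaging give a smoother floor where an overall level shift is easier to spot.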

Otherwise, if you have distortion, that should be easy to see using standard measurements.

The other thing about soundstage imaging is to maintain good phase coherence between stereo channels. That's because the ear/brain system uses ITD (interaural time difference) as one localization mechanism. https://en.wikipedia.org/wiki/Sound_localization
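As a rough feel for the time scales involved, here is a Python sketch using the simplest straight-path model, ITD = d·sin(θ)/c. The 0.18 m ear spacing and 343 m/s speed of sound are assumed round figures, and the model ignores diffraction around the head, so real ITDs come out somewhat larger.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed, air at roughly 20 degrees C
EAR_SPACING_M = 0.18        # assumed round figure for ear-to-ear distance

def itd_seconds(azimuth_deg, ear_spacing_m=EAR_SPACING_M,
                c=SPEED_OF_SOUND_M_S):
    """Interaural time difference for a distant source, straight-path model."""
    return ear_spacing_m * math.sin(math.radians(azimuth_deg)) / c

def sample_period_seconds(sample_rate_hz):
    """Duration of one sample, for comparison with the ITD values."""
    return 1.0 / sample_rate_hz

for az in (0, 5, 30, 90):
    print(f"azimuth {az:>2} deg: ITD about {itd_seconds(az) * 1e6:6.1f} us")
print(f"one sample at 48 kHz: {sample_period_seconds(48000.0) * 1e6:.1f} us")
```

Comparing the small-angle values with one sample period gives an idea of how much inter-channel timing error would be needed to shift an image noticeably.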

Are you sure that the exact same cables are attached to the system in both cases? An extra ground connection (to a programmer or whatnot) may cause a change.

//

> Yes, but the interesting phase noise for audio is close-in phase noise which cannot be seen on ASR J-test measurements.

Are "we" sure about this... it feels so logical, but is it a ghost?

What is the theoretical chain of events? Can you describe it, from "accident" to "impact"?

This question is not a rhetorical troll one, but a sincere one.

//

This tutorial gives the common explanation of phase noise impacts:

https://www.analog.com/media/en/training-seminars/tutorials/MT-008.pdf

Simplified: far-out phase noise impacts SNR while close-in phase noise "smears" the fundamental signal. Whether or not the impact is audible is another story.
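The far-out part can be put into numbers with the usual textbook relation for a full-scale sine sampled with broadband clock jitter, SNR = -20·log10(2π·f·t_j). Here is a minimal Python sketch. Whether this bound carries over directly to a particular DAC architecture is an assumption, and the jitter values are made up for illustration.

```python
import math

def jitter_limited_snr_db(signal_hz, rms_jitter_s):
    """Upper bound on SNR for a full-scale sine at signal_hz when the
    sampling clock has rms_jitter_s of broadband RMS jitter."""
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * rms_jitter_s)

# Illustrative values only, not measurements from this thread
for jitter_ps in (1000.0, 100.0, 10.0):
    snr = jitter_limited_snr_db(10e3, jitter_ps * 1e-12)
    print(f"{jitter_ps:7.1f} ps RMS at 10 kHz -> SNR limit {snr:.1f} dB")
```

Note that this says nothing about close-in skirts, which barely change the integrated noise.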

Well, it discusses PN / jitter but does not venture into any application impact. I have a background in radio. There, PN in the oscillators used has an absolute and easily measurable impact on the transfer quality on L1. Vibration has an impact too: I have troubleshot dropped calls which in the end were found to be correlated with the subway schedule 🙂. I don't think I have seen any credible report on close-in PN vs. SQ. Is there one? Or serious, controlled PN vs. analog-side measurements... I mean proper lab style. Is there one?

//

i would avoid talking about sq or audibility you know ...

iirc in that closed thread, Marcel pointed out that higher PN will result in higher noise at the dac output. tests appeared to be underway, but there seems to have been no follow-up.

so wouldn't it be much easier (or even possible) to estimate the PN by just measuring the dac output noise?

Close-in phase noise does not show up in normal DAC measurements other than as higher noise skirts, which have little impact on SINAD, so a DAC with high SINAD can still have high noise skirts. Without measurements, the only way to settle the audibility issue would be through controlled DBTs. So far no reports of such tests have been published, neither supporting nor refuting audibility.

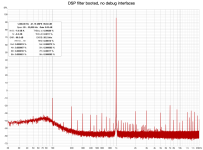

Some results here captured with REW RTA and Cosmos ADC.

I can see very little or no difference between the standalone booted DSP without interfaces connected, and the development setup.

As I am a measurement and analysis newbie, I have to ask if this result is good/satisfactory/poor?

The level was -6dB input to be safe from clipping, 400mVRMS out, Cosmos ADC input range 4.5V.

The DSP filter driver EQ was active, with a PK/notch -6dB at 1kHz to compensate for a combined baffle diffraction and cone resonance peak.

Hence the low output level.

It would be better to use dBFS (or dBc) on the vertical scale. The noise floor shape seems a bit odd at 75Hz but other than that your measurements seem ok. But I'm not sure what you are looking for in these measurements.

The noise floor is probably due to the filter design, I am not so worried about it.

I entered the Cosmos ADC full scale, 4.5V and 400mVRMS is -21dB in this context.

Do you mean dBFS in the context of the DAC maximum output (2VRMS) level would be better?
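The level arithmetic here is easy to check with a couple of lines of Python. This sketch assumes both the 4.5V ADC full scale and the 2V DAC maximum are RMS figures, as the numbers in the post suggest; the function name is just for illustration.

```python
import math

def level_db(v_rms, v_ref_rms):
    """Level of v_rms relative to v_ref_rms, in dB."""
    return 20.0 * math.log10(v_rms / v_ref_rms)

OUTPUT_V_RMS = 0.4         # 400 mVRMS measured output
ADC_FULL_SCALE_V = 4.5     # Cosmos ADC input range (assumed RMS)
DAC_FULL_SCALE_V = 2.0     # DAC maximum output (assumed RMS)

print(f"re ADC full scale: {level_db(OUTPUT_V_RMS, ADC_FULL_SCALE_V):.1f} dB")
print(f"re DAC full scale: {level_db(OUTPUT_V_RMS, DAC_FULL_SCALE_V):.1f} dB")
```

So the same 400mVRMS reads about 7 dB higher when referenced to the DAC maximum instead of the ADC full scale, which is worth keeping in mind when comparing plots.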

What I am looking for?

I am looking for any kind of difference between the two working modes, DSP booted or DSP debug mode, which was the reason I opened this thread.

Since I can see none, I instead want to ask the experts if my system is performing well or badly in the general sense.

There may be a difference between a good measurement and a well performing DUT.

I will continue to compare the operating modes using an older DSP software framework, where we could hear a difference.

With the present version I am not so sure.

Since the present situation seems to be adequate, the original question is more of an academic one: why did we hear a difference?

We are just curious.

The normal convention is that you set 0 dB at clip / max and then you can see your distortion as "dB down" more directly without having to do any calculation 😉

The two measurements look very similar but not identical... but can we really hear such low-level differences... well...

//
