I have a 0-1 mA DC milliammeter made by the Beede Electrical Instrument Company Inc. that I wanted to use as a test meter for tube bias. I measured the meter's resistance at roughly 45 ohms. I thought this might be too low, and that I needed some kind of circuit so that the meter would give the correct reading without pulling the circuit down. I used a TL082 op amp to drive the meter, thinking that it would raise the meter's impedance as seen by the circuit. It didn't work the way I wanted. Measuring the bias of a tube with a 10 ohm resistor from cathode to ground, I saw 0.350 V across the 10 ohm resistor with a standard VOM; with the meter and op-amp circuit connected, it dragged that down to about 0.300 V DC across the 10 ohm resistor.

Any ideas?

Are you trying to insert the meter directly in the cathode circuit or put it across a sensing resistor that's already in the cathode circuit?

Sy,

Thanks for the reply.

I was putting the meter across the 10 ohm resistor, as I do with the VOM.

If you put the meter across the 10 ohm resistor, the two are in parallel, so yes, it will pull the reading down.
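To put a number on it, here is a rough Python sketch. It assumes the tube holds its cathode current at about 35 mA (0.350 V across 10R) no matter what is connected across the resistor; that constant-current assumption is an approximation, not something measured in the thread.

```python
def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)

# Values from the thread: 10 ohm cathode sense resistor, ~45 ohm meter movement.
R_SENSE = 10.0
R_METER = 45.0
I_CATHODE = 0.035  # 35 mA, inferred from 0.350 V across 10 ohms

# Assumption: the tube acts as a constant-current source, so the same
# 35 mA splits between the sense resistor and the meter.
r_eff = parallel(R_SENSE, R_METER)
v_loaded = I_CATHODE * r_eff
i_meter = v_loaded / R_METER
error_pct = (1 - r_eff / R_SENSE) * 100

print(f"effective resistance: {r_eff:.2f} ohm")
print(f"voltage with meter across: {v_loaded:.3f} V")
print(f"current pushed through the 1 mA meter: {i_meter * 1000:.1f} mA")
print(f"reading low by about {error_pct:.0f}%")
```

Under that assumption the voltage drops by about 18%, and the bare 1 mA movement would be asked to carry several milliamps, far past full scale.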

You need either a high-resistance multiplier (and read the voltage) or a small-value shunt in series with the cathode resistor.
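For the shunt option, the usual ammeter-shunt relation applies: the meter sits across a small shunt, and at full scale both see the same voltage, so I_m·R_m = (I_fs − I_m)·R_sh. A minimal Python sketch, assuming a hypothetical 50 mA full-scale range (the thread does not pick one):

```python
def shunt_resistance(r_meter: float, i_meter_fs: float, i_full_scale: float) -> float:
    """Shunt that makes the meter deflect fully at i_full_scale.

    At full scale the meter and shunt share the same voltage:
    i_meter_fs * r_meter == (i_full_scale - i_meter_fs) * r_shunt
    """
    if i_full_scale <= i_meter_fs:
        raise ValueError("full-scale range must exceed the meter's own range")
    return r_meter * i_meter_fs / (i_full_scale - i_meter_fs)

R_METER = 45.0       # measured meter resistance, ohms
I_METER_FS = 0.001   # 1 mA movement
I_RANGE = 0.050      # hypothetical 50 mA range, chosen only for illustration

r_sh = shunt_resistance(R_METER, I_METER_FS, I_RANGE)
# Resistance the shunted meter adds in series with the cathode circuit
r_added = r_sh * R_METER / (r_sh + R_METER)
print(f"shunt: {r_sh:.3f} ohm, added series resistance: {r_added:.3f} ohm")
```

With these values the shunt comes out a little under 1 ohm, the meter plus shunt add about 0.9 ohm in series with the cathode, and 35 mA would put the needle at 0.7 of full scale.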

Link:

http://sound.westhost.com/articles/meters.htm

This may help.

Regards

M. Gregg

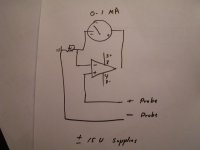

I have an idea. You show us the circuit, so we know exactly what you did with a TL082. Then we will think about where things went wrong. The alternative may require us to read your mind; we are good, but not that good!

That looks OK, but is that what you built? Check your wiring, and the opamp pinout, very carefully.

OK, so what you apparently want to do is convert your milliammeter into a voltmeter. You can do that with a simple series resistor (see the Rod Elliott article for the calculations); no opamp needed. Your cathode resistor is 10R, so the voltage across it (0.350V) means you have 35mA. Where do you want that on the milliammeter scale? A convenient spot is 0.35mA (but you can put it anywhere you like). The total series resistance in the meter circuit is R = 0.35V/0.00035A = 1k. You have 45R already, so the series resistor will need to be 955R.
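The same arithmetic as a small Python sketch, using the thread's numbers; the function name and the choice of 0.35 mA deflection are just for illustration:

```python
def series_multiplier(v_full: float, i_full: float, r_meter: float) -> float:
    """Series resistor so the meter passes i_full when v_full is applied."""
    r_total = v_full / i_full
    if r_total <= r_meter:
        raise ValueError("meter resistance alone exceeds the required total")
    return r_total - r_meter

R_SENSE = 10.0        # cathode sense resistor, ohms
R_METER = 45.0        # measured meter resistance, ohms
V_SENSE = 0.350       # voltage read with the VOM
I_DEFLECT = 0.00035   # chosen needle position: 0.35 mA

i_cathode = V_SENSE / R_SENSE                     # about 35 mA
r_series = series_multiplier(V_SENSE, I_DEFLECT, R_METER)
print(f"cathode current: {i_cathode * 1000:.0f} mA")
print(f"series resistor: {r_series:.0f} ohm")
```

With a 1k total, the meter current is the cathode current times 10R/1k, i.e. one hundredth of it, so full scale (1 mA) corresponds to 100 mA of cathode current.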

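The 1k total meter resistance shunted across the 10R sense resistor will perturb the reading a bit, but not much: 10R in parallel with 1k is about 9.9R, so if the cathode current stays the same the reading will be roughly 1% low.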