Hi,

I wrote an article people might find interesting. It's a thorough analysis of how much the quality of an analog interconnect affects the signal.

I designed and built an 'interlink difference amplifier'. It takes a signal, buffers it with two separate buffers, and sends each copy through its own interlink back to an instrumentation amplifier, which rejects the common signal and leaves only the difference caused by either or both cables.
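As a rough illustration of what such a difference amplifier measures, the sketch below models each interlink as a first-order RC lowpass: the buffer's output resistance driving the cable's capacitance into a high-impedance input. The 100 Ω output resistance and the 100 pF / 200 pF cable capacitances are hypothetical values, not measurements from the article, and the instrumentation amplifier is assumed to be ideal with unity differential gain.

```python
import math


def rc_gain(freq_hz, r_source_ohm, c_cable_f):
    """Complex gain of a first-order RC lowpass (hypothetical cable model):
    source resistance in series, cable capacitance to ground, open-circuit load."""
    omega = 2 * math.pi * freq_hz
    return 1 / (1 + 1j * omega * r_source_ohm * c_cable_f)


def difference_residual_db(freq_hz, r_source_ohm, c_a_f, c_b_f):
    """Output of an ideal unity-gain difference amp, in dB relative to the input.

    Both cables carry the same buffered signal, so the signal itself cancels
    and only the difference between the two paths remains.
    """
    diff = rc_gain(freq_hz, r_source_ohm, c_a_f) - rc_gain(freq_hz, r_source_ohm, c_b_f)
    if diff == 0:
        return float("-inf")  # identical cables: nothing left to measure
    return 20 * math.log10(abs(diff))


# Hypothetical values: 100 ohm buffer output, cables of 100 pF and 200 pF.
for f in (1_000, 10_000, 20_000):
    print(f"{f:>6} Hz: {difference_residual_db(f, 100, 100e-12, 200e-12):7.1f} dB")
```

With these numbers the residual is around -84 dB at 1 kHz and -58 dB at 20 kHz, rising about 6 dB per octave because the difference between the two lowpass responses grows roughly in proportion to frequency.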

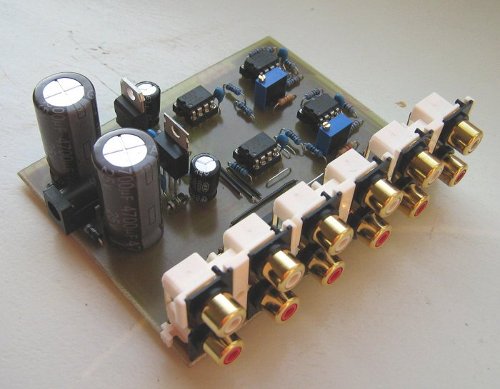

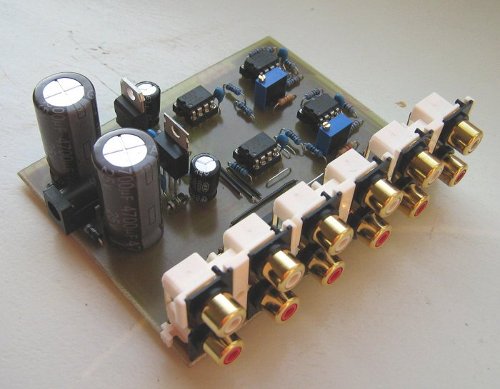

Finished, it looks like:

Here is the article:

Analog audio interlinks: does quality matter?

Recordings are also available (because numbers alone don't mean much), and they show quite clearly that there is virtually no difference.

Two questions:

1. Are the distortion levels in graph 14 the harmonics of the difference signal relative to the fundamental of the difference signal, or relative to the fundamental of the input signal? If the latter, I don't understand why the distortion levels are so high.

2. Why do you assume linear errors to be inaudible?

I think to really test cables you would need a much better sig gen with less distortion, and a higher source resistance, which might begin to expose nonlinear dielectrics. The result might be the same (little difference between cables), but it would be more robust against criticism from those who are sure cables sound different.
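The effect of a higher source resistance can be sketched with a first-order RC model: the source resistance R and the cable capacitance C set a corner frequency f_c = 1/(2πRC), so raising R pulls the corner down towards the audio band and gives the cable more influence. This linear model cannot show dielectric non-linearity itself; it only shows how much more the cable capacitance matters. The 100 pF capacitance and the list of source resistances are hypothetical.

```python
import math


def corner_hz(r_source_ohm, c_cable_f):
    """-3 dB frequency of a first-order RC lowpass."""
    return 1 / (2 * math.pi * r_source_ohm * c_cable_f)


def attenuation_db(freq_hz, r_source_ohm, c_cable_f):
    """Magnitude of the RC lowpass response at freq_hz, in dB (<= 0)."""
    ratio = freq_hz / corner_hz(r_source_ohm, c_cable_f)
    return -10 * math.log10(1 + ratio ** 2)


C_CABLE = 100e-12  # hypothetical cable capacitance, farads
for r in (100, 1_000, 10_000, 100_000):
    print(f"R = {r:>7} ohm: f_c = {corner_hz(r, C_CABLE) / 1e3:9.1f} kHz, "
          f"loss at 20 kHz = {attenuation_db(20_000, r, C_CABLE):.4f} dB")
```

Under these assumptions the corner sits around 16 MHz for a 100 Ω source but drops to about 16 kHz for 100 kΩ, where the loss at 20 kHz reaches roughly 4 dB.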

MarcelvdG said: 1. Are the distortion levels in graph 14 the harmonics of the difference signal relative to the fundamental of the difference signal, or relative to the fundamental of the input signal? If the latter, I don't understand why the distortion levels are so high.

Graph 14 has nothing to do with the difference amplifier. It's the signal from my signal generator fed through the various interlinks.

It's an attempt to determine, by measurement, whether cables introduce non-linear distortion.
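For anyone wanting to reproduce this kind of measurement, the sketch below computes harmonic levels relative to the captured signal's own fundamental (dBc) and the resulting THD, using a single-bin DFT per harmonic. It is a generic sketch, not the author's analysis software. It assumes the capture contains a whole number of cycles of the fundamental so every harmonic lands exactly on a DFT bin, and the test signal (a -60 dB second and a -80 dB third harmonic) is made up for illustration.

```python
import math


def bin_amplitude(samples, cycles):
    """Amplitude of the component completing `cycles` periods over the record
    (single-bin DFT; exact when the signal is periodic in the record)."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


def harmonic_report(samples, fundamental_cycles, max_harmonic=5):
    """Return ({harmonic: level in dBc}, THD in percent)."""
    fund = bin_amplitude(samples, fundamental_cycles)
    amps = {h: bin_amplitude(samples, h * fundamental_cycles)
            for h in range(2, max_harmonic + 1)}
    # Floor avoids log10(0) for harmonics that are absent.
    levels = {h: 20 * math.log10(max(amp, 1e-15) / fund) for h, amp in amps.items()}
    thd = 100 * math.sqrt(sum(amp * amp for amp in amps.values())) / fund
    return levels, thd


# Hypothetical capture: 1 kHz at 48 kHz sampling, 100 whole cycles (4800 samples).
N, CYCLES = 4800, 100
signal = [math.sin(2 * math.pi * CYCLES * k / N)
          + 1e-3 * math.sin(2 * math.pi * 2 * CYCLES * k / N)
          + 1e-4 * math.sin(2 * math.pi * 3 * CYCLES * k / N)
          for k in range(N)]
levels, thd = harmonic_report(signal, CYCLES)
print({h: round(v, 1) for h, v in levels.items()}, f"THD = {thd:.4f} %")
```

Referencing the harmonics to the fundamental of the signal being analysed is the choice that matters for reading a plot like graph 14; the same harmonic amplitude gives a different dB figure if it is referenced to a different signal.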

MarcelvdG said: 2. Why do you assume linear errors to be inaudible?

Because linear distortion is just a gain difference, which is irrelevant.

DF96 said: I think to really test cables you would need a much better sig gen with less distortion

Why? It's a difference amplifier, so what the incoming signal is doesn't matter: it can be a sine tone with 0% THD or music with 50% THD. At any point in time both inputs of the difference amplifier are fed from the same source voltage, and only the difference between what arrives at them appears at the output.
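The argument can be checked with a toy model, assuming an ideal difference amplifier (infinite common-mode rejection, perfectly matched buffers). The input here is a hard-clipped sine, i.e. a grossly distorted signal. With two identical paths the output is exactly zero; only a hypothetical cubic error in one path leaves a residual. The cubic coefficient `k3` is invented for illustration.

```python
import math


def ideal_difference(va, vb, gain=1.0):
    """Ideal difference amplifier: only va - vb reaches the output."""
    return [gain * (a - b) for a, b in zip(va, vb)]


def cable_ideal(v):
    return v


def cable_with_cubic_error(v, k3=1e-4):
    """Hypothetical non-linear cable: a tiny cubic term added to the signal."""
    return v + k3 * v ** 3


# Grossly distorted input: a sine clipped at +/-0.5 V (its THD is irrelevant here).
N = 1000
x = [max(-0.5, min(0.5, math.sin(2 * math.pi * k / N))) for k in range(N)]

same = ideal_difference([cable_ideal(v) for v in x], [cable_ideal(v) for v in x])
nonlin = ideal_difference([cable_ideal(v) for v in x],
                          [cable_with_cubic_error(v) for v in x])

print(max(abs(r) for r in same))    # identical paths cancel completely
print(max(abs(r) for r in nonlin))  # about k3 * 0.5**3 = 1.25e-5
```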

"Because linear distortion is just a gain difference, which is irrelevant."

You mean because you measure at one frequency at a time? With a music signal, a gain that differs from one frequency to another can definitely be audible. According to Stanley Lipshitz and John Vanderkooy, some people can hear differences of 0.2 dB under double-blind conditions.

It could be that some of your linear errors are due to differences in delay. As long as the group delay is flat over the audio band, it is surely irrelevant. Just hit the play button a hundred nanoseconds earlier to make up for it.
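To put the 100 ns figure in perspective, the sketch below computes, for a sine at frequency f, the phase shift a pure delay τ causes and the level of the difference between the delayed and undelayed signal, |1 - e^(-j2πfτ)| = 2|sin(πfτ)|. It suggests that even a harmless, frequency-independent delay leaves a residual in a subtraction-based measurement, so a non-zero residual is not automatically an audible error. The delay is just the 100 ns example from this post.

```python
import math


def delay_phase_deg(freq_hz, delay_s):
    """Phase lag of a pure time delay at freq_hz, in degrees."""
    return 360.0 * freq_hz * delay_s


def delay_residual_db(freq_hz, delay_s):
    """Level of x(t) - x(t - delay) relative to x(t) for a sine at freq_hz."""
    return 20 * math.log10(2 * abs(math.sin(math.pi * freq_hz * delay_s)))


for f in (1_000, 10_000, 20_000):
    print(f"{f:>6} Hz: phase {delay_phase_deg(f, 100e-9):.3f} deg, "
          f"residual {delay_residual_db(f, 100e-9):.1f} dB")
```

For 100 ns the residual is about -64 dB at 1 kHz and -38 dB at 20 kHz, even though the phase shift at 20 kHz is only 0.72°.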

Your amp loses separation at higher frequencies, which makes it harder to distinguish between harmonics injected by the sig gen and harmonics allegedly generated by the cable being tested. You provide a loophole for the cable believers to escape through.

There's always a loophole to escape through as long as you decide a priori that others are required to prove a negative and that you needn't provide evidence.

There's an easier way to do this comparison: use the test cables as a loopback between the sound card's output and input, record musical or test-tone selections, then subtract the files digitally to see/hear the residual.
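Before two loopback recordings can be subtracted, they generally need to be lined up sample-accurately, since a start-time offset between them would usually dominate the residual. A minimal way to find an integer-sample offset is a brute-force cross-correlation search, sketched below. The function names and search range are hypothetical; the sketch ignores sub-sample offsets, is only practical for short excerpts, and does not describe any particular audio editor's feature.

```python
import random


def best_lag(a, b, max_lag):
    """Integer lag L in [-max_lag, max_lag] maximising sum(a[n] * b[n + L]).

    A positive result means b is delayed by L samples relative to a.
    Brute force, O(len(a) * max_lag): fine for short excerpts only.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(a[n] * b[n + lag]
                    for n in range(len(a)) if 0 <= n + lag < len(b))
        if score > best_score:
            best, best_score = lag, score
    return best


def align(a, b, lag):
    """Trim both recordings so that a[n] lines up with b[n + lag]."""
    if lag >= 0:
        b = b[lag:]
    else:
        a = a[-lag:]
    n = min(len(a), len(b))
    return a[:n], b[:n]


# Hypothetical test: b is a copy of a that starts 3 samples later.
rng = random.Random(1)
a = [rng.uniform(-1, 1) for _ in range(500)]
b = [0.0] * 3 + a[:-3]
lag = best_lag(a, b, max_lag=10)
a_al, b_al = align(a, b, lag)
print(lag, max(abs(x - y) for x, y in zip(a_al, b_al)))
```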

"Because linear distortion is just a gain difference, which is irrelevant."

You mean because you measure at one frequency at a time? With a music signal, a gain that differs from one frequency to another can definitely be audible. According to Stanley Lipshitz and John Vanderkooy, some people can hear differences of 0.2 dB under double-blind conditions.

It could be that some of your linear errors are due to differences in delay. As long as the group delay is flat over the audio band, it is surely irrelevant. Just hit the play button a hundred nanoseconds earlier to make up for it.

You're talking about frequency response characteristics, and I do agree that that would be audible.

Non-linear distortion is something else: it occurs when a system's gain depends on the input voltage. For instance, if an amplifier has a gain of 20 at an input of 0.1 V and 22 at 0.5 V, an input sine wave swinging between -0.5 V and 0.5 V will not be reproduced as a clean sine wave at the output. This is what causes harmonic distortion in systems.
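The amplifier example can be made concrete. Assuming (hypothetically) that the large-signal gain y/x varies smoothly as g(x) = a + b·x², fitting g(0.1 V) = 20 and g(0.5 V) = 22 gives a ≈ 19.92 and b ≈ 8.33, so y = a·x + b·x³. Feeding that a ±0.5 V sine and measuring the harmonics with a single-bin DFT shows the effect: the cubic term produces a third harmonic of about 2.4 % of the fundamental, while the symmetric transfer curve produces no second harmonic.

```python
import math


def bin_amplitude(samples, cycles):
    """Single-bin DFT amplitude for a component with `cycles` periods in the record."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * cycles * k / n) for k, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


# Fit the hypothetical gain law g(x) = a + b*x**2 to the two quoted points.
b = (22 - 20) / (0.5 ** 2 - 0.1 ** 2)
a = 20 - b * 0.1 ** 2


def amplifier(x):
    """Output = gain(x) * x, with a gain that depends on the input voltage."""
    return (a + b * x ** 2) * x


N = 1024
x = [0.5 * math.sin(2 * math.pi * k / N) for k in range(N)]  # one cycle, +/-0.5 V
y = [amplifier(v) for v in x]

h1, h2, h3 = (bin_amplitude(y, h) for h in (1, 2, 3))
print(f"fundamental {h1:.4f} V, HD2 {100 * h2 / h1:.4f} %, HD3 {100 * h3 / h1:.3f} %")
```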

The linear distortion of a cable could in principle be compensated for with the volume control, except that even the smallest step the volume control can make is far larger than the gain loss caused by even a relatively large series resistance.
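A quick voltage-divider calculation shows how small that gain loss is. The sketch assumes the cable's conductor resistance is simply in series with a resistive input; the 10 kΩ input impedance and the 0.1 Ω to 10 Ω series values are hypothetical examples, not measured data.

```python
import math


def series_loss_db(r_series_ohm, r_load_ohm):
    """Level change when r_series is inserted in series with a resistive load."""
    return 20 * math.log10(r_load_ohm / (r_load_ohm + r_series_ohm))


R_INPUT = 10_000  # hypothetical line-input impedance, ohms
for r in (0.1, 1.0, 10.0):
    print(f"{r:>5} ohm in series: {series_loss_db(r, R_INPUT):.6f} dB")
```

Even 10 Ω in series costs less than 0.01 dB under these assumptions.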

Your amp loses separation at higher frequencies, which makes it harder to distinguish between harmonics injected by the sig gen and harmonics allegedly generated by the cable being tested. You provide a loophole for the cable believers to escape through.

Technically, that's true, but it doesn't really depend on whether the source is a low- or high-distortion sine wave. Even a low-distortion 20 kHz input is already at the upper limit of human hearing, which is also the region where the difference amp performs worst.
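One way to quantify the 'separation' point is to interpret it as the instrumentation amplifier's common-mode rejection ratio (CMRR): a component present equally on both inputs, such as a harmonic from the signal generator, leaks to the output at roughly its own level minus the CMRR at that frequency. The sketch assumes a hypothetical CMRR of 100 dB, flat up to 1 kHz and falling 20 dB per decade above it, and a hypothetical -80 dBc generator harmonic; these are illustrative numbers, not the amplifier's measured behaviour.

```python
import math


def cmrr_db(freq_hz, cmrr_dc_db=100.0, corner_hz=1_000.0):
    """Hypothetical CMRR: flat below corner_hz, falling 20 dB/decade above it."""
    if freq_hz <= corner_hz:
        return cmrr_dc_db
    return cmrr_dc_db - 20 * math.log10(freq_hz / corner_hz)


def leaked_level_db(common_level_db, freq_hz, **cmrr_kwargs):
    """Approximate output level (same reference) of a component that appears
    identically on both inputs, i.e. a common-mode component."""
    return common_level_db - cmrr_db(freq_hz, **cmrr_kwargs)


# Example: a -80 dBc second harmonic from the generator, for 1 kHz and 20 kHz tones.
for fundamental in (1_000, 20_000):
    h2 = 2 * fundamental
    print(f"{fundamental:>6} Hz tone: H2 at {h2} Hz leaks at "
          f"{leaked_level_db(-80.0, h2):.1f} dB")
```

Under these assumptions the leakage rises by about 26 dB between the 1 kHz and 20 kHz cases, which is the mechanism behind the objection; how much it matters depends on the real CMRR curve and the generator's actual distortion.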

There's an easier way to do this comparison: use the test cables as a loopback between the sound card's output and input, record musical or test-tone selections, then subtract the files digitally to see/hear the residual.

Well, that would also include any differences caused by the sound card's DAC/ADC, but I guess it's possible. Digital subtraction is quite easy: just invert one of the recordings and mix them.
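The invert-and-mix step is simple to sketch. The functions below are hypothetical helpers that assume the two recordings are already sample-aligned and at the same sample rate. Adding one recording to a polarity-inverted copy of the other leaves only what differs between them, and the residual is reported relative to the RMS level of the first recording.

```python
import math


def invert_and_mix(a, b):
    """Mix recording a with a polarity-inverted copy of recording b."""
    return [x + (-y) for x, y in zip(a, b)]


def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def residual_db(a, b):
    """RMS of the mixed residual relative to the RMS of a, in dB."""
    res = rms(invert_and_mix(a, b))
    return 20 * math.log10(res / rms(a)) if res > 0 else float("-inf")


# Hypothetical example: the second recording is 0.1 % lower in level (about -0.009 dB).
N = 4800
a = [math.sin(2 * math.pi * 10 * k / N) for k in range(N)]
b = [0.999 * s for s in a]
print(f"{residual_db(a, b):.2f} dB")
```

A level mismatch of under 0.01 dB already leaves a residual at about -60 dB, so small gain differences show up clearly in the residual.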
