I now, like Hawksford, deliberately design nonlinearity into my drivers. It actually makes them sound better, but not for the reasons you might think. It raises the "good" distortion and lowers the "bad", a lot like cholesterol.

Could you perhaps explain what the difference is between good and bad distortion? Is this the "speaker distortion is not audible" vs "electronic distortion is audible"? I'd like to learn more about this and try to understand why this would be the case.

The main thing you need to do is to separate your understanding of what "distortion" is from its perception. There is a close link in people's minds between a process changing the shape of a waveform and "distortion". That holds visually, but not as far as auditory perception is concerned. Some changes, or "distortions", are relatively benign and cannot be heard, while others are quite objectionable. This simple fact can be understood by considering that the ear itself is highly nonlinear, and as such its own processing can "mask" many forms of "distortion".

Classic terms such as "THD" or "IMD" imply that these are "errors" in and of themselves, but that is not the case. These measures are the results of specific input signals and reflect the symptoms of a nonlinearity on those specific waveforms. There is no relationship between the perception of an underlying system nonlinearity and its levels of THD or IMD.

The ear turns out to be quite sensitive to sharp changes in the transfer characteristic of a nonlinear system at low signal levels, things like crossover distortion. It is quite tolerant of, even immune to, slow changes in the linearity characteristic, such as the second- or third-order curvature often found in a loudspeaker suspension or magnet structure. High rates of change are undesirable in any system, but especially so when they affect low-level signals. These are not typical problems in a mechanical device like a loudspeaker, but they are in electronics.
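To make the "slow" versus "sharp" distinction concrete, here is a minimal Python sketch (standard library only) that drives two made-up transfer curves with a sine and computes THD from the resulting harmonics: a gentle 2nd/3rd-order curve standing in for a suspension or motor nonlinearity, and a small dead zone around zero standing in for crossover distortion. The coefficients, the dead-zone width and the function names are illustrative assumptions, not models of any real driver or amplifier. It also shows the earlier point that THD is the response to a specific input: the same curve gives a different THD at each drive level.

```python
import math


def harmonics(f, amp, n_harm=15, n=1024, cycles=4):
    """Peak amplitudes of harmonics 1..n_harm of f(amp * sin(wt)).

    The record holds a whole number of cycles (coherent sampling), so a
    single-bin DFT at bin cycles*h picks out harmonic h without leakage.
    """
    y = [f(amp * math.sin(2 * math.pi * cycles * i / n)) for i in range(n)]
    out = []
    for h in range(1, n_harm + 1):
        w = 2 * math.pi * cycles * h / n
        re = sum(v * math.cos(w * i) for i, v in enumerate(y))
        im = sum(v * math.sin(w * i) for i, v in enumerate(y))
        out.append(2 * math.hypot(re, im) / n)
    return out


def thd_percent(f, amp):
    """THD in percent: RSS of harmonics 2..n_harm over the fundamental."""
    a = harmonics(f, amp)
    return 100 * math.sqrt(sum(x * x for x in a[1:])) / a[0]


def smooth(x):
    # Hypothetical "slow" curvature, loosely like a suspension or motor.
    return x + 0.1 * x**2 + 0.05 * x**3


def crossover(x, gap=0.02):
    # Hypothetical crossover-style error: a small dead zone around zero.
    return math.copysign(max(abs(x) - gap, 0.0), x)


for amp in (1.0, 0.3, 0.1, 0.05):
    print(f"amp={amp:<5} smooth THD={thd_percent(smooth, amp):6.2f}%  "
          f"crossover THD={thd_percent(crossover, amp):6.2f}%")
```

With these assumed values the smooth curve's THD falls as the level drops, while the dead zone's THD climbs. The harmonic sum stops at the 15th, so the crossover figures are, if anything, underestimates.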

This is the high-level explanation, all I had time for right now. But it's clear that not all types of distortion are equal, and that trading off an inaudible one to reduce an audible one could be a very good idea.

Output doesn't match input = distortion

Yeah, that's the naive point of view that leads to erroneous conclusions.

Rupert Neve's designs always attempted to reduce IC crossover distortion 😉

Hi Earl,

Thanks for your reply. Using psychoacoustics to separate audible distortion from less or inaudible distortion makes sense. So far nothing new, except I had not heard the 'fast' vs 'slow' angle before.

So in the hypothetical case where an amplifier only adds second harmonic distortion, or a speaker only adds the same level of second harmonic, they would both be equally audible. It boils down to the physics of the device, which determines what kind of distortion it is capable of.

My question now is how and when can 'good' distortion mask 'bad' distortion?

There is no "good" distortion, only audible and inaudible distortion in a continuum. Distortion in the system cannot mask other distortion in the system, but the distortion in the ear (the receiver) can mask the lower orders of distortion in the system.

It seems I misunderstood when you said:

I now, like Hawksford, deliberately design nonlinearity into my drivers. It actually makes them sound better, but not for the reasons that you might think. It raises the "good" distortion and lowers the "bad"

I assumed that adding the nonlinearity to your driver masked other, less prominent distortion in the system. But when I read your statement again, it says 'lowers', not 'masks'. Can you explain how that works?

I didn't say "masks", I said "lowers"; there is a big difference. Since no system can have infinite amplitude of its variables (like volts or excursion), one designs for this limited capability by ensuring that the nonlinearities that are present (that have to be present) are of as low an order as possible. This tends to increase the lower orders in order to decrease the higher orders. This is not masking, but changing the nature of the nonlinearities.
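A rough way to see "increase the lower orders in order to decrease the higher orders" is to compare two hypothetical limiters driven by the same unit-amplitude sine, both with a small-signal gain of 1.5 and both reaching a peak output of 1.0: an abrupt hard clip, and a 3rd-order polynomial that flattens out smoothly at full scale. This Python sketch is an illustration under those assumptions, not a model of any particular driver. The polynomial produces only a 3rd harmonic, while the hard clip spreads energy into the 5th, 7th and higher.

```python
import math


def harmonics(f, amp, n_harm=15, n=1024, cycles=4):
    """Peak amplitudes of harmonics 1..n_harm of f(amp * sin(wt)) (coherent single-bin DFT)."""
    y = [f(amp * math.sin(2 * math.pi * cycles * i / n)) for i in range(n)]
    out = []
    for h in range(1, n_harm + 1):
        w = 2 * math.pi * cycles * h / n
        re = sum(v * math.cos(w * i) for i, v in enumerate(y))
        im = sum(v * math.sin(w * i) for i, v in enumerate(y))
        out.append(2 * math.hypot(re, im) / n)
    return out


def hard_clip(x):
    # Gain 1.5, then an abrupt limit at +/-1.0.
    return max(-1.0, min(1.0, 1.5 * x))


def cubic_limit(x):
    # Gain 1.5 at zero, flattening smoothly to slope 0 and output 1.0 at x = +/-1.
    # Only meaningful for |x| <= 1; beyond that the polynomial turns back down.
    return 1.5 * x - 0.5 * x**3


def order_split(f, amp=1.0):
    """Return (3rd harmonic, RSS of 5th and above), both relative to the fundamental."""
    a = harmonics(f, amp)
    third = a[2] / a[0]
    higher = math.sqrt(sum(x * x for x in a[4:])) / a[0]
    return third, higher


for name, f in (("hard clip", hard_clip), ("cubic", cubic_limit)):
    third, higher = order_split(f)
    print(f"{name:9}  H3={100 * third:6.2f}%  H5 and up={100 * higher:8.4f}%")
```

The cubic limiter actually shows more 3rd harmonic than the hard clip here, but nothing above it; that is the trade being described.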

Dr. Geddes,

I think if lowering the non-linear distortion is the main reason, a line array should work best.

Most of us may never reach the boundary of a driver's non-linear distortion region unless one seriously wants to go deaf.

Hence, one of my very first questions: how important is non-linear distortion for a designer, and what is the acceptable level before a driver starts behaving crazily? Is it 10W or 100W or 200W for a very common 88dB-sensitivity driver?
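For the 10W / 100W / 200W question, the usual arithmetic is SPL = sensitivity + 10·log10(watts), minus 20·log10(distance) beyond 1 m. The sketch below assumes the 88dB figure is referenced to 1 W at 1 m, inverse-square falloff and no power compression, so treat the results as idealized free-field estimates rather than predictions for a real driver in a room. `spl_estimate` is a hypothetical helper name.

```python
import math


def spl_estimate(sensitivity_db, watts, distance_m=1.0):
    """Idealized SPL: sensitivity (dB @ 1 W, 1 m) + 10*log10(W) - 20*log10(r).

    Assumes inverse-square falloff and no thermal/power compression.
    """
    return sensitivity_db + 10 * math.log10(watts) - 20 * math.log10(distance_m)


for watts in (1, 10, 100, 200):
    print(f"{watts:>4} W: {spl_estimate(88, watts):6.1f} dB @ 1 m, "
          f"{spl_estimate(88, watts, 3.0):6.1f} dB @ 3 m")
```

Under these assumptions 10, 100 and 200 W come out at roughly 98, 108 and 111 dB at 1 m; doubling from 100 W to 200 W buys only about 3 dB.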

It is my theory that a driver should not exhibit non-linearities up to about 75% of the max SPL derived from the driver's T/S parameters (such as Xmax, Pmax, etc.).

I am more concerned with linear distortion, but even in life we can't live without distortion. Distortion is a naturally occurring behavior, and our brains and ears are very used to it.

But we are not used to all kinds of distortion, so how do we define which is good and which is bad for our system?

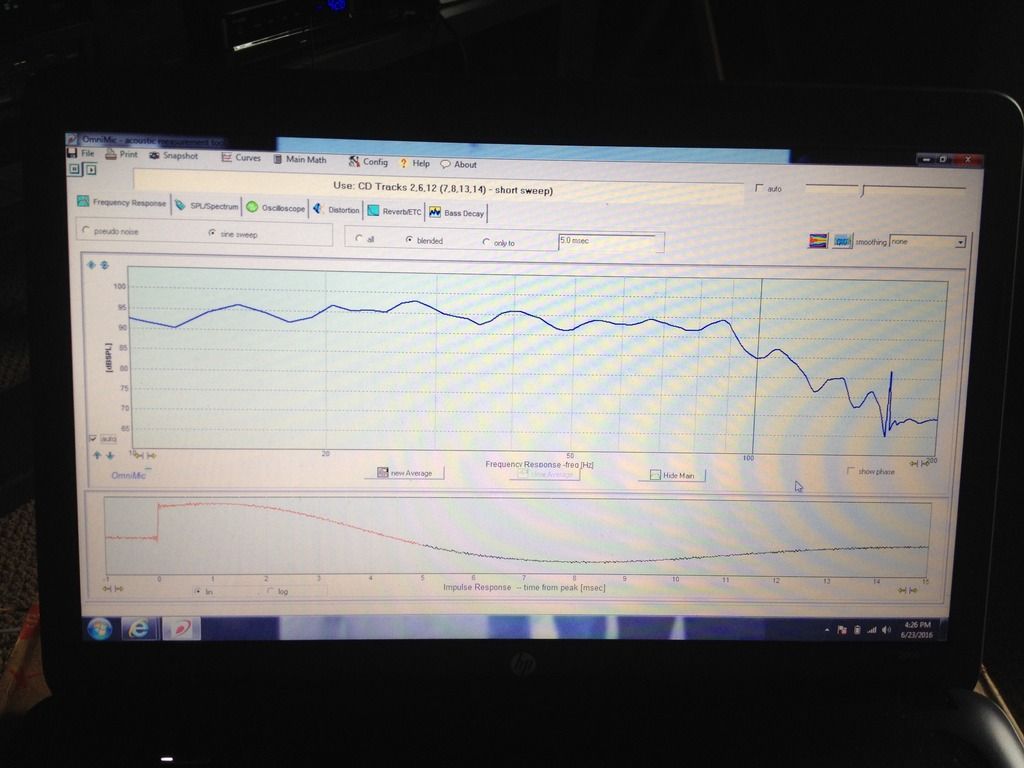

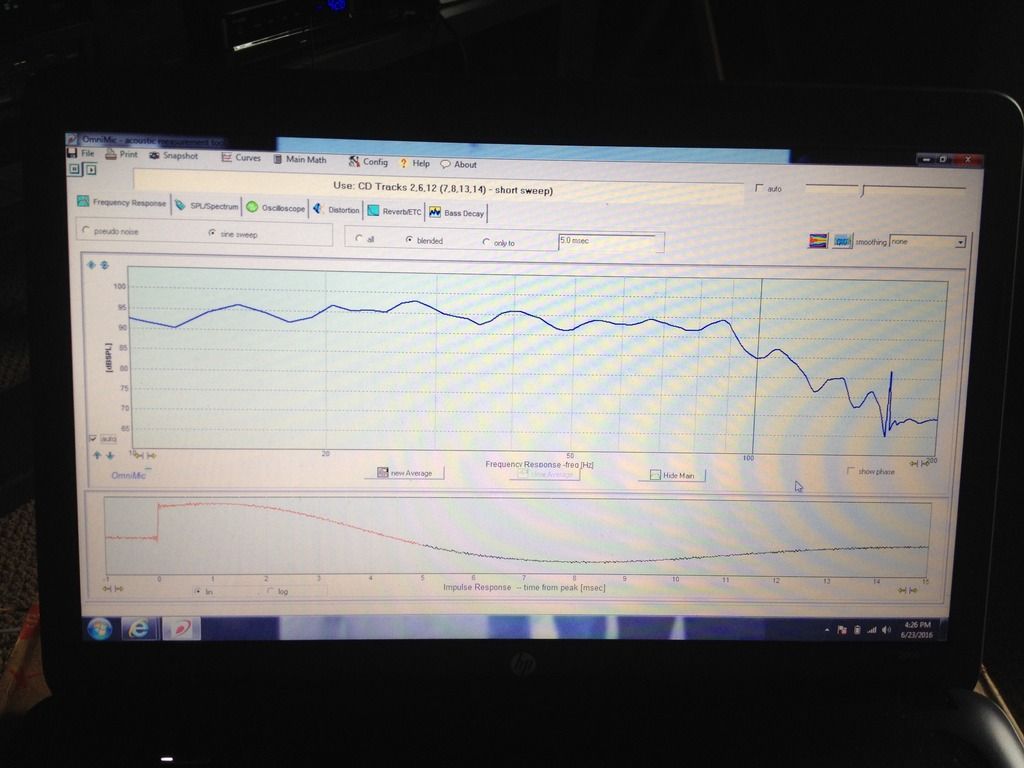

Thoughts on measuring my four subs from the listening position (LP)? Here's how they measured:

I would like to see how they measure from the LP so I'm not taxing the system too much. So I'm interested in distortion testing; I like the FR, though.

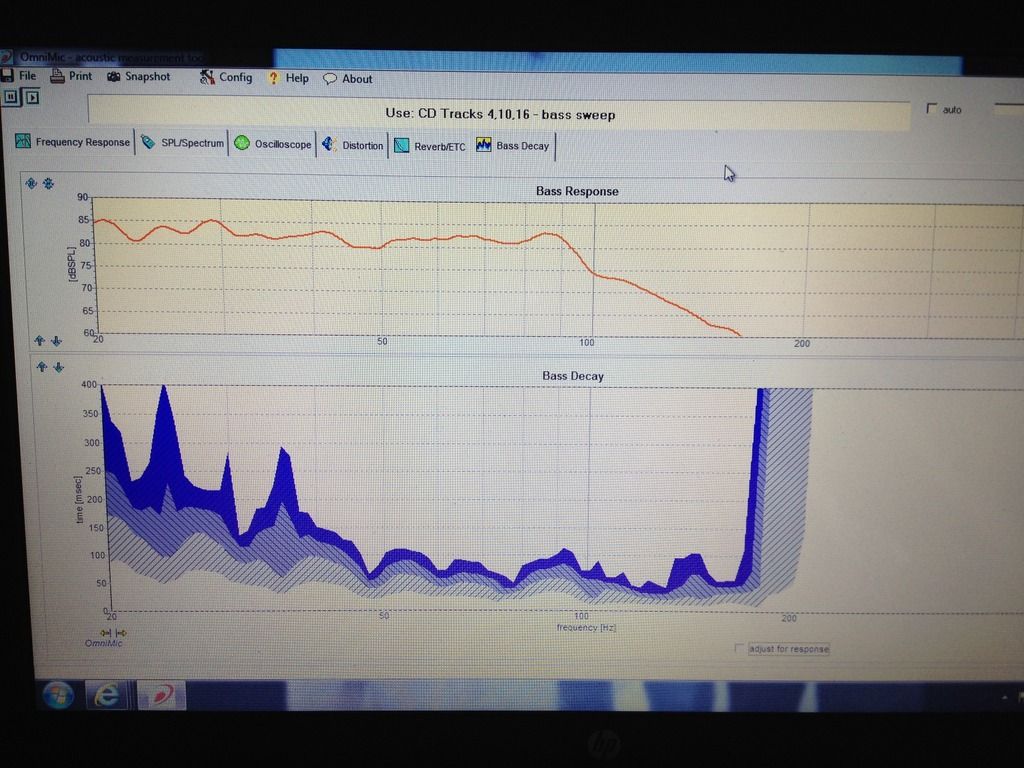

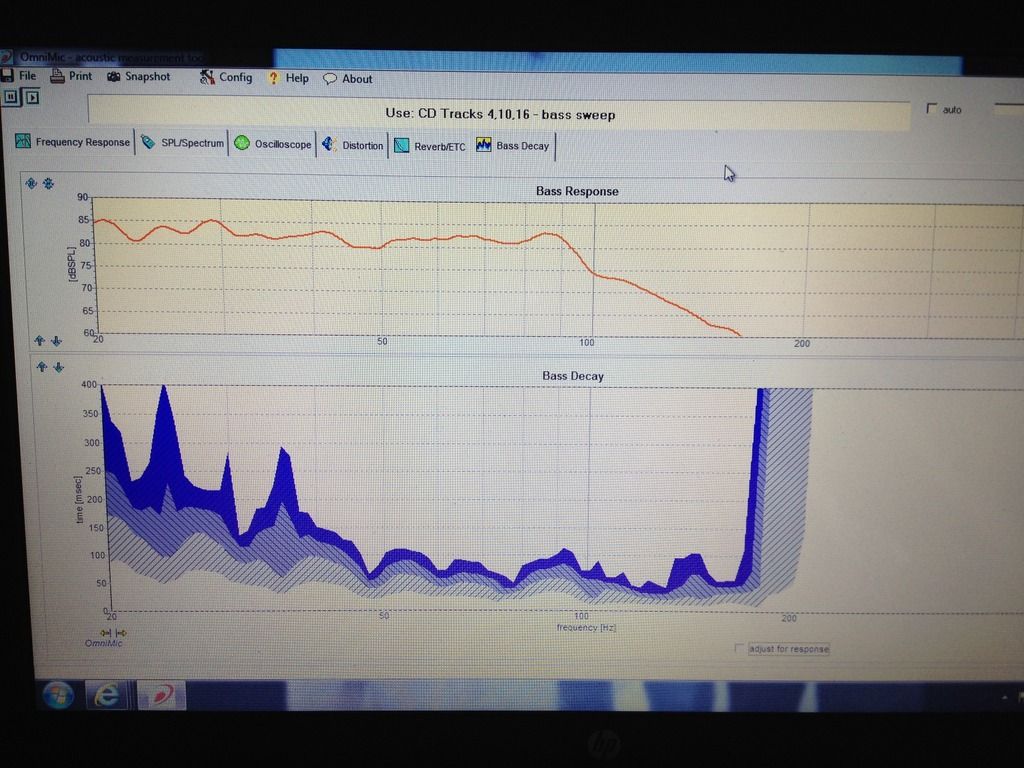

Here are some decay plots:

Measuring distortion of subs indoors can be difficult. The problem is that to avoid the measurement being too fouled by room reflections and modes, you have to have the microphone up very close to the sub driver. But then, you can't test at too high a level since the microphone is easily overdriven when that close (and when playing at the usually very high subwoofer levels). You can easily end up measuring the distortion of an overdriven mic capsule.

With OmniMic, you want to keep the level at the mic below about 115 dB SPL (this varies from mic to mic; other electret-based mics will be similar). Just use the OmniMic distortion measurement tab and put the mic within about an inch of the woofer (assuming a sealed sub). BTW, if you're on Microsoft Vista or later, make sure the OM is seen by the system in "2-channel, 48kHz" mode (the OS defaults all USB mics to mono, which can make the OM levels read very wrong!), and run the OmniMic software in Windows XP SP3 compatibility mode (with Windows 8 or later, Microsoft changed the way mic sensitivity settings get interpreted, which can also make the levels read very wrong; a new version 5 of the OM software, which fixes this second issue internally, should be released very soon).

If measuring outside, put the woofer away from all large surfaces except the ground, and put the mic on the ground at some distance from the woofer. Then you can play the woofer louder, but that's still not an easy solution: outside, a woofer at any drive level won't produce nearly as high an SPL as it will indoors. No room reinforcement happens outside, and for subwoofers that reinforcement can be a lot.

Hi,

Isn't it all just 'measuring' wild cards when you don't know the frequency- and level-dependent THD of the measuring setup?

When measured with a freshly calibrated B&K capsule, the figures for my ESLs at 110dB were roughly 0.1% lower than those measured with an MB MCD550.

When asked, B&K told me that the THD of their capsule alone could already be ~1/2 of the measured value.

Do you really expect believable (and reproducible) values from cheap electret capsules whose amplitude response and THD behaviour are unknown (the better ones come with one-size-fits-all amplitude calibration files only)?

How do you discriminate between the behaviour of the DUT and that of the measuring setup?

no pun intended, just wondering 😉

jauu

Calvin

You can't totally discriminate if the values are similar. But if the measurement comes out as a few tenths of a percent, you can be pretty sure it isn't really whole percentages, since the distortion is unlikely to cancel that much (or at all for 3rd harmonic). There will always be a resolution floor to any measurement, you can only ever verify that the result isn't much worse than your test gear.
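One way to put numbers on this: at a given harmonic, the mic's contribution and the speaker's contribution land on the same frequency and add as phasors with unknown relative phase. Assuming that, plus a known upper limit for the mic's own distortion at that level, the speaker's true figure must lie between the reading minus the mic limit and the reading plus it. This Python sketch encodes that bound; `dut_bounds` is a made-up helper name, and it ignores the mic re-distorting the speaker's own harmonics (a second-order effect).

```python
def dut_bounds(measured_pct, mic_max_pct):
    """Range of DUT distortion (one harmonic) consistent with a measurement.

    Assumes the DUT's and the mic's contributions at this harmonic add as
    phasors with unknown relative phase, the mic's own contribution is at most
    mic_max_pct, and the mic re-distorting the DUT's harmonics is negligible.
    """
    low = max(0.0, measured_pct - mic_max_pct)   # worst case: mic adds in phase
    high = measured_pct + mic_max_pct            # worst case: mic cancels part of the DUT
    return low, high


print(dut_bounds(0.3, 0.15))   # a mic that might contribute up to half the reading
print(dut_bounds(0.3, 0.03))   # a mic with a much lower floor
```

A 0.3% reading with a mic limit of 0.15% pins the speaker between about 0.15% and 0.45%; it cannot hide whole percentages unless the mic itself is that bad.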

You can also measure at different levels and see how distortion increases with level. If distortion is from a single contributor, then (at levels well above background noise, anyway) the 2nd-harmonic level will tend to increase 2dB for each 1dB increase in drive, and the 3rd will tend toward 3dB per 1dB increase. If that behavior is seen, then the distortion is likely dominated by just the speaker (or by just the microphone if you're nearing its limits; you can move the mic away to reduce the SPL it sees and see if the distortion changes with its level).
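The level-step check can be simulated. The sketch below passes a sine through a hypothetical single-source nonlinearity with weak 2nd- and 3rd-order terms, steps the drive in 5dB increments, and fits a least-squares slope of each harmonic's level (in dB) against the drive level (in dB). The coefficients, the drive levels and the function names are arbitrary assumptions chosen for illustration.

```python
import math


def harmonic(f, amp, h, n=1024, cycles=4):
    """Peak amplitude of harmonic h of f(amp * sin(wt)), via a coherent single-bin DFT."""
    w = 2 * math.pi * cycles * h / n
    y = [f(amp * math.sin(2 * math.pi * cycles * i / n)) for i in range(n)]
    re = sum(v * math.cos(w * i) for i, v in enumerate(y))
    im = sum(v * math.sin(w * i) for i, v in enumerate(y))
    return 2 * math.hypot(re, im) / n


def slope_db_per_db(f, h, drives_db):
    """Least-squares slope of harmonic-h level (dB) versus drive level (dB)."""
    xs = list(drives_db)
    ys = [20 * math.log10(harmonic(f, 10 ** (d / 20), h)) for d in xs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def speaker(x):
    # Hypothetical single contributor with weak 2nd- and 3rd-order terms.
    return x + 0.01 * x**2 + 0.005 * x**3


drives = [-30, -25, -20, -15, -10]   # assumed drive steps, dB re. full scale
print(slope_db_per_db(speaker, 2, drives))  # close to 2 dB per dB
print(slope_db_per_db(speaker, 3, drives))  # close to 3 dB per dB
```

If a second contributor with a different law (for example, a mic nearing its limit) took over at the top steps, the measured slopes would bend away from these values, which is the signature the post suggests looking for.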

OmniMics, BTW, are individually calibrated for amplitude, not one-size-fits-all. Admittedly there were some bumps in the road along the way (but I think those are ironed out now).

Where do I find these settings?

For the microphone, plug it into a USB port, go to Control Panel and find the Sound settings for Recording devices.

For the software, right-click on the OmniMic icon and choose Properties, then the Compatibility settings.

I'm not on a Windows machine right now, but for more detailed instructions go to the DaytonAudio.com site and look under OmniMic:

http://www.daytonaudio.com/index.php/omnimic-v2-precision-measurement-system.html

Some folks have tried two speakers and one microphone, with a slightly different frequency in each speaker: the intermods are then due only to the mic, so there is an opportunity to separate stimulus from source.

Good point, I forgot about that technique. That lets you know how much distortion comes from the mic (you have to add the amplitudes of the two tones, not their dB values, to relate them to a single tone of similar level); then if the speaker's distortion (playing both tones together) is a reasonable bit more, you can assign it there. That does get tricky, though: you have to make sure both tones are playing through the same driver, to avoid the crossover "fixing" the distortion generated by the electromechanical department.
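Two small helpers illustrate the two-tone bookkeeping, assuming one tone per speaker. The first lists where 2nd- and 3rd-order intermodulation products should fall; with separate speakers, those bins can only be filled by a nonlinearity both tones pass through, such as the mic. The second combines the two tone levels by adding amplitudes rather than dB values. The function names and example frequencies are hypothetical.

```python
import math


def imd_products(f1, f2, max_order=3):
    """Intermodulation frequencies m*f1 + n*f2 (m, n both nonzero) up to max_order.

    Returns sorted (frequency, order) pairs; harmonics of each tone alone are
    excluded because one speaker playing one tone can create those by itself.
    """
    products = {}
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            order = abs(m) + abs(n)
            if m == 0 or n == 0 or order > max_order:
                continue
            f = m * f1 + n * f2
            if f > 0 and (f not in products or order < products[f]):
                products[f] = order
    return sorted(products.items())


def peak_equivalent_db(level1_db, level2_db):
    """Single-tone level whose peak matches two tones: add amplitudes, not dB."""
    return 20 * math.log10(10 ** (level1_db / 20) + 10 ** (level2_db / 20))


print(imd_products(40, 50))               # [(10, 2), (30, 3), (60, 3), (90, 2), (130, 3), (140, 3)]
print(round(peak_equivalent_db(100, 100), 2))  # 106.02
```

Two 100 dB tones therefore peak like a single tone at about 106 dB; adding them as powers would give about 103 dB and understate the peak the mic actually sees.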

Thank you, I'll double-check that tonight.
